- UnitBox An Advanced Object Detection Network,arxiv 16.08

- UnitBox An Advanced Object Detection Network,arxiv 16.08 (download)

该论文提出了一种新的loss function:IoU loss。这点比较有意思,也容易复现。

======

论文分析了faster-rcnn和densebox的优缺点:

1 faster-rcnn:rpn用来predict the bounding boxes of object candidates from anchors,但是这些anchors是事先定义好的(如3 scales & 3 aspect ratios),RPN shows difficult to handle the object candidates with large shape variations, especially for small objects. 也就是RPN不能很好cover所有的情况(以至于很多基于faster-rcnn的论文都在改善这点)

2 densebox:utilizes every pixel of the feature map to regress a 4-D distance vector (the distances between the current pixel and the four bounds of object candidate containing it). However, DenseBox optimizes the four-side distances as four independent variables, under the simplistic lL2 loss,;besides, to balance the bounding boxes with varied scales, DenseBox requires the training image patches to be resized to a fixed scale. As a consequence, DenseBox has to perform detection on image pyramids, which unavoidably affects the eciency of the framework.

=====

论文的做法:adopts a fully convolutional network architecture, to predict the object bounds as well as the pixel-wise classification scores on the feature maps directly. Particularly, UnitBox takes advantage of a novel Intersection over Union (IoU) loss function for bounding box prediction. The IoU loss directly enforces the maximal overlap between the predicted bounding box and the ground truth, and jointly regress all the bound variables as a whole unit The UnitBox demonstrates not only more accurate box prediction, but also faster training convergence. UnitBox is enabled with variable- scale training. It implies the capability to localize objects in arbitrary shapes and scales, and to perform more e cient testing by just one pass on singe scale.

=====

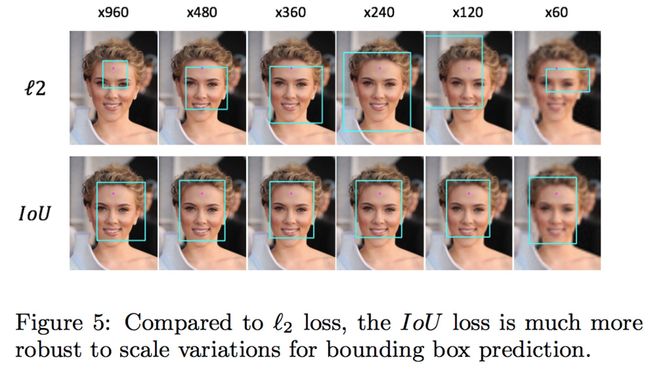

通常L2 loss会有两个缺点:

1 the coordinates of a bounding box are optimized as four independent variables。

This assumption violates the fact that the bounds of an object are highly correlated.

It results in a number of failure cases in which one or two bounds of a predicted box are very close to the ground truth,

but the entire bounding box is unacceptable.

2 if the L2 loss is unnormalized, given two pixels, one falls in a larger bounding box while the other falls in a smaller one,

the former will have a larger effiect on the penalty than the latter.

=====

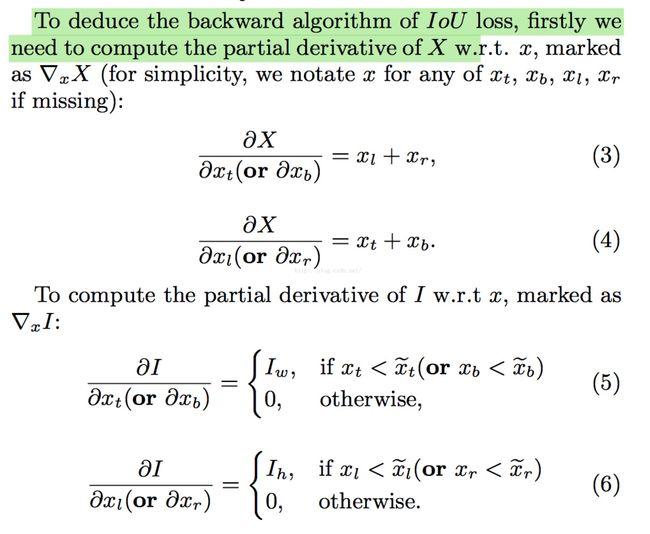

IoU loss(需要ReLU,确保four coordinates>=0)

最小化IoU loss,相当于最大化intersection area以及predicted box尽可能fit的ground truth。

=====

framework:

基于VGG16的modified network,为什么在conv4后面接cls-net,而在conv5后接det-net?

The reason is that to regress the bounding box as a unit,

the bounding box branch needs a larger receptive field than the confidence branch.

cls-net:sigmoid cross-entropy loss

det-net:IoU loss

训练时,可以joint-train,也可以seperate train。

测试时,to generate bounding boxes of faces, firstly we fit the faces by ellipses on the thresholded con- fidence heatmaps. Since the face ellipses are too coarse to localize objects, we further select the center pixels of these coarse face ellipses and extract the corresponding bounding boxes from these selected pixels.

=====

有趣的对比:

1 unitbox比L2收敛快,而且效果好

2 可以处理不同的尺度的物体(或者同一类物体)

=====

问题:

1 IoU loss需要对four coordinates进行归一化么?还是直接回归真实坐标?

2 训练测试时的输入大小是多少?

3 IoU loss适合图像只有很少物体的情况么,如<2?

4 IoU loos可以扩展多多类上么(论文里只有bg和face)?

5 测试时,speed的瓶颈是哪部分?

6 对于cls-net和det-net,可以多接几个层么?

7 在生成ground truth 的confidence map和coordinate maps时,怎么处理overlap的ground truths?

=====

如果这篇博文对你有帮助,可否赏笔者喝杯奶茶?

![]()