Scala---文件读取、写入、控制台操作

Scala文件读取

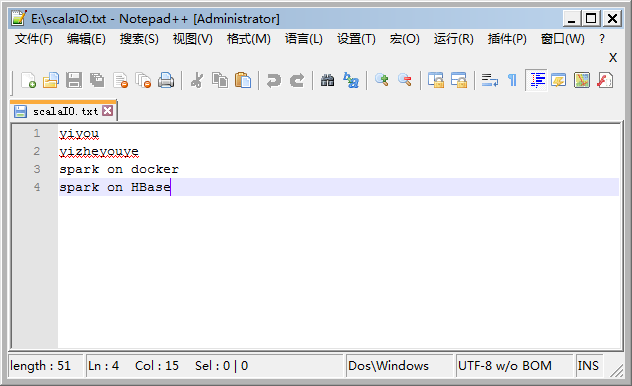

E盘根目录下scalaIO.txt文件内容如下:

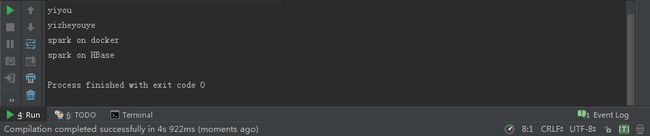

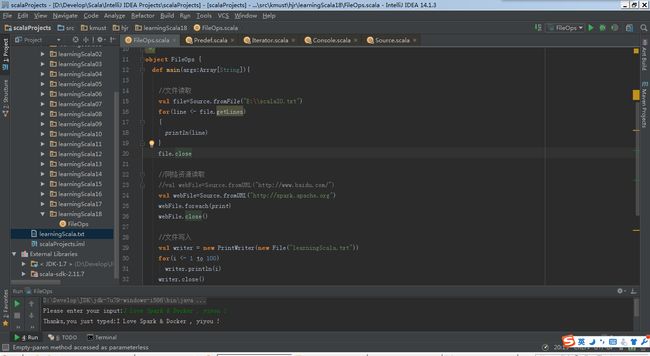

文件读取示例代码:

//文件读取

val file=Source.fromFile("E:\\scalaIO.txt")

for(line <- file.getLines)

{

println(line)

}

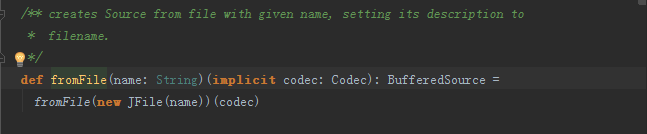

file.close- 说明1:file=Source.fromFile(“E:\scalaIO.txt”),其中Source中的fromFile()方法源自 import scala.io.Source源码包,源码如下图:

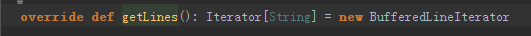

- file.getLines(),返回的是一个迭代器-Iterator;源码如下:(scala.io)

-

Scala 网络资源读取

//网络资源读取

val webFile=Source.fromURL("http://spark.apache.org")

webFile.foreach(print)

webFile.close()fromURL()方法源码如下:

/** same as fromURL(new URL(s))

*/

def fromURL(s: String)(implicit codec: Codec): BufferedSource =

fromURL(new URL(s))(codec)读取的网络资源资源内容如下:

<html lang="en">

<head>

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>

Apache Spark™ - Lightning-Fast Cluster Computing

title>

<meta name="description" content="Apache Spark is a fast and general engine for big data processing, with built-in modules for streaming, SQL, machine learning and graph processing.">

<link href="/css/cerulean.min.css" rel="stylesheet">

<link href="/css/custom.css" rel="stylesheet">

<script type="text/javascript">

var _gaq = _gaq || [];

_gaq.push(['_setAccount', 'UA-32518208-2']);

_gaq.push(['_trackPageview']);

(function() {

var ga = document.createElement('script'); ga.type = 'text/javascript'; ga.async = true;

ga.src = ('https:' == document.location.protocol ? 'https://ssl' : 'http://www') + '.google-analytics.com/ga.js';

var s = document.getElementsByTagName('script')[0]; s.parentNode.insertBefore(ga, s);

})();

function trackOutboundLink(link, category, action) {

try {

_gaq.push(['_trackEvent', category , action]);

} catch(err){}

setTimeout(function() {

document.location.href = link.href;

}, 100);

}

script>

head>

<body>

<script src="https://code.jquery.com/jquery.js">script>

<script src="//netdna.bootstrapcdn.com/bootstrap/3.0.3/js/bootstrap.min.js">script>

<script src="/js/lang-tabs.js">script>

<script src="/js/downloads.js">script>

<div class="container" style="max-width: 1200px;">

<div class="masthead">

<p class="lead">

<a href="/">

<img src="/images/spark-logo.png"

style="height:100px; width:auto; vertical-align: bottom; margin-top: 20px;">a><span class="tagline">

Lightning-fast cluster computing

span>

p>

div>

<nav class="navbar navbar-default" role="navigation">

<div class="navbar-header">

<button type="button" class="navbar-toggle" data-toggle="collapse"

data-target="#navbar-collapse-1">

<span class="sr-only">Toggle navigationspan>

<span class="icon-bar">span>

<span class="icon-bar">span>

<span class="icon-bar">span>

button>

div>

<div class="collapse navbar-collapse" id="navbar-collapse-1">

<ul class="nav navbar-nav">

<li><a href="/downloads.html">Downloada>li>

<li class="dropdown">

<a href="#" class="dropdown-toggle" data-toggle="dropdown">

Libraries <b class="caret">b>

a>

<ul class="dropdown-menu">

<li><a href="/sql/">SQL and DataFramesa>li>

<li><a href="/streaming/">Spark Streaminga>li>

<li><a href="/mllib/">MLlib (machine learning)a>li>

<li><a href="/graphx/">GraphX (graph)a>li>

<li class="divider">li>

<li><a href="http://spark-packages.org">Third-Party Packagesa>li>

ul>

li>

<li class="dropdown">

<a href="#" class="dropdown-toggle" data-toggle="dropdown">

Documentation <b class="caret">b>

a>

<ul class="dropdown-menu">

<li><a href="/docs/latest/">Latest Release (Spark 1.5.1)a>li>

<li><a href="/documentation.html">Other Resourcesa>li>

ul>

li>

<li><a href="/examples.html">Examplesa>li>

<li class="dropdown">

<a href="/community.html" class="dropdown-toggle" data-toggle="dropdown">

Community <b class="caret">b>

a>

<ul class="dropdown-menu">

<li><a href="/community.html">Mailing Listsa>li>

<li><a href="/community.html#events">Events and Meetupsa>li>

<li><a href="/community.html#history">Project Historya>li>

<li><a href="https://cwiki.apache.org/confluence/display/SPARK/Powered+By+Spark">Powered Bya>li>

<li><a href="https://cwiki.apache.org/confluence/display/SPARK/Committers">Project Committersa>li>

<li><a href="https://issues.apache.org/jira/browse/SPARK">Issue Trackera>li>

ul>

li>

<li><a href="/faq.html">FAQa>li>

ul>

div>

nav>

<div class="row">

<div class="col-md-3 col-md-push-9">

<div class="news" style="margin-bottom: 20px;">

<h5>Latest Newsh5>

<ul class="list-unstyled">

<li><a href="/news/submit-talks-to-spark-summit-east-2016.html">Submission is open for Spark Summit East 2016a>

<span class="small">(Oct 14, 2015)span>li>

<li><a href="/news/spark-1-5-1-released.html">Spark 1.5.1 releaseda>

<span class="small">(Oct 02, 2015)span>li>

<li><a href="/news/spark-1-5-0-released.html">Spark 1.5.0 releaseda>

<span class="small">(Sep 09, 2015)span>li>

<li><a href="/news/spark-summit-europe-agenda-posted.html">Spark Summit Europe agenda posteda>

<span class="small">(Sep 07, 2015)span>li>

ul>

<p class="small" style="text-align: right;"><a href="/news/index.html">Archivea>p>

div>

<div class="hidden-xs hidden-sm">

<a href="/downloads.html" class="btn btn-success btn-lg btn-block" style="margin-bottom: 30px;">

Download Spark

a>

<p style="font-size: 16px; font-weight: 500; color: #555;">

Built-in Libraries:

p>

<ul class="list-none">

<li><a href="/sql/">SQL and DataFramesa>li>

<li><a href="/streaming/">Spark Streaminga>li>

<li><a href="/mllib/">MLlib (machine learning)a>li>

<li><a href="/graphx/">GraphX (graph)a>li>

ul>

<a href="http://spark-packages.org">Third-Party Packagesa>

div>

div>

<div class="col-md-9 col-md-pull-3">

<div class="jumbotron">

<b>Apache Spark™b> is a fast and general engine for large-scale data processing.

div>

<div class="row row-padded">

<div class="col-md-7 col-sm-7">

<h2>Speedh2>

<p class="lead">

Run programs up to 100x faster than

Hadoop MapReduce in memory, or 10x faster on disk.

p>

<p>

Spark has an advanced DAG execution engine that supports cyclic data flow and

in-memory computing.

p>

div>

<div class="col-md-5 col-sm-5 col-padded-top col-center">

<div style="width: 100%; max-width: 272px; display: inline-block; text-align: center;">

<img src="/images/logistic-regression.png" style="width: 100%; max-width: 250px;" />

<div class="caption" style="min-width: 272px;">Logistic regression in Hadoop and Sparkdiv>

div>

div>

div>

<div class="row row-padded">

<div class="col-md-7 col-sm-7">

<h2>Ease of Useh2>

<p class="lead">

Write applications quickly in Java, Scala, Python, R.

p>

<p>

Spark offers over 80 high-level operators that make it easy to build parallel apps.

And you can use it <em>interactivelyem>

from the Scala, Python and R shells.

p>

div>

<div class="col-md-5 col-sm-5 col-padded-top col-center">

<div style="text-align: left; display: inline-block;">

<div class="code">

text_file = spark.textFile(<span class="string">"hdfs://..."span>)<br />

<br />

text_file.<span class="sparkop">flatMapspan>(<span class="closure">lambda line: line.split()span>)<br />

.<span class="sparkop">mapspan>(<span class="closure">lambda word: (word, 1)span>)<br />

.<span class="sparkop">reduceByKeyspan>(<span class="closure">lambda a, b: a+bspan>)

div>

<div class="caption">Word count in Spark's Python APIdiv>

div>

div>

div>

<div class="row row-padded">

<div class="col-md-7 col-sm-7">

<h2>Generalityh2>

<p class="lead">

Combine SQL, streaming, and complex analytics.

p>

<p>

Spark powers a stack of libraries including

<a href="/sql/">SQL and DataFramesa>, <a href="/mllib/">MLliba> for machine learning,

<a href="/graphx/">GraphXa>, and <a href="/streaming/">Spark Streaminga>.

You can combine these libraries seamlessly in the same application.

p>

div>

<div class="col-md-5 col-sm-5 col-padded-top col-center">

<img src="/images/spark-stack.png" style="margin-top: 15px; width: 100%; max-width: 296px;" usemap="#stack-map" />

<map name="stack-map">

<area shape="rect" coords="0,0,74,95" href="/sql/" alt="Spark SQL" title="Spark SQL" />

<area shape="rect" coords="74,0,150,95" href="/streaming/" alt="Spark Streaming" title="Spark Streaming" />

<area shape="rect" coords="150,0,224,95" href="/mllib/" alt="MLlib (machine learning)" title="MLlib" />

<area shape="rect" coords="225,0,300,95" href="/graphx/" alt="GraphX" title="GraphX" />

map>

div>

div>

<div class="row row-padded" style="margin-bottom: 15px;">

<div class="col-md-7 col-sm-7">

<h2>Runs Everywhereh2>

<p class="lead">

Spark runs on Hadoop, Mesos, standalone, or in the cloud. It can access diverse data sources including HDFS, Cassandra, HBase, and S3.

p>

<p>

You can run Spark using its <a href="/docs/latest/spark-standalone.html">standalone cluster modea>, on <a href="/docs/latest/ec2-scripts.html">EC2a>, on Hadoop YARN, or on <a href="http://mesos.apache.org">Apache Mesosa>.

Access data in <a href="http://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-hdfs/HdfsUserGuide.html">HDFSa>, <a href="http://cassandra.apache.org">Cassandraa>, <a href="http://hbase.apache.org">HBasea>,

<a href="http://hive.apache.org">Hivea>, <a href="http://tachyon-project.org">Tachyona>, and any Hadoop data source.

p>

div>

<div class="col-md-5 col-sm-5 col-padded-top col-center">

<img src="/images/spark-runs-everywhere.png" style="width: 100%; max-width: 280px;" />

div>

div>

div>

div>

<div class="row">

<div class="col-md-4 col-padded">

<h3>Communityh3>

<p>

Spark is used at a wide range of organizations to process large datasets.

You can find example use cases at the <a href="http://spark-summit.org/summit-2013/">Spark Summita>

conference, or on the

<a href="https://cwiki.apache.org/confluence/display/SPARK/Powered+By+Spark">Powered Bya>

page.

p>

<p>

There are many ways to reach the community:

p>

<ul class="list-narrow">

<li>Use the <a href="/community.html#mailing-lists">mailing listsa> to ask questions.li>

<li>In-person events include the <a href="http://www.meetup.com/spark-users/">Bay Area Spark meetupa> and

<a href="http://spark-summit.org/">Spark Summita>.li>

<li>We use <a href="https://issues.apache.org/jira/browse/SPARK">JIRAa> for issue tracking.li>

ul>

div>

<div class="col-md-4 col-padded">

<h3>Contributorsh3>

<p>

Apache Spark is built by a wide set of developers from over 200 companies.

Since 2009, more than 800 developers have contributed to Spark!

p>

<p>

The project's

<a href="https://cwiki.apache.org/confluence/display/SPARK/Committers">committersa>

come from 16 organizations.

p>

<p>

If you'd like to participate in Spark, or contribute to the libraries on top of it, learn

<a href="https://cwiki.apache.org/confluence/display/SPARK/Contributing+to+Spark">how to

contributea>.

p>

div>

<div class="col-md-4 col-padded">

<h3>Getting Startedh3>

<p>Learning Spark is easy whether you come from a Java or Python background:p>

<ul class="list-narrow">

<li><a href="/downloads.html">Downloada> the latest release — you can run Spark locally on your laptop.li>

<li>Read the <a href="/docs/latest/quick-start.html">quick start guidea>.li>

<li>

Spark Summit 2014 contained free <a href="http://spark-summit.org/2014/training">training videos and exercisesa>.

li>

<li>Learn how to <a href="/docs/latest/#launching-on-a-cluster">deploya> Spark on a cluster.li>

ul>

div>

div>

<div class="row">

<div class="col-sm-12 col-center">

<a href="/downloads.html" class="btn btn-success btn-lg" style="width: 262px;">Download Sparka>

div>

div>

<footer class="small">

<hr>

Apache Spark, Spark, Apache, and the Spark logo are trademarks of

<a href="http://www.apache.org">The Apache Software Foundationa>.

footer>

div>

body>

html>

Process finished with exit code 0

//网络资源读取

val webFile=Source.fromURL("http://www.baidu.com/")

webFile.foreach(print)

webFile.close()读取中文资源站点,出现编码混乱问题如下:(解决办法自行解决,本文不是重点)

Exception in thread "main" java.nio.charset.MalformedInputException: Input length = 1

at java.nio.charset.CoderResult.throwException(CoderResult.java:277)

at sun.nio.cs.StreamDecoder.implRead(StreamDecoder.java:338)

at sun.nio.cs.StreamDecoder.read(StreamDecoder.java:177)

at java.io.InputStreamReader.read(InputStreamReader.java:184)

at java.io.BufferedReader.fill(BufferedReader.java:154)

at java.io.BufferedReader.read(BufferedReader.java:175)

at scala.io.BufferedSource$$anonfun$iter$1$$anonfun$apply$mcI$sp$1.apply$mcI$sp(BufferedSource.scala:40)

at scala.io.Codec.wrap(Codec.scala:69)

at scala.io.BufferedSource$$anonfun$iter$1.apply(BufferedSource.scala:40)

at scala.io.BufferedSource$$anonfun$iter$1.apply(BufferedSource.scala:40)

at scala.collection.Iterator$$anon$9.next(Iterator.scala:162)

at scala.collection.Iterator$$anon$16.hasNext(Iterator.scala:536)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:369)

at scala.io.Source.hasNext(Source.scala:238)

at scala.collection.Iterator$class.foreach(Iterator.scala:742)

at scala.io.Source.foreach(Source.scala:190)

at kmust.hjr.learningScala18.FileOps$.main(FileOps.scala:24)

at kmust.hjr.learningScala18.FileOps.main(FileOps.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at com.intellij.rt.execution.application.AppMain.main(AppMain.java:140)

Process finished with exit code 1Scala 文件写入

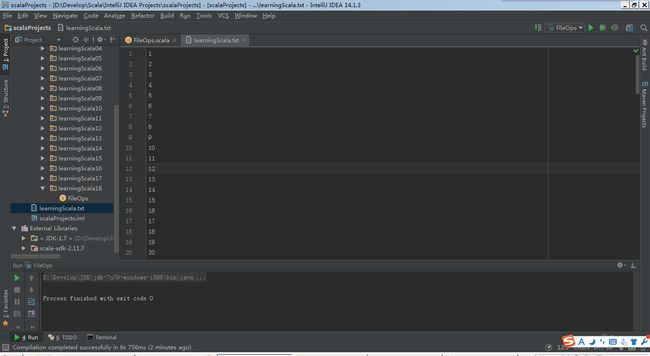

//文件写入

val writer = new PrintWriter(new File("learningScala.txt"))

for(i <- 1 to 100)

writer.println(i)

writer.close()报错:

Error:(28, 38) not found: type File

val writer = new PrintWriter(new File("learningScala.txt"))

^- 解决方法:

添加Java包:

import java.io.PrintWriter

import java.io.File /**

* Creates a new PrintWriter, without automatic line flushing, with the

* specified file. This convenience constructor creates the necessary

* intermediate {@link java.io.OutputStreamWriter OutputStreamWriter},

* which will encode characters using the {@linkplain

* java.nio.charset.Charset#defaultCharset() default charset} for this

* instance of the Java virtual machine.

*

* @param file

* The file to use as the destination of this writer. If the file

* exists then it will be truncated to zero size; otherwise, a new

* file will be created. The output will be written to the file

* and is buffered.

*

* @throws FileNotFoundException

* If the given file object does not denote an existing, writable

* regular file and a new regular file of that name cannot be

* created, or if some other error occurs while opening or

* creating the file

*

* @throws SecurityException

* If a security manager is present and {@link

* SecurityManager#checkWrite checkWrite(file.getPath())}

* denies write access to the file

*

* @since 1.5

*/

public PrintWriter(File file) throws FileNotFoundException {

this(new BufferedWriter(new OutputStreamWriter(new FileOutputStream(file))),

false);

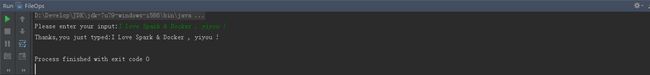

}控制台操作

//控制台交互

print("Please enter your input:")

val line=Console.readLine()

println("Thanks,you just typed:"+line)- 附录:Console - package scala

/* __ *\

** ________ ___ / / ___ Scala API **

** / __/ __// _ | / / / _ | (c) 2003-2013, LAMP/EPFL **

** __\ \/ /__/ __ |/ /__/ __ | http://scala-lang.org/ **

** /____/\___/_/ |_/____/_/ | | **

** |/ **

\* */

package scala

import java.io.{ BufferedReader, InputStream, InputStreamReader, OutputStream, PrintStream, Reader }

import scala.io.{ AnsiColor, StdIn }

import scala.util.DynamicVariable

/** Implements functionality for

* printing Scala values on the terminal as well as reading specific values.

* Also defines constants for marking up text on ANSI terminals.

*

* @author Matthias Zenger

* @version 1.0, 03/09/2003

*/

object Console extends DeprecatedConsole with AnsiColor {

private val outVar = new DynamicVariable[PrintStream](java.lang.System.out)

private val errVar = new DynamicVariable[PrintStream](java.lang.System.err)

private val inVar = new DynamicVariable[BufferedReader](

new BufferedReader(new InputStreamReader(java.lang.System.in)))

protected def setOutDirect(out: PrintStream): Unit = outVar.value = out

protected def setErrDirect(err: PrintStream): Unit = errVar.value = err

protected def setInDirect(in: BufferedReader): Unit = inVar.value = in

/** The default output, can be overridden by `setOut` */

def out = outVar.value

/** The default error, can be overridden by `setErr` */

def err = errVar.value

/** The default input, can be overridden by `setIn` */

def in = inVar.value

/** Sets the default output stream for the duration

* of execution of one thunk.

*

* @example {{{

* withOut(Console.err) { println("This goes to default _error_") }

* }}}

*

* @param out the new output stream.

* @param thunk the code to execute with

* the new output stream active

* @return the results of `thunk`

* @see `withOut[T](out:OutputStream)(thunk: => T)`

*/

def withOut[T](out: PrintStream)(thunk: =>T): T =

outVar.withValue(out)(thunk)

/** Sets the default output stream for the duration

* of execution of one thunk.

*

* @param out the new output stream.

* @param thunk the code to execute with

* the new output stream active

* @return the results of `thunk`

* @see `withOut[T](out:PrintStream)(thunk: => T)`

*/

def withOut[T](out: OutputStream)(thunk: =>T): T =

withOut(new PrintStream(out))(thunk)

/** Set the default error stream for the duration

* of execution of one thunk.

* @example {{{

* withErr(Console.out) { println("This goes to default _out_") }

* }}}

*

* @param err the new error stream.

* @param thunk the code to execute with

* the new error stream active

* @return the results of `thunk`

* @see `withErr[T](err:OutputStream)(thunk: =>T)`

*/

def withErr[T](err: PrintStream)(thunk: =>T): T =

errVar.withValue(err)(thunk)

/** Sets the default error stream for the duration

* of execution of one thunk.

*

* @param err the new error stream.

* @param thunk the code to execute with

* the new error stream active

* @return the results of `thunk`

* @see `withErr[T](err:PrintStream)(thunk: =>T)`

*/

def withErr[T](err: OutputStream)(thunk: =>T): T =

withErr(new PrintStream(err))(thunk)

/** Sets the default input stream for the duration

* of execution of one thunk.

*

* @example {{{

* val someFile:Reader = openFile("file.txt")

* withIn(someFile) {

* // Reads a line from file.txt instead of default input

* println(readLine)

* }

* }}}

*

* @param thunk the code to execute with

* the new input stream active

*

* @return the results of `thunk`

* @see `withIn[T](in:InputStream)(thunk: =>T)`

*/

def withIn[T](reader: Reader)(thunk: =>T): T =

inVar.withValue(new BufferedReader(reader))(thunk)

/** Sets the default input stream for the duration

* of execution of one thunk.

*

* @param in the new input stream.

* @param thunk the code to execute with

* the new input stream active

* @return the results of `thunk`

* @see `withIn[T](reader:Reader)(thunk: =>T)`

*/

def withIn[T](in: InputStream)(thunk: =>T): T =

withIn(new InputStreamReader(in))(thunk)

/** Prints an object to `out` using its `toString` method.

*

* @param obj the object to print; may be null.

*/

def print(obj: Any) {

out.print(if (null == obj) "null" else obj.toString())

}

/** Flushes the output stream. This function is required when partial

* output (i.e. output not terminated by a newline character) has

* to be made visible on the terminal.

*/

def flush() { out.flush() }

/** Prints a newline character on the default output.

*/

def println() { out.println() }

/** Prints out an object to the default output, followed by a newline character.

*

* @param x the object to print.

*/

def println(x: Any) { out.println(x) }

/** Prints its arguments as a formatted string to the default output,

* based on a string pattern (in a fashion similar to printf in C).

*

* The interpretation of the formatting patterns is described in

*

* `java.util.Formatter`.

*

* @param text the pattern for formatting the arguments.

* @param args the arguments used to instantiating the pattern.

* @throws java.lang.IllegalArgumentException if there was a problem with the format string or arguments

*/

def printf(text: String, args: Any*) { out.print(text format (args : _*)) }

}

private[scala] abstract class DeprecatedConsole {

self: Console.type =>

/** Internal usage only. */

protected def setOutDirect(out: PrintStream): Unit

protected def setErrDirect(err: PrintStream): Unit

protected def setInDirect(in: BufferedReader): Unit

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readBoolean(): Boolean = StdIn.readBoolean()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readByte(): Byte = StdIn.readByte()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readChar(): Char = StdIn.readChar()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readDouble(): Double = StdIn.readDouble()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readFloat(): Float = StdIn.readFloat()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readInt(): Int = StdIn.readInt()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readLine(): String = StdIn.readLine()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readLine(text: String, args: Any*): String = StdIn.readLine(text, args: _*)

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readLong(): Long = StdIn.readLong()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readShort(): Short = StdIn.readShort()

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readf(format: String): List[Any] = StdIn.readf(format)

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readf1(format: String): Any = StdIn.readf1(format)

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readf2(format: String): (Any, Any) = StdIn.readf2(format)

@deprecated("Use the method in scala.io.StdIn", "2.11.0") def readf3(format: String): (Any, Any, Any) = StdIn.readf3(format)

/** Sets the default output stream.

*

* @param out the new output stream.

*/

@deprecated("Use withOut", "2.11.0") def setOut(out: PrintStream): Unit = setOutDirect(out)

/** Sets the default output stream.

*

* @param out the new output stream.

*/

@deprecated("Use withOut", "2.11.0") def setOut(out: OutputStream): Unit = setOutDirect(new PrintStream(out))

/** Sets the default error stream.

*

* @param err the new error stream.

*/

@deprecated("Use withErr", "2.11.0") def setErr(err: PrintStream): Unit = setErrDirect(err)

/** Sets the default error stream.

*

* @param err the new error stream.

*/

@deprecated("Use withErr", "2.11.0") def setErr(err: OutputStream): Unit = setErrDirect(new PrintStream(err))

/** Sets the default input stream.

*

* @param reader specifies the new input stream.

*/

@deprecated("Use withIn", "2.11.0") def setIn(reader: Reader): Unit = setInDirect(new BufferedReader(reader))

/** Sets the default input stream.

*

* @param in the new input stream.

*/

@deprecated("Use withIn", "2.11.0") def setIn(in: InputStream): Unit = setInDirect(new BufferedReader(new InputStreamReader(in)))

}

附录一:本节所有程序源码

package kmust.hjr.learningScala18

import java.io.PrintWriter

import java.io.File

import scala.io.Source

/**

* Created by Administrator on 2015/10/16.

*/

object FileOps {

def main(args:Array[String]){

//文件读取

val file=Source.fromFile("E:\\scalaIO.txt")

for(line <- file.getLines)

{

println(line)

}

file.close

//网络资源读取

//val webFile=Source.fromURL("http://www.baidu.com/")

val webFile=Source.fromURL("http://spark.apache.org")

webFile.foreach(print)

webFile.close()

//文件写入

val writer = new PrintWriter(new File("learningScala.txt"))

for(i <- 1 to 100)

writer.println(i)

writer.close()

//控制台交互

print("Please enter your input:")

val line=Console.readLine()

println("Thanks,you just typed:"+line)

}

}

附录二: Source.scala源码

/* __ *\

** ________ ___ / / ___ Scala API **

** / __/ __// _ | / / / _ | (c) 2003-2013, LAMP/EPFL **

** __\ \/ /__/ __ |/ /__/ __ | http://scala-lang.org/ **

** /____/\___/_/ |_/____/_/ | | **

** |/ **

\* */

package scala

package io

import scala.collection.AbstractIterator

import java.io.{ FileInputStream, InputStream, PrintStream, File => JFile }

import java.net.{ URI, URL }

/** This object provides convenience methods to create an iterable

* representation of a source file.

*

* @author Burak Emir, Paul Phillips

* @version 1.0, 19/08/2004

*/

object Source {

val DefaultBufSize = 2048

/** Creates a `Source` from System.in.

*/

def stdin = fromInputStream(System.in)

/** Creates a Source from an Iterable.

*

* @param iterable the Iterable

* @return the Source

*/

def fromIterable(iterable: Iterable[Char]): Source = new Source {

val iter = iterable.iterator

} withReset(() => fromIterable(iterable))

/** Creates a Source instance from a single character.

*/

def fromChar(c: Char): Source = fromIterable(Array(c))

/** creates Source from array of characters, with empty description.

*/

def fromChars(chars: Array[Char]): Source = fromIterable(chars)

/** creates Source from a String, with no description.

*/

def fromString(s: String): Source = fromIterable(s)

/** creates Source from file with given name, setting its description to

* filename.

*/

def fromFile(name: String)(implicit codec: Codec): BufferedSource =

fromFile(new JFile(name))(codec)

/** creates Source from file with given name, using given encoding, setting

* its description to filename.

*/

def fromFile(name: String, enc: String): BufferedSource =

fromFile(name)(Codec(enc))

/** creates `ource` from file with given file `URI`.

*/

def fromFile(uri: URI)(implicit codec: Codec): BufferedSource =

fromFile(new JFile(uri))(codec)

/** creates Source from file with given file: URI

*/

def fromFile(uri: URI, enc: String): BufferedSource =

fromFile(uri)(Codec(enc))

/** creates Source from file, using default character encoding, setting its

* description to filename.

*/

def fromFile(file: JFile)(implicit codec: Codec): BufferedSource =

fromFile(file, Source.DefaultBufSize)(codec)

/** same as fromFile(file, enc, Source.DefaultBufSize)

*/

def fromFile(file: JFile, enc: String): BufferedSource =

fromFile(file)(Codec(enc))

def fromFile(file: JFile, enc: String, bufferSize: Int): BufferedSource =

fromFile(file, bufferSize)(Codec(enc))

/** Creates Source from `file`, using given character encoding, setting

* its description to filename. Input is buffered in a buffer of size

* `bufferSize`.

*/

def fromFile(file: JFile, bufferSize: Int)(implicit codec: Codec): BufferedSource = {

val inputStream = new FileInputStream(file)

createBufferedSource(

inputStream,

bufferSize,

() => fromFile(file, bufferSize)(codec),

() => inputStream.close()

)(codec) withDescription ("file:" + file.getAbsolutePath)

}

/** Create a `Source` from array of bytes, decoding

* the bytes according to codec.

*

* @return the created `Source` instance.

*/

def fromBytes(bytes: Array[Byte])(implicit codec: Codec): Source =

fromString(new String(bytes, codec.name))

def fromBytes(bytes: Array[Byte], enc: String): Source =

fromBytes(bytes)(Codec(enc))

/** Create a `Source` from array of bytes, assuming

* one byte per character (ISO-8859-1 encoding.)

*/

def fromRawBytes(bytes: Array[Byte]): Source =

fromString(new String(bytes, Codec.ISO8859.name))

/** creates `Source` from file with given file: URI

*/

def fromURI(uri: URI)(implicit codec: Codec): BufferedSource =

fromFile(new JFile(uri))(codec)

/** same as fromURL(new URL(s))(Codec(enc))

*/

def fromURL(s: String, enc: String): BufferedSource =

fromURL(s)(Codec(enc))

/** same as fromURL(new URL(s))

*/

def fromURL(s: String)(implicit codec: Codec): BufferedSource =

fromURL(new URL(s))(codec)

/** same as fromInputStream(url.openStream())(Codec(enc))

*/

def fromURL(url: URL, enc: String): BufferedSource =

fromURL(url)(Codec(enc))

/** same as fromInputStream(url.openStream())(codec)

*/

def fromURL(url: URL)(implicit codec: Codec): BufferedSource =

fromInputStream(url.openStream())(codec)

/** Reads data from inputStream with a buffered reader, using the encoding

* in implicit parameter codec.

*

* @param inputStream the input stream from which to read

* @param bufferSize buffer size (defaults to Source.DefaultBufSize)

* @param reset a () => Source which resets the stream (if unset, reset() will throw an Exception)

* @param close a () => Unit method which closes the stream (if unset, close() will do nothing)

* @param codec (implicit) a scala.io.Codec specifying behavior (defaults to Codec.default)

* @return the buffered source

*/

def createBufferedSource(

inputStream: InputStream,

bufferSize: Int = DefaultBufSize,

reset: () => Source = null,

close: () => Unit = null

)(implicit codec: Codec): BufferedSource = {

// workaround for default arguments being unable to refer to other parameters

val resetFn = if (reset == null) () => createBufferedSource(inputStream, bufferSize, reset, close)(codec) else reset

new BufferedSource(inputStream, bufferSize)(codec) withReset resetFn withClose close

}

def fromInputStream(is: InputStream, enc: String): BufferedSource =

fromInputStream(is)(Codec(enc))

def fromInputStream(is: InputStream)(implicit codec: Codec): BufferedSource =

createBufferedSource(is, reset = () => fromInputStream(is)(codec), close = () => is.close())(codec)

}

/** An iterable representation of source data.

* It may be reset with the optional `reset` method.

*

* Subclasses must supply [[scala.io.Source@iter the underlying iterator]].

*

* Error handling may be customized by overriding the [[scala.io.Source@report report]] method.

*

* The [[scala.io.Source@ch current input]] and [[scala.io.Source@pos position]],

* as well as the [[scala.io.Source@next next character]] methods delegate to

* [[scala.io.Source$Positioner the positioner]].

*

* The default positioner encodes line and column numbers in the position passed to `report`.

* This behavior can be changed by supplying a

* [[scala.io.Source@withPositioning(pos:Source.this.Positioner):Source.this.type custom positioner]].

*

* @author Burak Emir

* @version 1.0

*/

abstract class Source extends Iterator[Char] {

/** the actual iterator */

protected val iter: Iterator[Char]

// ------ public values

/** description of this source, default empty */

var descr: String = ""

var nerrors = 0

var nwarnings = 0

private def lineNum(line: Int): String = (getLines() drop (line - 1) take 1).mkString

class LineIterator extends AbstractIterator[String] with Iterator[String] {

private[this] val sb = new StringBuilder

lazy val iter: BufferedIterator[Char] = Source.this.iter.buffered

def isNewline(ch: Char) = ch == '\r' || ch == '\n'

def getc() = iter.hasNext && {

val ch = iter.next()

if (ch == '\n') false

else if (ch == '\r') {

if (iter.hasNext && iter.head == '\n')

iter.next()

false

}

else {

sb append ch

true

}

}

def hasNext = iter.hasNext

def next = {

sb.clear()

while (getc()) { }

sb.toString

}

}

/** Returns an iterator who returns lines (NOT including newline character(s)).

* It will treat any of \r\n, \r, or \n as a line separator (longest match) - if

* you need more refined behavior you can subclass Source#LineIterator directly.

*/

def getLines(): Iterator[String] = new LineIterator()

/** Returns `'''true'''` if this source has more characters.

*/

def hasNext = iter.hasNext

/** Returns next character.

*/

def next(): Char = positioner.next()

class Positioner(encoder: Position) {

def this() = this(RelaxedPosition)

/** the last character returned by next. */

var ch: Char = _

/** position of last character returned by next */

var pos = 0

/** current line and column */

var cline = 1

var ccol = 1

/** default col increment for tabs '\t', set to 4 initially */

var tabinc = 4

def next(): Char = {

ch = iter.next()

pos = encoder.encode(cline, ccol)

ch match {

case '\n' =>

ccol = 1

cline += 1

case '\t' =>

ccol += tabinc

case _ =>

ccol += 1

}

ch

}

}

/** A Position implementation which ignores errors in

* the positions.

*/

object RelaxedPosition extends Position {

def checkInput(line: Int, column: Int): Unit = ()

}

object RelaxedPositioner extends Positioner(RelaxedPosition) { }

object NoPositioner extends Positioner(Position) {

override def next(): Char = iter.next()

}

def ch = positioner.ch

def pos = positioner.pos

/** Reports an error message to the output stream `out`.

*

* @param pos the source position (line/column)

* @param msg the error message to report

* @param out PrintStream to use (optional: defaults to `Console.err`)

*/

def reportError(

pos: Int,

msg: String,

out: PrintStream = Console.err)

{

nerrors += 1

report(pos, msg, out)

}

private def spaces(n: Int) = List.fill(n)(' ').mkString

/**

* @param pos the source position (line/column)

* @param msg the error message to report

* @param out PrintStream to use

*/

def report(pos: Int, msg: String, out: PrintStream) {

val line = Position line pos

val col = Position column pos

out println "%s:%d:%d: %s%s%s^".format(descr, line, col, msg, lineNum(line), spaces(col - 1))

}

/**

* @param pos the source position (line/column)

* @param msg the warning message to report

* @param out PrintStream to use (optional: defaults to `Console.out`)

*/

def reportWarning(

pos: Int,

msg: String,

out: PrintStream = Console.out)

{

nwarnings += 1

report(pos, "warning! " + msg, out)

}

private[this] var resetFunction: () => Source = null

private[this] var closeFunction: () => Unit = null

private[this] var positioner: Positioner = RelaxedPositioner

def withReset(f: () => Source): this.type = {

resetFunction = f

this

}

def withClose(f: () => Unit): this.type = {

closeFunction = f

this

}

def withDescription(text: String): this.type = {

descr = text

this

}

/** Change or disable the positioner. */

def withPositioning(on: Boolean): this.type = {

positioner = if (on) RelaxedPositioner else NoPositioner

this

}

def withPositioning(pos: Positioner): this.type = {

positioner = pos

this

}

/** The close() method closes the underlying resource. */

def close() {

if (closeFunction != null) closeFunction()

}

/** The reset() method creates a fresh copy of this Source. */

def reset(): Source =

if (resetFunction != null) resetFunction()

else throw new UnsupportedOperationException("Source's reset() method was not set.")

}