Hadoop3.0.3 + hive3.0.0填一个大坑

Hadoop3.0.3 + hive3.0.0填一个大坑

- Hadoop3.0.3 + hive3.0.0填一个大坑

- 1.问题

- 2. 配置过程

- 2.1. 解压文件配置环境变量

- 2.2. 配置hive-env.sh

- 2.3. 配置hive-site.xml

- 2.4. 初始化hive

- 3. 总结

- 坑一:Schema initialization FAILED

- 坑二:Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

1.问题

因为需要熟悉新版本的功能,所以部署了Hadoop3.0.3。看了下hive的配套说明:

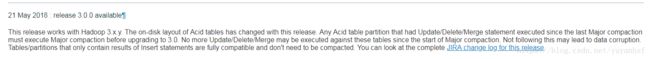

This release works with Hadoop 3.x.y. 所以选择hive3.0.0进行安装。但很无奈hive的文档真的太不详细了,按其一步步进行安装报了两个错,困扰了很久,现总结一下配置过程。前置条件为hadoop3.0.3正常运行,mysql服务配置完全

2. 配置过程

2.1. 解压文件配置环境变量

- 解压文件

$ tar -xzvf hive-x.y.z.tar.gz- 配置环境变量

export HIVE_HOME={{pwd}}

export PATH=$HIVE_HOME/bin:$PATH- 将mysql的JDBC包放到hive的lib文件夹中。注意是.jar文件

2.2. 配置hive-env.sh

HADOOP_HOME=/opt/modules/hadoop-3.0.3

export HIVE_CONF_DIR=/opt/modules/hive-3.0.0-bin2.3. 配置hive-site.xml

<configuration>

<property>

<name>hive.metastore.schema.verificationname>

<value>falsevalue>

property>

<property>

<name>hive.metastore.warehouse.dirname>

<value>/user/hive/warehousevalue>

property>

<property>

<name>hive.exec.mode.local.autoname>

<value>falsevalue>

<description> Let Hive determine whether to run in local mode automatically <

/description>

property>

<property>

<name>hive.metastore.urisname>

<value>thrift://spark.bigdata.com:9083value>

<description>Thrift URI for the remote metastore. Used by metastore client to

connect to remote metastore.description>

property>

<property>

<name>javax.jdo.option.ConnectionURLname>

<value>jdbc:mysql://spark.bigdata.com:3306/hive?createDatabaseIfNotExist=true

value>

property>

<property>

<name>javax.jdo.option.ConnectionDriverNamename>

<value>com.mysql.jdbc.Drivervalue>

property>

<property>

<name>javax.jdo.option.ConnectionUserNamename>

<value>hivevalue>

property>

<property>

<name>javax.jdo.option.ConnectionPasswordname>

<value>hivemysqlvalue>

property>

<property>

<name>hive.cli.print.headername>

<value>truevalue>

property>

<property>

<name>hive.cli.print.current.dbname>

<value>truevalue>

property>

configuration>2.4. 初始化hive

- 使用schematool初始化

schematool -dbType mysql -initSchema此处会出现第一个问题:直接修改hive-default.xml,配置未生效,可以看下面的LOG,我已经将hive-default.xml中的hiveuser改为了hive,URL,Driver也做了相应的修改,可是执行schematool -dbType mysql -initSchema进行初始化的时候,还是使用了默认的URL,Driver和user APP。所以此处需要将hive-default.xml改为hive-site.xml,执行初始化成功,但在hive官网上没有明确说明(或者是我没理解透)。

[hadoop@spark conf]$ schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/modules/hive-3.0.0-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/modules/hadoop-3.0.3/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 3.0.0

Initialization script hive-schema-3.0.0.mysql.sql

Error: Syntax error: Encountered "" at line 1, column 64. (state=42X01,code=30000)

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

Use --verbose for detailed stacktrace.

*** schemaTool failed ***- (可选)在mysql中进行metastore初始化

这一步不太确定是否一定需要,可以先跳过,后面发现有问题的话再执行这一句。

mysql> source /opt/modules/hive-3.0.0-bin/scripts/metastore/upgrade/mysql/hive-schema-3.0.0.mysql.sql- 启动hive的metastore服务

这一步在官网上完全没有找到,但是没有这一步的话就会一直报错FAILED: HiveException java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient。

[hadoop@spark bin]$ ./hive --service metastore &- 最后进入hive,执行show databases;测试

hive (default)> show databases;

OK

database_name

default

Time taken: 3.157 seconds, Fetched: 1 row(s)3. 总结

- 和以前版本相比,从hive2.1开始需要使用schematool 进行初始化操作。Starting from Hive 2.1, we need to run the schematool command below as an initialization step. For example, we can use “derby” as db type.

- 配置文件目录也有点不太一样,hive-site.xml文件需要自己创建,当然也可以从hive-default.xml复制。

- 需要启动hive的metastore 服务,不启动无法关联到mysql的metastore

- mysql中的数据库名更改为了hive,以前是metastore

坑一:Schema initialization FAILED

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

Use --verbose for detailed stacktrace.

*** schemaTool failed ***需要在hive-site.xml配置好mysql访问用户名和密码,在hive-default.xml配置未生效。

坑二:Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

hive (default)> show databases;

FAILED: HiveException java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClienthive.metastore.schema.verification参数设置需要为false,且在schemaTool初始化成功后,启动hive的metastore服务: ./hive –service metastore &

(文档结束)

Author:@Fighter10

Shenzhen,2018-07-11 13:55