Building a Hadoop Cluster on Alibaba Cloud Servers

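- 1. Environment

- 2. Editing the hosts and hostname files

- 3. SSH mutual trust

- 4. Installing Java (only on the master; copy it to the slave after configuring)

- 5. Installing and configuring Hadoop

- 6. Pitfalls on Alibaba Cloud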

1. Environment

Servers: one Alibaba Cloud server (master) and one Tencent Cloud server (slave)

Operating system: CentOS 7

Hadoop: hadoop-2.7.7.tar.gz

Java: jdk-8u172-linux-x64.tar.gz

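2. Editing the hosts and hostname files

2.1 Edit the hosts file (/etc/hosts)

Comment out all existing entries.

Each machine maps its own hostname to its private (internal) IP and the other machine's hostname to that machine's public IP.

On the Alibaba Cloud machine (the master), where ip = Alibaba's private IP and ip1 = Tencent's public IP:

ip master

ip1 slave

On the Tencent Cloud machine, where ip = Alibaba's public IP and ip1 = Tencent's private IP:

ip master

ip1 slave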
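The private/public rule above is easy to get backwards, so here is a minimal sketch that prints the two entries for each machine. The function name render_hosts and all four addresses are placeholders invented for illustration; substitute your own IPs. It uses the hostname slave, as the rest of this guide does.

```shell
#!/usr/bin/env bash
# Print the two /etc/hosts lines for one machine.
# Arguments: the IP this machine should use for "master", then for "slave".
render_hosts() {
  local master_ip="$1" slave_ip="$2"
  printf '%s master\n%s slave\n' "$master_ip" "$slave_ip"
}

# Placeholder addresses only, not real servers.
ALI_PRIVATE=10.0.0.10
ALI_PUBLIC=203.0.113.10
TX_PRIVATE=10.0.1.20
TX_PUBLIC=198.51.100.20

echo "# on the Alibaba Cloud machine (master): own private IP, peer's public IP"
render_hosts "$ALI_PRIVATE" "$TX_PUBLIC"

echo "# on the Tencent Cloud machine (slave): peer's public IP, own private IP"
render_hosts "$ALI_PUBLIC" "$TX_PRIVATE"
```

The output is only printed; paste the relevant pair into /etc/hosts on each machine yourself.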

2.2 Edit the hostname file

[root@master ~]# vim /etc/hostname

Delete the existing content.

On the master, set the content to master; on the slave, set it to slave.

Run reboot to restart the machine.

Verify:

[root@master ~]# hostname

master

[root@slave ~]# hostname

slave

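3. SSH mutual trust

3.1 Run the following on every machine (just press Enter when prompted)

[root@master .ssh]# ssh-keygen -t rsa -P ''

Afterwards, the ~/.ssh directory contains the key pair id_rsa and id_rsa.pub; authorized_keys and known_hosts appear once keys have been exchanged and other hosts have been contacted: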

[root@master ~]# cd ~/.ssh

[root@master .ssh]# ls

authorized_keys id_rsa id_rsa.pub known_hosts

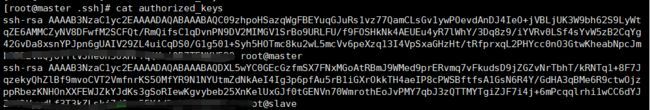

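3.2 Copy every machine's public key (the content of id_rsa.pub) into the authorized_keys file on every machine, so that each machine holds the public keys of all the others.

On the master, run:

[root@master src]# ssh slave

If you get a shell on the slave without a password prompt, mutual trust is working.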
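Step 3.2 boils down to appending each id_rsa.pub to each authorized_keys file. A minimal sketch of that step follows, assuming the other machine's public key has already been copied over as a local file. The function name add_authorized_key is made up for this example. It skips keys that are already present, so it is safe to run more than once.

```shell
#!/usr/bin/env bash
# Append a public key to an authorized_keys file unless it is already there.
# Arguments: path to a .pub file, path to the authorized_keys file.
add_authorized_key() {
  local pubkey_file="$1" auth_file="$2"
  local ssh_dir
  ssh_dir="$(dirname "$auth_file")"

  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"      # keep the directory private to its owner
  touch "$auth_file"
  chmod 600 "$auth_file"    # keep the key list private to its owner

  # -x whole-line match, -F fixed string, -f read the pattern from the key file
  if ! grep -qxFf "$pubkey_file" "$auth_file"; then
    cat "$pubkey_file" >> "$auth_file"
  fi
}

# Example call (the file name slave_id_rsa.pub is hypothetical):
# add_authorized_key ~/slave_id_rsa.pub ~/.ssh/authorized_keys
```

If password login is still enabled on the target, OpenSSH's ssh-copy-id root@slave achieves the same result in one step.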

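4. Installing Java (only on the master; copy it to the slave after configuring)

Download the JDK (make sure the file you download matches the version used in the rest of this guide, jdk-8u172-linux-x64.tar.gz):

[root@master src]# wget http://download.oracle.com/otn-pub/java/jdk/8u171-b11/512cd62ec5174c3487ac17c61aaa89e8/jdk-8u171=2-linux-x64.tar.gz

4.1 Extract the JDK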

[root@master src]# tar -zxvf jdk-8u172-linux-x64.tar.gz

Here I extracted it into /usr/local/src.

4.2 Edit /etc/profile to set the environment variables

Append to the end of the file:

# SET JAVA_PATH

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

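Note: adjust the paths to wherever you installed the JDK; mine is under /usr/local/src/.

After adding the lines, source the file so the variables take effect:

[root@master src]# source /etc/profile

Verify: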

[root@master ~]# java -version

java version "1.8.0_172"

Java(TM) SE Runtime Environment (build 1.8.0_172-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.172-b11, mixed mode)

4.3 Send the JDK to the slave

[root@master src]# scp -r jdk1.8.0_172 root@slave:/usr/local/src/

4.4 Send /etc/profile to the slave

[root@master src]# scp /etc/profile root@slave:/etc/

On the slave, source the file and verify:

[root@slave ~]# source /etc/profile

[root@slave ~]# java -version

java version "1.8.0_172"

Java(TM) SE Runtime Environment (build 1.8.0_172-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.172-b11, mixed mode)

5. Installing and configuring Hadoop

Download Hadoop:

[root@master src]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

5.1 Extract Hadoop

[root@master src]# tar -zxvf hadoop-2.7.7.tar.gz

Here I extracted it into /usr/local/src.

5.2 Edit the configuration files

- hadoop-env.sh

Append at the end:

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

- yarn-env.sh

Add the following on the line right after JAVA_HEAP_MAX=-Xmx1000m:

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

- slaves

Delete the existing localhost entry and add:

slave

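- core-site.xml

Set the following properties inside the configuration element:

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://master:9000</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>file:/usr/local/src/hadoop-2.7.7/tmp/</value>
</property>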
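Hadoop's site files wrap every name/value pair in a property element inside a single configuration element. As a rough sketch, the helper below generates such a file from plain name/value arguments. The function names hadoop_property and write_site_xml and the CONF_DIR variable are invented for this example, and the values are not XML-escaped, which is fine for the simple strings used in this guide.

```shell
#!/usr/bin/env bash
# Emit one Hadoop <property> element for a name/value pair.
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Write a *-site.xml file. Arguments: output path, then name/value pairs.
write_site_xml() {
  local out="$1"
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      hadoop_property "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$out"
}

# Defaults to a scratch directory; point CONF_DIR at
# /usr/local/src/hadoop-2.7.7/etc/hadoop to write the real file.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

write_site_xml "$CONF_DIR/core-site.xml" \
  fs.defaultFS   hdfs://master:9000 \
  hadoop.tmp.dir file:/usr/local/src/hadoop-2.7.7/tmp/

cat "$CONF_DIR/core-site.xml"
```

The same call pattern works for hdfs-site.xml and yarn-site.xml by passing their name/value pairs instead.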

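- hdfs-site.xml

Set the following properties:

<property>
  <name>dfs.namenode.secondary.http-address</name>
  <value>master:9001</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:/usr/local/src/hadoop-2.7.7/dfs/name</value>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:/usr/local/src/hadoop-2.7.7/dfs/data</value>
</property>
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>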

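- mapred-site.xml (copy mapred-site.xml.template to mapred-site.xml first; Hadoop reads only the latter)

Set the following property:

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>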

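- yarn-site.xml

Set the following properties:

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
  <value>org.apache.hadoop.mapred.ShuffleHandler</value>
</property>
<property>
  <name>yarn.resourcemanager.address</name>
  <value>master:8032</value>
</property>
<property>
  <name>yarn.resourcemanager.scheduler.address</name>
  <value>master:8030</value>
</property>
<property>
  <name>yarn.resourcemanager.resource-tracker.address</name>
  <value>master:8035</value>
</property>
<property>
  <name>yarn.resourcemanager.admin.address</name>
  <value>master:8033</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.address</name>
  <value>master:8088</value>
</property>
<property>
  <name>yarn.nodemanager.vmem-pmem-ratio</name>
  <value>5</value>
</property>
<property>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
</property>
<property>
  <name>yarn.nodemanager.log-aggregation.roll-monitoring-interval-seconds</name>
  <value>3600</value>
</property>
<property>
  <name>yarn.nodemanager.remote-app-log-dir</name>
  <value>/tmp/logs</value>
</property>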
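With this many properties, a typo in one name is easy to miss. The sketch below reads a value back out of a site file so it can be compared with what was intended. The function name get_property is made up for this example, and the awk matching is deliberately simple: it assumes each value sits on one line and does not handle XML comments. The file it reads is a small stand-in, not the real yarn-site.xml.

```shell
#!/usr/bin/env bash
# Print the value of one property from a Hadoop *-site.xml file.
# Arguments: path to the XML file, property name.
get_property() {
  local file="$1" name="$2"
  awk -v n="$name" '
    # Remember that the wanted <name> element has been seen.
    index($0, "<name>" n "</name>") { found = 1 }
    # The first <value> element at or after that point belongs to it.
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-character <value> and 8-character </value> tags.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$file"
}

# Stand-in file with two of the properties from this section.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
<configuration>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master:8088</value>
  </property>
  <property><name>yarn.log-aggregation-enable</name><value>true</value></property>
</configuration>
EOF

get_property "$sample" yarn.resourcemanager.webapp.address
get_property "$sample" yarn.log-aggregation-enable
```

Once Hadoop is on the PATH, hdfs getconf -confKey followed by a property name reports the value Hadoop itself resolves, which is the more authoritative check.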

If you want to know what each setting does, look it up yourself.

5.3 Create directories

Back in the hadoop-2.7.7 directory, create three directories:

[root@master hadoop-2.7.7]# mkdir -p dfs/name

[root@master hadoop-2.7.7]# mkdir -p dfs/data

[root@master hadoop-2.7.7]# mkdir tmp

5.4 Edit /etc/profile to set the environment variables

Append to the end of the file:

# SET HADOOP_PATH

export HADOOP_HOME=/usr/local/src/hadoop-2.7.7

export PATH=$PATH:$HADOOP_HOME/bin

Note: adjust the path to wherever you installed Hadoop; mine is under /usr/local/src/.

After adding the lines, source the file so the variables take effect:

[root@master src]# source /etc/profile
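Editing /etc/profile and then copying it over the slave's file replaces whatever the slave had there. A possible alternative, sketched below, keeps the variables in a separate drop-in file; on CentOS 7, /etc/profile sources the *.sh files in /etc/profile.d. The function name write_env_dropin and the file name hadoop-cluster.sh are assumptions made for this example, and the paths are the ones used in this guide.

```shell
#!/usr/bin/env bash
# Write the Java and Hadoop environment variables into a drop-in file.
# Argument: target directory (use /etc/profile.d on the real machines).
write_env_dropin() {
  local dir="$1"
  cat > "$dir/hadoop-cluster.sh" <<'EOF'
# Java and Hadoop locations used throughout this guide
export JAVA_HOME=/usr/local/src/jdk1.8.0_172
export JRE_HOME=${JAVA_HOME}/jre
export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib
export HADOOP_HOME=/usr/local/src/hadoop-2.7.7
export PATH=${JAVA_HOME}/bin:$PATH:$HADOOP_HOME/bin
EOF
}

# Dry run into a scratch directory so nothing under /etc is touched.
scratch="$(mktemp -d)"
write_env_dropin "$scratch"
cat "$scratch/hadoop-cluster.sh"
```

Copying only this one file to the slave leaves the rest of its /etc/profile untouched.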

Verify:

[root@master hadoop-2.7.7]# hadoop version

Hadoop 2.7.7

Subversion Unknown -r c1aad84bd27cd79c3d1a7dd58202a8c3ee1ed3ac

Compiled by stevel on 2018-07-18T22:47Z

Compiled with protoc 2.5.0

From source with checksum 792e15d20b12c74bd6f19a1fb886490

This command was run using /usr/local/src/hadoop-2.7.7/share/hadoop/common/hadoop-common-2.7.7.jar

5.5 Send Hadoop to the slave

[root@master src]# scp -r hadoop-2.7.7 root@slave:/usr/local/src/

5.6 Send /etc/profile to the slave

[root@master src]# scp /etc/profile root@slave:/etc/

On the slave, source the file and verify:

[root@slave ~]# source /etc/profile

[root@slave ~]# hadoop version

Hadoop 2.7.7

Subversion Unknown -r c1aad84bd27cd79c3d1a7dd58202a8c3ee1ed3ac

Compiled by stevel on 2018-07-18T22:47Z

Compiled with protoc 2.5.0

From source with checksum 792e15d20b12c74bd6f19a1fb886490

This command was run using /usr/local/src/hadoop-2.7.7/share/hadoop/common/hadoop-common-2.7.7.jar

5.7 Format the cluster on the master

[root@master logs]# hdfs namenode -format

Start the cluster:

[root@master sbin]# ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop-2.7.7/logs/hadoop-root-namenode-master.out

slave: starting datanode, logging to /usr/local/src/hadoop-2.7.7/logs/hadoop-root-datanode-slave.out

Starting secondary namenodes [master]

master: starting secondarynamenode, logging to /usr/local/src/hadoop-2.7.7/logs/hadoop-root-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop-2.7.7/logs/yarn-root-resourcemanager-master.out

slave: starting nodemanager, logging to /usr/local/src/hadoop-2.7.7/logs/yarn-root-nodemanager-slave.out
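The first line of that output says start-all.sh is deprecated in favor of start-dfs.sh and start-yarn.sh. A small wrapper along those lines might look like the following. The function name start_cluster is made up for this example, and it assumes both scripts live under sbin of the Hadoop directory, as they do in the 2.7.7 layout used here.

```shell
#!/usr/bin/env bash
# Start HDFS first, then YARN, using the two non-deprecated scripts.
# Argument: Hadoop install directory (defaults to $HADOOP_HOME).
start_cluster() {
  local home="${1:-${HADOOP_HOME:-}}"
  local script

  # Refuse to run if either script is missing or not executable.
  for script in start-dfs.sh start-yarn.sh; do
    if [ ! -x "$home/sbin/$script" ]; then
      echo "missing $home/sbin/$script" >&2
      return 1
    fi
  done

  # YARN is started only if HDFS came up without an error.
  "$home/sbin/start-dfs.sh" && "$home/sbin/start-yarn.sh"
}

# Example call on the master:
# start_cluster /usr/local/src/hadoop-2.7.7
```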

Verify:

[root@master sbin]# jps

5301 ResourceManager

5558 Jps

4967 NameNode

5150 SecondaryNameNode

[root@slave hadoop-2.7.7]# jps

8725 Jps

8470 DataNode

8607 NodeManager
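Comparing jps output by eye works for two machines but gets tedious when repeated. As a rough sketch, the function below takes jps output plus a list of expected daemon names and reports any that are absent. The name check_daemons is invented for this example, and it relies on jps printing one "pid ClassName" pair per line, as in the listings above.

```shell
#!/usr/bin/env bash
# Report which expected daemons are absent from jps output.
# Arguments: the jps output as one string, then the expected daemon names.
check_daemons() {
  local jps_output="$1"
  shift
  local daemon missing=0

  for daemon in "$@"; do
    # Anchor both ends so NameNode does not match SecondaryNameNode.
    if ! grep -qE "^[0-9]+ ${daemon}\$" <<<"$jps_output"; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}

# Example calls:
# on the master: check_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager
# on the slave:  check_daemons "$(jps)" DataNode NodeManager
```

The function returns a non-zero status when anything is missing, so it can gate later steps in a script.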

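At this point the cluster is up and running.

6. Pitfalls on Alibaba Cloud

- The firewall on an Alibaba Cloud server is enabled by default; turn it off before running this setup.

- Alibaba Cloud servers initially allow only ports 22, 80, and 443, so add a firewall rule in the console to open port 9000. If uploading files to HDFS fails, open port 50010 as well.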
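To see whether a firewall rule actually took effect, it helps to probe the port from the other machine. The sketch below does that with bash's built-in /dev/tcp redirection and the coreutils timeout command. The function name check_port is made up for this example, and the three-second timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Report whether a TCP port on a host accepts connections.
# Arguments: host name or IP, port number.
check_port() {
  local host="$1" port="$2"
  # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection.
  if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "$host:$port open"
  else
    echo "$host:$port closed"
    return 1
  fi
}

# Example calls:
# from the slave:  check_port master 9000
# from the master: check_port slave 50010
```

Port 9000 is the NameNode address set in core-site.xml. Port 50010 is the default DataNode data-transfer port in Hadoop 2.x, so it needs to be reachable on whichever machine runs the DataNode.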