Machine Learning (II): Linear Regression

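- I. Univariate Linear Regression
  - 1.1 Workflow of a Supervised Learning Algorithm
  - 1.2 Model Representation for Linear Regression
  - 1.3 Cost Function
  - 1.4 Gradient Descent
  - 1.5 Gradient Descent for Linear Regression
- II. Multivariate Linear Regression
  - 2.1 Multiple Features
  - 2.2 Gradient Descent with Multiple Variables
    - 2.2.1 Feature Scaling
    - 2.2.2 Learning Rate
  - 2.3 Features and Polynomial Regression
  - 2.4 Normal Equation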

I. Univariate Linear Regression

Univariate linear regression is also known as linear regression with one variable.

1.1 Workflow of a Supervised Learning Algorithm

1.2 Model Representation for Linear Regression

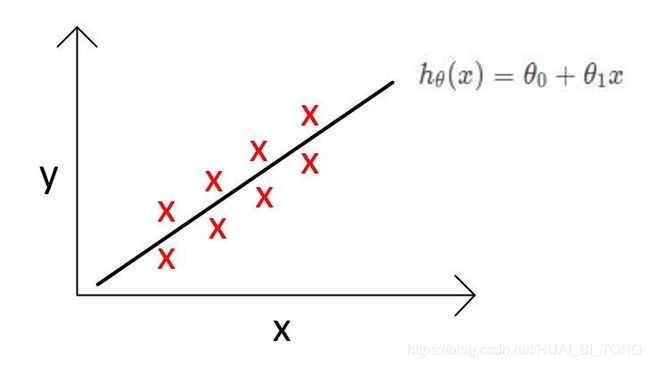

Hypothesis: $h_{\theta}(x)=\theta_{0}+\theta_{1}x$, where $\theta_0$ and $\theta_1$ are the model parameters and $x$ is the input variable (feature). In the figure, $y$ is the output (target) variable.

1.3 Cost Function

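The cost function, also called the squared error function or squared error cost function, measures how well the hypothesis fits the training examples $(x,y)$. We want to choose $\theta_{0}$ and $\theta_{1}$ so that $h_{\theta}(x)$ is close to $y$ on the training set.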

Cost function:

$$J(\theta_{0},\theta_{1})=\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)^{2}$$

where $m$ is the number of training examples and $(x^{(i)},y^{(i)})$ is the $i$-th training example.

Our goal is to find the values of $\theta_{0}$ and $\theta_{1}$ that minimize the cost: $\min_{\theta_{0},\theta_{1}}J(\theta_{0},\theta_{1})$.
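As a minimal sketch, the hypothesis and the cost function can be written in plain Python as below. The function names `hypothesis` and `cost` and the toy data points are made up for illustration; the training data are assumed to come as two equal-length lists.

```python
def hypothesis(theta0, theta1, x):
    """h_theta(x) = theta0 + theta1 * x"""
    return theta0 + theta1 * x


def cost(theta0, theta1, xs, ys):
    """Squared-error cost J(theta0, theta1) = 1/(2m) * sum((h(x_i) - y_i)^2)."""
    m = len(xs)
    total = sum((hypothesis(theta0, theta1, x) - y) ** 2 for x, y in zip(xs, ys))
    return total / (2 * m)


# Hypothetical toy data lying exactly on y = 1 + 2x
xs = [1.0, 2.0, 3.0]
ys = [3.0, 5.0, 7.0]
print(cost(1.0, 2.0, xs, ys))  # perfect fit -> 0.0
print(cost(0.0, 2.0, xs, ys))  # every prediction is off by 1 -> 0.5
```

Minimizing $J$ means searching for the pair $(\theta_0,\theta_1)$ that drives this number down; here $(1, 2)$ already gives the minimum value $0$.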

1.4 Gradient Descent

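Gradient descent is an algorithm for finding a minimum of a function; here we use it to minimize the cost function $J(\theta_{0},\theta_{1})$. In general, starting from different initial parameter values can lead to different local minima (for the squared-error cost of linear regression, which is convex, every local minimum is also a global minimum). The gradient descent algorithm is: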

$$\text{repeat until convergence } \left\{\ \theta_{j}:=\theta_{j}-\alpha\frac{\partial}{\partial\theta_{j}}J(\theta_{0},\theta_{1})\quad(\text{for } j=0 \text{ and } j=1)\ \right\}$$

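Here $\alpha$ is the learning rate, which controls the step size of each gradient descent update. A large $\alpha$ gives large, aggressive steps; a small $\alpha$ gives small steps and slow progress. In the update above, $\theta_{0}$ and $\theta_{1}$ must be updated simultaneously: compute both partial derivatives from the current parameter values first, then assign the new $\theta_{0}$ and $\theta_{1}$.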
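The difference between a simultaneous update and a naive one-at-a-time update shows up in a small sketch. It assumes a hypothetical cost $J(\theta_0,\theta_1)=(\theta_0+\theta_1-1)^2$, chosen only because its two partial derivatives depend on both parameters; `grad_J`, `simultaneous_step` and `sequential_step` are illustrative names.

```python
def grad_J(theta):
    """Partial derivatives of the hypothetical cost J = (theta0 + theta1 - 1)**2."""
    r = theta[0] + theta[1] - 1
    return [2 * r, 2 * r]


def simultaneous_step(theta, grad, alpha):
    """Correct update: evaluate every partial derivative at the OLD theta, then assign."""
    g = grad(theta)
    return [t - alpha * gj for t, gj in zip(theta, g)]


def sequential_step(theta, grad, alpha):
    """Incorrect update: the derivative for theta1 already sees the new theta0."""
    theta = list(theta)
    for j in range(len(theta)):
        theta[j] = theta[j] - alpha * grad(theta)[j]
    return theta


print(simultaneous_step([0.0, 0.0], grad_J, 0.25))  # [0.5, 0.5]
print(sequential_step([0.0, 0.0], grad_J, 0.25))    # [0.5, 0.25]
```

Starting from the same point, the two variants already disagree after one step, which is why the update rule insists on computing all derivatives before overwriting any parameter.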

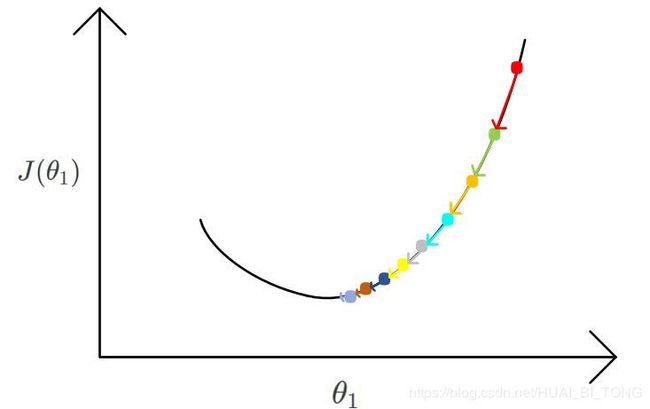

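In the figure above there is only one parameter, $\theta_{1}$. As gradient descent approaches a local minimum, the derivative $\frac{d}{d\theta_{1}}J(\theta_{1})$ gets smaller, so the actual step $\alpha\frac{d}{d\theta_{1}}J(\theta_{1})$ shrinks automatically even though $\alpha$ stays fixed. At the minimum the derivative is $0$ and $\theta_{1}$ stops changing, so there is no need to decrease $\alpha$ over time.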
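A small sketch of this effect, assuming a hypothetical one-parameter cost $J(\theta_1)=\theta_1^2$ (derivative $2\theta_1$) and a fixed $\alpha=0.1$; `descend_1d` is an illustrative name. The recorded step sizes shrink on their own while $\alpha$ never changes.

```python
def descend_1d(dJ, theta, alpha, iters):
    """Gradient descent on one parameter with a FIXED alpha.

    Records |alpha * dJ(theta)|, the distance actually moved at each iteration.
    """
    steps = []
    for _ in range(iters):
        step = alpha * dJ(theta)
        steps.append(abs(step))
        theta -= step
    return theta, steps


# Hypothetical convex cost J(theta1) = theta1**2, so dJ/dtheta1 = 2 * theta1
theta1, steps = descend_1d(lambda t: 2 * t, theta=1.0, alpha=0.1, iters=20)
print([round(s, 4) for s in steps[:4]])  # [0.2, 0.16, 0.128, 0.1024]
print(theta1)                            # close to the minimum at 0
```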


1.5 Gradient Descent for Linear Regression

Linear regression model: $h_{\theta}(x)=\theta_{0}+\theta_{1}x$

$$J(\theta_{0},\theta_{1})=\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)^{2}$$

Gradient descent algorithm:

$$\text{repeat until convergence } \left\{\ \theta_{j}:=\theta_{j}-\alpha\frac{\partial}{\partial\theta_{j}}J(\theta_{0},\theta_{1})\quad(\text{for } j=0 \text{ and } j=1)\ \right\}$$

Apply gradient descent to minimize the linear regression cost function: $\min_{\theta_{0},\theta_{1}}J(\theta_{0},\theta_{1})$

Working out the partial derivatives gives the update rules for $\theta_{0}$ and $\theta_{1}$:

$$\frac{\partial}{\partial\theta_{j}}J(\theta_{0},\theta_{1})=\frac{\partial}{\partial\theta_{j}}\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)^{2}=\frac{\partial}{\partial\theta_{j}}\frac{1}{2m}\sum_{i=1}^{m}\left(\theta_{0}+\theta_{1}x^{(i)}-y^{(i)}\right)^{2}$$

By the chain rule, the factor $2$ from the square cancels the $\frac{1}{2}$, and the inner derivative of $\theta_{0}+\theta_{1}x^{(i)}-y^{(i)}$ is $1$ with respect to $\theta_{0}$ and $x^{(i)}$ with respect to $\theta_{1}$:

$$\begin{cases}j=0: & \dfrac{\partial}{\partial\theta_{0}}J(\theta_{0},\theta_{1})=\dfrac{1}{m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)\\ j=1: & \dfrac{\partial}{\partial\theta_{1}}J(\theta_{0},\theta_{1})=\dfrac{1}{m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)x^{(i)}\end{cases}$$
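One way to sanity-check these two partial derivatives is to compare them with central finite differences of $J$ on a tiny made-up dataset. The helper names `cost`, `analytic_grad` and `numeric_grad`, the step `eps`, and the test point $(0.5, 0.5)$ are assumptions for this sketch.

```python
def cost(theta0, theta1, xs, ys):
    """J(theta0, theta1) = 1/(2m) * sum((theta0 + theta1*x - y)^2)."""
    m = len(xs)
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def analytic_grad(theta0, theta1, xs, ys):
    """The two partial derivatives derived above."""
    m = len(xs)
    errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
    d0 = sum(errors) / m
    d1 = sum(e * x for e, x in zip(errors, xs)) / m
    return d0, d1


def numeric_grad(theta0, theta1, xs, ys, eps=1e-6):
    """Central finite differences, used only to check the analytic formulas."""
    d0 = (cost(theta0 + eps, theta1, xs, ys) - cost(theta0 - eps, theta1, xs, ys)) / (2 * eps)
    d1 = (cost(theta0, theta1 + eps, xs, ys) - cost(theta0, theta1 - eps, xs, ys)) / (2 * eps)
    return d0, d1


xs, ys = [1.0, 2.0, 3.0], [3.0, 5.0, 7.0]   # hypothetical data on y = 1 + 2x
print(analytic_grad(0.5, 0.5, xs, ys))       # (-3.5, -8.0)
print(numeric_grad(0.5, 0.5, xs, ys))        # approximately the same values
```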

$$\begin{aligned}&\text{repeat until convergence } \{\\&\quad\theta_{0}:=\theta_{0}-\alpha\frac{1}{m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)\\&\quad\theta_{1}:=\theta_{1}-\alpha\frac{1}{m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)x^{(i)}\\&\}\end{aligned}$$
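Putting the two update rules together, a minimal batch gradient descent for one variable might look like this. The data are made up (they lie exactly on $y=1+2x$), and $\alpha=0.05$ with a fixed budget of 5000 iterations are assumptions chosen for this toy set in place of a real convergence test.

```python
def gradient_descent_univariate(xs, ys, alpha=0.05, iters=5000):
    """Batch gradient descent for h(x) = theta0 + theta1 * x, starting from (0, 0)."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    for _ in range(iters):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # simultaneous update: both gradients were computed from the old thetas
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]   # hypothetical data on y = 1 + 2x
print(gradient_descent_univariate(xs, ys))  # approximately (1.0, 2.0)
```

Each pass uses all $m$ examples to compute the gradient ("batch" gradient descent), exactly as in the sums above.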

II. Multivariate Linear Regression

2.1 Multiple Features

Hypothesis: $h_{\theta}(x)=\theta_{0}+\theta_{1}x_{1}+\theta_{2}x_{2}+\dots+\theta_{n}x_{n}=\theta^{T}x$, where $n$ is the number of features, $\theta$ holds the model parameters, and $x$ holds the input features. By convention we add $x_{0}=1$, so that $\theta_{0}$ is multiplied by $1$ and both $\theta$ and $x$ are $(n+1)$-dimensional vectors:

$$x=\begin{bmatrix}x_{0}\\x_{1}\\\vdots\\x_{n}\end{bmatrix},\qquad\theta=\begin{bmatrix}\theta_{0}\\\theta_{1}\\\vdots\\\theta_{n}\end{bmatrix}$$
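With the $x_0=1$ convention, evaluating the hypothesis is just a dot product. In this sketch `h` is a hypothetical helper and the parameter and feature values are arbitrary.

```python
def h(theta, features):
    """Return theta^T x, where x = [x_0, x_1, ..., x_n] with x_0 = 1 prepended here."""
    x = [1.0] + list(features)
    if len(x) != len(theta):
        raise ValueError("theta must have n + 1 entries for n features")
    return sum(t * xi for t, xi in zip(theta, x))


theta = [1.0, 2.0, 3.0]      # theta_0, theta_1, theta_2
print(h(theta, [4.0, 5.0]))  # 1*1 + 2*4 + 3*5 = 24.0
```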

2.2 Gradient Descent with Multiple Variables

$$\begin{aligned}
&\text{Hypothesis: } h_{\theta}(x)=\theta^{T}x=\theta_{0}+\theta_{1}x_{1}+\theta_{2}x_{2}+\dots+\theta_{n}x_{n}\\
&\text{Parameters: } \theta_{0},\theta_{1},\dots,\theta_{n}\\
&\text{Cost function: } J(\theta)=J(\theta_{0},\theta_{1},\dots,\theta_{n})=\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)^{2}\\
&\text{Gradient descent: repeat } \left\{\theta_{j}:=\theta_{j}-\alpha\frac{\partial}{\partial\theta_{j}}J(\theta)\right\}\\
&\text{New algorithm } (n\geq 1)\text{: repeat } \left\{\theta_{j}:=\theta_{j}-\alpha\frac{1}{m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)x_{j}^{(i)}\right\}
\end{aligned}$$

In the new algorithm, $\theta_{j}$ is updated simultaneously for $j=0,1,\dots,n$; since $x_{0}^{(i)}=1$, the $j=0$ case reduces to the $\theta_{0}$ update from section 1.5.
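A plain-Python sketch of the multivariate update, assuming each training example is given as a list $[x_1,\dots,x_n]$ and prepending $x_0=1$ inside the function. The dataset (generated from $y=1+2x_1+3x_2$), $\alpha=0.1$ and the iteration count are illustrative choices, not values from the text.

```python
def gradient_descent(X, y, alpha=0.1, iters=2000):
    """Batch gradient descent for multivariate linear regression.

    X: list of m training examples, each [x_1, ..., x_n] (x_0 = 1 is added here)
    y: list of m targets
    Returns theta = [theta_0, ..., theta_n].
    """
    rows = [[1.0] + [float(v) for v in r] for r in X]   # prepend x_0 = 1
    m, n1 = len(rows), len(rows[0])
    theta = [0.0] * n1
    for _ in range(iters):
        # error_i = h_theta(x^(i)) - y^(i)
        errors = [sum(t * xj for t, xj in zip(theta, r)) - yi for r, yi in zip(rows, y)]
        # gradient_j = 1/m * sum_i error_i * x_j^(i), computed for every j before updating
        grad = [sum(e * r[j] for e, r in zip(errors, rows)) / m for j in range(n1)]
        theta = [t - alpha * g for t, g in zip(theta, grad)]
    return theta


X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
y = [1, 3, 4, 6, 8]   # hypothetical targets from y = 1 + 2*x1 + 3*x2
print(gradient_descent(X, y))  # approximately [1.0, 2.0, 3.0]
```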

2.2.1 Feature Scaling

Feature scaling puts the features into similar ranges so that gradient descent converges faster. One way to do it is mean normalization.

Mean normalization: $x_{j}:=\dfrac{x_{j}-\mu_{j}}{s_{j}}$

where $\mu_{j}$ is the mean of feature $j$ over the training set and $s_{j}$ is its standard deviation.
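A sketch of mean normalization for one feature column using Python's `statistics` module. It assumes the population standard deviation (`statistics.pstdev`); the sample standard deviation (`statistics.stdev`) would serve equally well for scaling. The function name and the raw values are hypothetical. It also returns $\mu_j$ and $s_j$, because new inputs have to be scaled with the same values before prediction.

```python
import statistics


def mean_normalize(column):
    """Return ([(x - mu) / s for x in column], mu, s) for one feature column."""
    mu = statistics.mean(column)
    s = statistics.pstdev(column)   # population standard deviation (an assumption)
    if s == 0:
        raise ValueError("a constant feature cannot be divided by its standard deviation")
    return [(x - mu) / s for x in column], mu, s


sizes = [1000.0, 2000.0, 3000.0]   # hypothetical raw feature values
scaled, mu, s = mean_normalize(sizes)
print(scaled)                      # roughly [-1.22, 0.0, 1.22]
new_x = (2500.0 - mu) / s          # scale a new input with the SAME mu and s
```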

2.2.2 Learning Rate

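The number of iterations gradient descent needs to converge varies from problem to problem. Plotting $J(\theta)$ against the number of iterations shows when the algorithm has converged: when it is working correctly, $J(\theta)$ decreases after every iteration. An automatic convergence test is to declare convergence when $J(\theta)$ decreases by less than a small threshold $\varepsilon$ (e.g. $\varepsilon=10^{-3}$) in one iteration.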
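The automatic convergence test can be sketched as a loop that records $J(\theta)$ after each iteration (this history is what one would plot against the iteration count) and stops once the decrease falls below $\varepsilon$. The `step` and `cost` callables, the toy cost $J(\theta)=\theta^2$, and the choice to raise an error when $J$ increases are assumptions of this sketch, not part of the original text.

```python
def run_until_converged(step, cost, theta, epsilon=1e-3, max_iters=100_000):
    """Apply `step` repeatedly; stop when J decreases by less than epsilon in one iteration.

    step: callable theta -> updated theta (one gradient descent update)
    cost: callable theta -> J(theta)
    Returns the final theta and the list of J values (initial value plus one per iteration).
    """
    history = [cost(theta)]
    for _ in range(max_iters):
        theta = step(theta)
        history.append(cost(theta))
        decrease = history[-2] - history[-1]
        if decrease < 0:
            # J went up: a sign that alpha is too large (see the list below)
            raise ValueError("J(theta) increased; alpha is probably too large")
        if decrease < epsilon:
            break
    return theta, history


# Hypothetical toy cost J(theta) = theta**2 with a gradient step of alpha = 0.1
theta, history = run_until_converged(step=lambda t: t - 0.1 * 2 * t,
                                     cost=lambda t: t ** 2,
                                     theta=10.0)
print(len(history) - 1, "iterations, final theta =", theta)
```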


Choosing the learning rate $\alpha$:

- $\alpha$ too small: convergence is slow;

- $\alpha$ too large: $J(\theta)$ may not decrease on every iteration, and gradient descent may fail to converge;

- When choosing $\alpha$, try values such as $\dots, 0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1, \dots$
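To compare candidate learning rates, one can run a fixed number of iterations for each value in the list above and check whether $J(\theta)$ decreased on every iteration. The sketch below does this for the one-variable model on made-up data; `cost_history`, the data and the 50-iteration budget are assumptions. On this particular toy set, the two largest values ($0.3$ and $1$) make $J(\theta)$ grow instead of shrink.

```python
def cost_history(xs, ys, alpha, iters=50):
    """Run one-variable batch gradient descent from theta = (0, 0); record J after each iteration."""
    m = len(xs)
    t0 = t1 = 0.0
    history = []
    for _ in range(iters):
        errors = [t0 + t1 * x - y for x, y in zip(xs, ys)]
        g0 = sum(errors) / m
        g1 = sum(e * x for e, x in zip(errors, xs)) / m
        t0, t1 = t0 - alpha * g0, t1 - alpha * g1   # simultaneous update
        history.append(sum((t0 + t1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m))
    return history


xs, ys = [1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0]   # hypothetical data on y = 1 + 2x
for alpha in [0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0]:
    h = cost_history(xs, ys, alpha)
    always_down = all(a >= b for a, b in zip(h, h[1:]))
    print(f"alpha={alpha}: final J = {h[-1]:.3g}, decreased every iteration: {always_down}")
```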

2.3 Features and Polynomial Regression

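Polynomial regression can be fitted with the same machinery as linear regression by treating powers of a feature as additional features. The hypothesis stays linear in $\theta$, so it can fit fairly complex, even nonlinear, relationships between the original feature and $y$.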

Hypothesis: $h_{\theta}(x)=\theta_{0}+\theta_{1}x_{1}+\theta_{2}x_{2}+\theta_{3}x_{3}=\theta_{0}+\theta_{1}(\text{feature})+\theta_{2}(\text{feature})^{2}+\theta_{3}(\text{feature})^{3}$, where $x_{1}=(\text{feature})$, $x_{2}=(\text{feature})^{2}$, $x_{3}=(\text{feature})^{3}$.
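A sketch of building the polynomial features $x_1, x_2, x_3$ from a single raw feature. Because $(\text{feature})^3$ spans a much larger range than the feature itself, the columns are then mean-normalized (as in 2.2.1) before being handed to gradient descent. The helper names and the values in `sizes` are hypothetical.

```python
import statistics


def polynomial_features(values, degree=3):
    """Map each raw value v to [v, v**2, ..., v**degree], i.e. x_1, x_2, x_3 for degree 3."""
    return [[v ** p for p in range(1, degree + 1)] for v in values]


def scale_columns(rows):
    """Mean-normalize every column; returns the scaled rows and the (mu, s) used per column."""
    stats = [(statistics.mean(col), statistics.pstdev(col)) for col in zip(*rows)]
    scaled = [[(x - mu) / s for x, (mu, s) in zip(row, stats)] for row in rows]
    return scaled, stats


sizes = [1.0, 2.0, 3.0, 4.0, 5.0]     # hypothetical raw feature values
raw = polynomial_features(sizes)
print(raw[-1])                        # [5.0, 25.0, 125.0]: very different ranges
scaled, stats = scale_columns(raw)    # each column now has mean 0 and std 1
```

The scaled rows can be passed to any multivariate linear regression routine, since the model is still $\theta^Tx$ over the new features.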


2.4 Normal Equation

The normal equation is an analytical method for finding $\theta$: it finds the $\theta$ that minimizes the cost function by solving $\frac{\partial}{\partial\theta_{j}}J(\theta)=0$ for every $j$, and it does not require feature scaling.

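Solving with the normal equation gives $\theta=(X^{T}X)^{-1}X^{T}y$, where $X$ is the $m\times(n+1)$ design matrix whose $i$-th row is $(x^{(i)})^{T}$ (including $x_{0}=1$) and $y$ is the $m$-dimensional vector of targets.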

Derivation of $\theta=(X^{T}X)^{-1}X^{T}y$:

$$J(\theta)=\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)^{2}$$

Rewrite this in matrix form. The constant factor $\frac{1}{m}$ does not change where the minimum is, so it is dropped here:

$$J(\theta)=\frac{1}{2}(X\theta-y)^{T}(X\theta-y)=\frac{1}{2}(\theta^{T}X^{T}-y^{T})(X\theta-y)$$

$$=\frac{1}{2}\left(\theta^{T}X^{T}X\theta-\theta^{T}X^{T}y-y^{T}X\theta+y^{T}y\right)$$

Next, take the derivative of $J(\theta)$ with respect to $\theta$ (hint: $\frac{\partial(\theta^{T}a)}{\partial\theta}=\frac{\partial(a^{T}\theta)}{\partial\theta}=a$, and $\frac{\partial(\theta^{T}A\theta)}{\partial\theta}=2A\theta$ for a symmetric matrix $A$ such as $X^{T}X$):

$$\frac{\partial J(\theta)}{\partial\theta}=\frac{1}{2}\left(2X^{T}X\theta-X^{T}y-(y^{T}X)^{T}+0\right)$$

$$=\frac{1}{2}\left(2X^{T}X\theta-X^{T}y-X^{T}y\right)$$

$$=X^{T}X\theta-X^{T}y$$

Setting $\frac{\partial J(\theta)}{\partial\theta}=0$ gives $X^{T}X\theta=X^{T}y$, so $\theta=(X^{T}X)^{-1}X^{T}y$ (assuming $X^{T}X$ is invertible).
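A dependency-free sketch of the normal equation. Rather than forming $(X^{T}X)^{-1}$ explicitly, it solves the equivalent system $X^{T}X\theta=X^{T}y$ by Gauss-Jordan elimination with partial pivoting, a common numerical substitute for explicit inversion; in practice a linear-algebra library routine would normally do this step. The function name, the singularity threshold `tol=1e-12` and the toy data are assumptions.

```python
def normal_equation(X, y, tol=1e-12):
    """Solve (X^T X) theta = X^T y for theta.

    X: list of m examples, each [x_1, ..., x_n]; x_0 = 1 is prepended here.
    Uses Gauss-Jordan elimination with partial pivoting instead of an explicit inverse.
    """
    rows = [[1.0] + [float(v) for v in r] for r in X]
    n1 = len(rows[0])
    # A = X^T X and b = X^T y
    A = [[sum(r[i] * r[j] for r in rows) for j in range(n1)] for i in range(n1)]
    b = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(n1)]
    M = [A[i] + [b[i]] for i in range(n1)]        # augmented matrix [A | b]
    for col in range(n1):
        pivot = max(range(col, n1), key=lambda k: abs(M[k][col]))
        if abs(M[pivot][col]) < tol:
            raise ValueError("X^T X is singular (e.g. redundant features or m <= n)")
        M[col], M[pivot] = M[pivot], M[col]
        for k in range(n1):                       # clear this column in every other row
            if k != col:
                factor = M[k][col] / M[col][col]
                M[k] = [a - factor * c for a, c in zip(M[k], M[col])]
    return [M[i][n1] / M[i][i] for i in range(n1)]


X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
y = [1, 3, 4, 6, 8]             # hypothetical targets from y = 1 + 2*x1 + 3*x2
print(normal_equation(X, y))    # approximately [1.0, 2.0, 3.0], no alpha, no iterations
```

If one feature is an exact multiple of another, $X^{T}X$ is not invertible and the function raises instead of returning a meaningless result.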

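Comparison of gradient descent and the normal equation:

| Gradient descent | Normal equation |
|---|---|
| Need to choose $\alpha$ | No need to choose $\alpha$ |
| Needs many iterations | Computed in one step, no iterations |
| Works well even when the number of features $n$ is large | Needs to compute $(X^{T}X)^{-1}$, an $(n+1)\times(n+1)$ inverse costing roughly $O(n^{3})$, so it is slow when $n$ is large |
| Applicable to many kinds of models | Only works for linear models; not applicable to logistic regression and other models |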