Multi-class classification on CIFAR-10 with PyTorch

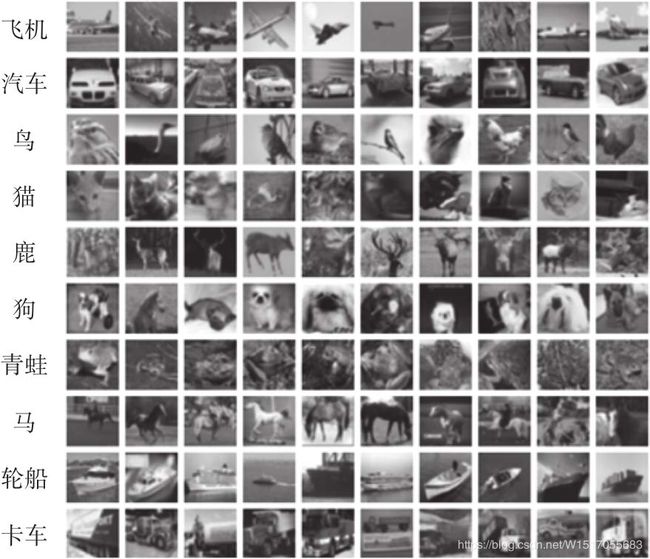

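Dataset description

The CIFAR-10 dataset consists of 60,000 32x32 color images in 10 classes, with 6,000 images per class. There are 50,000 training images and 10,000 test images. The dataset is divided into five training batches and one test batch, each with 10,000 images. The test batch contains exactly 1,000 randomly selected images from each class. The training batches contain the remaining images in random order, so an individual training batch may contain more images from one class than another. Taken together, however, the five training batches contain exactly 5,000 images from each class.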

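Downloading the dataset

Loading the data

The data can be loaded with torchvision, the dataset and preprocessing package that accompanies PyTorch. Here the dataset has already been downloaded and extracted into the data directory under the current directory, so the download parameter is set to False.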

import torch
import torchvision
import torchvision.transforms as transforms

# Normalize each of the R, G, B channels with mean 0.5 and standard deviation 0.5
transform = transforms.Compose(
    [transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]
)

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=False, transform=transform)
trainLoader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=False, transform=transform)
testLoader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
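To make the normalization step concrete, here is a minimal sketch of the per-channel arithmetic that Normalize applies, (x - mean) / std, written with plain tensor operations. The random tensor is only a stand-in for an image returned by ToTensor, whose values lie in [0, 1]; it is an assumption for illustration, not real CIFAR-10 data.

```python
import torch

# Stand-in for one image after ToTensor(): shape (3, 32, 32), values in [0, 1).
torch.manual_seed(0)
image = torch.rand(3, 32, 32)

# Per-channel mean and standard deviation, shaped to broadcast over H and W.
mean = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)
std = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)

# The same arithmetic Normalize performs on each channel: (x - mean) / std.
normalized = (image - mean) / std

# With mean 0.5 and std 0.5, values in [0, 1] are mapped into [-1, 1].
print(normalized.min().item() >= -1.0, normalized.max().item() <= 1.0)
```

With a mean and standard deviation of 0.5, the inputs end up roughly centered on zero, which is the usual motivation for this choice.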

Building the network

import torch.nn as nn
import torch.nn.functional as F

class CNNNet(nn.Module):
    def __init__(self):
        super(CNNNet, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5, stride=1)
        self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=36, kernel_size=3, stride=1)
        self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.fc1 = nn.Linear(1296, 128)  # 1296 = 36 channels * 6 * 6
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))  # 3x32x32 -> 16x14x14
        x = self.pool2(F.relu(self.conv2(x)))  # 16x14x14 -> 36x6x6
        x = x.view(-1, 36 * 6 * 6)             # flatten to (batch, 1296)
        x = F.relu(self.fc1(x))
        # Note: nn.CrossEntropyLoss expects raw logits, so this final ReLU is
        # usually omitted; it is kept here as in the original code.
        x = F.relu(self.fc2(x))
        return x

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
net = CNNNet()
net = net.to(device)
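The input size of fc1 (1296) follows from the layer arithmetic. As a rough check, the sketch below applies the standard output-size formula for convolution and pooling layers without dilation, floor((size + 2 * padding - kernel) / stride) + 1, to a 32x32 input. The helper name conv_out is hypothetical and not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a conv or pooling layer (no dilation, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 32                                   # CIFAR-10 images are 32x32
size = conv_out(size, kernel=5)             # conv1: 32 -> 28
size = conv_out(size, kernel=2, stride=2)   # pool1: 28 -> 14
size = conv_out(size, kernel=3)             # conv2: 14 -> 12
size = conv_out(size, kernel=2, stride=2)   # pool2: 12 -> 6
flat_features = 36 * size * size            # conv2 produces 36 channels
print(size, flat_features)                  # prints: 6 1296
```

The same calculation is handy whenever a kernel size or stride changes, since the first fully connected layer has to be resized to match.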

Inspecting the network structure

Printing the model with print(net) shows its layers:

CNNNet(
  (conv1): Conv2d(3, 16, kernel_size=(5, 5), stride=(1, 1))
  (pool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv2): Conv2d(16, 36, kernel_size=(3, 3), stride=(1, 1))
  (pool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (fc1): Linear(in_features=1296, out_features=128, bias=True)
  (fc2): Linear(in_features=128, out_features=10, bias=True)
)

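After the model is built, initialize its parameters

for m in net.modules():
    if isinstance(m, nn.Conv2d):
        # Alternative initializers. Each call overwrites the previous one,
        # so only one of them should be active at a time:
        # nn.init.normal_(m.weight)
        # nn.init.xavier_normal_(m.weight)
        nn.init.kaiming_normal_(m.weight)  # initialize the convolution weights
        nn.init.constant_(m.bias, 0)
    elif isinstance(m, nn.Linear):
        nn.init.normal_(m.weight)  # initialize the fully connected weights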

Training the model

import torch.optim as optim

# Loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

# Training loop
for epoch in range(10):
    running_loss = 0.0
    for i, data in enumerate(trainLoader, 0):
        # Fetch a batch of training data and move it to the device
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)

        # Zero the parameter gradients
        optimizer.zero_grad()

        outputs = net(inputs)  # forward pass
        loss = criterion(outputs, labels)
        loss.backward()  # backward pass
        optimizer.step()  # update the weights

        running_loss += loss.item()
        if i % 2000 == 1999:  # report the mean loss every 2000 mini-batches
            print('[%d, %4d] loss:%.3f' % (epoch + 1, i + 1, running_loss / 2000))
            running_loss = 0.0

print('Finished Training')
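The loss used in the training loop can be unpacked a little. The sketch below checks, on a small made-up batch, that nn.CrossEntropyLoss with its default mean reduction matches a log-softmax followed by the negative log-probability of the true class. The logits and labels are hypothetical values chosen only for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # hypothetical batch: 4 samples, 10 classes
labels = torch.tensor([3, 0, 9, 1])   # hypothetical ground-truth class indices

# Built-in loss, as used in the training loop.
builtin = nn.CrossEntropyLoss()(logits, labels)

# Manual version: log-softmax, pick the log-probability of the true class,
# negate it, and average over the batch.
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(len(labels)), labels].mean()

print(torch.allclose(builtin, manual))
```

Because the softmax is applied inside the loss, the network is expected to output raw scores rather than probabilities.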

Testing the model

correct = 0
total = 0
with torch.no_grad():
    for data in testLoader:
        images, labels = data
        images, labels = images.to(device), labels.to(device)
        outputs = net(images)
        _, predicted = torch.max(outputs, 1)  # index of the largest score in each row
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images:%d%%' % (100 * correct / total))
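Overall accuracy can hide large differences between classes. As a possible extension, the sketch below computes accuracy per class from predicted and true label tensors. The function name per_class_accuracy and the toy tensors are hypothetical; in the test loop above, the same function could be applied to the predictions and labels concatenated over all batches.

```python
import torch

def per_class_accuracy(predicted, labels, num_classes):
    """Return the accuracy for each class (None if the class never occurs)."""
    accuracies = []
    for c in range(num_classes):
        mask = labels == c                 # samples whose true class is c
        n = mask.sum().item()
        if n == 0:
            accuracies.append(None)
        else:
            accuracies.append((predicted[mask] == c).sum().item() / n)
    return accuracies

# Hypothetical predictions and labels for a 3-class problem.
labels = torch.tensor([0, 0, 1, 1, 2, 2])
predicted = torch.tensor([0, 1, 1, 1, 2, 0])
acc = per_class_accuracy(predicted, labels, num_classes=3)
print(acc)  # prints: [0.5, 1.0, 0.5]
```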

Results:

[10, 2000] loss:0.940

[10, 4000] loss:0.947

[10, 6000] loss:0.952

[10, 8000] loss:0.928

[10, 10000] loss:0.921

[10, 12000] loss:0.946

Finished Training

Start Testing

Accuracy of the network on the 10000 test images:66%

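Using global average pooling

In PyTorch, nn.AdaptiveAvgPool2d(1) implements global average pooling, and the corresponding nn.AdaptiveMaxPool2d(1) implements global max pooling.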

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5)
        self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=36, kernel_size=5)
        self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        # Global average pooling: reduces each channel to a single value
        self.aap = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(36, 10)

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))
        x = self.pool2(F.relu(self.conv2(x)))
        x = self.aap(x)              # (batch, 36, 1, 1)
        x = x.view(x.shape[0], -1)   # (batch, 36)
        x = self.fc(x)
        return x
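As a quick illustration of what the adaptive pooling layers compute when the output size is 1, the sketch below compares them with a plain mean and maximum over the two spatial dimensions. The 2x36x5x5 input is a made-up feature map with the shape that pool2 produces in the network above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 36, 5, 5)   # hypothetical feature map: batch 2, 36 channels, 5x5

gap = nn.AdaptiveAvgPool2d(1)(x)   # global average pooling -> (2, 36, 1, 1)
gmp = nn.AdaptiveMaxPool2d(1)(x)   # global max pooling     -> (2, 36, 1, 1)

# The same results computed directly over the height and width dimensions.
mean_ref = x.mean(dim=(2, 3), keepdim=True)
max_ref = x.amax(dim=(2, 3), keepdim=True)

print(gap.shape, torch.allclose(gap, mean_ref), torch.equal(gmp, max_ref))
```

Since each channel collapses to one number, the classifier only needs 36 inputs, which is why Net has far fewer parameters than CNNNet.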

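Improving performance with model ensembling

Model ensembling is an important way to improve a classifier or prediction system. The idea is simple and resembles the parable of the blind men and the elephant: each man touches only one part of the elephant, but combining what all of them felt yields a fairly complete picture that matches reality. Each blind man is like a single model, and combining the models can give a model that is stronger than any one of them. In practice, besides the diversity of the models, their individual performance also matters. If the models perform about equally well, the ensemble can take the mean of their predictions. If their performance differs widely, the ensemble may do worse than the best single model, and in that case a weighted average can be used instead.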
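The two combination rules mentioned here, the plain mean and the weighted mean of the models' predictions, might be sketched as follows. This is an illustration under stated assumptions and not the code used later in this post, which combines the models by majority vote. The function name ensemble_probs, the logits, and the weights are all hypothetical.

```python
import torch
import torch.nn.functional as F

def ensemble_probs(logits_list, weights=None):
    """Average (optionally weighted) the softmax outputs of several models."""
    probs = torch.stack([F.softmax(logits, dim=1) for logits in logits_list])  # (M, N, C)
    if weights is None:
        weights = torch.ones(len(logits_list))
    weights = torch.as_tensor(weights, dtype=probs.dtype)
    weights = weights / weights.sum()              # normalize so the weights sum to 1
    return (weights.view(-1, 1, 1) * probs).sum(dim=0)

# Hypothetical logits from three models for one sample and three classes.
a = torch.tensor([[4.0, 0.0, 0.0]])   # very confident in class 0
b = torch.tensor([[0.0, 2.0, 0.0]])   # prefers class 1
c = torch.tensor([[0.0, 2.0, 0.0]])   # prefers class 1

plain = ensemble_probs([a, b, c]).argmax(dim=1)
weighted = ensemble_probs([a, b, c], weights=[0.8, 0.1, 0.1]).argmax(dim=1)
print(plain.item(), weighted.item())  # prints: 1 0
```

With equal weights the two agreeing models win, while a large weight on the first model lets it override them. In practice the weights would have to come from something like validation accuracy, which is not covered here.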

Ensembling three models (CNNNet, Net, LeNet)

LeNet

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=5)
        self.conv2 = nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        out = F.relu(self.conv1(x))
        out = F.max_pool2d(out, 2)
        out = F.relu(self.conv2(out))
        out = F.max_pool2d(out, 2)
        out = out.view(out.size(0), -1)
        out = F.relu(self.fc1(out))
        out = F.relu(self.fc2(out))
        out = self.fc3(out)
        return out

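Putting the three models in a list

import numpy as np
from collections import Counter

print('==> Building model..')
net1 = CNNNet()
net2 = Net()
net3 = LeNet()

# Hyperparameters
BATCHSIZE = 100
EPOCHES = 20
LR = 0.001

# Assumption: the original post does not show how these loaders were created.
# Here they reuse the trainset and testset loaded earlier, with BATCHSIZE images per batch.
trainloader = torch.utils.data.DataLoader(trainset, batch_size=BATCHSIZE, shuffle=True, num_workers=2)
testloader = torch.utils.data.DataLoader(testset, batch_size=BATCHSIZE, shuffle=False, num_workers=2)

# Put the three networks in a list
mlps = [net1.to(device), net2.to(device), net3.to(device)]

optimizer = torch.optim.Adam([{"params": mlp.parameters()} for mlp in mlps], lr=LR)
loss_function = nn.CrossEntropyLoss()

for ep in range(EPOCHES):
    for img, label in trainloader:
        img, label = img.to(device), label.to(device)
        optimizer.zero_grad()  # clear the gradients of all three networks
        for mlp in mlps:
            mlp.train()
            out = mlp(img)
            loss = loss_function(out, label)
            loss.backward()  # each network accumulates its own gradients
        optimizer.step()

    # After every epoch, evaluate each model and the majority-vote ensemble
    pre = []
    vote_correct = 0
    mlps_correct = [0 for i in range(len(mlps))]
    with torch.no_grad():
        for img, label in testloader:
            img, label = img.to(device), label.to(device)
            label_np = label.cpu().numpy()
            for i, mlp in enumerate(mlps):
                mlp.eval()
                out = mlp(img)
                _, prediction = torch.max(out, 1)  # row-wise maximum
                pre_num = prediction.cpu().numpy()
                mlps_correct[i] += (pre_num == label_np).sum()
                pre.append(pre_num)
            arr = np.array(pre)  # shape: (number of models, batch size)
            pre.clear()
            # Majority vote across the models for every image in the batch
            result = [Counter(arr[:, i]).most_common(1)[0][0] for i in range(arr.shape[1])]
            vote_correct += (np.array(result) == label_np).sum()

    # Report accuracy in percent
    n_test = len(testloader.dataset)
    print("epoch:" + str(ep) + " ensemble accuracy: " + str(100 * vote_correct / n_test))
    for idx, correct in enumerate(mlps_correct):
        print("model " + str(idx) + " accuracy: " + str(100 * correct / n_test))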
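The voting step is easier to follow in isolation. The sketch below applies the same Counter-based majority vote to a small made-up prediction matrix with one row per model and one column per sample. The helper name majority_vote and the numbers are hypothetical.

```python
from collections import Counter
import numpy as np

def majority_vote(predictions):
    """predictions: array-like of shape (num_models, num_samples) with class indices."""
    arr = np.asarray(predictions)
    return np.array([Counter(arr[:, i]).most_common(1)[0][0]
                     for i in range(arr.shape[1])])

# Hypothetical predictions of three models on four samples.
preds = [
    [3, 5, 1, 0],   # model 0
    [3, 2, 1, 7],   # model 1
    [8, 2, 4, 9],   # model 2
]
print(majority_vote(preds).tolist())  # prints: [3, 2, 1, 0]
```

In the last column all three models disagree. Counter.most_common returns tied elements in the order they were first encountered, so the vote falls back to the first model in the list, which means the order of the models matters whenever there is a tie.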

Results:

epoch:18 ensemble accuracy: 69.64

model 0 accuracy: 61.44

model 1 accuracy: 61.41

model 2 accuracy: 65.49

epoch:19 ensemble accuracy: 68.71

model 0 accuracy: 62.31

model 1 accuracy: 60.47

model 2 accuracy: 65.8

The VGG model

import torch
import torch.nn as nn
import numpy as np
import torch.nn.functional as F
import torchvision.datasets
import torchvision.transforms as transforms
from collections import Counter

# Hyperparameters
BATCHSIZE = 100
EPOCHES = 20
LR = 0.001

# Layer configuration: a number is the output channel count of a 3x3 convolution,
# 'm' is a 2x2 max-pooling layer
cfg = {
    'vgg16': [64, 64, 'm', 128, 128, 'm', 256, 256, 256, 'm', 512, 512, 512, 'm', 512, 512, 512, 'm'],
    'vgg19': [64, 64, 'm', 128, 128, 'm', 256, 256, 256, 256, 'm', 512, 512, 512, 512, 'm', 512, 512, 512, 512, 'm']
}

class VGG(nn.Module):
    def __init__(self, vgg_name):
        super(VGG, self).__init__()
        self.features = self.make_layers(cfg[vgg_name])
        self.classifier = nn.Linear(512, 10)

    def forward(self, x):
        out = self.features(x)
        out = out.view(out.size(0), -1)  # flatten to (batch, 512)
        out = self.classifier(out)
        return out

    def make_layers(self, cfg):
        layers = []
        in_channels = 3
        for x in cfg:
            if x == 'm':
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            else:
                layers += [nn.Conv2d(in_channels, x, kernel_size=3, padding=1),
                           nn.BatchNorm2d(x),
                           nn.ReLU(inplace=True)]
                in_channels = x
        # With kernel_size=1 and stride=1 this pooling layer leaves the tensor unchanged
        layers += [nn.AvgPool2d(kernel_size=1, stride=1)]
        return nn.Sequential(*layers)
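The classifier's input size of 512 can be read off the configuration lists. As a small check, the sketch below counts the convolution and pooling entries and derives the flattened feature size for a 32x32 input, relying on the fact that the 3x3 convolutions with padding 1 keep the spatial size and each 2x2 pooling layer halves it. The helper name summarize is hypothetical.

```python
cfg = {
    'vgg16': [64, 64, 'm', 128, 128, 'm', 256, 256, 256, 'm',
              512, 512, 512, 'm', 512, 512, 512, 'm'],
    'vgg19': [64, 64, 'm', 128, 128, 'm', 256, 256, 256, 256, 'm',
              512, 512, 512, 512, 'm', 512, 512, 512, 512, 'm'],
}

def summarize(layers, input_size=32):
    """Return (number of conv layers, final spatial size, flattened feature count)."""
    convs = sum(1 for x in layers if x != 'm')
    pools = sum(1 for x in layers if x == 'm')
    size = input_size // (2 ** pools)            # each 2x2 pool halves height and width
    channels = [x for x in layers if x != 'm'][-1]
    return convs, size, channels * size * size

for name in ('vgg16', 'vgg19'):
    print(name, summarize(cfg[name]))
# prints:
# vgg16 (13, 1, 512)
# vgg19 (16, 1, 512)
```

Five pooling layers reduce 32x32 to 1x1, so the 512 output channels flatten to exactly 512 features. A larger input image would need a different classifier size.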

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

transform_train = transforms.Compose([
    transforms.RandomCrop(32, padding=4),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])

transform_test = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=False, transform=transform_train)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=128, shuffle=True, num_workers=0)

testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=False, transform=transform_test)
testloader = torch.utils.data.DataLoader(testset, batch_size=100, shuffle=False, num_workers=0)

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

vgg_name = 'vgg16'  # set to 'vgg19' to train the deeper variant
net = VGG(vgg_name)
mlps = [net.to(device)]

optimizer = torch.optim.Adam([{"params": mlp.parameters()} for mlp in mlps], lr=LR)
loss_function = nn.CrossEntropyLoss()

for ep in range(EPOCHES):
    for img, label in trainloader:
        img, label = img.to(device), label.to(device)
        optimizer.zero_grad()  # clear the gradients
        for mlp in mlps:
            mlp.train()
            out = mlp(img)
            loss = loss_function(out, label)
            loss.backward()  # compute the gradients
        optimizer.step()

    # Evaluate on the test set after every epoch
    pre = []
    vote_correct = 0
    mlps_correct = [0 for i in range(len(mlps))]
    with torch.no_grad():
        for img, label in testloader:
            img, label = img.to(device), label.to(device)
            label_np = label.cpu().numpy()
            for i, mlp in enumerate(mlps):
                mlp.eval()
                out = mlp(img)
                _, prediction = torch.max(out, 1)  # row-wise maximum
                pre_num = prediction.cpu().numpy()
                mlps_correct[i] += (pre_num == label_np).sum()
                pre.append(pre_num)
            arr = np.array(pre)
            pre.clear()
            result = [Counter(arr[:, i]).most_common(1)[0][0] for i in range(arr.shape[1])]
            vote_correct += (np.array(result) == label_np).sum()

    # With a single model in the list, the vote equals that model's prediction
    # print("epoch:" + str(ep) + " ensemble accuracy: " + str(100 * vote_correct / len(testloader.dataset)))
    n_test = len(testloader.dataset)
    for idx, correct in enumerate(mlps_correct):
        print(vgg_name.upper() + " accuracy after epoch " + str(ep) + ": " + str(100 * correct / n_test))

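vgg16

VGG16 accuracy after epoch 11: 85.62

VGG16 accuracy after epoch 12: 87.13

VGG16 accuracy after epoch 13: 87.86

VGG16 accuracy after epoch 14: 86.83

VGG16 accuracy after epoch 15: 88.15

VGG16 accuracy after epoch 16: 88.19

VGG16 accuracy after epoch 17: 89.2

VGG16 accuracy after epoch 18: 88.65

VGG16 accuracy after epoch 19: 87.97

vgg19

VGG19 accuracy after epoch 11: 84.89

VGG19 accuracy after epoch 12: 83.92

VGG19 accuracy after epoch 13: 85.73

VGG19 accuracy after epoch 14: 86.56

VGG19 accuracy after epoch 15: 86.86

VGG19 accuracy after epoch 16: 87.43

VGG19 accuracy after epoch 17: 87.38

VGG19 accuracy after epoch 18: 87.03

VGG19 accuracy after epoch 19: 87.69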