[Paper Reading] [3D Object Detection] VoteNet: Deep Hough Voting for 3D Object Detection in Point Clouds

Contents

- Hough Voting

- VoteNet

- Network Architecture

- Voting in Point Clouds

- Object Proposal and Classification from Votes

- Experiments

- Discussion

- Code Walkthrough

- backbone_net

- vgen: Voting Module

- pnet: Proposal Module

- Loss

Paper: Deep Hough Voting for 3D Object Detection in Point Clouds

ICCV 2019

A paper co-authored by two well-known researchers, Charles R. Qi and Kaiming He

Hough Voting

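The paper's title is Deep Hough Voting, so let's start with Hough voting.

Most readers have probably heard of detecting lines with the Hough transform. A line can be described by two parameters (r, θ). Many lines pass through any single image point, each with its own (r, θ); in the parameter plane these pairs trace out a curve, so one image point corresponds to one curve in parameter space. Many points yield many curves. If those points lie on a common line, that line's parameters (r*, θ*) lie on every one of the curves, so the curves appear to intersect at (r*, θ*). Viewed as voting, each curve casts a vote for every parameter pair it passes through: other locations collect few votes while (r*, θ*) collects many, so (r*, θ*) is taken as the parameters of the line.

So the idea behind the Hough transform is to vote in parameter space and take the value with the most votes as the result.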
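The voting idea can be sketched in a few lines of plain Python. This is only an illustration of the classic line-detection case, not code from the paper; the function name `hough_line` and the parameters `n_theta` and `r_step` are my own hypothetical choices for discretizing the (r, θ) plane.

```python
import math
from collections import Counter

def hough_line(points, n_theta=180, r_step=1.0):
    """Vote in a discretized (r, theta) space and return the most-voted cell."""
    votes = Counter()
    for x, y in points:
        # Each point votes for every line (r, theta) that passes through it
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            r = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(r / r_step), t)] += 1
    (r_bin, t_bin), count = votes.most_common(1)[0]
    return r_bin * r_step, math.pi * t_bin / n_theta, count

# Ten collinear points on the horizontal line y = 5
points = [(10.0 * i, 5.0) for i in range(10)]
r, theta, count = hough_line(points)
print(r, theta, count)
```

All ten curves meet in the cell for r = 5, θ = π/2, which is the horizontal line the points were taken from.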

The paper describes Hough voting as follows:

A traditional Hough voting 2D detector [24] comprises an offline and an online step. First, given a collection of images with annotated object bounding boxes, a codebook is constructed with stored mappings between image patches (or their features) and their offsets to the corresponding object centers. At inference time, interest points are selected from the image to extract patches around them. These patches are then compared against patches in the codebook to retrieve offsets and compute votes. As object patches will tend to vote in agreement, clusters will form near object centers. Finally, the object boundaries are retrieved by tracing cluster votes back to their generating patches.

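My reading of this passage: we start with a set of images (or features) with annotated boxes. Each image patch (or its feature) inside a box plays the role of a point in line detection, and the offset from the patch to the object center plays the role of the parameter being voted on. At inference time, interest points are selected, the patches around them (or their features) are matched against the codebook to retrieve offsets, and the votes are computed. See reference [24] for details.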

VoteNet

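The core problem this paper addresses is that, unlike in 2D object detection, the center of a 3D object is often some distance away from any scanned point and lies in empty space: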

We face a major challenge when directly predicting bounding box parameters from scene points: a 3D object centroid can be far from any surface point thus hard to regress accurately in one step.

Building on Hough voting, the paper proposes the VoteNet architecture, which improves on Hough voting in the following ways:

“Interest points are described and selected by deep neural networks instead of depending on hand-crafted features.

Vote generation is learned by a network instead of using a codebook. Leveraging larger receptive fields, voting can be made less ambiguous and thus more effective. In addition, a vote location can be augmented with a feature vector allowing for better aggregation.

Vote aggregation is realized through point cloud processing layers with trainable parameters. Utilizing the vote features, the network can potentially filter out low quality votes and generate improved proposals.

Object proposals in the form of: location, dimensions, orientation and even semantic classes can be directly generated from the aggregated features, mitigating the need to trace back votes’ origins.”

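All four points above are improvements over Hough voting: neural networks are used to select interest points, generate votes, aggregate votes, and generate boxes.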

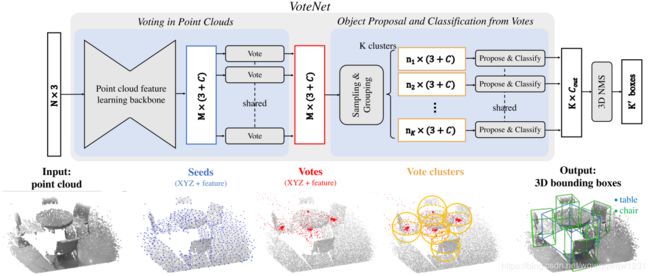

Network Architecture

The figure above shows VoteNet's architecture. Readers familiar with PointRCNN will find it easy to follow.

Voting in Point Clouds

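This part uses PointNet++ as the backbone, with 4 SA (set abstraction) layers and 2 FP (feature propagation) layers. It produces a tensor of size $M \times (3 + C)$ that holds the selected seeds and the feature vector of each seed. With the backbone parameters given in the paper, M=1024 and C=256.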

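Voting simply means using an MLP to regress, for each seed, the offset to its corresponding object center. Besides the offset to the center of the object the seed belongs to, the MLP also regresses an offset in feature space. The paper describes this structure as: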

“The voting module MLP has output sizes of 256, 256, 259 for its fully connected layers. The last fully connected layer does not have ReLU or BatchNorm.”

The resulting position offset and feature offset are added element-wise to the backbone output, updating the position and feature of each seed.
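Written as formulas, the update looks as follows. The symbols are my own shorthand for this note (seed position $x_i$, seed feature $f_i$, vote position $y_i$, vote feature $g_i$), so treat the notation as an assumption rather than a quotation.

```latex
\begin{aligned}
[\Delta x_i;\ \Delta f_i] &= \mathrm{MLP}(f_i), \qquad \Delta x_i \in \mathbb{R}^{3},\ \Delta f_i \in \mathbb{R}^{C} \\
y_i &= x_i + \Delta x_i \\
g_i &= f_i + \Delta f_i
\end{aligned}
```

With C=256 this matches the 259 output channels of the last fully connected layer quoted above: 3 for the position offset plus 256 for the feature offset.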

Object Proposal and Classification from Votes

Sampling and Grouping

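Farthest point sampling is applied to the votes generated from the seeds, and the votes within a fixed distance of each sampled point are then grouped together. The experiments validate this approach, with a radius of 0.2 working best.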
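A minimal sketch of the two steps in plain Python, assuming a tiny list of vote positions. The helper names `farthest_point_sampling` and `ball_group` are hypothetical; the real implementation runs as CUDA ops and also caps the number of points per group, which is left out here.

```python
import math

def farthest_point_sampling(points, k, start=0):
    """Greedily pick k indices, each farthest from the points picked so far."""
    chosen = [start]
    # Distance from every point to its nearest already-chosen point
    min_dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < k:
        nxt = max(range(len(points)), key=lambda i: min_dist[i])
        chosen.append(nxt)
        min_dist = [min(d, math.dist(p, points[nxt]))
                    for d, p in zip(min_dist, points)]
    return chosen

def ball_group(points, centers, radius):
    """For each center index, list the indices of all points within `radius`."""
    return [[i for i, p in enumerate(points)
             if math.dist(p, points[c]) <= radius]
            for c in centers]

votes = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0),  # cluster A
         (2.0, 2.0, 0.0), (2.1, 2.0, 0.0)]                    # cluster B
centers = farthest_point_sampling(votes, k=2)
groups = ball_group(votes, centers, radius=0.2)
print(centers, groups)
```

The two sampled centers land in different clusters, and each group collects only the votes that agree on the same location.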

Proposal and Classify

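The grouped features are fed into a PointNet: one MLP, then max pooling to obtain a feature vector, then another MLP to produce the output. Confusingly, the main text says the first MLP is PointNet-like, while the Appendix says it is an SA structure: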

“The proposal module as mentioned in the main paper is a SA layer followed by another MLP after the max-pooling in each local region. ”

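As I understand it, what distinguishes PointNet is the T-Net placed before the MLP, whereas the SA module applies the MLP directly to relative positions. I find this confusing, and the paper does not explain it clearly.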

The second MLP outputs $5 + 2NH + 4NS + NC$ channels, following the output design of Frustum PointNets. The paper explains:

“The layer’s output has 5+2NH+4NS+NC channels where NH is the number of heading bins (we predict a classification score for each heading bin and a regression offset for each bin–relative to the bin center and normalized by the bin size), NS is the number of size templates (we predict a classification score for each size template and 3 scale regression offsets for height, width and length) and NC is the number of semantic classes. In SUN RGB-D: NH = 12, NS = NC = 10, in ScanNet: NH = 12, NS = NC = 18. In the first 5 channels, the first two are for objectness classification and the rest three are for center regression (relative to the vote cluster center).”
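To make the channel count concrete, here is a small sketch that lays out the fields and sums their widths. The function name `proposal_channels` and the field names are hypothetical; the field order is the one I assume from the description above (objectness, center, heading, size, semantic class).

```python
def proposal_channels(nh, ns, nc):
    """Return the total channel count and the (start, end) slice of each field."""
    layout, start = {}, 0
    for name, width in [("objectness", 2), ("center", 3),
                        ("heading_cls", nh), ("heading_res", nh),
                        ("size_cls", ns), ("size_res", 3 * ns),
                        ("sem_cls", nc)]:
        layout[name] = (start, start + width)
        start += width
    return start, layout

sunrgbd_total, sunrgbd_layout = proposal_channels(nh=12, ns=10, nc=10)
scannet_total, _ = proposal_channels(nh=12, ns=18, nc=18)
print(sunrgbd_total, scannet_total)  # 79 119
```

So the last layer has 79 output channels on SUN RGB-D and 119 on ScanNet.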

Experiments

Implementation details for preparing the input:

“The floor height is estimated as the 1% percentile of all points’ heights. To augment the training data, we randomly sub-sample the points from the scene points on-the-fly.”
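The two preprocessing steps can be sketched as below. This is my own simplified version: `floor_height` uses a nearest-rank percentile instead of an interpolated one, and `random_subsample` falls back to sampling with replacement for small scenes; both helper names and both choices are assumptions.

```python
import random

def floor_height(points, pct=1.0):
    """Nearest-rank percentile of the z values (a simplification)."""
    zs = sorted(p[2] for p in points)
    rank = max(0, min(len(zs) - 1, round(pct / 100.0 * (len(zs) - 1))))
    return zs[rank]

def random_subsample(points, n, rng):
    """Draw n points, with replacement only if the scene has fewer than n."""
    if len(points) >= n:
        return rng.sample(points, n)
    return [rng.choice(points) for _ in range(n)]

rng = random.Random(0)
scene = [(rng.random(), rng.random(), 0.01 * i) for i in range(201)]
floor = floor_height(scene)
subset = random_subsample(scene, 50, rng)
print(floor, len(subset))
```

Because the subset is redrawn on every call, the network sees a slightly different sampling of the same scene each time, which is the augmentation effect described in the quote.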

The experiments demonstrate the effectiveness of the method:

“VoteNet outperforms all previous methods by at least 3.7 and 18.4 mAP increase in SUN RGB-D and ScanNet respectively. Notably,”

Ablation Study

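The experiments confirm that voting helps: compared with BoxNet (which can be thought of as the RPN in PointRCNN), results improve noticeably. Moreover, across categories, the farther the scanned points are from the object center, the larger the gain from voting.

Comparative experiments demonstrate the effectiveness of vote aggregation.

The experiments also show that the network has few parameters and runs fast.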

Appendix

An improvement I find interesting:

“We report two ways of using the proposals: joint and per-class. For the joint proposal we propose K objects’ bounding boxes for all the 10 categories, where we consider each proposal as the semantic class it has the largest confidence in, and use their objectness scores to rank them. For the per-class proposal we duplicate the K proposal 10 times thus have K proposals per class where we use the multiplication of semantic probability for that class and the objectness probability to rank them. The latter way of using proposals gives us a slight improvement on AP and a big boost on AR.”
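The difference between the two ranking schemes is easy to see on a toy example. The sketch below is my own reading of the quoted passage; the dictionary keys `objectness` and `sem_prob` and both function names are hypothetical.

```python
def joint_ranking(proposals):
    """One entry per proposal: its most confident class, ranked by objectness."""
    out = []
    for i, p in enumerate(proposals):
        cls = max(range(len(p["sem_prob"])), key=lambda c: p["sem_prob"][c])
        out.append((p["objectness"], i, cls))
    return sorted(out, reverse=True)

def per_class_ranking(proposals, num_class):
    """For every class, all proposals ranked by sem_prob * objectness."""
    return {c: sorted(((p["sem_prob"][c] * p["objectness"], i)
                       for i, p in enumerate(proposals)), reverse=True)
            for c in range(num_class)}

proposals = [
    {"objectness": 0.9, "sem_prob": [0.6, 0.4]},
    {"objectness": 0.5, "sem_prob": [0.1, 0.9]},
]
joint = joint_ranking(proposals)
per_class = per_class_ranking(proposals, num_class=2)
print(joint)
print(per_class)
```

In the joint scheme proposal 0 only ever counts as class 0. In the per-class scheme it also appears in the class 1 list, which is why recall can go up.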

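Discussion

Is this model one-stage or two-stage?

If one-stage and two-stage are distinguished by whether proposals are refined, then this model predicts boxes only once, at the very end. At the backbone output it predicts offsets for the seeds, which can be read either as offsets for the seeds or as predictions of proposal centers. The model contains no explicit proposal refinement, yet it does run another stage of processing after the backbone output.

Comparison with PointRCNN

PointRCNN can be regarded as a classic two-stage model. By comparison, the entire network in this paper could serve as the RPN of PointRCNN, with PointRCNN's second stage then used for refinement.

But if we do treat this network as a two-stage model, the seed offset stage is effectively generating proposal centers, and the subsequent sampling and grouping amount to an RoI crop.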

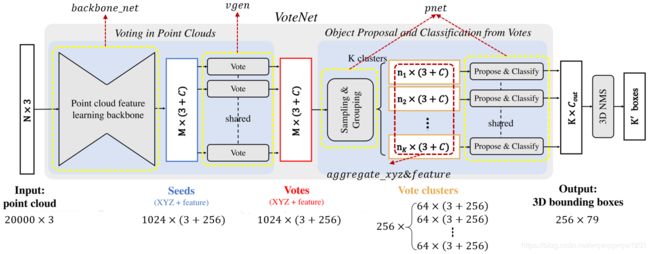

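Code Walkthrough

Code: https://github.com/facebookresearch/votenet

The code is elegantly written. It uses an end_points dictionary to store intermediate variables, which makes it easy to inspect the important ones.

The network is split into the backbone_net, vgen, and pnet modules, shown below: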

```python
# Forward pass of VoteNet
def forward(self, inputs):
    """ Forward pass of the network

    Args:
        inputs: dict
            {point_clouds}

            point_clouds: Variable(torch.cuda.FloatTensor)
                (B, N, 3 + input_channels) tensor
                Point cloud to run predicts on
                Each point in the point-cloud MUST
                be formatted as (x, y, z, features...)
    Returns:
        end_points: dict
    """
    end_points = {}  # stores intermediate variables from the forward pass
    batch_size = inputs['point_clouds'].shape[0]

    end_points = self.backbone_net(inputs['point_clouds'], end_points)

    # --------- HOUGH VOTING ---------
    xyz = end_points['fp2_xyz']
    features = end_points['fp2_features']
    end_points['seed_inds'] = end_points['fp2_inds']
    end_points['seed_xyz'] = xyz  # seeds' xyz
    end_points['seed_features'] = features  # seeds' features

    xyz, features = self.vgen(xyz, features)  # vote
    # L2-normalize each vote feature along the channel dimension
    features_norm = torch.norm(features, p=2, dim=1)
    features = features.div(features_norm.unsqueeze(1))
    end_points['vote_xyz'] = xyz  # votes' xyz
    end_points['vote_features'] = features  # votes' features

    end_points = self.pnet(xyz, features, end_points)  # sampling & grouping, propose & classify

    return end_points
```
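The normalization applied to the vote features before pnet is a plain L2 normalization of each feature vector. A minimal sketch for a single vector, in plain Python; the helper name `l2_normalize` and the small `eps` guard are my additions and are not in the forward pass shown here.

```python
import math

def l2_normalize(feature, eps=1e-12):
    """Scale a feature vector to unit L2 norm (eps guards against a zero vector)."""
    norm = math.sqrt(sum(v * v for v in feature))
    return [v / (norm + eps) for v in feature]

vote_feature = [3.0, 4.0]
unit = l2_normalize(vote_feature)
print(unit)
```

After this step every vote feature has length 1, so only its direction carries information into the aggregation.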

backbone_net

```python
def __init__(self, input_feature_dim=0):
    """
    backbone_net consists of 4 SA layers and 2 FP layers
    """
    super().__init__()

    self.sa1 = PointnetSAModuleVotes(
            npoint=2048,  # sample 2048 points
            radius=0.2,  # each ball has a radius of 0.2 m
            nsample=64,  # sample 64 points inside each ball
            mlp=[input_feature_dim, 64, 64, 128],  # MLP channel sizes
            use_xyz=True,  # use xyz as a feature
            normalize_xyz=True  # when xyz is used as a feature, divide it by radius to normalize
        )

    self.sa2 = PointnetSAModuleVotes(
            npoint=1024,
            radius=0.4,
            nsample=32,
            mlp=[128, 128, 128, 256],
            use_xyz=True,
            normalize_xyz=True
        )

    self.sa3 = PointnetSAModuleVotes(
            npoint=512,
            radius=0.8,
            nsample=16,
            mlp=[256, 128, 128, 256],
            use_xyz=True,
            normalize_xyz=True
        )

    self.sa4 = PointnetSAModuleVotes(
            npoint=256,
            radius=1.2,
            nsample=16,
            mlp=[256, 128, 128, 256],
            use_xyz=True,
            normalize_xyz=True
        )

    self.fp1 = PointnetFPModule(mlp=[256+256,256,256])  # MLP input channels: 256+256=512; output channels: 256, 256
    self.fp2 = PointnetFPModule(mlp=[256+256,256,256])
```

vgen: Voting Module

```python
def forward(self, seed_xyz, seed_features):
    """ Forward pass.

    Arguments:
        seed_xyz: (batch_size, num_seed, 3) Pytorch tensor
        seed_features: (batch_size, feature_dim, num_seed) Pytorch tensor
    Returns:
        vote_xyz: (batch_size, num_seed*vote_factor, 3)
        vote_features: (batch_size, vote_feature_dim, num_seed*vote_factor)
    """
    batch_size = seed_xyz.shape[0]
    num_seed = seed_xyz.shape[1]  # num_seed=1024
    num_vote = num_seed*self.vote_factor  # num_vote=1024
    net = F.relu(self.bn1(self.conv1(seed_features)))
    net = F.relu(self.bn2(self.conv2(net)))
    net = self.conv3(net)  # (batch_size, (3+out_dim)*vote_factor, num_seed), vote_factor=1

    net = net.transpose(2,1).view(batch_size, num_seed, self.vote_factor, 3+self.out_dim)
    offset = net[:,:,:,0:3]
    # Seeds -> Votes
    vote_xyz = seed_xyz.unsqueeze(2) + offset
    vote_xyz = vote_xyz.contiguous().view(batch_size, num_vote, 3)

    residual_features = net[:,:,:,3:]  # (batch_size, num_seed, vote_factor, out_dim)
    vote_features = seed_features.transpose(2,1).unsqueeze(2) + residual_features
    vote_features = vote_features.contiguous().view(batch_size, num_vote, self.out_dim)
    vote_features = vote_features.transpose(2,1).contiguous()

    return vote_xyz, vote_features
```

pnet: Proposal Module

Before the vote features are fed into pnet, they are also normalized; see the first code block.

```python
class ProposalModule(nn.Module):
    def __init__(self, num_class, num_heading_bin, num_size_cluster, mean_size_arr, num_proposal, sampling, seed_feat_dim=256):
        super().__init__()

        self.num_class = num_class
        self.num_heading_bin = num_heading_bin
        self.num_size_cluster = num_size_cluster
        self.mean_size_arr = mean_size_arr
        self.num_proposal = num_proposal
        self.sampling = sampling
        self.seed_feat_dim = seed_feat_dim

        # Vote clustering
        self.vote_aggregation = PointnetSAModuleVotes(
                npoint=self.num_proposal,
                radius=0.3,
                nsample=16,
                mlp=[self.seed_feat_dim, 128, 128, 128],
                use_xyz=True,
                normalize_xyz=True
            )

        # Object proposal/detection
        # Objectness scores (2), center residual (3),
        # heading class+residual (num_heading_bin*2), size class+residual(num_size_cluster*4)
        self.conv1 = torch.nn.Conv1d(128,128,1)
        self.conv2 = torch.nn.Conv1d(128,128,1)
        self.conv3 = torch.nn.Conv1d(128,2+3+num_heading_bin*2+num_size_cluster*4+self.num_class,1)
        self.bn1 = torch.nn.BatchNorm1d(128)
        self.bn2 = torch.nn.BatchNorm1d(128)

    def forward(self, xyz, features, end_points):
        """
        Args:
            xyz: (B,K,3)
            features: (B,C,K)
        Returns:
            scores: (B,num_proposal,2+3+NH*2+NS*4)
        """
        # Farthest point sampling (FPS) on votes
        xyz, features, fps_inds = self.vote_aggregation(xyz, features)
        sample_inds = fps_inds
        end_points['aggregated_vote_xyz'] = xyz  # (batch_size, num_proposal, 3)
        end_points['aggregated_vote_inds'] = sample_inds  # (batch_size, num_proposal,) # should be 0,1,2,...,num_proposal

        # --------- PROPOSAL GENERATION ---------
        net = F.relu(self.bn1(self.conv1(features)))
        net = F.relu(self.bn2(self.conv2(net)))
        net = self.conv3(net)  # (batch_size, 2+3+num_heading_bin*2+num_size_cluster*4, num_proposal)

        end_points = decode_scores(net, end_points, self.num_class, self.num_heading_bin, self.num_size_cluster, self.mean_size_arr)
        return end_points
```

The final variable net is parsed with the decode_scores function:

```python
def decode_scores(net, end_points, num_class, num_heading_bin, num_size_cluster, mean_size_arr):
    net_transposed = net.transpose(2,1)  # (batch_size, 1024, ..)
    batch_size = net_transposed.shape[0]
    num_proposal = net_transposed.shape[1]

    objectness_scores = net_transposed[:,:,0:2]
    end_points['objectness_scores'] = objectness_scores

    base_xyz = end_points['aggregated_vote_xyz']  # (batch_size, num_proposal, 3)
    center = base_xyz + net_transposed[:,:,2:5]  # (batch_size, num_proposal, 3)
    end_points['center'] = center

    heading_scores = net_transposed[:,:,5:5+num_heading_bin]
    heading_residuals_normalized = net_transposed[:,:,5+num_heading_bin:5+num_heading_bin*2]
    end_points['heading_scores'] = heading_scores  # Bxnum_proposalxnum_heading_bin
    end_points['heading_residuals_normalized'] = heading_residuals_normalized  # Bxnum_proposalxnum_heading_bin (should be -1 to 1)
    end_points['heading_residuals'] = heading_residuals_normalized * (np.pi/num_heading_bin)  # Bxnum_proposalxnum_heading_bin

    size_scores = net_transposed[:,:,5+num_heading_bin*2:5+num_heading_bin*2+num_size_cluster]
    size_residuals_normalized = net_transposed[:,:,5+num_heading_bin*2+num_size_cluster:5+num_heading_bin*2+num_size_cluster*4].view([batch_size, num_proposal, num_size_cluster, 3])  # Bxnum_proposalxnum_size_clusterx3
    end_points['size_scores'] = size_scores
    end_points['size_residuals_normalized'] = size_residuals_normalized
    end_points['size_residuals'] = size_residuals_normalized * torch.from_numpy(mean_size_arr.astype(np.float32)).cuda().unsqueeze(0).unsqueeze(0)

    sem_cls_scores = net_transposed[:,:,5+num_heading_bin*2+num_size_cluster*4:]  # Bxnum_proposalx10
    end_points['sem_cls_scores'] = sem_cls_scores
    return end_points
```
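To see how a heading angle comes out of the class scores and normalized residuals, here is a small sketch for a single proposal. It assumes the bins split [0, 2π) evenly with bin centers at multiples of 2π/NH; that layout and the helper name `decode_heading` are my assumptions, while the π/NH residual scale is the one used in decode_scores.

```python
import math

def decode_heading(scores, residuals_normalized):
    """Pick the best-scoring bin and add its de-normalized residual."""
    num_bin = len(scores)
    best = max(range(num_bin), key=lambda b: scores[b])
    bin_center = best * (2 * math.pi / num_bin)   # assumed bin layout
    residual = residuals_normalized[best] * (math.pi / num_bin)
    return best, bin_center + residual

scores = [0.1, 0.2, 3.0, 0.0]        # 4 heading bins for brevity
residuals = [0.0, 0.0, 0.5, 0.0]     # normalized, roughly in [-1, 1]
best_bin, angle = decode_heading(scores, residuals)
print(best_bin, angle)
```

A normalized residual of ±1 moves the angle by half a bin width, so a bin plus its residual can reach any angle inside that bin.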

Loss

Once the outputs are decoded, the loss is computed.

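```python
def compute_loss(vote_loss, objectness_loss, center_loss, heading_cls_loss,
                 heading_reg_loss, size_cls_loss, size_reg_loss, sem_cls_loss):
    # Simplified loss: it mainly consists of vote_loss, objectness_loss, box_loss, and sem_cls_loss
    # box_loss depends on center, heading, and size
    box_loss = center_loss + 0.1*heading_cls_loss + heading_reg_loss + 0.1*size_cls_loss + size_reg_loss
    loss = vote_loss + 0.5*objectness_loss + box_loss + 0.1*sem_cls_loss
    return loss
```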

```python
def compute_vote_loss(end_points):
    """ Compute vote loss: Match predicted votes to GT votes.

    Args:
        end_points: dict (read-only)

    Returns:
        vote_loss: scalar Tensor

    Overall idea:
        If the seed point belongs to an object (votes_label_mask == 1),
        then we require it to vote for the object center.

        Each seed point may vote for multiple translations v1,v2,v3
        A seed point may also be in the boxes of multiple objects:
        o1,o2,o3 with corresponding GT votes c1,c2,c3

        Then the loss for this seed point is:
            min(d(v_i,c_j)) for i=1,2,3 and j=1,2,3
    """

    # Load ground truth votes and assign them to seed points
    batch_size = end_points['seed_xyz'].shape[0]
    num_seed = end_points['seed_xyz'].shape[1]  # B,num_seed,3
    vote_xyz = end_points['vote_xyz']  # B,num_seed*vote_factor,3
    seed_inds = end_points['seed_inds'].long()  # B,num_seed in [0,num_points-1]

    # Get groundtruth votes for the seed points
    # vote_label_mask: Use gather to select B,num_seed from B,num_point
    #   non-object point has no GT vote mask = 0, object point has mask = 1
    # vote_label: Use gather to select B,num_seed,9 from B,num_point,9
    #   with inds in shape B,num_seed,9 and 9 = GT_VOTE_FACTOR * 3
    seed_gt_votes_mask = torch.gather(end_points['vote_label_mask'], 1, seed_inds)
    seed_inds_expand = seed_inds.view(batch_size,num_seed,1).repeat(1,1,3*GT_VOTE_FACTOR)
    seed_gt_votes = torch.gather(end_points['vote_label'], 1, seed_inds_expand)
    seed_gt_votes += end_points['seed_xyz'].repeat(1,1,3)

    # Compute the min of min of distance
    vote_xyz_reshape = vote_xyz.view(batch_size*num_seed, -1, 3)  # from B,num_seed*vote_factor,3 to B*num_seed,vote_factor,3
    seed_gt_votes_reshape = seed_gt_votes.view(batch_size*num_seed, GT_VOTE_FACTOR, 3)  # from B,num_seed,3*GT_VOTE_FACTOR to B*num_seed,GT_VOTE_FACTOR,3
    # A predicted vote to no where is not penalized as long as there is a good vote near the GT vote.
    dist1, _, dist2, _ = nn_distance(vote_xyz_reshape, seed_gt_votes_reshape, l1=True)
    votes_dist, _ = torch.min(dist2, dim=1)  # (B*num_seed,vote_factor) to (B*num_seed,)
    votes_dist = votes_dist.view(batch_size, num_seed)
    vote_loss = torch.sum(votes_dist*seed_gt_votes_mask.float())/(torch.sum(seed_gt_votes_mask.float())+1e-6)
    return vote_loss
```
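Stripped of the tensor bookkeeping, the vote loss is a masked mean of each seed's smallest L1 distance to its candidate GT votes. A plain-Python sketch, assuming one predicted vote per seed (vote_factor = 1); the function name `vote_loss_sketch` and the toy data are hypothetical.

```python
def vote_loss_sketch(votes, gt_votes, mask):
    """Masked mean of each seed's smallest L1 distance to its GT votes."""
    total = 0.0
    for vote, candidates, m in zip(votes, gt_votes, mask):
        dists = [sum(abs(a - b) for a, b in zip(vote, c)) for c in candidates]
        total += m * min(dists)   # background seeds (m = 0) contribute nothing
    return total / (sum(mask) + 1e-6)

votes = [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]
gt_votes = [[(1.0, 1.0, 1.5), (5.0, 5.0, 5.0)],   # seed 0 lies in two GT boxes
            [(9.0, 9.0, 9.0), (9.0, 9.0, 9.0)]]   # seed 1 is background
mask = [1, 0]
loss = vote_loss_sketch(votes, gt_votes, mask)
print(loss)
```

Seed 0 is only charged for the nearer of its two candidate centers, and seed 1 is ignored because its mask is 0.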

```python
def compute_objectness_loss(end_points):
    """ Compute objectness loss for the proposals.

    Args:
        end_points: dict (read-only)

    Returns:
        objectness_loss: scalar Tensor
        objectness_label: (batch_size, num_seed) Tensor with value 0 or 1
        objectness_mask: (batch_size, num_seed) Tensor with value 0 or 1
        object_assignment: (batch_size, num_seed) Tensor with long int
            within [0,num_gt_object-1]
    """
    # Associate proposal and GT objects by point-to-point distances
    aggregated_vote_xyz = end_points['aggregated_vote_xyz']
    gt_center = end_points['center_label'][:,:,0:3]
    B = gt_center.shape[0]
    K = aggregated_vote_xyz.shape[1]
    K2 = gt_center.shape[1]
    dist1, ind1, dist2, _ = nn_distance(aggregated_vote_xyz, gt_center)  # dist1: BxK, dist2: BxK2

    # Generate objectness label and mask
    # objectness_label: 1 if pred object center is within NEAR_THRESHOLD of any GT object
    # objectness_mask: 0 if pred object center is in gray zone (DONOTCARE), 1 otherwise
    euclidean_dist1 = torch.sqrt(dist1+1e-6)
    objectness_label = torch.zeros((B,K), dtype=torch.long).cuda()
    objectness_mask = torch.zeros((B,K)).cuda()
    objectness_label[euclidean_dist1<NEAR_THRESHOLD] = 1
    objectness_mask[euclidean_dist1<NEAR_THRESHOLD] = 1
    objectness_mask[euclidean_dist1>FAR_THRESHOLD] = 1

    # Compute objectness loss
    objectness_scores = end_points['objectness_scores']
    criterion = nn.CrossEntropyLoss(torch.Tensor(OBJECTNESS_CLS_WEIGHTS).cuda(), reduction='none')
    objectness_loss = criterion(objectness_scores.transpose(2,1), objectness_label)
    objectness_loss = torch.sum(objectness_loss * objectness_mask)/(torch.sum(objectness_mask)+1e-6)

    # Set assignment
    object_assignment = ind1  # (B,K) with values in 0,1,...,K2-1

    return objectness_loss, objectness_label, objectness_mask, object_assignment
```
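The label and mask logic is easier to see on three hand-picked proposals. In this sketch the default values `near=0.3` and `far=0.6` are placeholders I chose for illustration, standing in for NEAR_THRESHOLD and FAR_THRESHOLD, and the function name `objectness_targets` is hypothetical.

```python
import math

def objectness_targets(centers, gt_centers, near=0.3, far=0.6):
    """Label each proposal by its distance to the nearest GT center."""
    labels, masks, assignment = [], [], []
    for c in centers:
        dists = [math.dist(c, g) for g in gt_centers]
        j = min(range(len(dists)), key=lambda k: dists[k])
        labels.append(1 if dists[j] < near else 0)                     # positive if close
        masks.append(1 if dists[j] < near or dists[j] > far else 0)    # 0 = gray zone
        assignment.append(j)                                           # nearest GT index
    return labels, masks, assignment

centers = [(0.1, 0.0, 0.0), (0.45, 0.0, 0.0), (3.0, 0.0, 0.0)]
gt_centers = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
labels, masks, assignment = objectness_targets(centers, gt_centers)
print(labels, masks, assignment)
```

The first proposal is a positive, the third a negative, and the second falls between the two thresholds, so it is masked out and contributes nothing to the objectness loss.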

```python
def compute_box_and_sem_cls_loss(end_points, config):
    """ Compute 3D bounding box and semantic classification loss.

    Args:
        end_points: dict (read-only)

    Returns:
        center_loss
        heading_cls_loss
        heading_reg_loss
        size_cls_loss
        size_reg_loss
        sem_cls_loss
    """

    num_heading_bin = config.num_heading_bin
    num_size_cluster = config.num_size_cluster
    num_class = config.num_class
    mean_size_arr = config.mean_size_arr

    object_assignment = end_points['object_assignment']
    batch_size = object_assignment.shape[0]

    # Compute center loss
    pred_center = end_points['center']
    gt_center = end_points['center_label'][:,:,0:3]
    dist1, ind1, dist2, _ = nn_distance(pred_center, gt_center)  # dist1: BxK, dist2: BxK2
    box_label_mask = end_points['box_label_mask']
    objectness_label = end_points['objectness_label'].float()
    centroid_reg_loss1 = \
        torch.sum(dist1*objectness_label)/(torch.sum(objectness_label)+1e-6)
    centroid_reg_loss2 = \
        torch.sum(dist2*box_label_mask)/(torch.sum(box_label_mask)+1e-6)
    center_loss = centroid_reg_loss1 + centroid_reg_loss2

    # Compute heading loss
    heading_class_label = torch.gather(end_points['heading_class_label'], 1, object_assignment)  # select (B,K) from (B,K2)
    criterion_heading_class = nn.CrossEntropyLoss(reduction='none')
    heading_class_loss = criterion_heading_class(end_points['heading_scores'].transpose(2,1), heading_class_label)  # (B,K)
    heading_class_loss = torch.sum(heading_class_loss * objectness_label)/(torch.sum(objectness_label)+1e-6)

    heading_residual_label = torch.gather(end_points['heading_residual_label'], 1, object_assignment)  # select (B,K) from (B,K2)
    heading_residual_normalized_label = heading_residual_label / (np.pi/num_heading_bin)

    # Ref: https://discuss.pytorch.org/t/convert-int-into-one-hot-format/507/3
    heading_label_one_hot = torch.cuda.FloatTensor(batch_size, heading_class_label.shape[1], num_heading_bin).zero_()
    heading_label_one_hot.scatter_(2, heading_class_label.unsqueeze(-1), 1)  # src==1 so it's *one-hot* (B,K,num_heading_bin)
    heading_residual_normalized_loss = huber_loss(torch.sum(end_points['heading_residuals_normalized']*heading_label_one_hot, -1) - heading_residual_normalized_label, delta=1.0)  # (B,K)
    heading_residual_normalized_loss = torch.sum(heading_residual_normalized_loss*objectness_label)/(torch.sum(objectness_label)+1e-6)

    # Compute size loss
    size_class_label = torch.gather(end_points['size_class_label'], 1, object_assignment)  # select (B,K) from (B,K2)
    criterion_size_class = nn.CrossEntropyLoss(reduction='none')
    size_class_loss = criterion_size_class(end_points['size_scores'].transpose(2,1), size_class_label)  # (B,K)
    size_class_loss = torch.sum(size_class_loss * objectness_label)/(torch.sum(objectness_label)+1e-6)

    size_residual_label = torch.gather(end_points['size_residual_label'], 1, object_assignment.unsqueeze(-1).repeat(1,1,3))  # select (B,K,3) from (B,K2,3)
    size_label_one_hot = torch.cuda.FloatTensor(batch_size, size_class_label.shape[1], num_size_cluster).zero_()
    size_label_one_hot.scatter_(2, size_class_label.unsqueeze(-1), 1)  # src==1 so it's *one-hot* (B,K,num_size_cluster)
    size_label_one_hot_tiled = size_label_one_hot.unsqueeze(-1).repeat(1,1,1,3)  # (B,K,num_size_cluster,3)
    predicted_size_residual_normalized = torch.sum(end_points['size_residuals_normalized']*size_label_one_hot_tiled, 2)  # (B,K,3)

    mean_size_arr_expanded = torch.from_numpy(mean_size_arr.astype(np.float32)).cuda().unsqueeze(0).unsqueeze(0)  # (1,1,num_size_cluster,3)
    mean_size_label = torch.sum(size_label_one_hot_tiled * mean_size_arr_expanded, 2)  # (B,K,3)
    size_residual_label_normalized = size_residual_label / mean_size_label  # (B,K,3)
    size_residual_normalized_loss = torch.mean(huber_loss(predicted_size_residual_normalized - size_residual_label_normalized, delta=1.0), -1)  # (B,K,3) -> (B,K)
    size_residual_normalized_loss = torch.sum(size_residual_normalized_loss*objectness_label)/(torch.sum(objectness_label)+1e-6)

    # 3.4 Semantic cls loss
    sem_cls_label = torch.gather(end_points['sem_cls_label'], 1, object_assignment)  # select (B,K) from (B,K2)
    criterion_sem_cls = nn.CrossEntropyLoss(reduction='none')
    sem_cls_loss = criterion_sem_cls(end_points['sem_cls_scores'].transpose(2,1), sem_cls_label)  # (B,K)
    sem_cls_loss = torch.sum(sem_cls_loss * objectness_label)/(torch.sum(objectness_label)+1e-6)

    return center_loss, heading_class_loss, heading_residual_normalized_loss, size_class_loss, size_residual_normalized_loss, sem_cls_loss
```
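Two patterns recur throughout the box loss: a Huber loss on the residuals, and a mean taken only over proposals whose objectness label is 1. Both are sketched below for scalars and plain lists. The Huber form shown is the standard one, which I assume is what the repo's huber_loss computes; the names `huber` and `masked_mean` are my own.

```python
def huber(error, delta=1.0):
    """Quadratic for |error| <= delta, linear beyond it."""
    abs_error = abs(error)
    quadratic = min(abs_error, delta)
    linear = abs_error - quadratic
    return 0.5 * quadratic ** 2 + delta * linear

def masked_mean(values, mask, eps=1e-6):
    """Average `values` over entries with mask 1 (eps avoids division by zero)."""
    return sum(v * m for v, m in zip(values, mask)) / (sum(mask) + eps)

residual_errors = [0.5, 3.0, -0.2]
objectness_label = [1, 1, 0]          # the third proposal is not a positive
per_proposal = [huber(e) for e in residual_errors]
loss = masked_mean(per_proposal, objectness_label)
print(per_proposal, loss)
```

Small errors are penalized quadratically and large ones only linearly, so a single badly regressed box does not dominate the loss, and proposals that are not positives are excluded from the average entirely.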