阿里云三台节点,搭建完全分布式hadoop集群,超简单

完全分布式的安装

1、集群规划

角色分配

| NODE-47 | NODE-101 | NODE-106 | |

| HDFS | Namenode Datanode |

SecondaryNamenode Datanode |

Datanode |

| YARN | Nodemanager | Nodemanager | RecourceManager Nodemanager |

| Histrory | HistroryServer |

2、阿里云环境

CentOS 7.4 hadoop 2.8.3 jdk1.8 (centos 版本无影响)

2.1 . 关闭防火墙 (3台) (root)

systemctl stop firewalld.service #停止firewall

systemctl disable firewalld.service #禁止firewall开机启动

firewall-cmd --state #查看默认防火墙状态(关闭后显示notrunning,开启后显示running)

2.2 . 配置主机映射 【三台都需要需要添加】

# vi /etc/hosts (node-47为例)

106.xx.xx.xxx node-106 ( 外网ip)

172.xxx.xx.xx node-47 ( 内网ip)

101.xx.xx.1xx node-101 (外网ip)

注意:这里有坑,就是每台机器配置hosts的时候,自己的ip一定要设置为内网ip,其他节点ip设置为外网ip。否则hdfs或者yarn启动的时候都会报端口被占用的异常,不信可以试试。

2.3. 安装jdk

先卸载自带的jdk

# rpm -qa | grep jdk

# rpm -e --nodeps tzdata-java-2012j-1.el6.noarch

# rpm -e --nodeps java-1.6.0-openjdk-1.6.0.0-1.50.1.11.5.el6_3.x86_64

# rpm -e --nodeps java-1.7.0-openjdk-1.7.0.9-2.3.4.1.el6_3.x86_64

下载jdk

wget http://download.oracle.com/otn-pub/java/jdk/8u181-b13/96a7b8442fe848ef90c96a2fad6ed6d1/jdk-8u181-linux-x64.tar.gz

2.4 配置Java环境变量

# vi /etc/profile

#JAVA_HOME

export JAVA_HOME=/usr/jdk

export PATH=$JAVA_HOME/bin:$PATH

生效配置

source /etc/profile

检查Java环境变量

[root@47 ~]# java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

3、配置SSH免密钥登录

使用ssh登录的时候不需要用户名密码

对node-47执行

$ ssh-keygen 回车,生产当前主机的公钥和私钥

//分发密钥(要向3台都发送)

$ ssh-copy-id node-47

$ ssh-copy-id node-101

$ ssh-copy-id node-106

然后就可以测试一下,node-47 ssh 其他机器是不是可以免密码登陆了,如果ok,如下

[root@47 .ssh]# ssh node-101

Last login: Thu Sep 13 14:32:22 2018 from 180.169.129.212

Welcome to Alibaba Cloud Elastic Compute Service !

那接下来,操作另外两台机器对其他机器的免密码登陆。

$ ssh-keygen

$ ssh-copy-id node-47

$ ssh-copy-id node-101

$ ssh-copy-id node-106

分发完成之后会在用户主目录下.ssh目录生成以下文件

$ ls .ssh/

authorized_keys id_rsa id_rsa.pub known_hosts

测试失败,需要先删除.ssh目录下的所有文件,重做一遍

4、安装Hadoop

1. 下载hadoop

wget http://archive.apache.org/dist/hadoop/common/hadoop-2.8.3/hadoop-2.8.3.tar.gz

2. 删除${HADOOP_HOME}/share/doc

$ rm -rf doc/

3. 配置java环境支持在${HADOOP_HOME}/etc/hadoop

在hadoop-env.sh mapred-env.sh yarn-env.sh中配置

export JAVA_HOME=/usr/jdk

4.=======core-site.xml===

=============hdfs-site.xml==========

=================mapred-site.xml=======

================yarn-site.xml======

===============================

5. 配置slaves

node-47

node-101

node-106

5、分发hadoop(已经配置好的)目录到其他两台服务器上

scp -r /opt/modules/hadoop-2.8.3 node-101:/opt/modules/

scp -r /opt/modules/hadoop-2.8.3 node-106:/opt/modules/

6、格式化Namenode

先配置hadoop环境变量

export HADOOP_HOME=/opt/modules/hadoop-2.8.3

export PATH=$HADOOP_HOME/bin:$PATH

在node-47上的${HADOOP_HOME}/bin

$ bin/hdfs namenode -format

【注意】

1.先将node-47的hadoop配置目录分发到node-101和node-106

2.保证3台上的配置内容一模一样

3.先确保将3台之前残留的data 和 logs删除掉(如果没有data目录,需要先创建data目录)

4.最后格式化

7、启动进程

在node-47上使用如下命令启动HDFS

$ sbin/start-dfs.sh

在node-106上使用如下命令启动YARN

$ sbin/start-yarn.sh

停止进程

在node-47上使用如下命令停止HDFS

$ sbin/stop-dfs.sh

在node-106上使用如下命令停止YARN

$ sbin/stop-yarn.sh

【注意】

修改任何配置文件,请先停止所有进程,然后重新启动

8、检查启动是否正常

3台上jps查看进程,参考之前的集群规划

node-47:

15328 SecondaryNameNode

15411 NodeManager

15610 Jps

15228 DataNode

node-101:

15328 SecondaryNameNode

15411 NodeManager

15620 Jps

15228 DataNode

PC3

17170 DataNode

17298 ResourceManager

17401 NodeManager

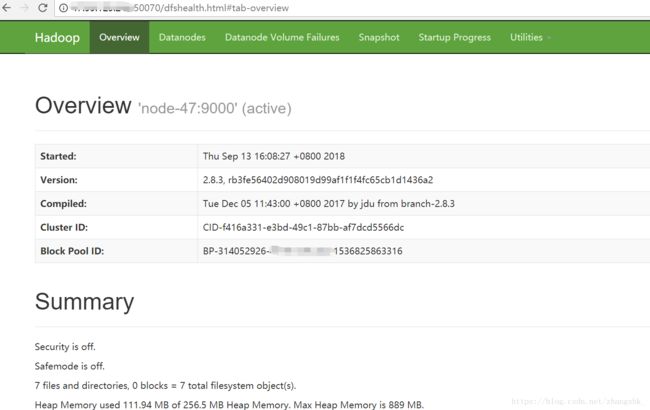

验证namenode的web访问是否正常

namenode的web访问主机名 (注意如果访问不了,应该是端口没有打开,可以配置安全组,将50070端口打开)

此外可以看到三个节点连接正常:

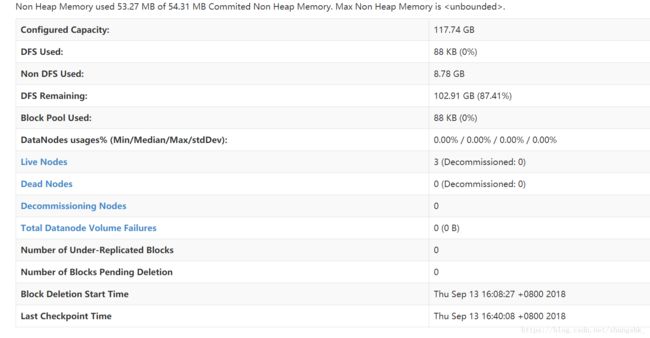

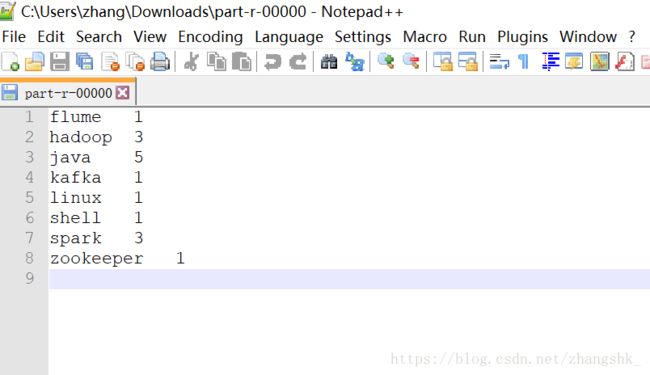

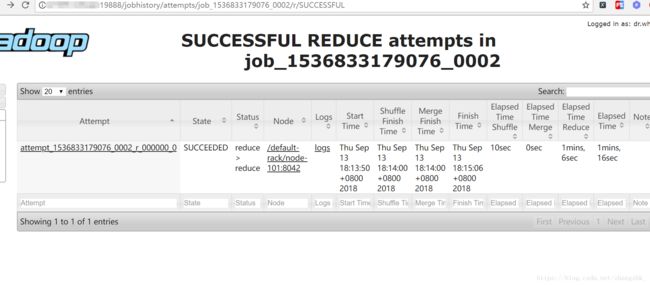

9.wordcount验证

下面,通过hadoop自带的example ,入门的wordcount,测试一下MR, 同时看一下日志聚集功能是否正常。

hdfs dfs -put wc.txt /test(put的时候遇到了个问题,https://blog.csdn.net/zhangshk_/article/details/82692628)

hadoop jar /opt/modules/hadoop-2.8.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.3.jar wordcount /test/wc.txt /output/wc

发现可以正常运行:

[root@47 datasource]# hadoop jar /opt/modules/hadoop-2.8.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.3.jar wordcount /test/wc.txt /output/wc

18/09/13 18:12:49 INFO client.RMProxy: Connecting to ResourceManager at node-106/106.15.182.83:8032

18/09/13 18:12:51 INFO input.FileInputFormat: Total input files to process : 1

18/09/13 18:12:52 INFO mapreduce.JobSubmitter: number of splits:1

18/09/13 18:12:54 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1536833179076_0002

18/09/13 18:12:56 INFO impl.YarnClientImpl: Submitted application application_1536833179076_0002

18/09/13 18:12:56 INFO mapreduce.Job: The url to track the job: http://node-106:8088/proxy/application_1536833179076_0002/

18/09/13 18:12:56 INFO mapreduce.Job: Running job: job_1536833179076_0002

18/09/13 18:13:13 INFO mapreduce.Job: Job job_1536833179076_0002 running in uber mode : false

18/09/13 18:13:13 INFO mapreduce.Job: map 0% reduce 0%

18/09/13 18:13:49 INFO mapreduce.Job: map 100% reduce 0%

18/09/13 18:14:13 INFO mapreduce.Job: map 100% reduce 100%

18/09/13 18:18:55 INFO mapreduce.Job: Job job_1536833179076_0002 completed successfully

18/09/13 18:18:56 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=106

FILE: Number of bytes written=315145

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=194

HDFS: Number of bytes written=68

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Rack-local map tasks=1

Total time spent by all maps in occupied slots (ms)=32367

Total time spent by all reduces in occupied slots (ms)=76224

Total time spent by all map tasks (ms)=32367

Total time spent by all reduce tasks (ms)=76224

Total vcore-milliseconds taken by all map tasks=32367

Total vcore-milliseconds taken by all reduce tasks=76224

Total megabyte-milliseconds taken by all map tasks=33143808

Total megabyte-milliseconds taken by all reduce tasks=78053376

Map-Reduce Framework

Map input records=7

Map output records=16

Map output bytes=162

Map output materialized bytes=106

Input split bytes=96

Combine input records=16

Combine output records=8

Reduce input groups=8

Reduce shuffle bytes=106

Reduce input records=8

Reduce output records=8

Spilled Records=16

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=275

CPU time spent (ms)=1360

Physical memory (bytes) snapshot=387411968

Virtual memory (bytes) snapshot=4232626176

Total committed heap usage (bytes)=226557952

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=98

File Output Format Counters

Bytes Written=68

日志聚集也正常: