FCN Tensorflow源码阅读注释和总结

项目地址:https://github.com/shekkizh/FCN.tensorflow

为了直观的从头到尾捋一遍代码和tensorflow语法,所以把 tensorflowUtils.py 文件中的大多和tensorflow相关的辅助函数在 FCN.py 中重新实现了一遍。

FCN 优点:

- 可以输入任意大小的图像;

- 全卷积代替全连接,减少参数数目、运算量;

FCN缺点:

- 结果还是不够精细;

- 对各个像素进行分类,没有充分考虑像素与像素之间的关系,缺乏空间一致性;

为方便理解,假定输入的单张图像尺寸为 224 x 224 x3;

预定义:

FLAGS = tf.flags.FLAGS

# 名称, 值, 说明

tf.flags.DEFINE_integer('batch_size', '2', 'batch size for training')

tf.flags.DEFINE_string('logs_dir', 'log/', 'path to logs directory')

tf.flags.DEFINE_string('data_dir', './datasets/MIT_SceneParsing/', 'path to datasets')

tf.flags.DEFINE_float('learning_rate', '1e-4', 'learning rate for Adam Optimizer')

tf.flags.DEFINE_string('model_dir', 'datasets/', 'path to vgg model mat')

tf.flags.DEFINE_bool('debug', 'False', 'Debug mode: True / False')

tf.flags.DEFINE_string('mode', 'train', 'Mode: train / visualize / test')一些参数设置 :

# vgg19 模型文件地址(如果未下载)

MODEL_URL = 'http://www.vlfeat.org/matconvnet/models/beta16/imagenet-vgg-verydeep-19.mat'

# 最大迭代次数 10^5+1

MAX_ITERATION = int(1e5 + 1)

# 分割类别 150 + 1(背景)

NUM_OF_CLASSES = 151

# 图像尺寸 224 x 224

IMAGE_SIZE = 224vgg mat文件转换为需要的格式:

def vgg_net(weights, image):

layers = (

'conv1_1', 'relu1_1', # layer 1

'conv1_2', 'relu1_2', 'pool1', # layer 2

'conv2_1', 'relu2_1', # layer 3

'conv2_2', 'relu2_2', 'pool2', # layer 4

'conv3_1', 'relu3_1', # layer 5

'conv3_2', 'relu3_2', # layer 6

'conv3_3', 'relu3_3', # layer 7

'conv3_4', 'relu3_4', 'pool3', # layer 8

'conv4_1', 'relu4_1', # layer 9

'conv4_2', 'relu4_2', # layer 10

'conv4_3', 'relu4_3', # layer 11

'conv4_4', 'relu4_4', 'pool4', # layer 12

'conv5_1', 'relu5_1', # layer 13

'conv5_2', 'relu5_2', # layer 14

'conv5_3', 'relu5_3', # layer 15

'conv5_4', 'relu5_4' # layer 16

)

net = {}

current = image

for i, name in enumerate(layers):

kind = name[:4]

# 卷积层

if kind == 'conv':

# vgg mat文件本质为一个字典,详情定义见下一篇博客

kernels, bias = weights[i][0][0][0][0]

# matconvnet: weights 格式 [width, height, in_channels, out_channels]

# tensorflow: weights 格式 [height, width, in_channels, out_channels]

init_kernels = tf.constant_initializer(np.transpose(kernels, (1, 0, 2, 3)), dtype=tf.float32)

kernels = tf.get_variable(name=name+'_w', initializer=init_kernels, shape=kernels.shape)

init_bias = tf.constant_initializer(bias.reshape(-1), dtype=tf.float32)

bias = tf.get_variable(name=name+'_b', initializer=init_bias, shape=bias.shape)

conv = tf.nn.conv2d(current, kernels, strides=[1, 1, 1, 1], padding='SAME')

current = tf.nn.bias_add(conv, bias)

# relu

elif kind == 'relu':

current = tf.nn.relu(current, name=name)

# 池化层(均值池化)

elif kind == 'pool':

current = tf.nn.avg_pool(current, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

net[name] = current

# 返回重新构建的网络权重

return net推断(预测):

# inference: prediction

# 参数为输入的 单张图片 和 dropout 保留比例

def inference(image, keep_prob):

# image value should be in range 0-255

print("setting up vgg initialized conv layers ...")

# 获取模型文件,函数实现见 tensorflowUtils.py 文件

model_data = utils.get_model_data(FLAGS.model_dir, MODEL_URL)

# 模型文件中存储的均值图像集

mean = model_data['normalization'][0][0][0]

# 图像的均值,(在 h, w 两个维度取均值)

mean_pixel = np.mean(mean, axis=(0, 1))

# 降维

weights = np.squeeze(model_data['layers'])

# 0均值化,反向传播中权重参数的收敛

processed_image = image - mean_pixel

# 指明作用域,便于同一作用域变量共享和防止不同作用域变量冲突

with tf.variable_scope('inference'):

image_net = vgg_net(weights, processed_image)

# 使用vgg19网络的最后一层(即舍弃最后的全连接层)

conv_final_layer = image_net['conv5_3']

pool5 = tf.nn.max_pool(conv_final_layer, ksize=[1, 2, 2, 1],

strides=[1, 2, 2, 1], padding='SAME')

# 第6个卷积部分,输入512个7x7的卷积核(获得更大感受野),输出维度4096

# 流程:随机初始化weight, 0初始化bias,调用tf.get_variable, ->

# -> 卷积操作,添加bias,relu激活函数,dropout防止过拟合

W6_init = tf.truncated_normal([7, 7, 512, 4096], stddev=0.02)

W6 = tf.get_variable('W6', initializer=W6_init)

b6_init = tf.constant(0.0, shape=[4096])

b6 = tf.get_variable('b6', initializer=b6_init)

convolution6 = tf.nn.conv2d(pool5, W6, strides=[1, 1, 1, 1], padding='SAME')

conv6 = tf.nn.bias_add(convolution6, b6)

relu6 = tf.nn.relu(conv6, name='relu6')

relu_dropout6 = tf.nn.dropout(relu6, keep_prob=keep_prob)

# 第7个卷积部分,输入4096个1x1卷积核,输出维度4096

W7_init = tf.truncated_normal([1, 1, 4096, 4096], stddev=0.02)

W7 = tf.get_variable('W7', initializer=W7_init)

b7_init = tf.constant(0.0, shape=[4096])

b7 = tf.get_variable('b7', initializer=b7_init)

convolution7 = tf.nn.conv2d(relu_dropout6, W7,

strides=[1, 1, 1, 1], padding='SAME')

conv7 = tf.nn.bias_and(convolution7, b7)

relu7 = tf.nn.relu(conv7, name='relu7')

relu_dropout7 = tf.nn.dropout(relu7, keep_prob=keep_prob)

# 第8个卷积部分, 输入4096个1x1的卷积核,输出 NUM_OF_CLASSES,即要预测的类别数

# 没有 relu 和 dropout

W8_init = tf.truncated_normal([1, 1, 4096, NUM_OF_CLASSES], stddev=0.02)

W8 = tf.get_variable('W8', initializer=W8_init)

b8_init = tf.constant(0.0, shape=[NUM_OF_CLASSES])

b8 = tf.get_variable('b7', initializer=b8_init)

convolution8 = tf.nn.conv2d(relu_dropout7, W8,

strides=[1, 1, 1, 1], padding='SAME')

conv8 = tf.nn.bias_and(convolution8, b8)

'''

pool1 比原图缩小2倍

pool2 比原图缩小4倍

pool3 比原图缩小8倍

pool4 比原图缩小16倍

pool5 比原图缩小32倍

'''

# upsampling 1 conv8 <==> pool4

# 上采样,转置卷积,conv8 和 pool4 特征融合

# pool4 形状: 14 x 14 x 512

deconv_shape1 = image_net['pool4'].get_shape()

# W_t1 形状:4 x 4 x 512 x 151

W_t1_init = tf.truncated_normal([4, 4, deconv_shape1[3].value, NUM_OF_CLASSES], stddev=0.02)

W_t1 = tf.get_variable(name='W_t1', initializer=W_t1_init)

b_t1_init = tf.constant(0.0, shape=[deconv_shape1[3].value])

b_t1 = tf.get_variable(name='b_t1', initializer=b_t1_init)

# 如果conv_t1_output_shape为空,则可以由conv8推导出形状(源代码中有)

conv_t1_output_shape = tf.shape(image_net['pool4'])

convolution_t1 = tf.nn.conv2d_transpose(conv8, W_t1, conv_t1_output_shape,

strides=[1, 2, 2, 1], padding='SAME')

conv_t1 = tf.nn.bias_and(convolution_t1, b_t1)

# 反卷积后的 conv_t1 和 pool4 融合 (相加)

fuse_1 = tf.add(conv_t1, image_net['pool4'], name='fuse_1')

# upsampling 2 fuse_1 <==> pool3, fuse_1 和 pool3 特征融合

# pool3 形状:28 x 28 x 512

deconv_shape2 = image_net['pool3'].get_shape()

# W_t2 形状:4 x 4 x 512 x 512

W_t2_init = tf.truncated_normal([4, 4, deconv_shape2[3].value, deconv_shape1[3].value], stddev=0.02)

W_t2 = tf.get_variable(name='W_t2', initializer=W_t2_init)

b_t2_init = tf.constant(0.0, shape=[deconv_shape2[3].value])

b_t2 = tf.get_variable(name='b_t2', initializer=b_t2_init)

conv_t2_output_shape = tf.shape(image_net['pool3'])

convolution_t2 = tf.nn.conv2d_transpose(fuse_1, W_t2, conv_t2_output_shape,

strides=[1, 2, 2, 1], padding='SAME')

conv_t2 = tf.nn.bias_and(convolution_t2, b_t2)

fuse_2 = tf.add(conv_t2, image_net['pool3'], name='fuse_2')

# upsampling 3: fuse_2 ==> image, fuse_2 上采样后大小为原图大小

# image shape: 224 x 224 x 3

shape = tf.shape(image)

# tf.stack 拼接张量,axis默认为0

# deconv_shape3: 224 x 224 x 3 x 151

deconv_shape3 = tf.stack([shape[0], shape[1], shape[2], NUM_OF_CLASSES])

W_t3_init = tf.truncated_normal([16, 16, NUM_OF_CLASSES, deconv_shape2[3].value], stddev=0.02)

W_t3 = tf.get_variable(name='W_t3', initializer=W_t3_init)

b_t3_init = tf.constant(0.0, shape=[deconv_shape2[3].value])

b_t3 = tf.get_variable(name='b_t3', initializer=b_t3_init)

conv_t3_output_shape = tf.shape(deconv_shape3)

convolution_t3 = tf.nn.conv2d_transpose(fuse_2, W_t3, conv_t3_output_shape,

strides=[1, 8, 8, 1], padding='SAME')

conv_t3 = tf.nn.bias_add(convolution_t3, b_t3)

# prediciton,返回151个类别中预测值最大的类别的index(即第几类)

annotation_pred = tf.argmax(conv_t3, dimension=3, name='prediction')

# tf.expand_dims 扩展维度为: h, w, NUM_OF_CLASSES, 1

return tf.expand_dims(annotation_pred, dim=3), conv_t3

训练阶段 train:

def train(loss_val, var_list):

# Adam优化器

optimizer = tf.train.AdamOptimizer(FLAGS.learning_rate)

# 梯度定义

grads = optimizer.compute_gradients(loss_val, var_list=var_list)

return optimizer.apply_gradients(grads)

主函数 main:

def main():

# dropout 占位符

keep_probability = tf.placeholder(tf.float32, name="keep_probabilty")

# 输入图像, None 表示样本数依据输入自动定义

image = tf.placeholder(tf.float32, shape=[None, IMAGE_SIZE, IMAGE_SIZE, 3], name="input_image")

# 图像对应的标签

annotation = tf.placeholder(tf.int32, shape=[None, IMAGE_SIZE, IMAGE_SIZE, 1], name="annotation")

# 预测图像的标签 和 最后一层网络的输出logits(非归一化对数概率,即未归一化的标签)

pred_annotation, logits = inference(image, keep_probability)

# 可视化: 原图, 真实标签, 预测标签

tf.summary.image("input_image", image, max_outputs=2)

tf.summary.image("ground_truth", tf.cast(annotation, tf.uint8), max_outputs=2)

tf.summary.image("pred_annotation", tf.cast(pred_annotation, tf.uint8), max_outputs=2)

# 定义损失函数,传入的logits为神经网络输出层的输出,shape为[batch_size,NUM_OF_CLASSES]

# 传入的label为一个一维的vector,长度等于batch_size,每一个值的取值区间必须是[0,NUM_OF_CLASSES)

# 先计算logits的softmax值,再计算softmax与label的cross_entropy

loss = tf.reduce_mean((tf.nn.sparse_softmax_cross_entropy_with_logits(

logits=logits,

labels=tf.squeeze(annotation, squeeze_dims=[3]),

name="entropy")))

# 可视化 loss

loss_summary = tf.summary.scalar("entropy", loss)

# 返回需要训练的变量列表

trainable_var = tf.trainable_variables()

# 调用之前定义的优化器函数然后可视化

train_op = train(loss, trainable_var)

# 定义合并变量操作,一次性生成所有摘要数据

summary_op = tf.summary.merge_all()

print("Setting up image reader...")

# 读取训练集、验证集

train_records, valid_records = scene_parsing.read_dataset(FLAGS.data_dir)

print("Setting up dataset reader")

image_options = {'resize': True, 'resize_size': IMAGE_SIZE}

# 读取数据集并按要求转换形状格式

if FLAGS.mode == 'train':

train_dataset_reader = dataset.BatchDatset(train_records, image_options)

validation_dataset_reader = dataset.BatchDatset(valid_records, image_options)

sess = tf.Session()

print("Setting up Saver...")

saver = tf.train.Saver()

# 在 FLAGS.logs_dir 内分别创建 'train' 和 'validation' 两个文件夹

# 写入logs为将来可视化做准备

train_writer = tf.summary.FileWriter(FLAGS.logs_dir + '/train', sess.graph)

validation_writer = tf.summary.FileWriter(FLAGS.logs_dir + '/validation')

sess.run(tf.global_variables_initializer())

# 加载之前的checkpoint(检查点日志)检查点保存在logs文件里

ckpt = tf.train.get_checkpoint_state(FLAGS.logs_dir)

if ckpt and ckpt.model_checkpoint_path:

saver.restore(sess, ckpt.model_checkpoint_path)

print("Model restored...")

if FLAGS.mode == "train":

for itr in range(MAX_ITERATION):

# 按 batch_size 读取图片和标签

train_images, train_annotations = train_dataset_reader.next_batch(FLAGS.batch_size)

feed_dict = {image: train_images, annotation: train_annotations, keep_probability: 0.85}

sess.run(train_op, feed_dict=feed_dict)

# 打印模型训练过程训练集损失每10步打印一次并可视化

if itr % 10 == 0:

train_loss, summary_str = sess.run([loss, loss_summary], feed_dict=feed_dict)

print("Step: %d, Train_loss:%g" % (itr, train_loss))

# 每10步搜集所有的写文件

train_writer.add_summary(summary_str, itr)

# 每500步打印测试集送入模型后的预测损失保存生成的检查点文件

if itr % 500 == 0:

valid_images, valid_annotations = validation_dataset_reader.next_batch(FLAGS.batch_size)

valid_loss, summary_sva = sess.run([loss, loss_summary],

feed_dict={image: valid_images,

annotation: valid_annotations,

keep_probability: 1.0})

print("%s ---> Validation_loss: %g" % (datetime.datetime.now(), valid_loss))

# 添加 validation loss 至 TensorBoard

validation_writer.add_summary(summary_sva, itr)

saver.save(sess, FLAGS.logs_dir + "model.ckpt", itr)

# 可视化

elif FLAGS.mode == "visualize":

valid_images, valid_annotations = validation_dataset_reader.get_random_batch(FLAGS.batch_size)

pred = sess.run(pred_annotation,

feed_dict={image: valid_images,

annotation: valid_annotations,

keep_probability: 1.0})

valid_annotations = np.squeeze(valid_annotations, axis=3)

# 去掉pred索引为3位置的维度

pred = np.squeeze(pred, axis=3)

# 显示并给原图、标签、预测标签命名。str(5+itr)可以修改图片的索引号,修改bachsize的值等

for itr in range(FLAGS.batch_size):

utils.save_image(valid_images[itr].astype(np.uint8), FLAGS.logs_dir, name="inp_" + str(5+itr))

utils.save_image(valid_annotations[itr].astype(np.uint8), FLAGS.logs_dir, name="gt_" + str(5+itr))

utils.save_image(pred[itr].astype(np.uint8), FLAGS.logs_dir, name="pred_" + str(5+itr))

print("Saved image: %d" % itr)

主要总结如下:

- vgg19 .mat文件解析

- train 流程

- tf.constant_initializer

- tf.truncated_normal

- Leaky ReLU

- tensorflow 初始化 Weight 和 bias

- 卷积 / 反卷积

- 0均值化作用

- tf.summary.image

- tf.expand_dims

- np.squeeze

- sys.stdout.write

- 偏函数

vgg19 .mat文件解析:

- type(vgg) :返回类型是个dict;

- vgg.keys() :dict_keys(['__header__', '__version__', '__globals__', 'layers', 'classes', 'normalization']),前三项是meta信息;

- vgg[‘normalization’][0][0][0]:图像均值; vgg['layers'].shape: (1, 43) ;

- layers = layers[0] :对应模型43层信息的(conv1_1,relu,conv1_2…);

- 某一层 layer = layers[0] ==> dtype=[('weights', 'O'), ('pad', 'O'), ('type', 'O'), ('name', 'O'), ('stride', 'O')] ==> 对应weight(含有bias), pad(填充元素,无用),type, name, stride;

- layer.shape:(1, 1) ==> layer = layer[0][0] ==> name = layer[3] , weight = layer[0] ==> weight.shape :(1, 2) ==> weight = weight[0] ==> weight, bias = weight

- name = vgg['layers'(字典key)][0(去掉"虚"的维,相当于np.squeeze)][layer(层索引)][0][0(连续两次去掉虚的维度)][3(weigh,pad,type,name,stride5类信息的索引,从0开始)][0(去掉"虚"的维,相当于np.squeeze)]

- weight, bias = vgg['layers'(字典key)][0(去掉"虚"的维,相当于np.squeeze)][layer(层索引)][0][0(连续两次去掉虚的维度)][0(weigh, pad, type, name, stride5类信息的索引,从0开始)][0(去掉"虚"的维,相当于np.squeeze)]

- ==>

kernels, bias = weights[i][0][0][0][0] # 'kernels' means conv, relu or pool# 前16层

layers = (

'conv1_1', 'relu1_1', # layer 1

'conv1_2', 'relu1_2', 'pool1', # layer 2

'conv2_1', 'relu2_1', # layer 3

'conv2_2', 'relu2_2', 'pool2', # layer 4

'conv3_1', 'relu3_1', # layer 5

'conv3_2', 'relu3_2', # layer 6

'conv3_3', 'relu3_3', # layer 7

'conv3_4', 'relu3_4', 'pool3', # layer 8

'conv4_1', 'relu4_1', # layer 9

'conv4_2', 'relu4_2', # layer 10

'conv4_3', 'relu4_3', # layer 11

'conv4_4', 'relu4_4', 'pool4', # layer 12

'conv5_1', 'relu5_1', # layer 13

'conv5_2', 'relu5_2', # layer 14

'conv5_3', 'relu5_3', # layer 15

'conv5_4', 'relu5_4' # layer 16

)

train 流程:

def train(loss_val, var_list):

optimizer = tf.train.AdamOptimizer(FLAGS.learning_rate)

# loss 值, 参数列表

grads = optimizer.compute_gradients(loss_val, var_list=var_list)

return optimizer.apply_gradients(grads)

tf.constant_initializer(),也可以简写为tf.Constant(),初始化为常数,通常偏置项就是用它初始化的。

由它衍生出的两个初始化方法:

- tf.zeros_initializer(), 也可以简写为tf.Zeros()

- tf.ones_initializer(), 也可以简写为tf.Ones()

tf.truncated_normal([7, 7, 512, 4096], stddev=0.02)

如果生成的值大于平均值2个标准偏差的值则丢弃重新选择

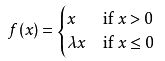

带泄露线性整流函数(Leaky ReLU):

def leaky_relu(x, alpha=0.0, name=''):

return tf.maximum(alpha*x, x, name)

初始化 Weight 和 bias, 卷积 / 反卷积:

# 下采样 W, b 初始化

W6_init = tf.truncated_normal([7, 7, 512, 4096], stddev=0.02)

W6 = tf.get_variable('W6', initializer=W6_init)

b6_init = tf.constant(0.0, shape=[4096])

b6 = tf.get_variable('b6', initializer=b6_init)

# 卷积 + relu + dropout

convolution6 = tf.nn.conv2d(pool5, W6, strides=[1, 1, 1, 1], padding='SAME')

conv6 = tf.nn.bias_add(convolution6, b6)

relu6 = tf.nn.relu(conv6, name='relu6')

relu_dropout6 = tf.nn.dropout(relu6, keep_prob=keep_prob)

# 上采样 W, b 初始化

# conv8 <==> pool4

deconv_shape1 = image_net['pool4'].get_shape()

W_t1_init = tf.truncated_normal([4, 4, deconv_shape1[3].value, NUM_OF_CLASSES], stddev=0.02)

W_t1 = tf.get_variable(name='W_t1', initializer=W_t1_init)

b_t1_init = tf.constant(0.0, shape=[deconv_shape1[3].value])

b_t1 = tf.get_variable(name='b_t1', initializer=b_t1_init)

# 反卷积(转置卷积) + 特征融合

conv_t1_output_shape = tf.shape(image_net['pool4'])

# tf.nn.conv2d_transpose: input, weight, output_shape, strides, padding

convolution_t1 = tf.nn.conv2d_transpose(conv8, W_t1, conv_t1_output_shape,

strides=[1, 2, 2, 1], padding='SAME')

conv_t1 = tf.nn.bias_and(convolution_t1, b_t1)

fuse_1 = tf.add(conv_t1, image_net['pool4'], name='fuse_1')

0均值化作用:

在反向传播中可以加快网络中每一层权重参数的收敛,运用链式法则,反向传播时权重的梯度可以表示如下:

当x全为正或者全为负时,每次返回的梯度都只会沿着一个方向发生变化,这样就会使得权重收敛效率很低。

但当x正负数量“差不多”时,那么梯度的变化方向就会不确定,这样就能达到上图中的变化效果,加速了权重的收敛;

tf.summary.image:

构建的图像的Tensor必须是4-D形状[batch_size, height, width, channels], 其中channels可以是:

- tensor被解析成灰度图像, 1 channel;

- tensor被解析成RGB图像, 3 channels;

- tensor被解析成RGBA图像,4 channels;

此函数将【计算图】中的【图像数据】写入TensorFlow中的【日志文件】,以便为将来tensorboard的可视化做准备;

tf.expand_dims(annotation_pred, dim=3)

在dim处加一维,变成 (d1, d2, d3, 1)

np.squeeze : 降维,默认axis=0

其他:

sys.stdout.write 和 print 区别:

- sys.stdout.write是将str写到流,原封不动,不会像print那样默认end='\n'

- sys.stdout.write只能输出一个str,而print能输出多个str,且默认sep=' '(一个空格)

- print,默认flush=False.

- print还可以直接把值写到file中

import sys

f = open('test.txt', 'w')

print('print write into file', file=f)

f.close()sys.stdout.flush():

flush是刷新的意思,在print和sys.stdout.write输出时是有一个缓冲区的。比如要向文件里输出字符串,是先写进内存(因为print默认flush=False,也没有手动执行flush的话),在close文件之前直接打开文件是没有东西的,如果执行一个flush就有了;

import time

import sys

for i in range(5):

print(i)

sys.stdout.flush()

time.sleep(1)在终端执行上面代码,会一秒输出一个数字。然而如果注释掉flush,就会在5秒后一次输出01234;

os.stat(filepath):

stat 系统调用时用来返回相关文件的系统状态信息;

偏函数

from operator import mul

mul100=partial(mul,100)

print(mul100(10)) # 1000

checkpoint(检查点日志):ckpt

pickle / unpickle :序列化 / 反序列化(二进制保存 / 加载)