Large text file (close to 7GB): frequency counting and solving the Top K problem (Part 2)

Contents

- Overview
- Raw input
- Word count MR & output
- Output
- MR log
- Appendix: Java code
- Solving Top K
- Input
- Output
- Appendix: Java code

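Overview

This post follows on from the previous one, "Large text file (close to 7GB): frequency counting and solving the Top K problem", and describes how to solve the same problem with Hadoop MapReduce. The title is carried over from the previous post.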

Raw input

The raw data is a 5.6G txt file. The HDFS block size is 128MB, and the file is stored as 48 blocks.
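The block count can be checked with a ceiling division. The sketch below is only an illustration: it takes the `Bytes Read=6323345155` value reported by the File Input Format Counters in the MR log as the file size, and the class and method names are hypothetical.

```java
class BlockCount {
    /** Number of fixed-size blocks needed to hold fileBytes (ceiling division). */
    static long blocks(long fileBytes, long blockBytes) {
        return (fileBytes + blockBytes - 1) / blockBytes;
    }

    public static void main(String[] args) {
        long blockBytes = 128L * 1024 * 1024; // HDFS block size of 128MB
        long fileBytes = 6_323_345_155L;      // "Bytes Read" from the job counters
        System.out.println(blocks(fileBytes, blockBytes)); // prints 48
    }
}
```

47 full blocks hold 6308233216 bytes, which is slightly less than the file size, so a 48th, partially filled block is needed.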

Word count MR & output

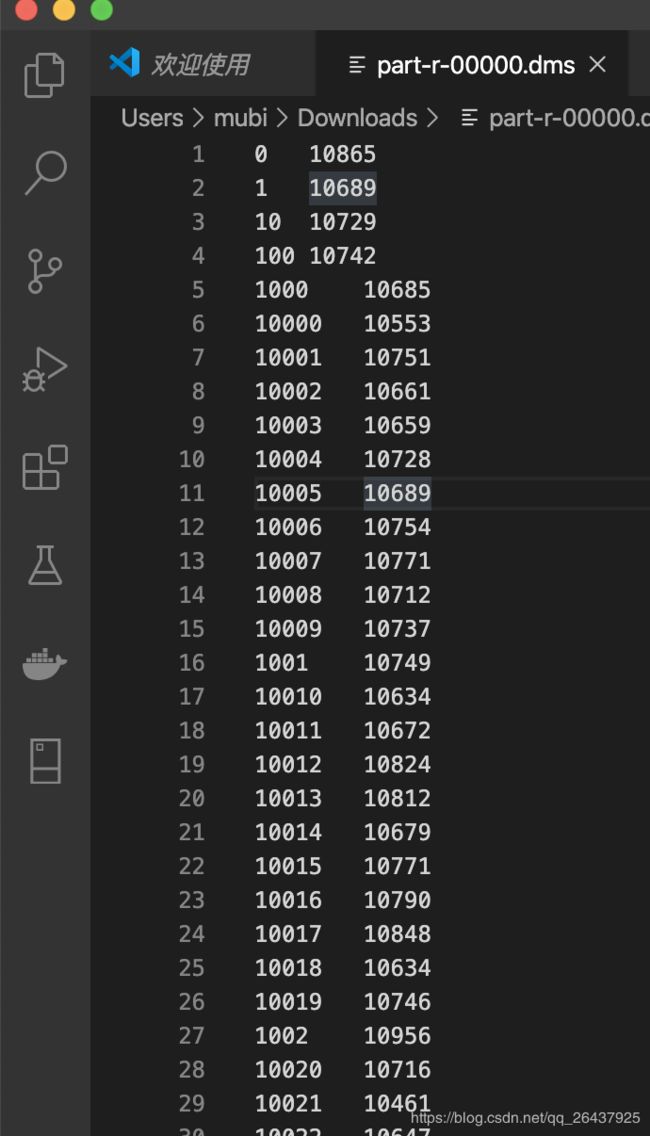

Output

As in word count, the first job computes the total number of occurrences of each IP. The final output file looks as follows.

MR log

The following is an excerpt from the MapReduce log.

```
2020-05-01 11:40:58,120 INFO [org.apache.hadoop.mapreduce.Job] - Counters: 36
    File System Counters
        FILE: Number of bytes read=11257636997
        FILE: Number of bytes written=12669380730
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=164048907782
        HDFS: Number of bytes written=1188890
        HDFS: Number of read operations=2649
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=100
        HDFS: Number of bytes read erasure-coded=0
    Map-Reduce Framework
        Map input records=1073741824
        Map output records=1073741824
        Map output bytes=10618119939
        Map output materialized bytes=57067008
        Input split bytes=4800
        Combine input records=1109778196
        Combine output records=40836372
        Reduce input groups=100000
        Reduce shuffle bytes=57067008
        Reduce input records=4800000
        Reduce output records=100000
        Spilled Records=45636372
        Shuffled Maps =48
        Failed Shuffles=0
        Merged Map outputs=48
        GC time elapsed (ms)=5273
        Total committed heap usage (bytes)=52556201984
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=6323345155
    File Output Format Counters
        Bytes Written=1188890
2020-05-01 11:40:58,121 DEBUG [org.apache.hadoop.security.UserGroupInformation] - PrivilegedAction as:mubi (auth:SIMPLE) from:org.apache.hadoop.mapreduce.Job.updateStatus(Job.java:328)
Job Finished
```

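- `Map input records=1073741824`: these are the 1024 * 1024 * 1024 = 1073741824 randomly generated raw records.
- The map step reads one line and emits one record per line, so `Map output records=1073741824`.
- No split size was configured, so by default the number of map tasks equals the number of blocks, i.e. 48.
- A Combiner is set, because summation is an operation that can safely be pre-aggregated by a Combiner (partial sums can be added up in any order).
- `Reduce output records=100000`: the reduce step outputs 100000 records, one for each of the random integers we generated in the range [0, 100000].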
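To illustrate why a Combiner is safe for a sum, here is a minimal plain-Java sketch without any Hadoop dependency. It simulates two map tasks that pre-aggregate their own records and a reduce step that adds up the partial sums. The class name, method names, and sample records are all hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CombinerSketch {
    /** Simulates one map task plus its combiner: each record becomes (key, 1), equal keys are summed locally. */
    static Map<String, Long> mapAndCombine(List<String> records) {
        Map<String, Long> local = new HashMap<>();
        for (String record : records) {
            local.merge(record, 1L, Long::sum);
        }
        return local;
    }

    /** Simulates the reduce step: adds up the partial sums produced by all map tasks. */
    static Map<String, Long> reduce(List<Map<String, Long>> partials) {
        Map<String, Long> total = new HashMap<>();
        for (Map<String, Long> partial : partials) {
            for (Map.Entry<String, Long> e : partial.entrySet()) {
                total.merge(e.getKey(), e.getValue(), Long::sum);
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Two made-up input splits.
        List<String> split1 = Arrays.asList("7", "42", "7", "7");
        List<String> split2 = Arrays.asList("42", "7", "99");
        Map<String, Long> counts =
                reduce(Arrays.asList(mapAndCombine(split1), mapAndCombine(split2)));
        System.out.println(counts);
    }
}
```

The counters in the log are consistent with this picture: after combining, each of the 48 map tasks hands at most one record per distinct key to the reducer, and 48 * 100000 = 4800000 matches `Reduce input records=4800000`.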

Appendix: Java code

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;
import java.net.URI;
import java.util.Iterator;

/**
 * @Author mubi
 * @Date 2020/4/30 22:42
 */
public class IpCntMR {

    static class IpCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Convert the text passed in by the map task to a String first
            String ip = value.toString();
            // Emit the ip as ... (the listing breaks off at this point)
```

Solving Top K

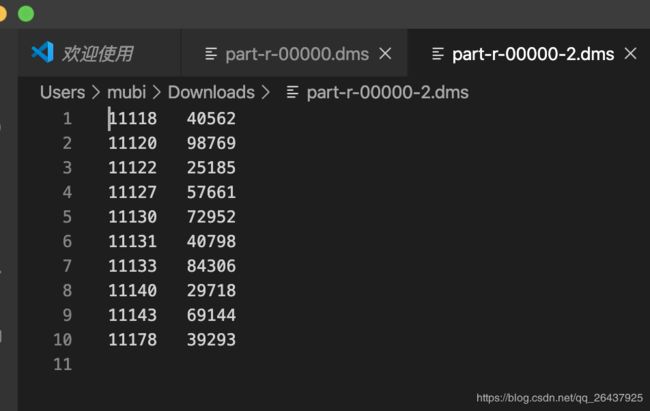

Input

The input is the output file of the word count job above.
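Each line of that file has the form `key<TAB>count`, so the selection step can be sketched without Hadoop as a size-bounded min-heap. This is a minimal illustration under stated assumptions, not the job itself: the class name, method name, and sample lines are hypothetical. Unlike a map keyed by the count, a heap of (key, count) pairs keeps keys that share the same count as separate entries.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

class TopKSketch {
    /** Parses "key<TAB>count" lines and returns the k pairs with the largest counts, largest first. */
    static List<Map.Entry<String, Integer>> topK(List<String> lines, int k) {
        // Min-heap ordered by count: the root is the smallest of the retained pairs.
        PriorityQueue<Map.Entry<String, Integer>> heap =
                new PriorityQueue<>((a, b) -> Integer.compare(a.getValue(), b.getValue()));
        for (String line : lines) {
            String[] parts = line.split("\t", 2);
            if (parts.length < 2) {
                continue; // skip lines without a tab separator
            }
            heap.add(new SimpleEntry<>(parts[0], Integer.valueOf(parts[1].trim())));
            if (heap.size() > k) {
                heap.poll(); // evict the pair with the smallest count
            }
        }
        List<Map.Entry<String, Integer>> result = new ArrayList<>(heap);
        result.sort((a, b) -> Integer.compare(b.getValue(), a.getValue()));
        return result;
    }

    public static void main(String[] args) {
        // Made-up sample lines; "1002" and "1003" share the same count.
        List<String> lines = Arrays.asList("1001\t5", "1002\t9", "1003\t9", "1004\t1");
        System.out.println(topK(lines, 2));
    }
}
```

The heap never holds more than k + 1 entries, so memory stays bounded regardless of how many lines are read.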

Output

Appendix: Java code

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.TaskInputOutputContext;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * @Author mubi
 * @Date 2020/4/30 22:42
 */
public class TopCntMR {

    static final int K = 10;

    /** Keeps the K (count, name) pairs with the largest counts; names sharing a count are kept separately. */
    static class TopK {
        private final TreeMap<Integer, List<String>> byCount = new TreeMap<Integer, List<String>>();
        private int size = 0;

        void add(int count, String name) {
            byCount.computeIfAbsent(count, c -> new ArrayList<String>()).add(name);
            if (++size > K) {
                // Drop one name from the smallest count currently held
                List<String> smallest = byCount.firstEntry().getValue();
                smallest.remove(smallest.size() - 1);
                if (smallest.isEmpty()) {
                    byCount.pollFirstEntry();
                }
                size--;
            }
        }

        /** Writes the retained pairs in descending order of count. */
        void writeTo(TaskInputOutputContext<?, ?, IntWritable, Text> context)
                throws IOException, InterruptedException {
            for (Map.Entry<Integer, List<String>> e : byCount.descendingMap().entrySet()) {
                for (String name : e.getValue()) {
                    context.write(new IntWritable(e.getKey()), new Text(name));
                }
            }
        }
    }

    static class KMapper extends Mapper<LongWritable, Text, IntWritable, Text> {
        private final TopK top = new TopK();

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Each input line has the form "<name>\t<count>"
            String line = value.toString();
            if (line.trim().length() > 0 && line.indexOf("\t") != -1) {
                String[] arr = line.split("\t", 2);
                top.add(Integer.parseInt(arr[1].trim()), arr[0]);
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            // Emit only this map task's local top K
            top.writeTo(context);
        }
    }

    public static class KReducer extends Reducer<IntWritable, Text, IntWritable, Text> {
        private final TopK top = new TopK();

        @Override
        public void reduce(IntWritable key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // All names with the same count arrive together; consider every one of them
            for (Text value : values) {
                top.add(key.get(), value.toString());
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            top.writeTo(context);
        }
    }

    public static void main(String[] args) throws Exception {
        String uri = "hdfs://localhost:9000";
        // Input path: the output of the counting job
        String dst = uri + "/output/ipoutput/part-r-00000";
        // Output path: it must not exist yet, even an empty one is not allowed
        String dstOut = uri + "/output/topk";

        Configuration hadoopConfig = new Configuration();
        hadoopConfig.set("fs.hdfs.impl", org.apache.hadoop.hdfs.DistributedFileSystem.class.getName());
        hadoopConfig.set("fs.file.impl", org.apache.hadoop.fs.LocalFileSystem.class.getName());

        // Delete the output directory if it is left over from an earlier run
        FileSystem fs = FileSystem.get(new URI(uri), hadoopConfig);
        Path outputPath = new Path("/output/topk");
        if (fs.exists(outputPath)) {
            fs.delete(outputPath, true);
        }

        Job job = Job.getInstance(hadoopConfig);
        // Needed when the job is packaged and run as a jar
        job.setJarByClass(TopCntMR.class);
        job.setJobName("TopCntMR");

        // Input and output paths used by the job
        FileInputFormat.addInputPath(job, new Path(dst));
        FileOutputFormat.setOutputPath(job, new Path(dstOut));

        // Custom Mapper and Reducer classes for the two phases
        job.setMapperClass(KMapper.class);
        job.setReducerClass(KReducer.class);
        // Set the combiner
        job.setCombinerClass(KReducer.class);
        // A global top K needs a single reduce task (this is also the default)
        job.setNumReduceTasks(1);

        // Key and value types of the final output
        job.setOutputKeyClass(IntWritable.class);
        job.setOutputValueClass(Text.class);

        // Run the job and wait until it completes
        job.waitForCompletion(true);
        System.out.println("Job Finished");
    }
}
```
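The job follows a two-stage pattern: each map task keeps only its local top K and emits it in `cleanup`, and a single reduce task merges those candidates. The following plain-Java sketch (no Hadoop dependency; class names, method names, and sample numbers are hypothetical) illustrates why merging the local results yields the global top K.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.PriorityQueue;

class LocalThenGlobalTopK {
    /** Returns the k largest values in descending order, using a size-bounded min-heap. */
    static List<Integer> topK(List<Integer> values, int k) {
        PriorityQueue<Integer> heap = new PriorityQueue<>();
        for (int v : values) {
            heap.add(v);
            if (heap.size() > k) {
                heap.poll(); // evict the smallest retained value
            }
        }
        List<Integer> result = new ArrayList<>(heap);
        result.sort(Collections.reverseOrder());
        return result;
    }

    /** Simulates the job: local top k per split ("map"), then a merge of all candidates ("reduce"). */
    static List<Integer> twoStageTopK(List<List<Integer>> splits, int k) {
        List<Integer> candidates = new ArrayList<>();
        for (List<Integer> split : splits) {
            candidates.addAll(topK(split, k));
        }
        return topK(candidates, k);
    }

    public static void main(String[] args) {
        List<List<Integer>> splits = Arrays.asList(
                Arrays.asList(5, 1, 9, 3),
                Arrays.asList(8, 7, 2),
                Arrays.asList(6, 10, 4));
        System.out.println(twoStageTopK(splits, 3)); // prints [10, 9, 8]
    }
}
```

Any value in the global top K is also in the top K of its own split, so no winner is lost in the first stage. The merge only works if all candidates reach the same reduce task.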