sparkStreaming 实时窗口分析

实时就是统计分析

比如:饿了么中午和晚上,区域订单数目统计

需求:

最近半小时的各个区域订单状态

11:00

10:30~11:00 半小时时间内,订单状态,还有多少订单没有配送,多的话调人

11:10

10:40~11:00 半小时时间内,订单状态

DStream

窗口统计分析

指定窗口的大小,也就是时间窗口 时间间隔

模拟数据:

订单号 地区id 价格

201710261645320001,12,45.00

201710261645320002,12,20.00

201710261645320301,14,10.00

201710261635320e01,12,4.00

201710261645320034,21,100.50

201710261645320021,14,2.00

201710261645323501,12,1.50

201710261645320281,14,3.00

201710261645332568,15,1000.50

201710261645320231,15,1000.00

201710261645320001,12,1000.00

201710261645320002,12,1000.00

201710261645320301,14,1000.00显示各个地区订单:

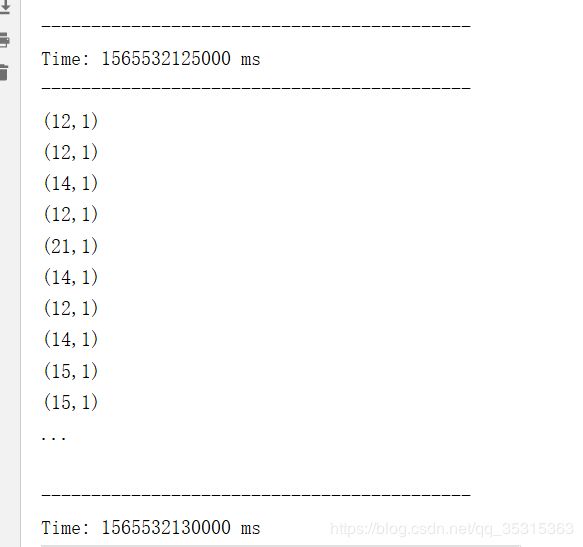

object J_WindowOrderTotalStreaming {

//批次时间,Batch Interval

val STREAMING_BATCH_INTERVAL = Seconds(5)

//设置窗口时间间隔

val STREAMING_WINDOW_INTERVAL = STREAMING_BATCH_INTERVAL * 3

//设置滑动窗口时间间隔

val STREAMING_SLIDER_INTERVAL = STREAMING_BATCH_INTERVAL * 3

def main(args: Array[String]): Unit = {

val conf = new SparkConf()

.setMaster("local[3]") //为什么启动3个,有一个Thread运行Receiver

.setAppName("J_WindowOrderTotalStreaming")

val ssc: StreamingContext = new StreamingContext(conf, STREAMING_BATCH_INTERVAL)

//日志级别

ssc.sparkContext.setLogLevel("WARN")

val kafkaParams: Map[String, String] = Map(

"metadata.broker.list"->"bigdata-hpsk01.huadian.com:9092,bigdata-hpsk01.huadian.com:9093,bigdata-hpsk01.huadian.com:9094",

"auto.offset.reset"->"largest" //读取最新数据

)

val topics: Set[String] = Set("orderTopic")

val kafkaDStream: DStream[String] = KafkaUtils

.createDirectStream[String, String, StringDecoder,StringDecoder](

ssc,

kafkaParams,

topics

).map(_._2) //只需要获取Topic中每条Message中Value的值

//设置窗口

//val inputDStream = kafkaDStream.window(STREAMING_WINDOW_INTERVAL)

/**

* def window(windowDuration: Duration, slideDuration: Duration): DStream[T]

* windowDuration:

* 窗口大小,窗口时间间隔

* slideDuration

* 滑动大小,多久时间执行一次 最近窗口内数据统计

*/

val inputDStream = kafkaDStream.window(STREAMING_WINDOW_INTERVAL,STREAMING_SLIDER_INTERVAL)

val orderDStream: DStream[(Int, Int)] = inputDStream.transform(rdd=>{

rdd

//过滤不合法的数据

.filter(line => line.trim.length >0 && line.trim.split(",").length ==3)

//提取字段

.map(line =>{

val splits = line.split(",")

(splits(1).toInt,1)

})

})

//统计各个省份订单数目

val orderCountDStream = orderDStream.reduceByKey( _ + _)

orderCountDStream.print()

//启动流式实时应用

ssc.start() // 将会启动Receiver接收器,用于接收源端 的数据

//实时应用一旦启动,正常情况下不会自动停止,触发遇到特性情况(报错,强行终止)

ssc.awaitTermination() // Wait for the computation to terminate

}

}

统计各个地区订单数和

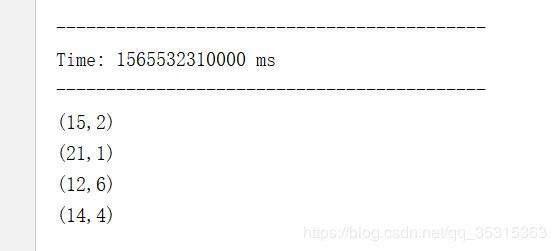

object K_WindowOrderTotalStreaming {

//批次时间,Batch Interval

val STREAMING_BATCH_INTERVAL = Seconds(5)

//设置窗口时间间隔

val STREAMING_WINDOW_INTERVAL = STREAMING_BATCH_INTERVAL * 3

//设置滑动窗口时间间隔

val STREAMING_SLIDER_INTERVAL = STREAMING_BATCH_INTERVAL * 2

def main(args: Array[String]): Unit = {

val conf = new SparkConf()

.setMaster("local[3]") //为什么启动3个,有一个Thread运行Receiver

.setAppName("J_WindowOrderTotalStreaming")

val ssc: StreamingContext = new StreamingContext(conf, STREAMING_BATCH_INTERVAL)

//日志级别

ssc.sparkContext.setLogLevel("WARN")

val kafkaParams: Map[String, String] = Map(

"metadata.broker.list"->"bigdata-hpsk01.huadian.com:9092,bigdata-hpsk01.huadian.com:9093,bigdata-hpsk01.huadian.com:9094",

"auto.offset.reset"->"largest" //读取最新数据

)

val topics: Set[String] = Set("orderTopic")

val kafkaDStream: DStream[String] = KafkaUtils

.createDirectStream[String, String, StringDecoder,StringDecoder](

ssc,

kafkaParams,

topics

).map(_._2) //只需要获取Topic中每条Message中Value的值

//设置窗口

val orderDStream: DStream[(Int, Int)] = kafkaDStream.transform(rdd=>{

rdd

//过滤不合法的数据

.filter(line => line.trim.length >0 && line.trim.split(",").length ==3)

//提取字段

.map(line =>{

val splits = line.split(",")

(splits(1).toInt,1)

})

})

/**

* reduceByKeyAndWindow = window + reduceByKey

* def reduceByKeyAndWindow(

* reduceFunc: (V, V) => V,

* windowDuration: Duration,

* slideDuration: Duration

* ): DStream[(K, V)]

*/

//统计各个省份订单数目

val orderCountDStream = orderDStream.reduceByKeyAndWindow(

(v1:Int, v2:Int) => v1 + v2,

STREAMING_WINDOW_INTERVAL,

STREAMING_SLIDER_INTERVAL

)

orderCountDStream.print()

//启动流式实时应用

ssc.start() // 将会启动Receiver接收器,用于接收源端 的数据

//实时应用一旦启动,正常情况下不会自动停止,触发遇到特性情况(报错,强行终止)

ssc.awaitTermination() // Wait for the computation to terminate

}

}

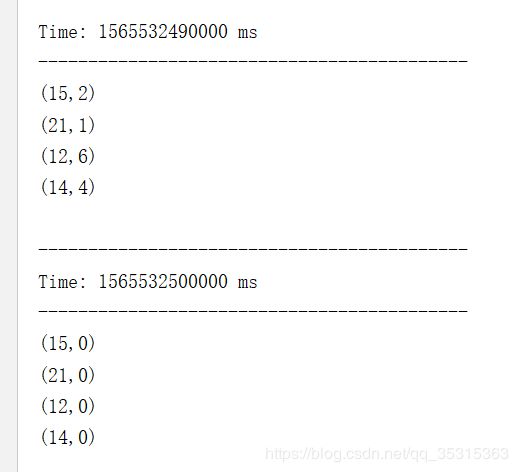

统计同一窗口下输入的各个地区订单数量:

object L_TrendOrderTotalStreaming {

//批次时间,Batch Interval

val STREAMING_BATCH_INTERVAL = Seconds(5)

//设置窗口时间间隔

val STREAMING_WINDOW_INTERVAL = STREAMING_BATCH_INTERVAL * 3

//设置滑动窗口时间间隔

val STREAMING_SLIDER_INTERVAL = STREAMING_BATCH_INTERVAL * 2

def main(args: Array[String]): Unit = {

val conf = new SparkConf()

.setMaster("local[3]") //为什么启动3个,有一个Thread运行Receiver

.setAppName("J_WindowOrderTotalStreaming")

val ssc: StreamingContext = new StreamingContext(conf, STREAMING_BATCH_INTERVAL)

//日志级别

ssc.sparkContext.setLogLevel("WARN")

ssc.checkpoint("chkpt-trend-1000")

val kafkaParams: Map[String, String] = Map(

"metadata.broker.list"->"bigdata-hpsk01.huadian.com:9092,bigdata-hpsk01.huadian.com:9093,bigdata-hpsk01.huadian.com:9094",

"auto.offset.reset"->"largest" //读取最新数据

)

val topics: Set[String] = Set("orderTopic")

val kafkaDStream: DStream[String] = KafkaUtils

.createDirectStream[String, String, StringDecoder,StringDecoder](

ssc,

kafkaParams,

topics

).map(_._2) //只需要获取Topic中每条Message中Value的值

//设置窗口

val orderDStream: DStream[(Int, Int)] = kafkaDStream.transform(rdd=>{

rdd

//过滤不合法的数据

.filter(line => line.trim.length >0 && line.trim.split(",").length ==3)

//提取字段

.map(line =>{

val splits = line.split(",")

(splits(1).toInt,1)

})

})

/**

def reduceByKeyAndWindow(

reduceFunc: (V, V) => V,

invReduceFunc: (V, V) => V,

windowDuration: Duration,

slideDuration: Duration,

partitioner: Partitioner,

filterFunc: ((K, V)) => Boolean

): DStream[(K, V)]

*/

//统计各个省份订单数目

val orderCountDStream = orderDStream.reduceByKeyAndWindow(

(v1:Int, v2:Int) => v1 + v2,

(v1:Int, v2:Int) => v1 - v2,

STREAMING_WINDOW_INTERVAL,

STREAMING_SLIDER_INTERVAL

)

orderCountDStream.print()

//启动流式实时应用

ssc.start() // 将会启动Receiver接收器,用于接收源端 的数据

//实时应用一旦启动,正常情况下不会自动停止,触发遇到特性情况(报错,强行终止)

ssc.awaitTermination() // Wait for the computation to terminate

}

}一个窗口内的结果移动到下一个窗口时,只会保存key值,value值与上一个相减了

/**

def reduceByKeyAndWindow(

reduceFunc: (V, V) => V,

invReduceFunc: (V, V) => V,

windowDuration: Duration,

slideDuration: Duration,

partitioner: Partitioner,

filterFunc: ((K, V)) => Boolean

): DStream[(K, V)]

*/