基于HDFS的spark分布式Scala wordcount程序测试

基于HDFS的spark分布式Scala wordcount程序测试

本文是在Hadoop分布式集群和基于HDFS的spark分布式集群部署配置基础上进行Scala程序wordcount测试,环境分别是spark-shell和intelliJ IDEA 。

环境基础是:

| 节点 |

地址 |

HDFS |

Yarn |

Spark |

| VM1 |

196.168.168.11 |

Namenode |

ResourceManager |

Master worker |

| VM2 |

196.168.168.22 |

DataNode secondarynamenode |

NameManager |

Worker |

| VM3 |

196.168.168.33 |

DataNode |

NameManager |

Worker |

启动HDFS服务

[root@vm1 sbin]#start-dfs.sh

Starting namenodes on [vm1]

vm1: namenode running as process3861. Stop it first.

vm2: datanode running as process3656. Stop it first.

vm3: datanode running as process3623. Stop it first.

Starting secondary namenodes [vm2]

vm2: secondarynamenode running asprocess 3739. Stop it first.

启动yarn服务

[root@vm1 sbin]#start-yarn.sh

starting yarn daemons

resourcemanager running as process4152. Stop it first.

vm2: nodemanager running as process3878. Stop it first.

vm3: nodemanager running as process3776. Stop it first.

启动spark中的master和slaves节点服务

[root@vm1 sbin]#start-master.sh

org.apache.spark.deploy.master.Masterrunning as process 4454. Stop it first.

[root@vm1 sbin]#start-slaves.sh

vm1:org.apache.spark.deploy.worker.Worker running as process 4565. Stop it first.

localhost:org.apache.spark.deploy.worker.Worker running as process 4565. Stop it first.

vm3: startingorg.apache.spark.deploy.worker.Worker, logging to/usr/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm3.out

vm2: startingorg.apache.spark.deploy.worker.Worker, logging to /usr/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm2.out

vm2: failed to launch: nice -n 0/usr/spark/bin/spark-class org.apache.spark.deploy.worker.Worker --webui-port8080 spark://vm1:7077

vm2: full log in/usr/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm2.out

vm3: failed to launch: nice -n 0/usr/spark/bin/spark-class org.apache.spark.deploy.worker.Worker --webui-port8080 spark://vm1:7077

vm3: full log in/usr/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vm3.out

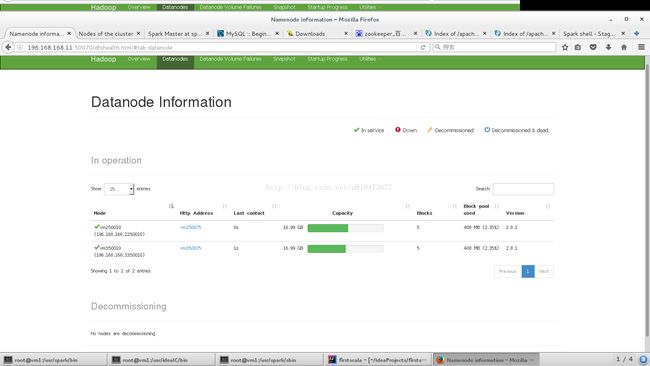

验证Hadoop和spark正常运行

主节点上:

[root@vm1 sbin]#jps

3861 NameNode

4565 Worker

4454 Master

4152 ResourceManager

54025 Jps

其他节点上:

[root@vm2 conf]#jps

3878 NodeManager

3656 DataNode

3739 SecondaryNameNode

57243 Worker

57791 Jps

启动sparkshell进行scala程序编写

[root@vm1 bin]#spark-shell

Setting default log level to"WARN".

To adjust logging level usesc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

17/08/31 01:59:01 WARNutil.NativeCodeLoader: Unable to load native-hadoop library for your platform...using builtin-java classes where applicable

17/08/31 01:59:10 WARNmetastore.ObjectStore: Failed to get database global_temp, returningNoSuchObjectException

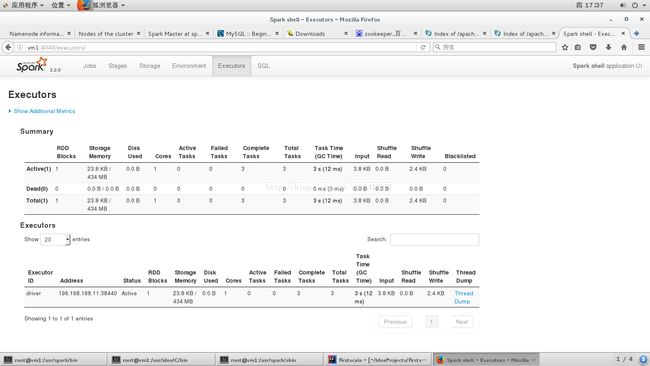

Spark context Web UI available athttp://196.168.168.11:4040

Spark context available as 'sc'(master = local[*], app id = local-1504115943045).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version2.2.0

/_/

Using Scala version 2.11.8 (JavaHotSpot(TM) 64-Bit Server VM, Java 1.8.0_144)

Type in expressions to have themevaluated.

Type :help for more information.

scala>

[root@vm1 ~]#hdfs dfs -ls /user/hadoop

Found 1 items

-rw-r--r-- 2 root supergroup 3809 2017-08-22 19:56/user/hadoop/README.md

scala> valrdd = sc.textFile("hdfs://vm1:9000/user/hadoop/README.md")

rdd:org.apache.spark.rdd.RDD[String] = hdfs://vm1:9000/user/hadoop/README.mdMapPartitionsRDD[1] at textFile at

scala> valwordcount=rdd.flatMap(_.split(" ")).map(x=>(x,1)).reduceByKey(_+_)

wordcount:org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[4] at reduceByKey at

scala>wordcount.count()

res0: Long = 287

scala>wordcount.take(10)

res1: Array[(String, Int)] =Array((package,1), (For,3), (Programs,1), (processing.,1), (Because,1),(The,1), (page](http://spark.apache.org/documentation.html).,1), (cluster.,1),(its,1), ([run,1))

intelliJ idea中Scala程序

Wordsort最后结果: