Hive表数据的查询

文中的两张表emp和dept:

emp数据如下:

7499 ALLEN SALESMAN 7698 1981-02-20 1600.0 300.0 30

7521 WARD SALESMAN 7698 1981-02-22 1250.0 500.0 30

7566 JONES MANAGER 7839 1981-04-02 2975.0 0.0 20

7654 MARTIN SALESMAN 7698 1981-09-28 1250.0 1400.0 30

7698 BLAKE MANAGER 7839 1981-05-01 2850.0 0.0 30

7782 CLARK MANAGER 7839 1981-06-09 2450.0 0.0 10

7788 SCOTT ANALYST 7566 1987-07-13 3000.0 0.0 20

7839 KING PRESIDENT NULL 1981-11-07 5000.0 0.0 10

7844 TURNER SALESMAN 7698 1981-09-08 1500.0 0.0 30

7876 ADAMS CLERK 7788 1987-07-13 1100.0 0.0 20

7900 JAMES CLERK 7698 1981-12-03 950.0 0.0 30

7902 FORD ANALYST 7566 1981-12-03 3000.0 0.0 20

7934 MILLER CLERK 7782 1982-01-23 1300.0 0.0 10dept的数据如下:

10 ACCOUNTING NEW YORK

20 RESEARCH DALLAS

30 SALES CHICAGO

40 OPERATIONS BOSTON1、where用法:

hive (db_emp)> select * from emp where sal>3000;

OK

emp.empno emp.enname emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7839 KING PRESIDENT NULL 1981-11-07 5000.0 0.0 10

Time taken: 0.891 seconds, Fetched: 1 row(s)

2、order用法:查询部门10的所有员工,按照薪资进行降序排列:

select * from emp where deptno='10' order by sal desc;结果:

hive (db_emp)> select * from emp where deptno='10' order by sal desc;

Query ID = root_20180725102525_ff119157-9589-4736-8780-4310923516fd

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1532453698068_0003, Tracking URL = http://master.cdh.com:8088/proxy/application_1532453698068_0003/

Kill Command = /opt/cdh5.14.2/hadoop-2.6.0/bin/hadoop job -kill job_1532453698068_0003

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2018-07-25 10:25:56,776 Stage-1 map = 0%, reduce = 0%

2018-07-25 10:26:09,404 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.72 sec

2018-07-25 10:26:18,240 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.19 sec

MapReduce Total cumulative CPU time: 5 seconds 190 msec

Ended Job = job_1532453698068_0003

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 5.19 sec HDFS Read: 10580 HDFS Write: 154 SUCCESS

Total MapReduce CPU Time Spent: 5 seconds 190 msec

OK

emp.empno emp.enname emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7839 KING PRESIDENT NULL 1981-11-07 5000.0 0.0 10

7782 CLARK MANAGER 7839 1981-06-09 2450.0 0.0 10

7934 MILLER CLERK 7782 1982-01-23 1300.0 0.0 10

Time taken: 38.628 seconds, Fetched: 3 row(s) 3、limit用法:

hive (db_emp)> select * from emp limit 5;

OK

emp.empno emp.enname emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7499 ALLEN SALESMAN 7698 1981-02-20 1600.0 300.0 30

7521 WARD SALESMAN 7698 1981-02-22 1250.0 500.0 30

7566 JONES MANAGER 7839 1981-04-02 2975.0 0.0 20

7654 MARTIN SALESMAN 7698 1981-09-28 1250.0 1400.0 30

7698 BLAKE MANAGER 7839 1981-05-01 2850.0 0.0 30

Time taken: 0.162 seconds, Fetched: 5 row(s)

4、distinct用法:去除查询结果中的重复记录

select distinct deptno from emp;结果:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 4.51 sec HDFS Read: 8303 HDFS Write: 9 SUCCESS

Total MapReduce CPU Time Spent: 4 seconds 510 msec

OK

deptno

10

20

30

Time taken: 32.66 seconds, Fetched: 3 row(s5、between and用法:

hive (db_emp)> select * from emp where sal between 2000 and 3000;

OK

emp.empno emp.enname emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7566 JONES MANAGER 7839 1981-04-02 2975.0 0.0 20

7698 BLAKE MANAGER 7839 1981-05-01 2850.0 0.0 30

7782 CLARK MANAGER 7839 1981-06-09 2450.0 0.0 10

7788 SCOTT ANALYST 7566 1987-07-13 3000.0 0.0 20

7902 FORD ANALYST 7566 1981-12-03 3000.0 0.0 20

Time taken: 0.117 seconds, Fetched: 5 row(s)6、count用法:

select count(1) from emp;

或者

select count(*) from emp;(效率较低)7、avg用法:求平均数

select avg(sal) avg_sal from emp;结果:

Total MapReduce CPU Time Spent: 5 seconds 380 msec

OK

avg_sal

2171.153846153846

Time taken: 31.874 seconds, Fetched: 1 row(s)8、group by用法:

select deptno avg(sal) from emp group by deptno;结果:

Total MapReduce CPU Time Spent: 2 seconds 730 msec

OK

deptno _c1

10 2916.6666666666665

20 2518.75

30 1566.6666666666667

Time taken: 28.437 seconds, Fetched: 3 row(s)9、having用法:跟where相似

select deptno avg(sal) avg_sal from emp group by deptno having avg_sal >2000;结果:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 4.41 sec HDFS Read: 9666 HDFS Write: 33 SUCCESS

Total MapReduce CPU Time Spent: 4 seconds 410 msec

OK

deptno avg_sal

10 2916.6666666666665

20 2518.75

Time taken: 59.368 seconds, Fetched: 2 row(s)where和having的区别:

正确理解MySQL中的where和having的区别

10、join的用法:等值join,左join,右join,全join

等值join:

select e.empno, e.enname, e.sal, d.deptno from emp e join dept d on e.deptno=d.deptno;结果:

Stage-Stage-3: Map: 1 Cumulative CPU: 1.8 sec HDFS Read: 7480 HDFS Write: 285 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 800 msec

OK

e.empno e.enname e.sal d.deptno

7499 ALLEN 1600.0 30

7521 WARD 1250.0 30

7566 JONES 2975.0 20

7654 MARTIN 1250.0 30

7698 BLAKE 2850.0 30

7782 CLARK 2450.0 10

7788 SCOTT 3000.0 20

7839 KING 5000.0 10

7844 TURNER 1500.0 30

7876 ADAMS 1100.0 20

7900 JAMES 950.0 30

7902 FORD 3000.0 20

7934 MILLER 1300.0 10

Time taken: 27.963 seconds, Fetched: 13 row(s)左join:

select e.empno, e.enname, e.sal, d.deptno from emp e left join dept d on e.deptno=d.deptno;由于数据的原因,结果同上。

右join:

select e.empno, e.enname, e.sal, d.deptno from emp e right join dept d on e.deptno=d.deptno;结果:

Stage-Stage-3: Map: 1 Cumulative CPU: 1.71 sec HDFS Read: 6727 HDFS Write: 297 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 710 msec

OK

e.empno e.enname e.sal d.deptno

7782 CLARK 2450.0 10

7839 KING 5000.0 10

7934 MILLER 1300.0 10

7566 JONES 2975.0 20

7788 SCOTT 3000.0 20

7876 ADAMS 1100.0 20

7902 FORD 3000.0 20

7499 ALLEN 1600.0 30

7521 WARD 1250.0 30

7654 MARTIN 1250.0 30

7698 BLAKE 2850.0 30

7844 TURNER 1500.0 30

7900 JAMES 950.0 30

NULL NULL NULL 40

Time taken: 31.193 seconds, Fetched: 14 row(s)

全join:

select e.empno, e.enname, e.sal, d.deptno from emp e full join dept d on e.deptno=d.deptno;由于数据的原因,结果同上。

12、导出数据到本地:

insert overwrite local directory '/opt/data/emp' row format delimited fields terminated by '\t' select * from emp;结果:

在本地的路径 /opt/data/emp/:

[root@master emp]# more 000000_0

7499 ALLEN SALESMAN 7698 1981-02-20 1600.0 300.0 30

7521 WARD SALESMAN 7698 1981-02-22 1250.0 500.0 30

7566 JONES MANAGER 7839 1981-04-02 2975.0 0.0 20

7654 MARTIN SALESMAN 7698 1981-09-28 1250.0 1400.0 30

7698 BLAKE MANAGER 7839 1981-05-01 2850.0 0.0 30

7782 CLARK MANAGER 7839 1981-06-09 2450.0 0.0 10

7788 SCOTT ANALYST 7566 1987-07-13 3000.0 0.0 20

7839 KING PRESIDENT \N 1981-11-07 5000.0 0.0 10

7844 TURNER SALESMAN 7698 1981-09-08 1500.0 0.0 30

7876 ADAMS CLERK 7788 1987-07-13 1100.0 0.0 20

7900 JAMES CLERK 7698 1981-12-03 950.0 0.0 30

7902 FORD ANALYST 7566 1981-12-03 3000.0 0.0 20

7934 MILLER CLERK 7782 1982-01-23 1300.0 0.0 10

13 、sort by

先设置reduce的个数:

hive (db_emp)> set mapreduce.job.reduces=3;

hive (db_emp)> set mapreduce.job.reduces;

mapreduce.job.reduces=3

执行sort by:

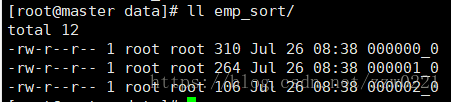

insert overwrite local directory '/opt/data/emp_sort' row format delimited fields terminated by '\t' select * from emp sort by sal;结果:

在本地路径/opt/data/emp_sort下产生3个文件:

3个文件的内容如下:每个reduce都按照sal进行排序

[root@master data]# cd emp_sort/

[root@master emp_sort]# cat 000000_0

7876 ADAMS CLERK 7788 1987-07-13 1100.0 0.0 20

7844 TURNER SALESMAN 7698 1981-09-08 1500.0 0.0 30

7782 CLARK MANAGER 7839 1981-06-09 2450.0 0.0 10

7698 BLAKE MANAGER 7839 1981-05-01 2850.0 0.0 30

7788 SCOTT ANALYST 7566 1987-07-13 3000.0 0.0 20

7839 KING PRESIDENT \N 1981-11-07 5000.0 0.0 10

[root@master emp_sort]# cat 000001_0

7900 JAMES CLERK 7698 1981-12-03 950.0 0.0 30

7521 WARD SALESMAN 7698 1981-02-22 1250.0 500.0 30

7654 MARTIN SALESMAN 7698 1981-09-28 1250.0 1400.0 30

7566 JONES MANAGER 7839 1981-04-02 2975.0 0.0 20

7902 FORD ANALYST 7566 1981-12-03 3000.0 0.0 20

[root@master emp_sort]# cat 000002_0

7934 MILLER CLERK 7782 1982-01-23 1300.0 0.0 10

7499 ALLEN SALESMAN 7698 1981-02-20 1600.0 300.0 30

14、distribute by

insert overwrite local directory '/opt/data/emp_dist' row format delimited fields terminated by '\t' select * from emp distribute by deptno sort by sal;查看本地路径:

数据结果如下:按照deptno进行分组后根据sal进行排序

[root@master data]# cd emp_dist/

[root@master emp_dist]# more 000000_0

7900 JAMES CLERK 7698 1981-12-03 950.0 0.0 30

7521 WARD SALESMAN 7698 1981-02-22 1250.0 500.0 30

7654 MARTIN SALESMAN 7698 1981-09-28 1250.0 1400.0 30

7844 TURNER SALESMAN 7698 1981-09-08 1500.0 0.0 30

7499 ALLEN SALESMAN 7698 1981-02-20 1600.0 300.0 30

7698 BLAKE MANAGER 7839 1981-05-01 2850.0 0.0 30

[root@master emp_dist]# more 000001_0

7934 MILLER CLERK 7782 1982-01-23 1300.0 0.0 10

7782 CLARK MANAGER 7839 1981-06-09 2450.0 0.0 10

7839 KING PRESIDENT \N 1981-11-07 5000.0 0.0 10

[root@master emp_dist]# more 000002_0

7876 ADAMS CLERK 7788 1987-07-13 1100.0 0.0 20

7566 JONES MANAGER 7839 1981-04-02 2975.0 0.0 20

7902 FORD ANALYST 7566 1981-12-03 3000.0 0.0 20

7788 SCOTT ANALYST 7566 1987-07-13 3000.0 0.0 20

15、cluster by

insert overwrite local directory '/opt/data/emp_clu' row format delimited fields terminated by '\t' select * from emp cluster by sal;