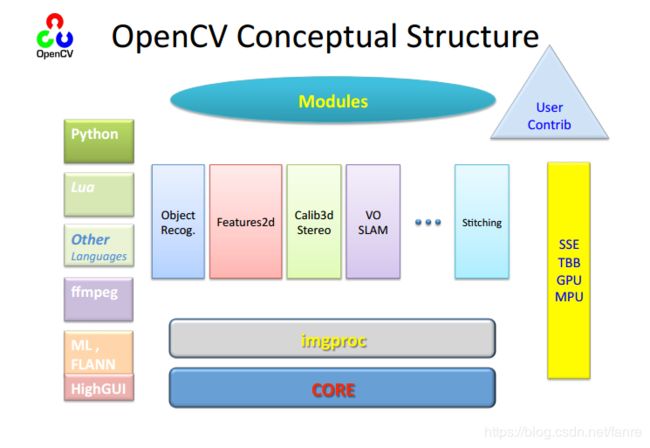

OpenCV Series (1): Image Processing Tutorial

01 - Overview: Introduction to OpenCV and Environment Setup

Core modules:

- HighGUI

- Image Processing

- 2D Feature

- Camera Calibration and 3D reconstruction

- Video Analysis

- Object Detection

- Machine Learning

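- GPU acceleration

if (src.empty()) // stop if the image failed to load

{

return -1;

}

namedWindow("ssss", WINDOW_AUTOSIZE); // WINDOW_AUTOSIZE fits the window to the image; WINDOW_NORMAL lets the user resize the window

imshow("ssss", src);

waitKey(0);

02 - Loading, Modifying, and Saving Images:

cv::imread(); // load an image: file name; load flag (IMREAD_UNCHANGED < 0, IMREAD_GRAYSCALE = 0, IMREAD_COLOR > 0)

cv::cvtColor(); // convert an image: source image; destination image in the new color space; conversion code

cv::imwrite(); // save an image: path and file name (the extension selects the file format); image to save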

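03 - Mask Operations on Matrices

if (!src.data) // check whether the Mat holds any data

{

}

Getting a pointer to the image pixels:

- CV_Assert(myImage.depth() == CV_8U);

- Mat.ptr<uchar>(int i = 0) // returns a pointer to row i of the Mat (rows are counted from 0)

- Get the pointer to the current row: const uchar* current = myImage.ptr<uchar>(row);

- Get the value of pixel P(row, col): p(row, col) = current[col]

Clamping the pixel range: saturate_cast<uchar>(value)

- Clamps value to the range 0 to 255: anything below 0 becomes 0 and anything above 255 becomes 255.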
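The clamping rule can be sketched in plain C++ without OpenCV. The helper name `saturate_to_u8` is hypothetical; it only mirrors what `saturate_cast<uchar>` does for an `int` input.

```cpp
#include <cstdint>

// Hypothetical stand-in for cv::saturate_cast<uchar>(int):
// values below 0 become 0, values above 255 become 255.
inline std::uint8_t saturate_to_u8(int value)
{
    if (value < 0) return 0;
    if (value > 255) return 255;
    return static_cast<std::uint8_t>(value);
}
```

A plain cast such as `static_cast<std::uint8_t>(300)` would wrap around to 44, which is why the mask code clamps instead of casting.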

A matrix mask (also called a kernel) is applied by convolution: each weight is multiplied by the pixel at the corresponding position and the products are summed.

int cols = (src.cols - 1) * src.channels(); // stop one pixel before the right border

int offsetx = src.channels(); // start one pixel after the left border

int rows = src.rows;

Mat dst = Mat::zeros(src.size(), src.type());

for (int row = 1; row < rows - 1; row++)

{

const uchar* previous = src.ptr<uchar>(row - 1);

const uchar* current = src.ptr<uchar>(row);

const uchar* next = src.ptr<uchar>(row + 1);

uchar* output = dst.ptr<uchar>(row);

for (int col = offsetx; col < cols; col++)

{

output[col] = saturate_cast<uchar>(5 * current[col] - (current[col - offsetx] + current[col + offsetx] + previous[col] + next[col])); // 5 * center minus the left, right, upper, and lower neighbors

}

}

double t = getTickCount();

Mat kernel = (Mat_<char>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0);

filter2D(src, dst, src.depth(), kernel); // pass -1 instead of src.depth() if the depth is not known

double timeconsume = (getTickCount() - t) / getTickFrequency();

printf("time consume %.2f", timeconsume);

namedWindow("aaa", CV_WINDOW_AUTOSIZE);

imshow("aaa", dst);

Calling filter2D

- Define the mask: Mat kernel = (Mat_<char>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0);

- filter2D(src, dst, src.depth(), kernel); // src.depth() is the depth of the source image (for example CV_8U); -1 means "same depth as the source"
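To make the arithmetic of the sharpening mask concrete, here is a minimal sketch in plain C++ on a single-channel image stored as nested vectors. The `Gray` alias and the `sharpen` function are hypothetical names for illustration; this is not how `filter2D` is implemented, and border pixels are simply left at 0.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

using Gray = std::vector<std::vector<std::uint8_t>>;  // hypothetical single-channel image

// Applies the mask [0 -1 0; -1 5 -1; 0 -1 0] to every interior pixel.
Gray sharpen(const Gray& src)
{
    assert(!src.empty() && !src[0].empty());
    const int rows = static_cast<int>(src.size());
    const int cols = static_cast<int>(src[0].size());
    Gray dst(rows, std::vector<std::uint8_t>(cols, 0));  // border stays 0
    for (int r = 1; r < rows - 1; ++r) {
        for (int c = 1; c < cols - 1; ++c) {
            const int v = 5 * src[r][c]
                        - (src[r][c - 1] + src[r][c + 1] + src[r - 1][c] + src[r + 1][c]);
            dst[r][c] = static_cast<std::uint8_t>(std::min(std::max(v, 0), 255));  // clamp to 0..255
        }
    }
    return dst;
}
```

On a flat region the result equals the input (5v - 4v = v), while a pixel brighter than its neighbors is pushed further up, which is the sharpening effect.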

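04 - The Mat Object

Mat and IplImage

- Mat: the image data structure introduced in OpenCV 2.0. It is object-oriented and allocates and releases its memory automatically, so there are no manual-management leaks. It has two parts: a header and a data block.

- IplImage: the older C-style data structure. Memory must be allocated and managed by hand, which makes leaks easy.

Mat constructors and common methods

| Constructor | Common method |
| --- | --- |
| Mat() | void copyTo(Mat mat) |
| Mat(int rows, int cols, int type) | void convertTo(Mat dst, int type) |
| Mat(Size size, int type) | Mat clone() |
| Mat(int rows, int cols, int type, const Scalar &s) | int channels() |
| Mat(Size size, int type, const Scalar &s) | int depth() |
| Mat(int ndims, const int *sizes, int type) | bool empty() |
| Mat(int ndims, const int *sizes, int type, const Scalar &s) | uchar* ptr(i=0) |

Using Mat objects

- Partial copy: usually only the Mat header and data pointer are copied, not the pixel data: Mat B(A);

- Full copy: all data is copied: Mat F = A.clone(); Mat G; A.copyTo(G);

Creating Mat objects

- Constructor: cv::Mat M(rows, cols, CV_8UC3, Scalar(0, 0, 255)); // 8U = 8-bit unsigned, C3 = 3 channels, Scalar = initial value

- Multi-dimensional array (cv::Mat::create can also be used): int sz[3] = {2, 2, 2}; Mat L(3, sz, CV_8UC1, Scalar::all(0));

Defining a small array

- Mat C = (Mat_<double>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0);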

05 - Image Operations

- imread: read an image

- imwrite: write an image

Reading and writing pixels

- Scalar intensity = img.at<uchar>(y, x); Scalar intensity = img.at<uchar>(Point(x, y));

- Vec3f intensity = img.at<Vec3f>(y, x); float blue = intensity.val[0]; float green = intensity.val[1]; float red = intensity.val[2];

cvtColor(src, gray, CV_BGR2GRAY);

int height = gray.rows;

int width = gray.cols;

for (int row = 0; row < height; row++)

for (int col = 0; col < width; col++)

{

int v = gray.at<uchar>(row, col);

gray.at<uchar>(row, col) = 255 - v; // invert the gray value

}

Mat dst;

dst.create(src.size(), src.type());

height = src.rows;

width = src.cols;

int nc = src.channels();

for (int row = 0; row < height; row++)

for (int col = 0; col < width; col++)

{

if (nc == 1)

{

int v = src.at<uchar>(row, col);

dst.at<uchar>(row, col) = 255 - v;

} else if (nc == 3)

{

int b = src.at<Vec3b>(row, col)[0];

int g = src.at<Vec3b>(row, col)[1];

int r = src.at<Vec3b>(row, col)[2];

dst.at<Vec3b>(row, col)[0] = 255 - b;

dst.at<Vec3b>(row, col)[1] = 255 - g;

dst.at<Vec3b>(row, col)[2] = 255 - r;

gray.at<uchar>(row, col) = max(r, max(b, g)); // grayscale from the largest channel

}

}

bitwise_not(src, dst); // whole-image operation that inverts every pixel

Modifying pixel values

- Grayscale image: img.at<uchar>(y, x) = 128;

- Three-channel (BGR) image: img.at<Vec3b>(y, x)[0] = 128 (blue); img.at<Vec3b>(y, x)[1] = 128 (green); img.at<Vec3b>(y, x)[2] = 128 (red);

- Blank image: img = Scalar(0);

- ROI selection: Rect r(10, 10, 100, 100); Mat smallimg = img(r);

Vec3b and Vec3f

- Vec3b: three uchar values, typically a BGR pixel

- Vec3f: three float values

- Convert CV_8UC1 to CV_32FC1: src.convertTo(dst, CV_32F);

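06 - Image Blending

Linear blending: $dst(I) = \alpha \cdot src_1(I) + (1 - \alpha) \cdot src_2(I)$, with $0 \le \alpha \le 1$.

void cv::addWeighted(InputArray src1,

double alpha,

InputArray src2,

double beta,

double gamma,

OutputArray dst,

int dtype = -1

)

dst(I) = saturate(src1(I) * alpha + src2(I) * beta + gamma)

double alpha = 0.5;

if (src1.rows == src2.rows && src1.cols == src2.cols && src1.type() == src2.type())

{

addWeighted(src1, alpha, src2, (1.0 - alpha), 0.0, dst); // weighted blend

add(src1, src2, dst, Mat()); // alternative: plain per-pixel sum

multiply(src1, src2, dst, 1.0); // alternative: per-pixel product

}

07 - Adjusting Image Brightness and Contrast

- Pixel transforms: point operations

- Neighborhood operations: region-based

Adjusting brightness and contrast is a pixel transform (a point operation):

$g(i, j) = \alpha \cdot f(i, j) + \beta$, where $\alpha$ controls contrast and $\beta$ controls brightness.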

int height = src.rows;

int width = src.cols;

dst = Mat::zeros(src.size(), src.type());

float alpha = 1.2f; // contrast gain

float beta = 30; // brightness offset

Mat m1;

src.convertTo(m1, CV_32F);

int nc = src.channels();

for (int row = 0; row < height; row++)

for (int col = 0; col < width; col++)

{

if (nc == 1)

{

float v = m1.at<float>(row, col);

dst.at<uchar>(row, col) = saturate_cast<uchar>(v * alpha + beta);

} else if (nc == 3)

{

float b = m1.at<Vec3f>(row, col)[0];

float g = m1.at<Vec3f>(row, col)[1];

float r = m1.at<Vec3f>(row, col)[2];

dst.at<Vec3b>(row, col)[0] = saturate_cast<uchar>(b * alpha + beta);

dst.at<Vec3b>(row, col)[1] = saturate_cast<uchar>(g * alpha + beta);

dst.at<Vec3b>(row, col)[2] = saturate_cast<uchar>(r * alpha + beta);

}

}

08 - Drawing Shapes and Text

Using cv::Point and cv::Scalar

- Point p; p.x = 10; p.y = 8; or p = Point(10, 8); // x is the horizontal coordinate, y the vertical one

- Scalar(b, g, r)

Drawing basic shapes: lines, rectangles, circles, ellipses

- Line: cv::line (line types LINE_4, LINE_8, LINE_AA; LINE_AA is anti-aliased)

- Ellipse: cv::ellipse

- Rectangle: cv::rectangle

- Circle: cv::circle

- Filled polygon: cv::fillPoly

/// line

Point p1 = Point(20, 30);

Point p2;

p2.x = 300;

p2.y = 300;

Scalar color = Scalar(0, 0, 255);

line(bgImage, p1, p2, color, 1, LINE_8);

/// rectangle

Rect rect = Rect(200, 100, 300, 300);

Scalar color = Scalar(255, 0, 0);

rectangle(bgImage, rect, color, 2, LINE_8);

/// ellipse

Scalar color = Scalar(0, 255, 0);

ellipse(bgImage, Point(bgImage.cols / 2, bgImage.rows / 2), Size(bgImage.cols / 4, bgImage.rows / 8), 90, 0, 360, color, 2, LINE_8); // image, ellipse center, Size(axes), rotation angle, arc range (0 to 360 degrees), color, line width, line type

/// circle

Scalar color = Scalar(0, 255, 255);

Point center = Point(bgImage.cols / 2, bgImage.rows / 2);

circle(bgImage, center, 150, color, 2, 8);

/// polygon

Point pts[1][5];

pts[0][0] = Point(100 ,100);

pts[0][1] = Point(100 ,200);

pts[0][2] = Point(200 ,200);

pts[0][3] = Point(200 ,100);

pts[0][4] = Point(100 ,100);

const Point* ppts[] = {pts[0]};

int npt[] = {5};

Scalar color = Scalar(255, 12, 255);

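fillPoly(bgImage, ppts, npt, 1, color, 8); // image, point arrays, number of points per polygon, number of polygons, color, line type

/// text

putText(bgImage, "Hello Opencv", Point(300, 300), CV_FONT_HERSHEY_COMPLEX, 1.0, Scalar(12, 255, 200), 1, 8); // the second-to-last argument sets the stroke thickness

// draw lines in random colors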

RNG rng(12345);

Point pt1;

Point pt2;

Mat bg = Mat::zeros(bgImage.size(), bgImage.type());

for(int i = 0; i < 100000; i++)

{

pt1.x = rng.uniform(0, bgImage.cols);

pt2.x = rng.uniform(0, bgImage.cols);

pt1.y = rng.uniform(0, bgImage.rows);

pt2.y = rng.uniform(0, bgImage.rows);

Scalar color = Scalar(rng.uniform(0,255),rng.uniform(0,255),rng.uniform(0,255));

line(bg, pt1, pt2, color, 1, LINE_8); // use the random endpoints pt1 and pt2 and draw on the blank canvas bg

}

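09 - Blurring Images (Part 1)

Blur principle

Related functions

- Mean blur: blur(Mat src, Mat dst, Size(xradius, yradius), Point(-1, -1)); // Point(-1, -1) puts the anchor at the kernel center

- Gaussian blur: GaussianBlur(Mat src, Mat dst, Size(11, 11), sigmax, sigmay); in Size(x, y), x and y must be positive odd numbers.

blur(src, dst, Size(3, 3), Point(-1, -1)); // kernel size in x and y

GaussianBlur(src, dst, Size(5, 5), 11, 11); // sigma in x, sigma in y

10 - Blurring Images (Part 2)

Median filter

- An order-statistic filter

- Very effective against salt-and-pepper noise (it discards extreme values)

Bilateral filter

- Mean blur cannot avoid losing edge information, because it gives every pixel in the window the same weight.

- Gaussian blur reduces this problem but cannot avoid it completely, because it ignores differences in pixel value.

- The Gaussian bilateral filter is edge-preserving: it avoids losing edge information and keeps the outlines in the image.

It is mainly used for skin-smoothing (beautification) effects.

Spatial kernel: weights by distance in the spatial domain.

Range kernel: weights by difference in pixel value; large differences are kept, so edges survive.

Related API

- Median blur: medianBlur(Mat src, Mat dst, ksize); // ksize must be an odd number greater than 1

- Bilateral blur: bilateralFilter(src, dst, d = 15, 150, 3); // 15: diameter of the pixel neighborhood; if it is not positive it is computed from sigmaSpace. 150: sigmaColor, how large a value difference is still blended. 3: sigmaSpace; it has no effect on the neighborhood size when d > 0, otherwise d is derived from it.

medianBlur(src, dst, 3);

bilateralFilter(src, dst, 15, 100, 3); // lowering sigmaColor (100 here) preserves more edge information

GaussianBlur(src, dst, Size(15, 15), 3, 3);

Mat kernel = (Mat_<int>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0);

filter2D(src, dst, -1, kernel, Point(-1, -1), 0);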

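11 - Dilation and Erosion

Morphology operators: dilation

- Shape-based operations, built on mathematical morphology and set theory

- Erosion, dilation, opening, closing

- Dilation replaces the anchor (center) pixel with the maximum pixel under the kernel, so dark objects on a white background get thinner.

Morphology operators: erosion

- Erosion replaces the anchor (center) pixel with the minimum pixel under the kernel, so dark objects on a white background get thicker.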
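The max/min rule can be sketched in plain C++ with a fixed 3x3 rectangular structuring element. The `Gray` alias and `morph3x3` are hypothetical names; the window is simply clipped at the image border, which is an assumption of this sketch and not necessarily how `dilate` and `erode` treat borders.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

using Gray = std::vector<std::vector<std::uint8_t>>;  // hypothetical single-channel image

// take_max == true  -> dilation (maximum under the 3x3 window)
// take_max == false -> erosion  (minimum under the 3x3 window)
Gray morph3x3(const Gray& src, bool take_max)
{
    assert(!src.empty() && !src[0].empty());
    const int rows = static_cast<int>(src.size());
    const int cols = static_cast<int>(src[0].size());
    Gray dst(rows, std::vector<std::uint8_t>(cols, 0));
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            std::uint8_t best = src[r][c];
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    const int rr = r + dr, cc = c + dc;
                    if (rr < 0 || rr >= rows || cc < 0 || cc >= cols) continue;  // clip at the border
                    best = take_max ? std::max(best, src[rr][cc]) : std::min(best, src[rr][cc]);
                }
            }
            dst[r][c] = best;
        }
    }
    return dst;
}
```

With a single white pixel on a black 3x3 image, dilation turns every pixel white and erosion turns every pixel black.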

Related API

- getStructuringElement(int shape, Size ksize, Point anchor): shape (MORPH_RECT, MORPH_CROSS, or MORPH_ELLIPSE); size (odd); anchor, default Point(-1, -1), meaning the center pixel

- dilate(src, dst, kernel);

- erode(src, dst, kernel);

Adjusting the structuring element size dynamically

- Trackbar: createTrackbar(const String& trackbarname, const String& winname, int* value, int count, TrackbarCallback onChange, void* userdata = 0); // always supply the callback

Mat src, dst;

char OUTPUT_WIN[] = "output image";

int element_size = 3;

int max_size = 21;

void CallBack_Demo(int, void*);

int main(int argc, char** argv) {

src = imread("ss");

if (!src.data) {

printf("xx");

return -1;

}

namedWindow(OUTPUT_WIN, CV_WINDOW_AUTOSIZE); // the trackbar needs an existing window

createTrackbar("Element Size:", OUTPUT_WIN, &element_size, max_size, CallBack_Demo);

CallBack_Demo(0, 0);

waitKey(0);

return 0;

}

void CallBack_Demo(int, void*)

{

int s = element_size * 2 + 1; // keep the size odd

Mat structureElement = getStructuringElement(MORPH_RECT, Size(s, s), Point(-1, -1));

// dilate(src, dst, structureElement, Point(-1, -1), 1); // alternative: dilation

erode(src, dst, structureElement);

imshow(OUTPUT_WIN, dst);

return;

}

This removes the small dots in the image.

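12 - Morphological Operations

- Opening (open)

- Closing (close)

- Morphological gradient

- Top hat

- Black hat

The choice of structuring element matters a great deal.

Opening (open)

- Erosion followed by dilation

- With a black background and white foreground, it removes small objects

Related API

- morphologyEx(src, dst, CV_MOP_BLACKHAT, kernel); the operation is one of CV_MOP_OPEN, CV_MOP_CLOSE, CV_MOP_GRADIENT, CV_MOP_TOPHAT, CV_MOP_BLACKHAT; the optional iterations argument defaults to 1

Mat kernel = getStructuringElement(MORPH_RECT, Size(11, 11), Point(-1, -1));

morphologyEx(src, dst, CV_MOP_OPEN, kernel);

Closing (close)

- Dilation followed by erosion (bin2)

- With a white foreground and black background, it fills small holes

Mat kernel = getStructuringElement(MORPH_RECT, Size(11, 11), Point(-1, -1));

morphologyEx(src, dst, CV_MOP_CLOSE, kernel);

Morphological gradient

- Dilation minus erosion

- This is the basic gradient (there are also the internal gradient, original minus erosion, and the directional gradient along X and Y)

Mat kernel = getStructuringElement(MORPH_RECT, Size(11, 11), Point(-1, -1));

morphologyEx(src, dst, CV_MOP_GRADIENT, kernel);

Top hat

- The difference between the original image and its opening

- Keeps the small structures

Mat kernel = getStructuringElement(MORPH_RECT, Size(11, 11), Point(-1, -1));

morphologyEx(src, dst, CV_MOP_TOPHAT, kernel);

Black hat

- The difference between the closing and the original image

- Reveals the holes

Mat kernel = getStructuringElement(MORPH_RECT, Size(11, 11), Point(-1, -1));

morphologyEx(src, dst, CV_MOP_BLACKHAT, kernel);

13 - Morphology Application: Extracting Horizontal and Vertical Lines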

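Principle

A custom structuring element makes the operation sensitive to some objects in the input image and insensitive to others. Sensitive objects are changed, while insensitive ones pass through to the output. Applying the basic morphological operations, dilation and erosion, with different structuring elements therefore yields the desired result.

- Dilation: maximum

- Erosion: minimum

Structuring elements

- Any shape can be used

- Rectangle, circle, line, disk, brick

Extraction steps

- Load the image: imread

- Convert to grayscale: cvtColor

- Binarize: adaptiveThreshold

- Define the structuring element

- Opening (erosion, then dilation): extract the horizontal and vertical lines

src = imread();

cvtColor(src, gray, CV_BGR2GRAY);

adaptiveThreshold(~gray, binImg, 255, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY, 15, -2); // threshold the inverted gray image so that dark lines become white foreground

Mat hline = getStructuringElement(MORPH_RECT, Size(src.cols / 16, 1), Point(-1, -1));

Mat vline = getStructuringElement(MORPH_RECT, Size(1, src.rows / 16), Point(-1, -1));

erode(binImg, temp, hline); // horizontal lines: erosion followed by dilation

dilate(temp, dst, hline);

morphologyEx(binImg, dst, CV_MOP_OPEN, vline); // vertical lines in a single call

erode(binImg, temp, vline); // the same opening written as two steps

dilate(temp, dst, vline);

bitwise_not(dst, dst);

blur(dst, dst, Size(3, 3), Point(-1, -1));

//////////////////////

Mat kernel = getStructuringElement(MORPH_RECT, Size(5, 5), Point(-1, -1));

Converting to a binary image: adaptiveThreshold

adaptiveThreshold(

Mat src, // input grayscale image

Mat dst, // output binary image

double maxValue, // maximum value of the binary image

int adaptiveMethod, // adaptive method: ADAPTIVE_THRESH_MEAN_C or ADAPTIVE_THRESH_GAUSSIAN_C

int thresholdType, // threshold type

int blockSize, // block size

double C // constant C; may be positive, zero, or negative

)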

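14 - Image Pyramids: Upsampling and Downsampling

Image pyramid concepts

- The bottom level has the highest resolution; each level above it is smaller.

- Pyramids are used to resize an image: zoom in (enlarge) or zoom out (shrink).

- A pyramid is a series of images at different resolutions in which the features are preserved (unlike plain interpolation or resizing).

- Gaussian pyramid: used to downsample an image

- Laplacian pyramid: used to reconstruct an image from the downsampled image of the level above

Image pyramid concepts: the Gaussian pyramid

- A Gaussian pyramid is built from the bottom up by downsampling level by level.

- After downsampling, an M*N image becomes M/2 * N/2: the even rows and columns are removed, which gives the image of the next level up.

- Each level is generated in two steps: apply a Gaussian blur to the current level, then remove its even rows and columns. Each level therefore has 1/4 the area of the level below.

Difference of Gaussians (DoG)

- Blur the same image with two different sets of Gaussian parameters and subtract one result from the other; the output image is the Difference of Gaussians (DoG).

- The DoG is an intrinsic feature of the image and is widely used in grayscale image enhancement and corner detection.

Sampling API

- Upsampling (cv::pyrUp), zoom in: pyrUp(Mat src, Mat dst, Size(src.cols * 2, src.rows * 2)); // doubles the width and the height

- Downsampling (cv::pyrDown), zoom out: pyrDown(Mat src, Mat dst, Size(src.cols / 2, src.rows / 2)); // halves the width and the height

pyrUp(src, dst, Size(src.cols * 2, src.rows * 2));

pyrDown(src, dst, Size(src.cols / 2, src.rows / 2));

GaussianBlur(gray, gauss1, Size(3, 3), 0, 0);

GaussianBlur(gauss1, gauss2, Size(3, 3), 0, 0);

subtract(gauss1, gauss2, dogImg); // less-blurred image minus more-blurred image

normalize(dogImg, dogImg, 255, 0, NORM_MINMAX);

15 - Basic Thresholding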

Image thresholding (threshold)

- Binary segmentation.

Threshold type: binary (threshold binary)

Threshold type: truncate (truncate)

Threshold type: threshold to zero

Threshold type: threshold to zero, inverted
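The threshold types are easiest to compare per pixel. The sketch below is plain C++ with a hypothetical `ThreshType` enum and `apply_threshold` function; it follows the usual "greater than the threshold" convention and covers the binary-inverted type as well.

```cpp
#include <cstdint>

// Hypothetical names for the five basic threshold types.
enum class ThreshType { Binary, BinaryInv, Trunc, ToZero, ToZeroInv };

// Applies one threshold type to a single 8-bit pixel value v.
std::uint8_t apply_threshold(std::uint8_t v, std::uint8_t thresh,
                             std::uint8_t maxval, ThreshType type)
{
    const bool above = v > thresh;
    switch (type) {
        case ThreshType::Binary:    return above ? maxval : 0;   // above -> maxval, else 0
        case ThreshType::BinaryInv: return above ? 0 : maxval;   // the opposite of Binary
        case ThreshType::Trunc:     return above ? thresh : v;   // cap at the threshold
        case ThreshType::ToZero:    return above ? v : 0;        // keep only values above
        case ThreshType::ToZeroInv: return above ? 0 : v;        // keep only values not above
    }
    return v;
}
```

For a threshold of 127, a pixel of 200 becomes 255 (binary), 127 (truncate), or stays 200 (to zero), while a pixel of 100 becomes 0 (binary), stays 100 (truncate), or becomes 0 (to zero).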

#include <opencv2/opencv.hpp>

#include <cstdio>

using namespace cv;

Mat src, gray_src, dst;

int threshold_value = 127;

int threshold_max = 255;

int type_value = 2;

int type_max = 4; // the five basic types are numbered 0 to 4

const char* output_title = "binary image";

void Threshold_Demo(int, void*);

int main(int argc, char** argv)

{

src = imread("d://");

if (!src.data)

{

printf("aaa");

return -1;

}

namedWindow("input image", CV_WINDOW_AUTOSIZE);

namedWindow(output_title, CV_WINDOW_AUTOSIZE);

imshow("input image", src);

createTrackbar("Threshold Value:", output_title, &threshold_value, threshold_max, Threshold_Demo);

createTrackbar("Type Value:", output_title, &type_value, type_max, Threshold_Demo);

Threshold_Demo(0, 0);

waitKey(0);

return 0;

}

void Threshold_Demo(int, void*)

{

cvtColor(src, gray_src, CV_BGR2GRAY);

printf("%d", THRESH_BINARY);

printf("%d", THRESH_BINARY_INV);

printf("%d", CV_THRESH_TOZERO);

printf("%d", CV_THRESH_TOZERO_INV);

printf("%d", THRESH_TRUNC);

threshold(gray_src, dst, threshold_value, threshold_max, type_value); // manual threshold

// threshold(gray_src, dst, 0, 255, THRESH_OTSU | type_value); // alternative: threshold chosen by Otsu's method

// threshold(gray_src, dst, 0, 255, THRESH_TRIANGLE | type_value); // alternative: threshold chosen by the triangle method

imshow(output_title, dst);

}

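16 - Custom Linear Filters

Convolution concepts

- Convolution is the operation a kernel performs at every pixel.

- A kernel is essentially a fixed-size matrix whose center is the anchor point.

How convolution works

Common operators

Robert operator

X direction:

| 1 | 0 |
| 0 | -1 |

Y direction:

| 0 | 1 |
| -1 | 0 |

Sobel operator

X direction:

| -1 | 0 | 1 |
| -2 | 0 | 2 |
| -1 | 0 | 1 |

Y direction:

| -1 | -2 | -1 |
| 0 | 0 | 0 |
| 1 | 2 | 1 |

Laplacian operator

| 0 | -1 | 0 |
| -1 | 4 | -1 |
| 0 | -1 | 0 |

// Robert, X direction

Mat kernel_x = (Mat_<int>(2, 2) << 1, 0, 0, -1);

filter2D(src, dst, -1, kernel_x, Point(-1, -1), 0.0);

// Robert, Y direction

Mat kernel_y = (Mat_<int>(2, 2) << 0, 1, -1, 0);

filter2D(src, dst, -1, kernel_y, Point(-1, -1), 0.0);

// Sobel, X direction

Mat kernel_x = (Mat_<int>(3, 3) << -1, 0, 1, -2, 0, 2, -1, 0, 1);

filter2D(src, dst, -1, kernel_x, Point(-1, -1), 0.0);

// Sobel, Y direction

Mat kernel_y = (Mat_<int>(3, 3) << -1, -2, -1, 0, 0, 0, 1, 2, 1);

filter2D(src, dst, -1, kernel_y, Point(-1, -1), 0.0);

// Laplacian: responds to differences in all directions

Mat kernel = (Mat_<int>(3, 3) << 0, -1, 0, -1, 4, -1, 0, -1, 0);

filter2D(src, dst, -1, kernel, Point(-1, -1), 0.0);

Custom blur by convolution

filter2D(

Mat src, // input image

Mat dst, // output (blurred) image

int depth, // output depth; -1 keeps the depth of the source

Mat kernel, // convolution kernel (template)

Point anchor, // anchor position

double delta // value added to every computed pixel

)

int c = 0;

int index = 0;

int ksize = 3;

while (true) {

c = waitKey(500);

if ((char)c == 27) { // ESC

break;

}

ksize = 4 + (index % 5) * 2 + 1; // cycles through 5, 7, 9, 11, 13

Mat kernel = Mat::ones(Size(ksize, ksize), CV_32F) / (float)(ksize * ksize); // normalized box kernel

filter2D(src, dst, -1, kernel, Point(-1, -1));

index++;

}

17 - Handling Borders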

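The border problem in convolution

- During convolution, the pixels on the image border cannot be processed, because the kernel does not fully overlap the image there.

Handling borders

Border pixels are added before the convolution, filled with 0 or with an RGB value, and removed again afterwards. The default method is BORDER_DEFAULT; other options are:

- BORDER_CONSTANT: fill the border with a specified pixel value

- BORDER_REPLICATE: fill the border by repeating the known edge pixels, similar to interpolation

- BORDER_WRAP: fill the border with pixels from the opposite side, like wrapping a line of text

API for adding a border to an image

copyMakeBorder(

Mat src, // input image

Mat dst, // output image with the border added

int top, // border width; the four sides usually use the same value

int bottom,

int left,

int right,

int borderType, // border type

Scalar value // border color

)

int top = (int)(0.05 * src.rows);

int bottom = (int)(0.05 * src.rows);

int left = (int)(0.05 * src.cols);

int right = (int)(0.05 * src.cols);

RNG rng(12345);

int borderType = BORDER_DEFAULT;

int c = 0;

while (true) {

c = waitKey(500);

if ((char)c == 27) { // ESC

break;

}

if ((char)c == 'r') {

borderType = BORDER_REPLICATE;

} else if ((char)c == 'v') {

borderType = BORDER_WRAP;

} else if ((char)c == 'C') {

borderType = BORDER_CONSTANT;

} else {

borderType = BORDER_DEFAULT;

}

Scalar color = Scalar(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255));

copyMakeBorder(src, dst, top, bottom, left, right, borderType, color);

}

///

GaussianBlur(src, dst, Size(5, 5), 0, 0, BORDER_DEFAULT);

18 - The Sobel Operator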

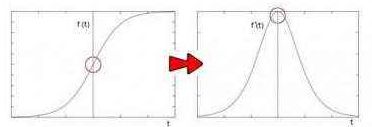

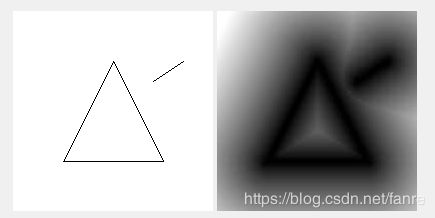

卷积的应用-图像边缘提取

图形变化比较大,求导数,最高点就是变化最大的地方。

- 边缘是像素值发生跃迁的地方,是图像的显著特征之一,在图像特征提取、对象检测、模式识别等方面都有重要的作用。

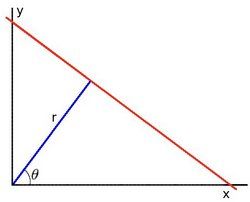

- 通过求它的一阶导数

,delta越大,说应在x方向上变化越大,边缘信号越强。

,delta越大,说应在x方向上变化越大,边缘信号越强。

Sobel算子

- 是离散微分算子(discrete differentitation operator)用来计算图像灰度的近似梯度

- Sobel算子功能集合高斯平滑和微分求导

- 又被称为一阶微分算子,求导算子,在水平和垂直两个方向上求导,得到图像x方向与y方向梯度图像。

- 水平梯度

垂直梯度

垂直梯度 ,通过权重2或者-2控制差异

,通过权重2或者-2控制差异 - 最终图像梯度

用近似

用近似 加速。减少浮点数运算

加速。减少浮点数运算

求导近似值在kernel=3时不是很准确,容易收到干扰,Opencv使用改进版本Scharr函数,算子如下: 、

、
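As a worked example of the Sobel arithmetic, the sketch below evaluates the standard 3x3 Sobel kernels at one pixel and combines them with the |Gx| + |Gy| approximation. It is plain C++; the `Gray` alias, the `Gradient` struct, and `sobel_at` are hypothetical names, and no smoothing or border handling is included.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

using Gray = std::vector<std::vector<int>>;  // hypothetical single-channel image

struct Gradient { int gx; int gy; int magnitude; };

// Evaluates the 3x3 Sobel kernels at the interior pixel (r, c).
Gradient sobel_at(const Gray& img, int r, int c)
{
    assert(r >= 1 && c >= 1);
    assert(r + 1 < static_cast<int>(img.size()) && c + 1 < static_cast<int>(img[0].size()));
    static const int kx[3][3] = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}};
    static const int ky[3][3] = {{-1, -2, -1}, {0, 0, 0}, {1, 2, 1}};
    int gx = 0, gy = 0;
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            const int p = img[r + i - 1][c + j - 1];
            gx += kx[i][j] * p;
            gy += ky[i][j] * p;
        }
    }
    // |Gx| + |Gy| approximates sqrt(Gx^2 + Gy^2) without floating point.
    return Gradient{gx, gy, std::abs(gx) + std::abs(gy)};
}
```

For a vertical edge, where every row is `{0, 0, 10}`, the x gradient at the center is 10 + 20 + 10 = 40 and the y gradient is 0.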

API: cv::Sobel

cv::Sobel(

InputArray src, // input image

OutputArray dst, // output image, same size as the input

int depth, // depth of the output image

int dx, // order of the derivative in x

int dy, // order of the derivative in y

int ksize, // Sobel kernel size; must be 1, 3, 5, or 7

double scale = 1,

double delta = 0,

int borderType = BORDER_DEFAULT

)

API: cv::Scharr, an improved variant of Sobel

cv::Scharr(

InputArray src, // input image

OutputArray dst, // output image, same size as the input

int depth, // depth of the output image

int dx, // order of the derivative in x

int dy, // order of the derivative in y

double scale = 1,

double delta = 0,

int borderType = BORDER_DEFAULT

)

- Gaussian smoothing

- Convert to grayscale

- Compute the X and Y gradients

- Blend the X and Y gradient magnitude images

GaussianBlur(src, dst, Size(3, 3), 0, 0);

cvtColor(dst, gray_src, CV_BGR2GRAY);

Mat xgrad, ygrad;

Sobel(gray_src, xgrad, CV_16S, 1, 0, 3); // 1: first derivative in x; 0: no derivative in y

Sobel(gray_src, ygrad, CV_16S, 0, 1, 3);

convertScaleAbs(xgrad, xgrad);

convertScaleAbs(ygrad, ygrad);

//

// Sobel(gray_src, xgrad, -1, 1, 0, 3); // with an 8-bit output, detail is lost because out-of-range values are truncated

// Sobel(gray_src, ygrad, -1, 0, 1, 3);

Mat xygrad = Mat(xgrad.size(), xgrad.type());

addWeighted(xgrad, 0.5, ygrad, 0.5, 0, xygrad); // option 1: weighted blend

int height = xgrad.rows;

int width = xgrad.cols;

for (int row = 0; row < height; row++)

for (int col = 0; col < width; col++)

{

int xg = xgrad.at<uchar>(row, col);

int yg = ygrad.at<uchar>(row, col);

int xyg = xg + yg;

xygrad.at<uchar>(row, col) = saturate_cast<uchar>(xyg); // option 2: |Gx| + |Gy|, clamped to 255

}

Scharr(gray_src, xgrad, CV_16S, 1, 0); // the output depth should be larger than the input depth; Scharr is more robust to noise at edges

Scharr(gray_src, ygrad, CV_16S, 0, 1);

19 - The Laplacian Operator

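Theory

At the point of greatest change the second derivative is zero, so an edge shows up as a zero crossing. Based on this, edges can be extracted by computing the second derivative of the image.

The Laplacian operator

Processing steps

- Gaussian blur to remove noise: GaussianBlur()

- Convert to grayscale: cvtColor()

- Laplacian, the second-derivative computation: Laplacian()

- Take the absolute value: convertScaleAbs()

- Display

src = imread("");

if (!src.data) {

printf("");

}

GaussianBlur(src, dst, Size(3, 3), 0, 0);

cvtColor(dst, gray_src, CV_BGR2GRAY);

Laplacian(gray_src, edge_image, CV_16S, 3);

convertScaleAbs(edge_image, edge_image);

threshold(edge_image, edge_image, 0, 255, THRESH_OTSU | THRESH_BINARY);

20 - Canny Edge Detection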

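Introduction to the Canny algorithm

- An edge detection algorithm, proposed in 1986

The five steps of the Canny algorithm in cv::Canny

- Gaussian blur to remove noise: GaussianBlur

- Grayscale conversion: cvtColor

- Gradient computation: Sobel/Scharr

- Non-maximum suppression: discard non-edge responses, comparing along the tangent and normal directions

- Output a binary image using high and low thresholds: edge pixels above the high threshold are kept, those below the low threshold are dropped, and those in between are kept only if they connect to an edge pixel

Canny algorithm: non-maximum suppression

Canny algorithm: binary output with high and low thresholds

- Pixels above the high threshold T2 are kept, pixels below the low threshold T1 are dropped, and pixels in between are kept only if they connect to an edge pixel

- The recommended ratio is T2:T1 = 3:1 or 2:1, where T2 is the high threshold and T1 the low threshold.

API: cv::Canny

Canny(

InputArray src, // input image; must be 8-bit

OutputArray edges, // output edge image, normally binary with a black background

double threshold1, // low threshold, usually 1/2 or 1/3 of the high threshold

double threshold2, // high threshold

int apertureSize, // Sobel kernel size; usually 3 (3x3)

bool L2gradient // true: use the L2 norm (square root of the sum of squares); false: use the L1 norm (sum of absolute values)

)

int t1_value = 50;

int max_value = 255;

const char* OUTPUT_TITLE = "ddd";

void Canny_Demo(int, void*);

Mat gray_src;

cvtColor(src, gray_src, CV_BGR2GRAY);

createTrackbar("sss", OUTPUT_TITLE, &t1_value, max_value, Canny_Demo);

Canny_Demo(0, 0);

void Canny_Demo(int, void*)

{

Mat edge_output;

blur(gray_src, gray_src, Size(3, 3), Point(-1, -1), BORDER_DEFAULT);

Canny(gray_src, edge_output, t1_value, t1_value * 2, 3, false); // high threshold = 2 * low threshold

dst.create(src.size(), src.type());

dst = Scalar::all(0);

src.copyTo(dst, edge_output); // copy only the pixels that lie on an edge

}

21 - Hough Transform: Lines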

霍夫变换介绍

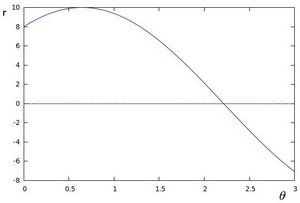

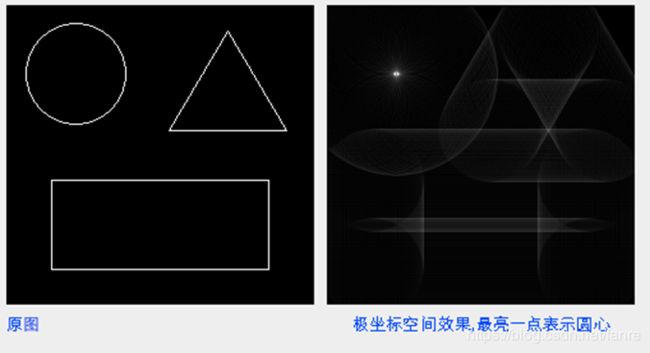

平面空间到霍夫空间

![]()

![]() 在x0&y0不变的情况下,每给定一个\theta就内确定一个r_\theta。

在x0&y0不变的情况下,每给定一个\theta就内确定一个r_\theta。

\theta有[0,360°],绘制曲线。

如果在x1,y1也绘制一条这样的曲线,发现多条曲线交于一点,标明这些点构成一条直线。

根据r和\theta获得x,y。

在霍夫空间中,如果每相交一次,灰度值加1,则在霍夫空间中相交的部分为亮的部分。

- 对于任意直线上的点来说,

- 变换到极坐标空间,在[0,360°]得到r的大小,

- 属于同一直线上的点,在极坐标空间,(r,\theta)必然在一个点上面有最强的信号出现,

- 根据此反算到平面坐标中就可以得到直线上各点的像素坐标,从而得到直线。

平面坐标->霍夫空间(极坐标)->平面坐标 :极坐标图很亮的点
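The voting idea can be sketched in plain C++: every point votes for all (theta, rho) cells it could lie on, and the cell with the most votes is the strongest line. `HoughLine` and `strongest_line` are hypothetical names. This sketch scans theta over [0°, 180°) in 1° steps and allows negative rho, which covers the same set of lines as a full 360° sweep; it is an illustration, not the implementation behind `cv::HoughLines`.

```cpp
#include <cassert>
#include <cmath>
#include <cstdlib>
#include <utility>
#include <vector>

struct HoughLine { int theta_deg; int rho; int votes; };

// points: (x, y) pixel coordinates; rho_max: largest |rho| the accumulator can hold.
HoughLine strongest_line(const std::vector<std::pair<int, int>>& points, int rho_max)
{
    assert(rho_max > 0);
    const double pi = std::acos(-1.0);
    // acc[theta][rho + rho_max] counts the curves passing through that cell.
    std::vector<std::vector<int>> acc(180, std::vector<int>(2 * rho_max + 1, 0));
    for (const auto& p : points) {
        for (int t = 0; t < 180; ++t) {
            const double a = t * pi / 180.0;
            const int rho = static_cast<int>(
                std::lround(p.first * std::cos(a) + p.second * std::sin(a)));
            if (std::abs(rho) <= rho_max) ++acc[t][rho + rho_max];
        }
    }
    HoughLine best{0, 0, 0};
    for (int t = 0; t < 180; ++t)
        for (int i = 0; i <= 2 * rho_max; ++i)
            if (acc[t][i] > best.votes) best = HoughLine{t, i - rho_max, acc[t][i]};
    return best;
}
```

For 100 points on the horizontal line y = 5, all votes meet in the cell theta = 90°, rho = 5.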

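Related API

- Standard Hough transform, cv::HoughLines: converts from image coordinates to Hough space and outputs (\theta, r_\theta) pairs in polar space

- Probabilistic Hough line transform, cv::HoughLinesP: outputs the two endpoints of each line segment, (x_0, y_0, x_1, y_1)

cv::HoughLines(

InputArray src, // input image; must be an 8-bit grayscale image

OutputArray lines, // output lines in polar form

double rho, // distance step in pixels when building the polar accumulator

double theta, // angle step when building the polar accumulator; usually CV_PI/180

int threshold, // only polar cells with enough intersections (votes) count as lines

double srn = 0, // for the multi-scale Hough transform; 0 selects the classical transform

double stn = 0, // for the multi-scale Hough transform; 0 selects the classical transform

double min_theta = 0, // angle scan range, 0 to 180 degrees; the defaults are fine

double max_theta = CV_PI

)

cv::HoughLinesP(

InputArray src, // input image; must be an 8-bit grayscale image

OutputArray lines, // output line segments as endpoint pairs

double rho, // distance step in pixels when building the polar accumulator

double theta, // angle step when building the polar accumulator; usually CV_PI/180

int threshold, // only polar cells with enough intersections (votes) count as lines

double minLineLength = 0, // minimum line length

double maxLineGap = 0 // maximum gap between points on the same line

)

Canny(src, src_gray, 100, 200); // low and high thresholds

cvtColor(src_gray, dst, CV_GRAY2BGR);

vector<Vec4i> plines;

HoughLinesP(src_gray, plines, 1, CV_PI / 180.0, 10, 0, 10);

Scalar color = Scalar(0, 0, 255);

for (size_t i = 0; i < plines.size(); i++) // the loop body was lost in the source; drawing each segment is the assumed intent

{

Vec4i hline = plines[i];

line(dst, Point(hline[0], hline[1]), Point(hline[2], hline[3]), color, 3, LINE_AA);

}

22 - Hough Circle Detection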

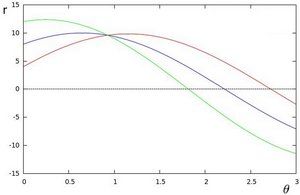

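How Hough circle detection works

A circle is fully described by its center coordinates and its radius.

In polar form with a fixed radius, let \theta sweep [0, 360°]: each edge point generates a (dashed) circle of candidate centers. The circles from many edge points share one intersection, and accumulating them in Hough space makes that point the brightest, the one where the most circles cross: it is the center.

Each given R defines one circle; the circles drawn in polar space around all points of a Cartesian circle intersect at its center.

- The transform from image coordinates to polar coordinates has three parameters, $C(x_0, y_0, r)$, where $(x_0, y_0)$ is the center

- Take any point on a circle in image coordinates and transform it to polar coordinates: the accumulator has its maximum at $C(x_0, y_0, r)$, and the Hough transform uses exactly this to detect circles.

Related API: cv::HoughCircles

- The Hough circle transform is very sensitive to noise, so a median filter is applied first.

- For efficiency, OpenCV implements Hough circle detection on the image gradient, in two steps:

- detect edges and find candidate centers;

- starting from the candidate centers of the first step, compute the best radius for each.

HoughCircles parameters

HoughCircles(

InputArray image, // input image; must be 8-bit, single-channel grayscale

OutputArray circles, // output: the detected circles as a vector

int method, // detection method: HOUGH_GRADIENT (gradient-based)

double dp, // accumulator scale: 1 searches at the resolution of the input, 2 at half that resolution

double minDist, // minimum distance between centers (for example 10, or src_gray.rows / 8); centers closer than this are not reported as separate circles

double param1, // the higher threshold passed to the internal Canny edge detection of the first step

double param2, // accumulator threshold for candidate centers; smaller values detect more (possibly false) circles

int minRadius, // minimum radius

int maxRadius // maximum radius

)

medianBlur(src, moutput, 3); // denoise

cvtColor(moutput, moutput, CV_BGR2GRAY);

// Hough detection

vector<Vec3f> pcircles;

// input, output, gradient method, scale, minimum distance between centers, Canny threshold, accumulator threshold, minimum radius, maximum radius

HoughCircles(moutput, pcircles, CV_HOUGH_GRADIENT, 1, 10, 100, 30, 5, 50);

src.copyTo(dst);

for (size_t i = 0; i < pcircles.size(); i++) // the loop body was lost in the source; drawing each circle is the assumed intent

{

Vec3f cc = pcircles[i];

circle(dst, Point(cvRound(cc[0]), cvRound(cc[1])), cvRound(cc[2]), Scalar(0, 0, 255), 2, LINE_AA);

}

23 - Pixel Remapping (cv::remap)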

什么是像素重映射

- 将输入图像中各像素按照一定的规则映射到另外一张图的对应位置上,重新生成一张新的图像。

镜像:![]()

API介绍cv::remap

Remap{

InputArray src,//输入图像

OutputArray dst,//输出图像

InputArray map1,//x映射表CV_32FC1/CV_32FC2

InputArray map2;//y映射表

int interpolation,//选择插值方法,常见线性差值,可选择立方等

int borderMode,//BORDER_CONSTANT,边缘填充方式

const Scalar borderValue//color

}

map_x.create(src.size(),CV_32F);

map_y.create(src.size(),CV_32F);

while(true)

{

c=waiteKey(500);

if((char)c==27)

{

break;

}

index=c%4;

update_map();

remap(src,dst, map_x,map_y,INTER_LINEAP,BORDER_CONSTANT,Scalar(0,255,255));

}

void update_map(void)

{

for(int row=0; row (src.cols * 0.25) && col<=(src.cols*0.75)&& row>(src.rows*0.25)&&row<=(src.rows*0.75))//差值的形式缩小

{

map_x.at(row,col)=2*(col - (src.cols*0.25)+0.5);//多出像素会出问题

map_y.at(row,col)=2*(row - (src.rows*0.25)+0.5);

}

else

{

map_x.at(row,col)=0;

map_y.at(row,col)=0;

}

break;

case 1://水平镜像

map_x.at(row,col)=(src.cols-col-1);

map_y.at(row,col)=row;

case 2://垂直镜像

map_x.at(row,col)=col;

map_y.at(row,col)=(src.rows-row-1);

case 3://

map_x.at(row,col)=(src.cols-col-1);

map_y.at(row,col)=(src.rows-row-1);

}

}

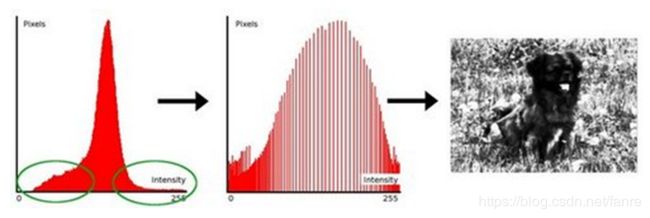

} 24-直方图均衡化

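What is a histogram

It is the frequency with which each gray value occurs. Gradients can be histogrammed too: anything whose values fall within a fixed range can be counted, for example gradient histograms and orientation histograms.

- An image histogram counts how often each pixel value (0 to 255) occurs over the whole image. It reflects the distribution of gray levels and is a statistical feature of the image.

Histogram equalization

A method for increasing image contrast by stretching the range of gray values.

It maps one gray-value distribution onto another, so it is also a kind of remapping.

- The remapping takes the gray-level distribution of the image from one distribution to another; each pixel then simply takes its mapped value.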
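The remapping can be sketched with the textbook method based on the cumulative distribution (CDF): each gray level is mapped in proportion to how many pixels are at or below it. The sketch is plain C++ on a flat pixel array; `equalize` is a hypothetical name, and the rounding here may differ from what `cv::equalizeHist` does.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Equalizes an 8-bit grayscale image given as a flat pixel array.
std::vector<std::uint8_t> equalize(const std::vector<std::uint8_t>& pixels)
{
    assert(!pixels.empty());

    // 1. Histogram: how often each gray level occurs.
    std::vector<long> hist(256, 0);
    for (std::uint8_t p : pixels) ++hist[p];

    // 2. Cumulative distribution and its first non-zero value.
    std::vector<long> cdf(256, 0);
    long running = 0;
    long cdf_min = 0;
    for (int level = 0; level < 256; ++level) {
        running += hist[level];
        cdf[level] = running;
        if (cdf_min == 0 && running > 0) cdf_min = running;
    }

    const long total = static_cast<long>(pixels.size());
    if (total == cdf_min) return pixels;  // only one gray level: nothing to stretch

    // 3. Remap every pixel so that the CDF becomes (roughly) linear.
    std::vector<std::uint8_t> out(pixels.size());
    for (std::size_t k = 0; k < pixels.size(); ++k) {
        out[k] = static_cast<std::uint8_t>(
            (cdf[pixels[k]] - cdf_min) * 255 / (total - cdf_min));
    }
    return out;
}
```

The narrow input range 100 to 102 in `{100, 100, 101, 102}` is stretched over the full range: the result is `{0, 0, 127, 255}`.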

API: cv::equalizeHist

equalizeHist(InputArray src, OutputArray dst) // the input must be 8-bit single-channel

cvtColor(src, src, CV_BGR2GRAY);

equalizeHist(src, dst);

25 - Histogram Calculation

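Histogram concepts

Count how many times each gray level occurs; each level that is counted and drawn is called a bin.

- The histogram here is built on pixel values, but a histogram can be built for any image attribute, such as gradients or orientation angles.

- Common attributes:

- dims: the number of dimensions; a grayscale histogram has a single channel, so dims = 1

- bins: the number of sub-ranges each dimension is divided into; bins = 256 means 256 levels

- range: the range of values; for gray values it is [0, 255]

API

split( // splits a multi-channel image into several single-channel images

const Mat& src, // input image

Mat* mvbegin) // output array of single-channel images

calcHist( // computes the histogram

const Mat* images, // pointer to the input images

int nimages, // number of images

const int* channels, // channel indices to use

InputArray mask, // optional mask

OutputArray hist, // output histogram

int dims, // number of dimensions

const int* histSize, // number of bins per dimension

const float** ranges, // value ranges

bool uniform, // default true: all bins have the same width

bool accumulate // default false; if true, hist is not cleared before the next computation

)

vector<Mat> bgr_planes;

split(src, bgr_planes);

imshow("ddd", bgr_planes[0]);

// compute the histograms

int histSize = 256;

float range[] = {0, 256}; // the upper bound is exclusive

const float* histRanges = {range};

Mat b_hist, g_hist, r_hist;

// images, image count, channels, mask, output histogram, dimensions, bins per dimension, value ranges, uniform bin width, accumulate

calcHist(&bgr_planes[0], 1, 0, Mat(), b_hist, 1, &histSize, &histRanges, true, false);

calcHist(&bgr_planes[1], 1, 0, Mat(), g_hist, 1, &histSize, &histRanges, true, false);

calcHist(&bgr_planes[2], 1, 0, Mat(), r_hist, 1, &histSize, &histRanges, true, false);

int hist_h = 400;

int hist_w = 512;

int bin_w = hist_w / histSize;

Mat histImage(hist_h, hist_w, CV_8UC3, Scalar(0, 0, 0)); // rows = height, cols = width

// normalize the histograms to the plot height

normalize(b_hist, b_hist, 0, hist_h, NORM_MINMAX, -1, Mat());

normalize(g_hist, g_hist, 0, hist_h, NORM_MINMAX, -1, Mat());

normalize(r_hist, r_hist, 0, hist_h, NORM_MINMAX, -1, Mat());

// draw the histograms

for (int i = 1; i < histSize; i++) // loop header reconstructed; part of this line was lost in the source

{

line(histImage, Point((i - 1) * bin_w, hist_h - cvRound(b_hist.at<float>(i - 1))), Point(i * bin_w, hist_h - cvRound(b_hist.at<float>(i))), Scalar(255, 0, 0), 2, LINE_AA);

line(histImage, Point((i - 1) * bin_w, hist_h - cvRound(g_hist.at<float>(i - 1))), Point(i * bin_w, hist_h - cvRound(g_hist.at<float>(i))), Scalar(0, 255, 0), 2, LINE_AA);

line(histImage, Point((i - 1) * bin_w, hist_h - cvRound(r_hist.at<float>(i - 1))), Point(i * bin_w, hist_h - cvRound(r_hist.at<float>(i))), Scalar(0, 0, 255), 2, LINE_AA);

}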

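26 - Histogram Comparison

Histograms can be similar to one another, so they can measure how strongly two images or regions are related.

Histogram comparison methods: overview

Compute the histograms H1 and H2 of the two input images, normalize them to the same scale, and then compute a distance between H1 and H2 to measure how similar the histograms are, and therefore how similar the images are. OpenCV offers four methods:

- Correlation

- Chi-Square

- Intersection

- Bhattacharyya distance

Histogram comparison: correlation (CV_COMP_CORREL)

$d(H_1, H_2) = \frac{\sum_I (H_1(I) - \bar{H}_1)(H_2(I) - \bar{H}_2)}{\sqrt{\sum_I (H_1(I) - \bar{H}_1)^2 \sum_I (H_2(I) - \bar{H}_2)^2}}$

Here N is the number of histogram bins (both histograms must use the same value range) and $\bar{H}_k = \frac{1}{N} \sum_J H_k(J)$ is the mean. This is the correlation coefficient from statistics; if H1 and H2 are identical the result is 1.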
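The correlation measure is the ordinary correlation coefficient applied to the bin values. A minimal sketch in plain C++ follows; `hist_correlation` is a hypothetical name, and returning 1.0 for two flat histograms is a convention chosen for this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Correlation coefficient between two histograms with the same number of bins.
double hist_correlation(const std::vector<double>& h1, const std::vector<double>& h2)
{
    assert(!h1.empty() && h1.size() == h2.size());
    const std::size_t n = h1.size();

    // Means of the two histograms.
    double mean1 = 0.0, mean2 = 0.0;
    for (std::size_t i = 0; i < n; ++i) { mean1 += h1[i]; mean2 += h2[i]; }
    mean1 /= static_cast<double>(n);
    mean2 /= static_cast<double>(n);

    // Covariance term and the two variance terms.
    double num = 0.0, var1 = 0.0, var2 = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        const double a = h1[i] - mean1;
        const double b = h2[i] - mean2;
        num += a * b;
        var1 += a * a;
        var2 += b * b;
    }
    const double denom = std::sqrt(var1 * var2);
    return denom > 0.0 ? num / denom : 1.0;  // flat histograms: treated as identical here
}
```

Comparing a histogram with itself gives 1, and comparing `{1, 2, 3, 4}` with its reverse gives -1.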

直方图比较方法-卡方计算(CV_COMP_CHISQR)

统计学里面的卡方验证,查看两个数据分布的相关性。

![]() 、

、![]() 分别表示两个图像的直方图数据

分别表示两个图像的直方图数据

直方图比较方法-十字交叉(CV_COMP_INTERSECT)

![]() 、

、![]() 分别表示两个图像的直方图数据

分别表示两个图像的直方图数据

直方图比较方法-巴氏距离计算(CV_COMP_BHATTACHARYYA)

![]() 是均值,1最不相似,0完全一致

是均值,1最不相似,0完全一致

![]() 、

、![]() 分别表示两个图像的直方图数据

分别表示两个图像的直方图数据

相关API

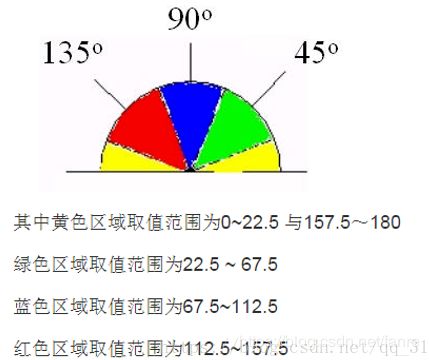

- 首先把图像从RGB色彩空间转换到HSV色彩空间cvtColor

- 计算图像的直方图,然后归一化到[0,1]之间calcHist和normalize

- 使用上述四种比较方法之一进行比较compareHist

compareHist(

InputArray h1,//直方图数据,下同

InputArray h2,

int method//比较方法,上述四种方法之一

)

cvtColor(base,base,CV_BGR2HSV);

cvtColor(test1,test1,CV_BGR2HSV);

cvtColor(test2,test2,CV_BGR2HSV);

int h_bins=50;int s_bins=60;

int binSize[]={h_bins,s_bins};

//hue varies from 0 to 179, saturation from 0 to 255

float h_ranges[] = {0,180};

float s_ranges[] = {h_bins, s_bins};

const float* ranges[]={h_ranges,s_ranges};

//use the 0-th and 1-st channels

int channels[]={0,1};

MatND hist_base;

MatND hist_test1;

MatND hist_test2;

calcHist(&base,1,channels,Mat(),hist_base,2,histSize,ranges,true,false);

normalize(hist_base,hist_base,0,1,NORM_MINMAX,-1,Mat())

calcHist(&test1,1,channels,Mat(),hist_test1,2,histSize,ranges,true,false);

normalize(hist_test1,hist_test1,0,1,NORM_MINMAX,-1,Mat())

calcHist(&test2,1,channels,Mat(),hist_test2,2,histSize,ranges,true,false);

normalize(hist_test2,hist_test2,0,1,NORM_MINMAX,-1,Mat())

double basebase=compareHist(hist_base,hist_base,CV_COMP_CORREL);

double basetest1=compareHist(hist_base,hist_test1,CV_COMP_CORREL);

double test1test2=compareHist(hist_test1,hist_test2,CV_COMP_CORREL);

printf("test1 compare with test2 correlation value :%f",test1test2);

putText(base,convertToString(basebase),Point(50,50),CV_FONT_HERSHEY_COMPLEX,1,Scalar(0,0,255),2,LINE_AA);

string convertToString(double d){

ostringstream os;

if(os<27-直方图反向投影(Back Projection)

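Back projection

- Back projection shows how a histogram model is distributed over a target image.

- Put simply, it uses a histogram model to search the target image for similar objects. The model is usually the histogram of the H and S channels in HSV space.

Back projection: steps

- Build the histogram model

- Compute the histogram of the image under test and map it onto the model

- Generate an image by projecting back from the model

Implementation steps and related API

- Load the image: imread

- Convert from RGB to HSV: cvtColor

- Compute and normalize the histogram: calcHist and normalize

- Mat and MatND: Mat represents a 2-D array and MatND a 3-D or higher-dimensional one; Mat can be used for both here

- Compute the back projection image: calcBackProject

cvtColor(src, hsv, CV_BGR2HSV);

hue.create(hsv.size(), hsv.depth());

int nchannels[] = {0, 0};

// copy the selected channel (H) into hue

mixChannels(&hsv, 1, &hue, 1, nchannels, 1);

createTrackbar("Histogram Bins:", window_image, &bins, 180, Hist_And_Backprojection);

Hist_And_Backprojection(0, 0);

void Hist_And_Backprojection(int, void*)

{

float range[] = {0, 180};

const float* histRanges = {range};

Mat h_hist;

calcHist(&hue, 1, 0, Mat(), h_hist, 1, &bins, &histRanges, true, false);

normalize(h_hist, h_hist, 0, 255, NORM_MINMAX, -1, Mat());

Mat backPrjImage;

calcBackProject(&hue, 1, 0, h_hist, backPrjImage, &histRanges, 1, true);

int hist_h = 400;

int hist_w = 400;

Mat histImage(hist_h, hist_w, CV_8UC3, Scalar(0, 0, 0));

int bin_w = (hist_w / bins);

for (int i = 1; i < bins; i++) // loop header reconstructed; part of this line was lost in the source

{

rectangle(histImage, Point((i - 1) * bin_w, hist_h - cvRound(h_hist.at<float>(i - 1) * (400.0 / 255))), Point(i * bin_w, hist_h), Scalar(0, 0, 255), -1);

}

}

28 - Template Matching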

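Introduction to template matching

A template is itself a (small) image; template matching finds the regions of a larger image that resemble it.

- Template matching searches the whole image for small regions that match a given sub-image

- So it first needs a template image T (the given sub-image)

- It also needs an image to search: the source image S

- How it works: slide the template over the image from left to right and top to bottom, computing how well the template matches the sub-image it overlaps; the better the match, the more likely the two are the same.

Template matching: matching algorithms

OpenCV provides six common matching algorithms (see the TemplateMatchModes enum).

For example, the squared difference overlays the template on the source image, subtracts corresponding pixel values, squares the differences, and sums them.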
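The squared-difference search can be sketched in plain C++: slide the template over every position where it fits, sum the squared pixel differences, and keep the position with the smallest sum. `Gray`, `Match`, and `match_sqdiff` are hypothetical names; this is a brute-force illustration, not the implementation behind `matchTemplate`.

```cpp
#include <cassert>
#include <limits>
#include <vector>

using Gray = std::vector<std::vector<int>>;  // hypothetical single-channel image

struct Match { int row; int col; long score; };

// Returns the top-left position with the smallest sum of squared differences.
Match match_sqdiff(const Gray& image, const Gray& templ)
{
    assert(!image.empty() && !templ.empty());
    const int H = static_cast<int>(image.size());
    const int W = static_cast<int>(image[0].size());
    const int h = static_cast<int>(templ.size());
    const int w = static_cast<int>(templ[0].size());
    assert(h <= H && w <= W);

    Match best{0, 0, std::numeric_limits<long>::max()};
    // The result has (H - h + 1) x (W - w + 1) positions.
    for (int r = 0; r + h <= H; ++r) {
        for (int c = 0; c + w <= W; ++c) {
            long sum = 0;
            for (int i = 0; i < h; ++i) {
                for (int j = 0; j < w; ++j) {
                    const long d = image[r + i][c + j] - templ[i][j];
                    sum += d * d;
                }
            }
            if (sum < best.score) best = Match{r, c, sum};  // smaller is better
        }
    }
    return best;
}
```

When the template is an exact crop of the image, the best score is 0 at the crop position, which is why the minimum location is used for the squared-difference methods.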

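API: cv::matchTemplate

matchTemplate(

InputArray image, // source image; must be 8-bit or 32-bit floating point

InputArray templ, // template image; same type as the source image

OutputArray result, // output; single-channel 32-bit float; for a W*H source and a w*h template its size is (W - w + 1) * (H - h + 1)

int method, // matching method; the normalized methods are recommended

InputArray mask = noArray() // optional

)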

enum cv::TemplateMatchModes{

cv::TM_SQDIFF = 0,

cv::TM_SQDIFF_NORMED = 1,

cv::TM_CCORR = 2,

cv::TM_CCORR_NORMED = 3,

cv::TM_CCOEFF = 4,

cv::TM_CCOEFF_NORMED=5

}

Mat src, temp, dst;

int match_method = CV_TM_SQDIFF;

int max_track = 5;

const char* INPUT_T = "input image";

const char* OUTPUT_T = "result image";

const char* match_t = "template match-demo";

src=

temp=

namedWindow(INPUT_T, CV_WINDOW_AUTOSIZE);

namedWindow(OUTPUT_T, CV_WINDOW_AUTOSIZE); // the trackbar below is attached to this window

const char* trackbar_title = "Match Algo Type";

createTrackbar(trackbar_title, OUTPUT_T, &match_method, max_track, Match_Demo);

Match_Demo(0, 0);

void Match_Demo(int, void*) {

Mat img_display;

src.copyTo(img_display);

int result_rows = src.rows - temp.rows + 1; // the template must fit inside the image

int result_cols = src.cols - temp.cols + 1;

dst.create(Size(result_cols, result_rows), CV_32FC1);

matchTemplate(src, temp, dst, match_method);

normalize(dst, dst, 0, 1, NORM_MINMAX, -1, Mat()); // normalize the scores to [0, 1]

double minValue, maxValue;

Point minLoc;

Point maxLoc;

Point matchLoc;

minMaxLoc(dst, &minValue, &maxValue, &minLoc, &maxLoc, Mat()); // locate the minimum and maximum scores

if (match_method == TM_SQDIFF || match_method == TM_SQDIFF_NORMED) {

matchLoc = minLoc; // for squared difference, smaller is better

}

else {

matchLoc = maxLoc;

}

// draw the rectangles

// rectangle(img_display, matchLoc, Point(matchLoc.x + temp.cols, matchLoc.y + temp.rows), Scalar::all(0), 2, LINE_AA);

// rectangle(dst, matchLoc, Point(matchLoc.x + temp.cols, matchLoc.y + temp.rows), Scalar::all(0), 2, LINE_AA);

rectangle(img_display, Rect(matchLoc.x, matchLoc.y, temp.cols, temp.rows), Scalar(0, 0, 255), 2, 8);

rectangle(dst, Rect(matchLoc.x, matchLoc.y, temp.cols, temp.rows), Scalar(0, 0, 255), 2, 8);

imshow(OUTPUT_T, dst);

imshow(match_t, img_display);

return;

}

29 - Finding Contours (find contour in your image)

轮廓发现(find contour)

- 轮廓发现是基于图像边缘提取的基础寻找对象轮廓的方法。所以边缘提取的阈值选定会影响最终轮廓发现结果。

- API介绍

findContours发现轮廓

drawContours绘制轮廓

canny阈值有高阈值和低阈值,低阈值选取后,高阈值一般是它的1.5、2、3倍。

在二值图像上发现轮廓使用API cv::findContours(

InputOutputArray binImg,//输入图像,非0的像素被看成1,0的像素保持不变,8-bit

OutputArrayOfArrays contours,//全部发现的轮廓对象

OutputArray hierachy,//该图的拓扑结构,可选,该轮廓发现算法正是基于图像拓扑结构实现的

int mode,//轮廓返回的模式

int method,//发现方法

Point offset=Point()//轮廓像素的位移,默认(0,0)没有位移

)

轮廓绘制(draw contour)

在二值图像上发现轮廓使用API cv::findContours之后对发现的轮廓数据进行绘制显示

drawContours(

InputOutputArray binImg,//输出图像

OutputArrayOfArrays contours,//全部发现的轮廓对象

int contourIdx,//轮廓索引号

const Scalar &color,//绘制时候颜色

int thickness,//绘制线宽度

int lineType,//线的类型LINE_8

InputArray hierarchy,//拓扑结构图

int maxlevel,//最大层数,0之绘制当前的,1表示绘制当前及其内嵌轮廓

Point offset=Point()//轮廓位移,可选

)

代码演示

- 输入图像转为灰度图像cvColor

- 使用Canny进行边缘提取,得到二值图像

- 使用findContours寻找轮廓

- 使用drawContours绘制轮廓

int threshold_value=100;

int threshold_max =255;

const char* output_win="contours";

namedWindow(output_win,CV_WINDOW_AUTOSIZE);

cvtColor(src,src,CV_BGR2GRAY);

const char* track_title="Threshold Value";

createTrackbar(track_title,output_win,&threshold_value,threshold_max,Demo_Contours);

Demo_Contours(0,0);

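void Demo_Contours(int, void*){

Mat canny_output;

vector<vector<Point>> points;

vector<Vec4i> hierachy;

Canny(src,canny_output,threshold_value,threshold_value*2,3,false); // high threshold = 2 x low threshold

findContours(canny_output,points,hierachy,RETR_TREE,CHAIN_APPROX_SIMPLE,Point(0,0));

dst = Mat::zeros(src.size(),CV_8UC3);

RNG rng;

for(size_t i=0;i<points.size();i++){

Scalar color = Scalar(rng.uniform(0,255),rng.uniform(0,255),rng.uniform(0,255)); // random color per contour

drawContours(dst,points,static_cast<int>(i),color,2,LINE_8,hierachy,0,Point(0,0));

}

imshow(output_win,dst);

}

30-Convex Hull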

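Concepts

- What is a convex hull? A polygon is convex when the segment connecting any two points on its boundary or in its interior lies entirely on the boundary or inside the polygon.

- The smallest convex polygon containing all points of a set S is called the convex hull of S.

- Detection algorithm: Graham scan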


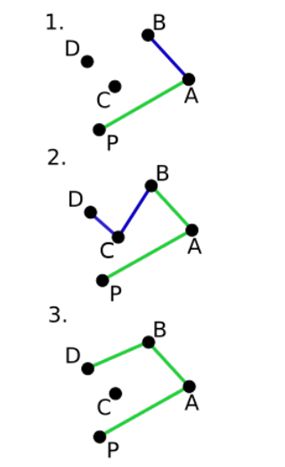

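Concepts: the Graham scan algorithm

- First choose the lowest point in the Y direction as the starting point p0.

- Starting from p0, sweep in polar order and add p1...pn one at a time (the points are sorted by polar angle around p0, counter-clockwise).

- For each point pi: if adding pi produces a left turn (counter-clockwise), keep it on the hull; if it produces a right turn (clockwise), remove the most recently added point from the hull before continuing.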
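The three steps above can be sketched in standard C++. This is a minimal illustration, not the code behind cv::convexHull: it assumes distinct integer points in a mathematical coordinate system (Y pointing up), and `Pt`, `cross`, and `grahamScan` are names introduced here.

```cpp
#include <algorithm>
#include <vector>

struct Pt { long long x, y; };

// Cross product of (b - a) and (c - a):
// > 0 is a left (counter-clockwise) turn, < 0 a right turn, 0 collinear.
long long cross(const Pt& a, const Pt& b, const Pt& c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

long long dist2(const Pt& a, const Pt& b) {
    return (a.x - b.x) * (a.x - b.x) + (a.y - b.y) * (a.y - b.y);
}

// Returns the hull vertices counter-clockwise, starting at p0.
std::vector<Pt> grahamScan(std::vector<Pt> pts) {
    if (pts.size() < 3) return pts;

    // Step 1: p0 is the lowest point (smallest y, ties broken by smallest x).
    auto lowest = std::min_element(pts.begin(), pts.end(),
        [](const Pt& a, const Pt& b) { return a.y < b.y || (a.y == b.y && a.x < b.x); });
    std::iter_swap(pts.begin(), lowest);
    const Pt p0 = pts[0];

    // Step 2: sort the rest by polar angle around p0, counter-clockwise;
    // collinear points are ordered nearest first.
    std::sort(pts.begin() + 1, pts.end(), [&p0](const Pt& a, const Pt& b) {
        const long long c = cross(p0, a, b);
        if (c != 0) return c > 0;
        return dist2(p0, a) < dist2(p0, b);
    });

    // Step 3: keep left turns; on a right turn (or a straight line)
    // pop the most recently added point.
    std::vector<Pt> hull;
    for (const Pt& p : pts) {
        while (hull.size() >= 2 && cross(hull[hull.size() - 2], hull.back(), p) <= 0) {
            hull.pop_back();
        }
        hull.push_back(p);
    }
    return hull;
}
```

For the six points (0,0), (2,0), (2,2), (0,2), (1,1), (1,0), the sketch returns the four square corners; the interior point and the point on the bottom edge are discarded.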

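API: cv::convexHull

convexHull(

InputArray points, // candidate points, e.g. a contour from findContours

OutputArray hull, // the resulting convex hull

bool clockwise, // orientation flag, default false; true requests a clockwise hull

bool returnPoints // default true: return the hull points, false: return their indices; ignored when hull is a std::vector, whose element type (Point or int) decides

)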

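Code demo

- First convert the image from color (BGR) to grayscale

- Then convert it to a binary image

- Find contours to obtain the candidate points

- Call the convex hull API

- Draw and display the result (a convex hull is a special kind of contour)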

int threshold_value=100;

int threshold_max =255;

const char* output_win="contours";

namedWindow(output_win,CV_WINDOW_AUTOSIZE);

cvtColor(src,src_gray,CV_BGR2GRAY);

blur(src_gray,src_gray,Size(3,3),Point(-1,-1),BORDER_DEFAULT);

const char* trackbar_label="Threshold :";

createTrackbar(trackbar_label,output_win,&threshold_value,threshold_max,Threshold_Callback);

Threshold_Callback(0,0);

void Threshold_Callback(int ,void*){

Mat bin_output;

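vector<vector<Point>> contours;

vector<Vec4i> hierachy;

threshold(src_gray,bin_output,threshold_value,threshold_max,THRESH_BINARY);

findContours(bin_output,contours,hierachy,RETR_EXTERNAL,CHAIN_APPROX_SIMPLE,Point(0,0));

vector<vector<Point>> convexs(contours.size());

for(size_t i=0;i<contours.size();i++){

convexHull(contours[i],convexs[i],false,true); // one hull per contour

}

dst = Mat::zeros(src.size(),CV_8UC3);

RNG rng;

vector<Vec4i> empty; // hulls carry no hierarchy information

for(size_t k=0;k<contours.size();k++){

Scalar color = Scalar(rng.uniform(0,255),rng.uniform(0,255),rng.uniform(0,255));

drawContours(dst,contours,static_cast<int>(k),color,2,LINE_8,hierachy,0,Point(0,0)); // original contour

drawContours(dst,convexs,static_cast<int>(k),color,2,LINE_8,empty,0,Point(0,0)); // its convex hull

}

imshow(output_win,dst);

}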

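31-Drawing Bounding Rectangles and Circles Around Contours

Bounding rectangles around contours: API

approxPolyDP(InputArray curve, OutputArray approxCurve, double epsilon, bool closed)

Implemented with the RDP (Ramer-Douglas-Peucker) algorithm; its purpose is to reduce the number of points in a polygonal contour.

- cv::boundingRect(InputArray points) returns the smallest upright rectangle enclosing the contour as a Rect, from which the top-left and bottom-right corners follow; it can be drawn directly.

- cv::minAreaRect(InputArray points) returns the minimum-area rotated rectangle as a RotatedRect.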
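To make the role of epsilon concrete, here is a small sketch of the Ramer-Douglas-Peucker idea in standard C++. It handles open curves only and is not the OpenCV implementation; `P2`, `distToLine`, and `simplifyOpenCurve` are names made up for this example.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct P2 { double x, y; };

// Perpendicular distance from p to the line through a and b
// (falls back to the distance to a when a and b coincide).
double distToLine(const P2& p, const P2& a, const P2& b) {
    const double dx = b.x - a.x, dy = b.y - a.y;
    const double len = std::hypot(dx, dy);
    if (len == 0.0) return std::hypot(p.x - a.x, p.y - a.y);
    return std::fabs(dx * (p.y - a.y) - dy * (p.x - a.x)) / len;
}

// Marks the points between first and last that must be kept.
void rdpMark(const std::vector<P2>& pts, std::size_t first, std::size_t last,
             double epsilon, std::vector<bool>& keep) {
    double maxDist = 0.0;
    std::size_t farthest = first;
    for (std::size_t i = first + 1; i < last; ++i) {
        const double d = distToLine(pts[i], pts[first], pts[last]);
        if (d > maxDist) { maxDist = d; farthest = i; }
    }
    // Only a point farther than epsilon from the chord survives;
    // the curve is then split at that point and both halves are processed.
    if (maxDist > epsilon) {
        keep[farthest] = true;
        rdpMark(pts, first, farthest, epsilon, keep);
        rdpMark(pts, farthest, last, epsilon, keep);
    }
}

std::vector<P2> simplifyOpenCurve(const std::vector<P2>& pts, double epsilon) {
    if (pts.size() < 3) return pts;
    std::vector<bool> keep(pts.size(), false);
    keep[0] = true;
    keep[pts.size() - 1] = true;
    rdpMark(pts, 0, pts.size() - 1, epsilon, keep);

    std::vector<P2> out;
    for (std::size_t i = 0; i < pts.size(); ++i) {
        if (keep[i]) out.push_back(pts[i]);
    }
    return out;
}
```

A larger epsilon removes more points: with epsilon 0.5, the curve (0,0), (1,0.05), (2,0), (3,3), (4,0) loses only the nearly collinear point (1,0.05).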

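Enclosing circles and ellipses around contours: API

- cv::minEnclosingCircle(InputArray points, // finds the minimum circle enclosing the points

Point2f& center, // output: circle center

float& radius) // output: circle radius

- cv::fitEllipse(InputArray points) // fits an ellipse to the points (at least 5 are required) and returns it as a RotatedRect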

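Demo code: steps

- First convert the image to a binary image

- Find the contours in the image

- Use the APIs above on the contour points to find the minimum enclosing rectangle and circle, and the rotated rectangle and ellipse

- Draw them.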

Mat src, gray_src,drawImg;

int threshold_value=100;

int threshold_max =255;

const char* output_win="contours";

namedWindow(output_win,CV_WINDOW_AUTOSIZE);

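cvtColor(src,gray_src,CV_BGR2GRAY);

blur(gray_src,gray_src,Size(3,3),Point(-1,-1),BORDER_DEFAULT);

const char* trackbar_label="Threshold :";

createTrackbar(trackbar_label,output_win,&threshold_value,threshold_max,Contours_Callback);

Contours_Callback(0,0);

void Contours_Callback(int, void*){

Mat binary_output;

vector<vector<Point>> contours;

vector<Vec4i> hierachy;

threshold(gray_src,binary_output,threshold_value,threshold_max,THRESH_BINARY);

findContours(binary_output,contours,hierachy,RETR_TREE,CHAIN_APPROX_SIMPLE,Point(0,0));

vector<vector<Point>> contours_ploy(contours.size()); // simplified polygons

vector<Rect> ploy_rects(contours.size()); // upright bounding rectangles

vector<Point2f> ccs(contours.size()); // enclosing-circle centers

vector<float> radius(contours.size()); // enclosing-circle radii

vector<RotatedRect> minRects(contours.size()); // minimum-area rotated rectangles

vector<RotatedRect> myellipse(contours.size()); // fitted ellipses

for(size_t i=0;i<contours.size();i++){

approxPolyDP(Mat(contours[i]),contours_ploy[i],3,true); // epsilon = 3 pixels (assumed tolerance)

ploy_rects[i] = boundingRect(contours_ploy[i]);

minEnclosingCircle(contours_ploy[i],ccs[i],radius[i]);

if(contours_ploy[i].size()>5)

{

myellipse[i] = fitEllipse(contours_ploy[i]);

minRects[i] = minAreaRect(contours_ploy[i]);

}

}

src.copyTo(drawImg);

RNG rng;

Point2f pts[4];

for(size_t t=0;t<contours.size();t++){

Scalar color = Scalar(rng.uniform(0,255),rng.uniform(0,255),rng.uniform(0,255));

rectangle(drawImg,ploy_rects[t],color,2,8);

circle(drawImg,ccs[t],static_cast<int>(radius[t]),color,2,8);

if(contours_ploy[t].size()>5){

ellipse(drawImg,myellipse[t],color,1,8);

minRects[t].points(pts); // four corners of the rotated rectangle

for(int r=0;r<4;r++){

line(drawImg,pts[r],pts[(r+1)%4],color,1,8);

}

}

}

imshow(output_win,drawImg);

}

32-Image Moments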

Introduction to moments

Geometric moments

Image center (centroid): Center(x0, y0), where x0 = m10/m00 and y0 = m01/m00
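As a small worked example of the centroid formula, the sketch below computes the raw geometric moments m00, m10, and m01 of a tiny image stored as nested vectors, where m_pq is the sum of x^p * y^q * I(x, y) over all pixels. It only illustrates the definition; cv::moments also returns higher-order, central, and normalized moments. `RawMoments` and `rawMoments` are names introduced here.

```cpp
#include <cstddef>
#include <vector>

struct RawMoments {
    double m00 = 0.0;  // sum of intensities (area for a 0/1 image)
    double m10 = 0.0;  // sum of x * intensity
    double m01 = 0.0;  // sum of y * intensity
};

// img[y][x] holds the pixel intensity at column x, row y.
RawMoments rawMoments(const std::vector<std::vector<int>>& img) {
    RawMoments m;
    for (std::size_t y = 0; y < img.size(); ++y) {
        for (std::size_t x = 0; x < img[y].size(); ++x) {
            const double v = img[y][x];
            m.m00 += v;
            m.m10 += static_cast<double>(x) * v;
            m.m01 += static_cast<double>(y) * v;
        }
    }
    return m;
}
```

For a 4x4 image whose only non-zero pixels form a 2x2 block at columns 1 to 2 and rows 2 to 3, this gives m00 = 4, m10 = 6, m01 = 10, so the centroid is (6/4, 10/4) = (1.5, 2.5), the center of the block.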

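API overview: cv::moments computes the moment data

moments(

InputArray array, // input data: an image or a contour

bool binaryImage=false // whether to treat the input as a binary image

)

contourArea(

InputArray contour, // input contour

bool oriented // default false: return the absolute area; true: return a signed area that depends on the contour orientation

)

arcLength(

InputArray curve, // input curve

bool closed // whether the curve is closed

)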
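The meaning of the `oriented` and `closed` flags can be seen in a plain C++ sketch of the two quantities. It uses the shoelace formula for the area and a sum of segment lengths for the perimeter; it is an illustration under those assumptions, not OpenCV's code, and `Vtx`, `signedArea`, and `curveLength` are hypothetical names.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vtx { double x, y; };

// Shoelace formula. The sign depends on the vertex order, which is what an
// "oriented" area reports; take std::fabs of the result for the absolute area.
double signedArea(const std::vector<Vtx>& poly) {
    double twiceArea = 0.0;
    const std::size_t n = poly.size();
    for (std::size_t i = 0; i < n; ++i) {
        const Vtx& a = poly[i];
        const Vtx& b = poly[(i + 1) % n];
        twiceArea += a.x * b.y - b.x * a.y;
    }
    return twiceArea / 2.0;
}

// Sum of segment lengths; a closed curve also counts the segment
// from the last vertex back to the first.
double curveLength(const std::vector<Vtx>& curve, bool closed) {
    double length = 0.0;
    const std::size_t n = curve.size();
    for (std::size_t i = 0; i + 1 < n; ++i) {
        length += std::hypot(curve[i + 1].x - curve[i].x, curve[i + 1].y - curve[i].y);
    }
    if (closed && n > 1) {
        length += std::hypot(curve[0].x - curve[n - 1].x, curve[0].y - curve[n - 1].y);
    }
    return length;
}
```

For the 4x3 rectangle (0,0), (4,0), (4,3), (0,3), the area is 12 and flips sign when the vertex order is reversed, while the length is 14 when closed and 11 when open.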

Demo code: steps

- Extract the image edges

- Find contours

- Compute the moments of each contour

- Compute each object's center, arc length, and area

Mat src, gray_src,drawImg;

int threshold_value=100;

int threshold_max =255;

const char* output_win="contours";

namedWindow(output_win,CV_WINDOW_AUTOSIZE);

cvtColor(src,gray_src,CV_BGR2GRAY);

GaussianBlur(gray_src,gray_src,Size(3,3),0,0);

const char* trackbar_label="Threshold :";

createTrackbar(trackbar_label,output_win,&threshold_value,threshold_max,Demo_Moments);

Demo_Moments(0,0);

void Demo_Moments(int ,void*){

Mat binary_output,canny_output;

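vector<vector<Point>> contours;

vector<Vec4i> hierachy;

Canny(gray_src,canny_output,threshold_value,threshold_value*2,3,false);

findContours(canny_output,contours,hierachy,RETR_TREE,CHAIN_APPROX_SIMPLE,Point(0,0));

vector<Moments> contours_moments(contours.size());

vector<Point2f> ccs(contours.size());

for(size_t i=0;i<contours.size();i++){

contours_moments[i] = moments(contours[i]);

if(contours_moments[i].m00 == 0) continue; // zero-area contour: the centroid is undefined

ccs[i] = Point2f(static_cast<float>(contours_moments[i].m10/contours_moments[i].m00),static_cast<float>(contours_moments[i].m01/contours_moments[i].m00)); // centroid = (m10/m00, m01/m00)

}

src.copyTo(drawImg);

RNG rng;

for(size_t i=0;i<contours.size();i++){

Scalar color = Scalar(rng.uniform(0,255),rng.uniform(0,255),rng.uniform(0,255));

printf("contour %d: center (%.2f, %.2f), area %.2f, arc length %.2f\n",static_cast<int>(i),ccs[i].x,ccs[i].y,contourArea(contours[i]),arcLength(contours[i],true));

drawContours(drawImg,contours,static_cast<int>(i),color,2,8,hierachy,0,Point(0,0));

circle(drawImg,ccs[i],2,color,2,8);

}

imshow(output_win,drawImg);

}

32-Point Polygon Test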

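Concepts: point polygon test

- Tests whether a point lies inside a given polygon.

API: cv::pointPolygonTest

pointPolygonTest(

InputArray contour, // input contour

Point2f pt, // point to test

bool measureDist // false: return +1 (inside), 0 (on the edge), or -1 (outside); true: return the signed distance to the nearest contour edge

)

The return value is of type double.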
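The return convention can be reproduced in a few lines of standard C++. The sketch below combines an even-odd ray-casting test with the distance to the nearest edge; it assumes a simple polygon given as an ordered vertex list and is not how OpenCV implements the function. `Pnt`, `pointToSegment`, and `polygonTest` are names chosen for this example.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Pnt { double x, y; };

// Distance from p to the segment a-b.
double pointToSegment(const Pnt& p, const Pnt& a, const Pnt& b) {
    const double dx = b.x - a.x, dy = b.y - a.y;
    const double len2 = dx * dx + dy * dy;
    double t = len2 > 0.0 ? ((p.x - a.x) * dx + (p.y - a.y) * dy) / len2 : 0.0;
    t = std::max(0.0, std::min(1.0, t));  // clamp the projection onto the segment
    return std::hypot(p.x - (a.x + t * dx), p.y - (a.y + t * dy));
}

// measureDist == false: +1 inside, 0 on the edge, -1 outside.
// measureDist == true: signed distance to the nearest edge (positive inside).
double polygonTest(const std::vector<Pnt>& poly, const Pnt& p, bool measureDist) {
    bool inside = false;
    double minDist = std::numeric_limits<double>::infinity();
    const std::size_t n = poly.size();
    for (std::size_t i = 0; i < n; ++i) {
        const Pnt& a = poly[i];
        const Pnt& b = poly[(i + 1) % n];
        minDist = std::min(minDist, pointToSegment(p, a, b));
        // Ray casting: count the edges crossed by a horizontal ray going right from p.
        if ((a.y > p.y) != (b.y > p.y)) {
            const double xCross = a.x + (p.y - a.y) * (b.x - a.x) / (b.y - a.y);
            if (p.x < xCross) inside = !inside;
        }
    }
    if (minDist == 0.0) return 0.0;  // exactly on the boundary
    if (measureDist) return inside ? minDist : -minDist;
    return inside ? 1.0 : -1.0;
}
```

For the square with corners (0,0), (4,0), (4,4), (0,4), the point (2,2) yields +1 (or distance 2), the point (6,2) yields -1 (or distance -2), and the point (4,2) on the right edge yields 0.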

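Code demo: steps

- Create a 400x400 image with Mat::zeros(400,400,CV_8UC1)

- Draw a closed hexagonal region on it with line

- Find contours

- Run the point polygon test on every pixel of the image to get its distance, then normalize the distances for display.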

const int r = 100;

Mat src = Mat::zeros(r*4,r*4,CV_8UC1);

vector<Point> vert(6); // hexagon vertices

vert[0] = Point(3 * r / 2, static_cast<int>(1.34*r));

vert[1] = Point(1 * r, 2 * r);

vert[2] = Point(3 * r / 2, static_cast<int>(2.866*r));

vert[3] = Point(5 * r / 2, static_cast<int>(2.866*r));

vert[4] = Point(3 * r, 2 * r);

vert[5] = Point(5 * r / 2, static_cast<int>(1.34*r));

for(int i = 0; i < 6; i++)

{

line(src, vert[i], vert[(i +1 ) % 6],Scalar(255),5,8,0);

}

vector<vector<Point>> contours;

vector<Vec4i> hierachy;

Mat csrc;

src.copyTo(csrc);

findContours(csrc,contours,hierachy,RETR_TREE,CHAIN_APPROX_SIMPLE,Point(0,0));

Mat raw_dist = Mat::zeros(csrc.size(),CV_32FC1);

for(int row = 0; row < raw_dist.rows; row++){

for(int col = 0; col < raw_dist.cols; col++){

double dist = pointPolygonTest(contours[0], Point2f(static_cast<float>(col),static_cast<float>(row)),true); // signed distance

raw_dist.at<float>(row,col) = static_cast<float>(dist);

}

}

double minValue, maxValue;

minMaxLoc(raw_dist,&minValue,&maxValue,0,0,Mat());

Mat drawImg = Mat::zeros(src.size(),CV_8UC3);

for(int row = 0; row < drawImg.rows; row++){

for(int col = 0; col < drawImg.cols; col++){

float dist = raw_dist.at<float>(row,col);

if(dist > 0)

{

drawImg.at<Vec3b>(row,col)[0]=(uchar)(abs(1.0-dist/maxValue)*255); // inside: blue channel

}

else if(dist < 0){

drawImg.at<Vec3b>(row,col)[2]=(uchar)(abs(dist/minValue)*255); // outside: red channel

}else{

drawImg.at<Vec3b>(row,col)[0]=(uchar)(abs(255-dist)); // on the edge: white

drawImg.at<Vec3b>(row,col)[1]=(uchar)(abs(255-dist));

drawImg.at<Vec3b>(row,col)[2]=(uchar)(abs(255-dist));

}

}

}

const char* output_win = "point polygon test demo";

char input_win[]="input image";

namedWindow(input_win, CV_WINDOW_AUTOSIZE);

namedWindow(output_win, CV_WINDOW_AUTOSIZE);

imshow(input_win,src);

imshow(output_win,drawImg);

waitKey(0);

33-Image Segmentation Based on Distance Transform and Watershed

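What is image segmentation?

- Image segmentation is one of the most important techniques in image processing.

- Its goal is to divide the pixels of an image into N clusters according to some rule, with each cluster containing one class of pixels.

- Algorithms are either supervised or unsupervised; most image segmentation algorithms are unsupervised, for example KMeans.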

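Introduction to distance transform and watershed

Treat the grayscale image as terrain in which each gray value is a height. As the terrain is flooded from its low points, the lines where water from neighboring basins meets (the watersheds) become the segmentation boundaries.

Two common algorithms for the distance transform:

- Repeated dilation/erosion

- Chamfer distance

Common algorithm for the watershed transform:

- Based on immersion (flooding) simulation

Related APIs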

cv::distanceTransform(InputArray src, OutputArray dst, OutputArray labels, int distanceType, int maskSize, int labelType = DIST_LABEL_CCOMP)

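distanceType: DIST_L1 or DIST_L2

maskSize: size of the distance mask (comparable to an erosion structuring element), 3x3; newer versions also support 5x5; 3x3 is recommended

labels: output discrete Voronoi diagram

dst: output, 8-bit or 32-bit floating point, single channel, same size as the input image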

cv::watershed(InputArray image, InputOutputArray markers);
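The chamfer approach mentioned above can be illustrated with a two-pass sweep. The sketch below computes the city-block (L1) distance from every pixel to the nearest zero pixel on a small grid, which is the same quantity a distance transform with DIST_L1 measures. It is a simplified stand-in written with nested vectors, and `Grid` and `chamferDistanceL1` are names invented for this example.

```cpp
#include <algorithm>
#include <vector>

using Grid = std::vector<std::vector<int>>;

// L1 distance from each pixel to the nearest zero pixel, using a forward
// and a backward sweep over the 4-neighborhood. If the grid has no zero
// pixel, every entry stays at or above rows + cols.
Grid chamferDistanceL1(const Grid& binary) {
    const int rows = static_cast<int>(binary.size());
    const int cols = rows > 0 ? static_cast<int>(binary[0].size()) : 0;
    const int kFar = rows + cols;  // larger than any real L1 distance in the grid

    Grid dist(rows, std::vector<int>(cols, 0));
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            dist[r][c] = (binary[r][c] == 0) ? 0 : kFar;
        }
    }

    // Forward pass: propagate distances from the top and left neighbors.
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            if (r > 0) dist[r][c] = std::min(dist[r][c], dist[r - 1][c] + 1);
            if (c > 0) dist[r][c] = std::min(dist[r][c], dist[r][c - 1] + 1);
        }
    }

    // Backward pass: propagate distances from the bottom and right neighbors.
    for (int r = rows - 1; r >= 0; --r) {
        for (int c = cols - 1; c >= 0; --c) {
            if (r + 1 < rows) dist[r][c] = std::min(dist[r][c], dist[r + 1][c] + 1);
            if (c + 1 < cols) dist[r][c] = std::min(dist[r][c], dist[r][c + 1] + 1);
        }
    }
    return dist;
}
```

For a 5x5 grid whose inner 3x3 block is non-zero, the block's border pixels get distance 1 and its center gets distance 2. These peaks in the middle of objects are what the later thresholding step keeps as watershed seeds.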

处理流程

- 将白色背景变成黑色-目的是为了后面的变换做准备

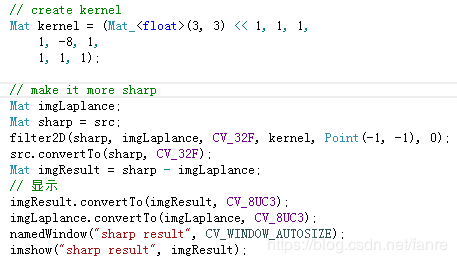

- 使用fiter2D与拉普拉斯酸枝实现图像对比度提高,sharp

- 转为二值图像通过threshold

- 距离变换

- 对距离变换结果进行归一化得到[0~1]之间

- 使用阈值,再次二值化,得到标记

- 腐蚀得到每个Peak - erode

- 发现轮廓 - findContours

- 绘制轮廓 -drawContours

- 分水岭变换watershed

- 对每个分割区域着色输出结果

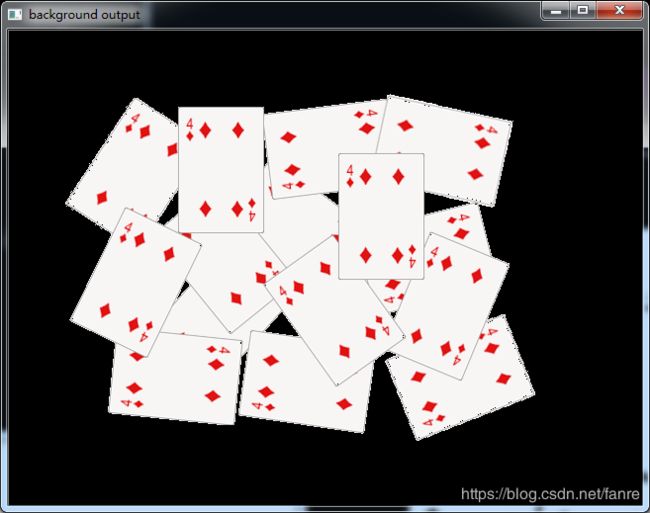

演示代码-加载图像

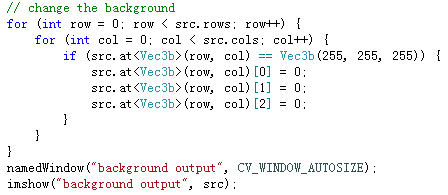

演示代码-去背景

演示代码-Sharp

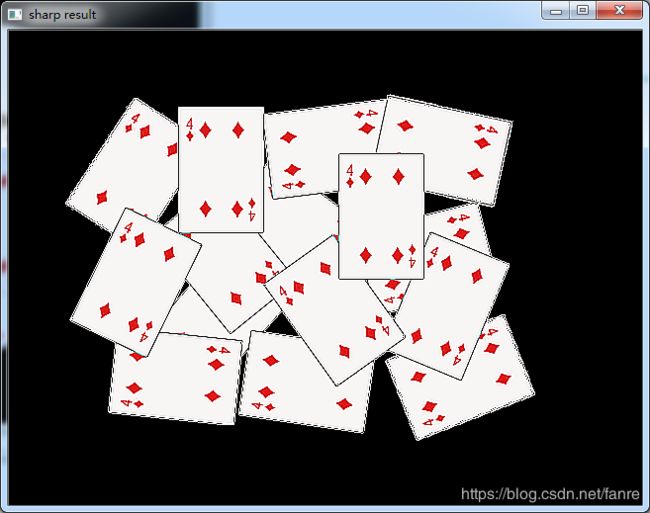

演示代码-二值距离变换

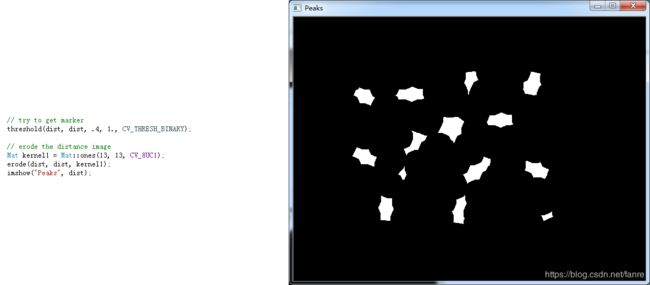

演示代码-二值腐蚀

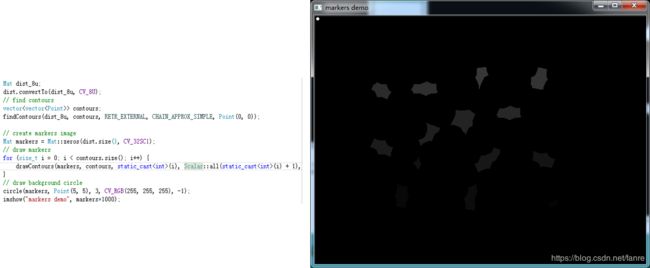

演示代码-标记

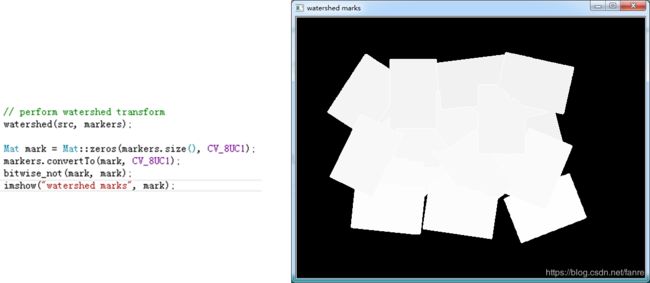

代码演示-分水岭变换

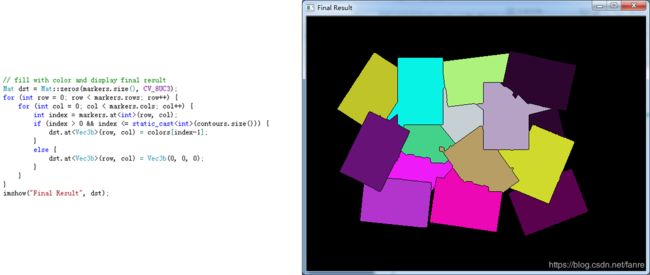

代码演示-着色

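for(int row = 0; row < src.rows; row++){

for(int col = 0; col < src.cols; col++){

if(src.at<Vec3b>(row,col)==Vec3b(255,255,255)){ // white background pixel

src.at<Vec3b>(row,col)[0] = 0;

src.at<Vec3b>(row,col)[1] = 0;

src.at<Vec3b>(row,col)[2] = 0;

}

}

}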

// sharpen

Mat kernel = (Mat_<float>(3,3)<<1,1,1,1,-8,1,1,1,1); // Laplacian kernel

Mat imgLaplance;

Mat sharpenImg;

filter2D(src, imgLaplance, CV_32F, kernel, Point(-1,-1), 0, BORDER_DEFAULT); // float output keeps negative responses

src.convertTo(sharpenImg, CV_32F);

Mat resultImg = sharpenImg - imgLaplance;

resultImg.convertTo(resultImg, CV_8UC3);

imgLaplance.convertTo(imgLaplance, CV_8UC3);

//convert to binary

Mat binaryImg;

cvtColor(resultImg, resultImg,CV_BGR2GRAY);

threshold(resultImg,binaryImg,40,255,THRESH_BINARY | THRESH_OTSU);

imshow("binary image",binaryImg);

Mat distImg;

distanceTransform(binaryImg,distImg,DIST_L1,3,CV_32F); // 32-bit float output

normalize(distImg,distImg,0,1,NORM_MINMAX);

// binarize again to keep only the peaks

threshold(distImg,distImg,.4,1,THRESH_BINARY);

// erode with a 3x3 kernel to separate the peaks

Mat k1= Mat::ones(3,3,CV_8UC1);

erode(distImg, distImg,k1,Point(-1,-1));

//markers

Mat dist_8u;

distImg.convertTo(dist_8u,CV_8U);

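vector<vector<Point>> contours;

findContours(dist_8u,contours,RETR_EXTERNAL,CHAIN_APPROX_SIMPLE,Point(0,0));

// create markers

Mat markers = Mat::zeros(src.size(),CV_32SC1);

for(size_t i=0;i<contours.size();i++){

drawContours(markers,contours,static_cast<int>(i),Scalar::all(static_cast<int>(i)+1)); // label i+1 for the i-th seed

}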

circle(markers,Point(5,5),3,Scalar(255,255,255),-1);

//perform watershed

watershed(src,markers);

Mat mark = Mat::zeros(markers.size(),CV_8UC1);

markers.convertTo(mark,CV_8UC1);

bitwise_not(mark,mark,Mat());

//generate random color

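vector<Vec3b> colors;

for(size_t i = 0; i < contours.size(); i++){

int r = theRNG().uniform(0,255);

int g = theRNG().uniform(0,255);

int b = theRNG().uniform(0,255);

colors.push_back(Vec3b((uchar)b,(uchar)g,(uchar)r));

}

// fill each segmented region with its color

Mat dst = Mat::zeros(markers.size(),CV_8UC3);

for(int row = 0; row < markers.rows; row++){

for(int col = 0; col < markers.cols; col++){

int index = markers.at<int>(row,col);

if(index > 0 && index <= static_cast<int>(contours.size())){

dst.at<Vec3b>(row,col) = colors[index - 1];

}else{

dst.at<Vec3b>(row,col) = Vec3b(0,0,0);

}

}

}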