Windows + DarkNet: YOLOv4 Detection and Training (GTX 1660S), Part 2

1. Data annotation

2. Converting the annotation format

3. Changing the YOLO configuration

4. Start training

5. Training problems and fixes

6. C++ inference

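1. Data annotation

1) Use the labelImg tool

2) Feature overview

a) Open Dir: open the image directory (save)

b) Next Image: go to the next image

c) Prev Image: go to the previous image

d) Save: save the annotation. Always save after you finish annotating each image!

e) Create RectBox: create a bounding box

f) PascalVOC/YOLO: select PascalVOC

3) Annotation guidelines

a) Draw the minimum bounding rectangle around each object;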

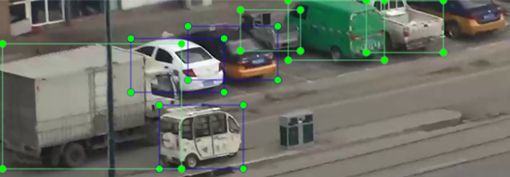

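b) When annotating an object, ignore occlusion by other objects, as shown in the figure below.

c) Do not miss any object. An object that is more than 50% cut off is not annotated.

4) Choosing labels

The example in this article uses:

a) Ordinary people: labeled "1"; includes pedestrians and people driving.

b) Uniformed personnel: labeled "2"

c) Cars and vans: labeled "4"

d) Trucks, lorries, and cargo tricycles: labeled "6"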

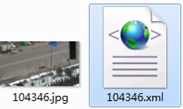

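5) Generated results

2. Converting the annotation format

Once annotation is finished, each image has an XML annotation file with the same base name, as shown in the figure.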
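To make the XML layout concrete, here is a minimal sketch that reads one annotation with Python's standard `xml.etree.ElementTree`. The sample XML is hypothetical (file name, image size, and boxes are made up for illustration); it only mirrors the Pascal VOC fields that the conversion script in this section relies on (`size`, `object/name`, `object/bndbox`).

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation, shaped like a Pascal VOC file; all values are made up.
SAMPLE_XML = """<annotation>
  <filename>000001.jpg</filename>
  <size><width>1920</width><height>1080</height><depth>3</depth></size>
  <object>
    <name>1</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>300</xmax><ymax>600</ymax></bndbox>
  </object>
  <object>
    <name>4</name>
    <bndbox><xmin>960</xmin><ymin>540</ymin><xmax>1440</xmax><ymax>810</ymax></bndbox>
  </object>
</annotation>"""


def read_voc(xml_text):
    """Return (width, height, [(label, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    width = int(size.find("width").text)
    height = int(size.find("height").text)
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        coords = [int(float(bndbox.find(tag).text))
                  for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((obj.find("name").text, *coords))
    return width, height, boxes


width, height, boxes = read_voc(SAMPLE_XML)
print("image size:", width, "x", height)
for box in boxes:
    print(box)
```

Running a reader like this over a few files is a quick way to spot labels that are not in your class list before converting.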

Then use the following code to convert the XML annotation files to txt format:

    import os
    import xml.etree.ElementTree as ET

    classes = ["1", "2", "4", "6"]  # names of all your classes

    myRoot = r'E:\yolov4\train_data'  # root directory of your project
    xmlRoot = myRoot + r'\Annotations'
    txtRoot = myRoot + r'\labels'
    imageRoot = myRoot + r'\JPEGImages'


    def getFile_name(file_dir):
        # Collect the base names (without extension) of all .jpg files
        L = []
        for root, dirs, files in os.walk(file_dir):
            print(files)
            for file in files:
                if os.path.splitext(file)[1] == '.jpg':
                    L.append(os.path.splitext(file)[0])  # L.append(os.path.join(root, file))
        return L


    def convert(size, box):
        # size = (image width, image height); box = (xmin, xmax, ymin, ymax) in pixels
        # Returns the normalized YOLO box (x_center, y_center, width, height)
        dw = 1. / size[0]
        dh = 1. / size[1]
        x = (box[0] + box[1]) / 2.0
        y = (box[2] + box[3]) / 2.0
        w = box[1] - box[0]
        h = box[3] - box[2]
        x = x * dw
        w = w * dw
        y = y * dh
        h = h * dh
        return (x, y, w, h)


    def convert_annotation(image_id):
        # Parse one VOC xml file and write the matching YOLO txt file
        tree = ET.parse(xmlRoot + '\\%s.xml' % (image_id))
        root = tree.getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)
        with open(txtRoot + '\\%s.txt' % (image_id), 'w') as out_file:  # generate the txt file
            for obj in root.iter('object'):
                cls = obj.find('name').text
                if cls not in classes:
                    continue
                cls_id = classes.index(cls)
                xmlbox = obj.find('bndbox')
                b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                     float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
                bb = convert((w, h), b)
                out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')


    # image_ids_train = open('D:/darknet-master/scripts/VOCdevkit/voc/list.txt').read().strip().split('\',\'')  # the list file holds only ids such as 000000 000001
    image_ids_train = getFile_name(imageRoot)
    # image_ids_val = open('/home/*****/darknet/scripts/VOCdevkit/voc/list').read().strip().split()

    os.makedirs(txtRoot, exist_ok=True)  # make sure the labels folder exists

    # Only the training set is generated here; adapt this to your own situation
    with open(myRoot + r'\train.txt', 'w') as list_file_train:  # train.txt is created if it does not exist
        for image_id in image_ids_train:
            list_file_train.write(imageRoot + '\\%s.jpg\n' % (image_id))
            convert_annotation(image_id)

    # list_file_val = open('boat_val.txt', 'w')
    # for image_id in image_ids_val:
    #     list_file_val.write('/home/*****/darknet/boat_detect/images/%s.jpg\n' % (image_id))
    #     convert_annotation(image_id)
    # list_file_val.close()

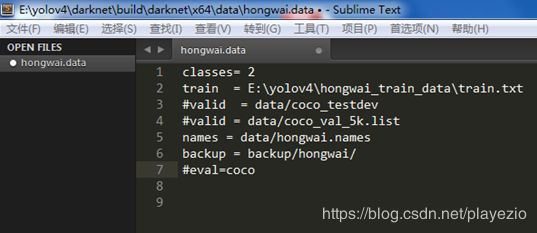
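As a worked example of what the `convert` function computes, the sketch below normalizes one pixel box to the YOLO format and converts it back. The function names `voc_to_yolo` and `yolo_to_voc`, the image size, and the box are illustrative assumptions, not part of the original script; the arithmetic is the same center/width/height normalization.

```python
def voc_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Pixel corner box -> normalized (x_center, y_center, width, height)."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


def yolo_to_voc(img_w, img_h, x_center, y_center, width, height):
    """Normalized YOLO box -> pixel corner box (xmin, ymin, xmax, ymax)."""
    box_w = width * img_w
    box_h = height * img_h
    xmin = x_center * img_w - box_w / 2.0
    ymin = y_center * img_h - box_h / 2.0
    return xmin, ymin, xmin + box_w, ymin + box_h


classes = ["1", "2", "4", "6"]

# Hypothetical object: a car (label "4") in a 1920x1080 image.
yolo_box = voc_to_yolo(1920, 1080, 960, 540, 1440, 810)
label_line = str(classes.index("4")) + " " + " ".join(str(v) for v in yolo_box)
print(label_line)  # 2 0.625 0.625 0.25 0.25

print(yolo_to_voc(1920, 1080, *yolo_box))  # (960.0, 540.0, 1440.0, 810.0)
```

Note that the class id written to the txt file is the index in `classes` (here 2), not the label text "4".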

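3. Changing the YOLO configuration

In the darknet-master\build\darknet\x64 folder, create a yolo-obj.cfg file (for YOLOv4 you can simply copy yolov4-custom.cfg from the cfg folder and rename it to yolo-obj.cfg). Whatever name you choose, use the same name in the training command later.

Search for "yolo"; it appears three times. Make the following changes for every [yolo] block:

filters=(5+classes)*3, computed from your own number of classes; this is set in the last [convolutional] block before each [yolo] block

classes: set to your own number of classes

random: defaults to 1; if your GPU has less than 4 GB of memory, setting it to 0 is recommended

[net]

max_batches = 2000*num_classes

steps=80%*max_batches,90%*max_batches

batch=32

subdivisions=32

[yolo]

mask = 6,7,8

anchors = 12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401

classes=2 (the value in this excerpt; use your own class count)

Last [convolutional] before each [yolo]

filters=(num_classes+5)*3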

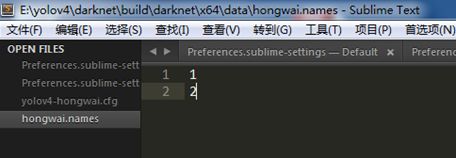
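The formulas above are easy to get wrong by hand, so here is a small sketch that computes them from the class count. The helper name `yolo_training_params` is hypothetical; it only encodes the rules stated in this section (filters, max_batches, and the two steps at 80% and 90%).

```python
def yolo_training_params(num_classes):
    """Apply the cfg rules from this section for a given number of classes."""
    filters = (num_classes + 5) * 3
    max_batches = 2000 * num_classes
    # Integer arithmetic keeps the 80% and 90% steps exact.
    steps = (max_batches * 8 // 10, max_batches * 9 // 10)
    return {"classes": num_classes, "filters": filters,
            "max_batches": max_batches, "steps": steps}


# The four-class labeling example ("1", "2", "4", "6") from earlier in the post.
four_class = yolo_training_params(4)
# The two-class value shown in the [yolo] excerpt.
two_class = yolo_training_params(2)

for params in (four_class, two_class):
    print("classes={classes}  filters={filters}  max_batches={max_batches}  "
          "steps={steps[0]},{steps[1]}".format(**params))
```

For four classes this gives filters=27, max_batches=8000 and steps=6400,7200; for two classes, filters=21, max_batches=4000 and steps=3200,3600.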

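In the darknet-master\build\darknet\x64\data\ folder, create an obj.names file and write in the class names of your own dataset, one per line.

In the same darknet-master\build\darknet\x64\data\ folder, create an obj.data file and write in the number of classes, the path of the training list file, the path of the class names file, and the folder where trained weights are saved.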
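As a sketch of what these two files might contain, the script below writes them for the four-class example. The helper `write_darknet_data_files` is hypothetical, the `train` and `backup` values are assumptions based on paths used elsewhere in this post, and the demo writes into a temporary folder rather than the real data\ folder. The key names (`classes`, `train`, `names`, `backup`) follow the usual darknet .data layout.

```python
import tempfile
from pathlib import Path


def write_darknet_data_files(data_dir, class_names, train_list, backup_dir):
    """Write obj.names (one class per line) and obj.data into data_dir."""
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)

    names_path = data_dir / "obj.names"
    names_path.write_text("\n".join(class_names) + "\n", encoding="utf-8")

    data_path = data_dir / "obj.data"
    lines = [
        f"classes = {len(class_names)}",
        f"train = {train_list}",
        "names = data/obj.names",  # relative to the x64 folder darknet.exe runs in
        f"backup = {backup_dir}",
    ]
    data_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return names_path, data_path


# Demo in a temporary folder; point data_dir at ...\x64\data for real use.
demo_dir = tempfile.mkdtemp()
names_path, data_path = write_darknet_data_files(
    demo_dir,
    class_names=["1", "2", "4", "6"],
    train_list=r"E:\yolov4\train_data\train.txt",  # assumed: output of the conversion script
    backup_dir="backup/",                          # assumed weights folder
)
print(names_path.read_text(encoding="utf-8"))
print(data_path.read_text(encoding="utf-8"))
```

The order of names in obj.names has to match the `classes` list used during label conversion, since the txt labels store only the index.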

Modify the Makefile in the darknet-master directory (this applies only when building with make).

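4. Start training

Run the commands from the darknet-master\build\darknet\x64 folder. Lines starting with # are earlier variants kept for reference.

#darknet.exe detector train data/obj.data yolov4-obj.cfg yolov4.weights 2>&1 | tee visualization/train_yolov4.log

#darknet.exe detector train data/hongwai.data yolov4-hongwai.cfg yolov4.weights

Continue from previously saved weights and compute mAP during training:

darknet.exe detector train data/hongwai.data yolov4-hongwai.cfg backup/hongwai/yolov4-hongwai_final.weights -map

Train from randomly initialized weights, without any pre-trained weights:

darknet.exe detector train data/hongwai.data yolov4-hongwai.cfg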
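Since `tee` is a Unix tool and may not be available in a plain Windows command prompt, one option is to launch training from Python and copy the output into a log file. This is only a sketch: `build_train_command` and `run_and_log` are hypothetical helpers, the log path is an assumption, and the actual launch is left commented out because it needs darknet.exe and your data files in place.

```python
import subprocess


def build_train_command(data_file, cfg_file, weights_file=None,
                        calc_map=False, exe="darknet.exe"):
    """Assemble the 'detector train' command line used in this post."""
    cmd = [exe, "detector", "train", data_file, cfg_file]
    if weights_file:           # omit to train from random initialization
        cmd.append(weights_file)
    if calc_map:               # appends darknet's -map flag
        cmd.append("-map")
    return cmd


def run_and_log(cmd, log_path):
    """Run cmd, echo its output to the console, and save it to log_path."""
    with open(log_path, "w", encoding="utf-8") as log:
        proc = subprocess.Popen(cmd, stdout=subprocess.PIPE,
                                stderr=subprocess.STDOUT, text=True)
        for line in proc.stdout:
            print(line, end="")
            log.write(line)
        return proc.wait()


resume_cmd = build_train_command(
    "data/hongwai.data", "yolov4-hongwai.cfg",
    weights_file="backup/hongwai/yolov4-hongwai_final.weights", calc_map=True)
scratch_cmd = build_train_command("data/hongwai.data", "yolov4-hongwai.cfg")
print(" ".join(resume_cmd))
print(" ".join(scratch_cmd))

# Uncomment to actually start training (assumed log location):
# run_and_log(scratch_cmd, "train_yolov4.log")
```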

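5. Training problems and fixes

1) CUDA out of memory

Increase subdivisions to 32 or higher.

2) Training exits right after loading the cfg and weights, and saves the last weights

The chosen pre-trained weights were trained on far more classes than this task (80 versus 2). The workaround used here is to skip the pre-trained weights and start from randomly initialized weights, i.e. darknet.exe detector train data/hongwai.data yolov4-hongwai.cfg

Another likely factor is that the full yolov4.weights file stores an iteration count that already exceeds max_batches, so training stops at once. The darknet README suggests starting custom training from the convolutional-only weights yolov4.conv.137 instead.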
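To see why raising subdivisions helps with the out-of-memory error, it may help to look at the arithmetic. As I understand darknet's cfg semantics, a batch is split into `subdivisions` mini-batches and the GPU only holds one mini-batch at a time. The helper below is a hypothetical illustration of that division, not darknet code.

```python
def images_per_pass(batch, subdivisions):
    """Images held on the GPU at once when a batch is split into mini-batches."""
    if subdivisions < 1 or subdivisions > batch:
        raise ValueError("subdivisions must be between 1 and batch")
    return batch // subdivisions


# With batch=32 as in the [net] settings of this post:
for subdivisions in (8, 16, 32):
    print(f"batch=32 subdivisions={subdivisions} -> "
          f"{images_per_pass(32, subdivisions)} image(s) per pass")
```

With batch=32 and subdivisions=32, only one image is processed at a time, which is the lowest memory setting for that batch size.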

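6. C++ inference

- Locate the yolo_cpp_dll files generated during compilation

1) Dynamic link libraries (all in the darknet-master->build->darknet->x64 directory)

(1) yolo_cpp_dll.lib

(2) yolo_cpp_dll.dll

(3) pthreadGC2.dll

(4) pthreadVC2.dll

2) OpenCV libraries

(1) opencv_ffmpeg401_64.dll (used for video playback)

(2) opencv_world401.dll

3) YOLO model files

(1) coco.names (in the darknet-master->build->darknet->x64->data directory; holds the COCO dataset class names)

(2) yolov4.cfg (in the darknet-master->build->darknet->x64->cfg directory; the YOLOv4 model configuration file)

(3) yolov4.weights (downloaded and placed in the darknet-master->build->darknet->x64 directory; the YOLOv4 pre-trained weights file)

4) Header file

(1) yolo_v2_class.hpp (in the darknet-master->include directory; the YOLO header needed to call the dynamic link library)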
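All of the files listed above have to end up in one project folder, and a missing DLL tends to show up only as a runtime error. A small check like the following can confirm that nothing was forgotten. It is a sketch: `missing_files` is a hypothetical helper, the file list is simply copied from this section, and the demo runs against a temporary folder with two placeholder files.

```python
import tempfile
from pathlib import Path

# File names taken from the list in this section.
REQUIRED_FILES = [
    "yolo_cpp_dll.lib", "yolo_cpp_dll.dll", "pthreadGC2.dll", "pthreadVC2.dll",
    "opencv_ffmpeg401_64.dll", "opencv_world401.dll",
    "coco.names", "yolov4.cfg", "yolov4.weights",
    "yolo_v2_class.hpp",
]


def missing_files(project_dir, required=REQUIRED_FILES):
    """Return the required file names that are not present in project_dir."""
    project_dir = Path(project_dir)
    return [name for name in required if not (project_dir / name).is_file()]


# Demo: a temporary folder that contains only two of the required files.
demo_dir = Path(tempfile.mkdtemp())
(demo_dir / "yolov4.cfg").write_text("", encoding="utf-8")
(demo_dir / "coco.names").write_text("", encoding="utf-8")

missing = missing_files(demo_dir)
print("missing:", ", ".join(missing) if missing else "nothing")
```

For real use, pass the folder that contains main.cpp instead of the temporary demo folder.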

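In Visual Studio, create a new empty project and add main.cpp under Source Files. Put all the files from the previous step into the same folder as main.cpp, together with a test video for object detection named test.mp4, then add the following code to main.cpp: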

    #include <algorithm>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <vector>

    #ifdef _WIN32
    #define OPENCV
    #define GPU
    #endif

    #include "yolo_v2_class.hpp" // header of the YOLO dynamic link library
    #include <opencv2/opencv.hpp>
    #include "opencv2/highgui/highgui.hpp"

    #pragma comment(lib, "opencv_world401.lib") // link the OpenCV library
    #pragma comment(lib, "yolo_cpp_dll.lib") // link the YOLO dynamic link library

    // The next two functions come from yolo_console_dll.sln, with modifications

    // Draw every detection box and its class name onto mat_img
    void draw_boxes(cv::Mat mat_img, std::vector<bbox_t> result_vec, std::vector<std::string> obj_names)
    {
        for (auto &i : result_vec) {
            // obj_id_to_color() is provided by yolo_v2_class.hpp when OPENCV is defined
            cv::Scalar color = obj_id_to_color(i.obj_id);
            cv::rectangle(mat_img, cv::Rect(i.x, i.y, i.w, i.h), color, 2);
            if (obj_names.size() > i.obj_id) {
                std::string obj_name = obj_names[i.obj_id];
                cv::Size const text_size = cv::getTextSize(obj_name, cv::FONT_HERSHEY_COMPLEX_SMALL, 1.2, 2, 0);
                int const max_width = std::max(text_size.width, (int)i.w + 2);
                // Filled label background above the box, clamped to the image borders
                cv::rectangle(mat_img, cv::Point2f(std::max((int)i.x - 1, 0), std::max((int)i.y - 30, 0)),
                    cv::Point2f(std::min((int)i.x + max_width, mat_img.cols - 1), std::min((int)i.y, mat_img.rows - 1)),
                    color, cv::FILLED, 8, 0);
                cv::putText(mat_img, obj_name, cv::Point2f(i.x, i.y - 10), cv::FONT_HERSHEY_COMPLEX_SMALL, 1.2, cv::Scalar(0, 0, 0), 2);
            }
        }
    }

    // Read the class names, one per line
    std::vector<std::string> objects_names_from_file(std::string const filename) {
        std::ifstream file(filename);
        std::vector<std::string> file_lines;
        if (!file.is_open()) return file_lines;
        for (std::string line; getline(file, line);) file_lines.push_back(line);
        std::cout << "object names loaded \n";
        return file_lines;
    }

    int main()
    {
        std::string names_file = "coco.names";
        std::string cfg_file = "yolov4.cfg";
        std::string weights_file = "yolov4.weights";

        Detector detector(cfg_file, weights_file, 0); // initialize the detector on GPU 0

        //std::vector<std::string> obj_names = objects_names_from_file(names_file); // load the class names
        // Alternatively, the following four lines also read the class names file
        std::vector<std::string> obj_names;
        std::ifstream ifs(names_file.c_str());
        std::string line;
        while (getline(ifs, line)) obj_names.push_back(line);

        // Check that the class names file was read successfully
        for (size_t i = 0; i < obj_names.size(); i++)
        {
            std::cout << obj_names[i] << std::endl;
        }

        cv::VideoCapture capture;
        capture.open("test.mp4");
        if (!capture.isOpened())
        {
            std::cerr << "Failed to open the video file" << std::endl;
            return -1; // nothing to process without the video
        }

        cv::namedWindow("Demo", cv::WINDOW_NORMAL);
        cv::Mat frame;
        while (true)
        {
            capture >> frame;
            if (frame.empty())
            {
                break; // end of the video
            }
            std::vector<bbox_t> result_vec = detector.detect(frame);
            draw_boxes(frame, result_vec, obj_names);
            cv::imshow("Demo", frame);
            cv::waitKey(5);
        }
        return 0;