[Deep Learning] Keras Study Notes (1): Building a Multilayer Perceptron

Regression problem:

import numpy as np

import matplotlib.pyplot as plt

from tensorflow import keras

from tensorflow.keras import layers

x = np.sort(5 * np.random.rand(40, 1), axis=0)

y = np.sin(x).ravel()

y[::5] += 3 * (0.5 - np.random.rand(8))

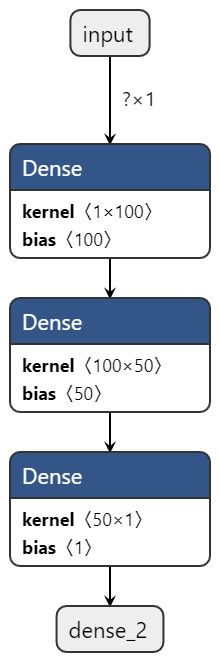

model = keras.Sequential()

model.add(layers.Dense(100, activation="relu", input_dim=1))

model.add(layers.Dense(50, activation="relu"))

model.add(layers.Dense(1))

model.save("model.h5")

model.compile(optimizer="adam", loss="mse")

model.fit(x, y, epochs=1000)

y_pre = model.predict(x)

plt.scatter(x, y, color='c', edgecolors='k')

plt.plot(x, y_pre, color='m')

plt.xlabel("x")

plt.ylabel("y")

plt.show()

import netron

import time

netron.start("model.h5")

time.sleep(60)

netron.stop()

import numpy as np

from tensorflow import keras

from tensorflow.keras import layers

from sklearn.model_selection import train_test_split

x = np.sort(5 * np.random.rand(100, 1), axis=0)

y = np.sin(x).ravel()

y[::5] += 3 * (0.5 - np.random.rand(20))

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=42)

model = keras.Sequential()

model.add(layers.Dense(100, activation="relu", input_dim=1))

model.add(layers.Dense(50, activation="relu"))

model.add(layers.Dense(1))

model.compile(optimizer="adam", loss="mse")

model.fit(x_train, y_train, epochs=1000)

model.save("model.h5")

import numpy as np

from tensorflow.keras.models import load_model

from sklearn.model_selection import train_test_split

x = np.sort(5 * np.random.rand(100, 1), axis=0)

y = np.sin(x).ravel()

y[::5] += 3 * (0.5 - np.random.rand(20))

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=42)

model = load_model("model.h5")

loss = model.evaluate(x_test, y_test)

print("Test loss: ", loss)

30/1 [==============================] - 0s 2ms/sample - loss: 0.1987

Test loss: 0.19867388904094696

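Dense() computes output = activation(dot(input, kernel) + bias), which should look familiar to anyone who has studied deep learning. Each Dense call builds a single layer, so a multi-layer network needs several Dense calls. The units argument is the number of neurons in the layer, which is also the dimension of the layer's output, and activation is the layer's activation function. compile then configures the model with an optimizer (the gradient-descent variant to use) and a loss function. fit and predict work much like the methods of the same name on sklearn models; epochs in fit is the number of full passes over the training data.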
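To make the formula concrete, here is a minimal NumPy sketch of the forward pass through the 100-50-1 network used above. It is not how Keras implements Dense internally; the helper names (`relu`, `dense_forward`) and the randomly initialised weights are assumptions made for illustration only.

```python
import numpy as np

def relu(z):
    """Element-wise ReLU activation."""
    return np.maximum(z, 0.0)

def dense_forward(inputs, kernel, bias, activation=None):
    """output = activation(dot(input, kernel) + bias); no activation means linear."""
    z = np.dot(inputs, kernel) + bias
    return z if activation is None else activation(z)

rng = np.random.default_rng(0)
x_demo = rng.random((4, 1))  # 4 samples, 1 feature (input_dim=1)

# Hypothetical weights: kernel shape is (input_dim, units), bias shape is (units,)
kernel1, bias1 = rng.normal(size=(1, 100)), np.zeros(100)
kernel2, bias2 = rng.normal(size=(100, 50)), np.zeros(50)
kernel3, bias3 = rng.normal(size=(50, 1)), np.zeros(1)

h1 = dense_forward(x_demo, kernel1, bias1, relu)  # like Dense(100, activation="relu")
h2 = dense_forward(h1, kernel2, bias2, relu)      # like Dense(50, activation="relu")
out = dense_forward(h2, kernel3, bias3)           # like Dense(1), linear output

print(h1.shape, h2.shape, out.shape)
```

The shapes show why units is also the output dimension: each layer maps (samples, previous units) to (samples, units).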

You can also change the learning rate of the Adam optimizer; the default learning rate is 0.001:

tf.keras.optimizers.Adam(
    learning_rate=0.001,
    beta_1=0.9,
    beta_2=0.999,
    epsilon=1e-07,
    amsgrad=False,
    name="Adam",
    **kwargs
)
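To show what learning_rate, beta_1, beta_2 and epsilon do, here is a rough NumPy sketch of a single Adam update in its textbook form. The function name `adam_step` and the toy objective f(w) = w² are assumptions for illustration; the actual Keras implementation may differ in details such as where epsilon is applied, and amsgrad is not covered here.

```python
import numpy as np

def adam_step(param, grad, m, v, t,
              learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-7):
    """One textbook Adam update; t is the 1-based step counter."""
    m = beta_1 * m + (1 - beta_1) * grad       # running mean of gradients
    v = beta_2 * v + (1 - beta_2) * grad ** 2  # running mean of squared gradients
    m_hat = m / (1 - beta_1 ** t)              # bias correction for the zero start
    v_hat = v / (1 - beta_2 ** t)
    param = param - learning_rate * m_hat / (np.sqrt(v_hat) + epsilon)
    return param, m, v

# A single step with the default learning rate moves the parameter by about 0.001.
w1, m1, v1 = adam_step(np.array([1.0]), np.array([2.0]),
                       np.zeros(1), np.zeros(1), t=1)

# Minimise the toy objective f(w) = w**2 (gradient 2*w) with a larger learning rate.
w, m, v = np.array([1.0]), np.zeros(1), np.zeros(1)
for t in range(1, 501):
    w, m, v = adam_step(w, 2 * w, m, v, t, learning_rate=0.01)

print(w1, w)
```

Because the update is normalised by the running gradient magnitude, the early steps are roughly learning_rate in size regardless of how large the gradient is, which is why raising learning_rate from 0.001 to 0.01 speeds up the first phase of training noticeably.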

import numpy as np

import matplotlib.pyplot as plt

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.optimizers import Adam

x = np.sort(5 * np.random.rand(40, 1), axis=0)

y = np.sin(x).ravel()

y[::5] += 3 * (0.5 - np.random.rand(8))

model = keras.Sequential()

model.add(layers.Dense(100, activation="relu", input_dim=1))

model.add(layers.Dense(50, activation="relu"))

model.add(layers.Dense(1))

# Change the learning rate

adam = Adam(learning_rate=0.01)

model.compile(optimizer=adam, loss="mse")

model.fit(x, y, epochs=1000)

y_pre = model.predict(x)

plt.scatter(x, y, color='c', edgecolors='k')

plt.plot(x, y_pre, color='m', linewidth=2)

plt.xlabel("x")

plt.ylabel("y")

plt.show()

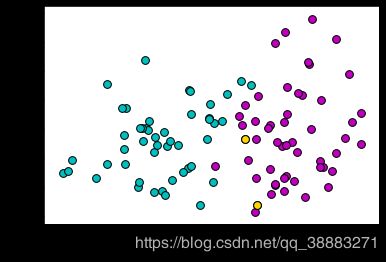

Binary classification problem:

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

import numpy as np

import matplotlib.pyplot as plt

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.optimizers import Adam

X, y = make_classification(n_features=2, n_redundant=0, n_informative=2,
                           random_state=1, n_clusters_per_class=1)

rng = np.random.RandomState(2)

X += 2 * rng.uniform(size=X.shape)

data = linearly_separable = (X, y)

x, y = data

m, n = x.shape

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=.3, random_state=42)

p, q = x_test.shape

model = keras.Sequential()

model.add(layers.Dense(100, input_dim=2))

model.add(layers.Dense(50, activation="relu"))

model.add(layers.Dense(1, activation="sigmoid"))

model.compile(optimizer="adam", loss="binary_crossentropy")

model.fit(x_train, y_train, epochs=1000)

y_pre = model.predict_classes(x_test)

print(y_pre)

print(y_test)

# Plot every sample, coloured by its true class
for i in range(m):
    if y[i] == 1:
        plt.scatter(x[i, 0], x[i, 1], color='c', edgecolors='k', s=60)
    else:
        plt.scatter(x[i, 0], x[i, 1], color='m', edgecolors='k', s=60)

# Highlight misclassified test samples in gold
for i in range(p):
    if y_pre[i] != y_test[i]:
        plt.scatter(x_test[i, 0], x_test[i, 1], color='gold', edgecolors='k', s=60)

plt.xlabel("x1")

plt.ylabel("x2")

plt.show()

In the end, only two samples in the test set were misclassified.
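Rather than counting the gold points in the plot, the number of errors can be computed directly. The sketch below uses small made-up arrays (`y_pre_demo`, `y_test_demo`) in place of the real predictions, since the actual values are not shown here. Note that the predictions have shape (n, 1) while the labels have shape (n,), so they need to be flattened before comparing; otherwise NumPy broadcasts the comparison to an (n, n) matrix.

```python
import numpy as np

# Hypothetical stand-ins for y_pre (shape (n, 1)) and y_test (shape (n,))
y_pre_demo = np.array([[1], [0], [1], [1], [0]])
y_test_demo = np.array([1, 0, 0, 1, 1])

# Pitfall: comparing (n, 1) with (n,) broadcasts to an (n, n) matrix
broadcast_shape = (y_pre_demo != y_test_demo).shape

# Flatten the predictions first, then compare element by element
wrong = y_pre_demo.ravel() != y_test_demo
n_wrong = int(wrong.sum())
accuracy = 1 - n_wrong / len(y_test_demo)

print(broadcast_shape)         # (5, 5)
print(np.flatnonzero(wrong))   # indices of the misclassified samples: [2 4]
print(n_wrong, accuracy)
```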

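There are three methods for getting predictions on the test set from a trained model: predict, predict_classes, and predict_proba. predict is generally used for regression; what it returns is simply the output of the network's last layer. The other two are used for classification. Here is the source code of predict_classes and predict_proba: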

def predict_classes(self, x, batch_size=32, verbose=0):
    """Generate class predictions for the input samples.

    The input samples are processed batch by batch.

    Arguments:
        x: input data, as a Numpy array or list of Numpy arrays
            (if the model has multiple inputs).
        batch_size: integer.
        verbose: verbosity mode, 0 or 1.

    Returns:
        A numpy array of class predictions.
    """
    proba = self.predict(x, batch_size=batch_size, verbose=verbose)
    if proba.shape[-1] > 1:
        return proba.argmax(axis=-1)
    else:
        return (proba > 0.5).astype('int32')

def predict_proba(self, x, batch_size=32, verbose=0):
    """Generates class probability predictions for the input samples.

    The input samples are processed batch by batch.

    Arguments:
        x: input data, as a Numpy array or list of Numpy arrays
            (if the model has multiple inputs).
        batch_size: integer.
        verbose: verbosity mode, 0 or 1.

    Returns:
        A Numpy array of probability predictions.
    """
    preds = self.predict(x, batch_size, verbose)
    if preds.min() < 0. or preds.max() > 1.:
        logging.warning('Network returning invalid probability values. '
                        'The last layer might not normalize predictions '
                        'into probabilities '
                        '(like softmax or sigmoid would).')
    return preds

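Both methods call predict internally. predict_classes first checks the size of the last dimension (the number of columns) of the value returned by predict, i.e. the output of the network's last layer. If it is 1, the problem is treated as binary classification; if it is greater than 1, as multi-class classification. For binary classification the sigmoid output is thresholded at 0.5: values above 0.5 become class 1 and all others class 0. For multi-class problems the last layer applies softmax, which turns the outputs into the probability of the sample belonging to each class, so the function returns the index of the maximum value in each row (i.e. the index of the highest probability).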

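predict_proba simply returns whatever predict returns. That is only meaningful when the network is a classifier whose last layer is a sigmoid or softmax; if any value falls outside [0, 1], the function just logs a warning and returns the values anyway.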
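The same logic is easy to reproduce on the raw output of predict. This is useful because, as far as I know, predict_classes and predict_proba were removed from Sequential in TensorFlow 2.6, so on newer versions you would call model.predict and convert the result yourself. Below is a minimal NumPy sketch; the function name `classes_from_probabilities` and the sample arrays are assumptions for illustration, standing in for real model outputs.

```python
import numpy as np

def classes_from_probabilities(proba, threshold=0.5):
    """Mirror the predict_classes logic on an array returned by predict."""
    proba = np.asarray(proba)
    if proba.shape[-1] > 1:
        # Multi-class (softmax output): index of the largest probability per row
        return proba.argmax(axis=-1)
    # Binary (single sigmoid output): threshold at 0.5 by default
    return (proba > threshold).astype("int32")

# Hypothetical outputs standing in for model.predict(x_test)
sigmoid_out = np.array([[0.9], [0.2], [0.51]])
softmax_out = np.array([[0.1, 0.7, 0.2],
                        [0.8, 0.1, 0.1]])

binary_classes = classes_from_probabilities(sigmoid_out)
multi_classes = classes_from_probabilities(softmax_out)

print(binary_classes.ravel())  # [1 0 1]
print(multi_classes)           # [1 0]
```

Note that the binary result keeps the (n, 1) shape of the sigmoid output, while the multi-class result is a flat array of length n.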