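PyTorch Study Notes (14): A First Look at the PyTorch 1.0 C++ Frontend

Last December I tried out the PyTorch 1.0 C++ frontend. At the time, the PyTorch developer in charge of the C++ frontend was Peter Goldsborough. The frontend was not yet stable, and the demo in the official documentation would not run.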

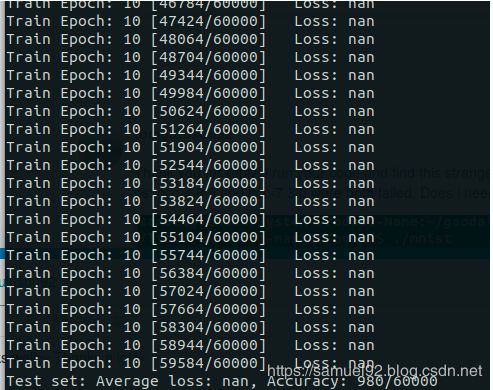

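To save later readers from falling into the same pit, this post walks step by step through training the C++ MNIST model with PyTorch 1.0.

0. The Philosophy of the PyTorch C++ Interface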

PyTorch’s C++ frontend was designed with the idea that the Python frontend is great, and should be used when possible; but in some settings, performance and portability requirements make the use of the Python interpreter infeasible.

For example, Python is a poor choice for low latency, high performance or multithreaded environments, such as video games or production servers. The goal of the C++ frontend is to address these use cases, while not sacrificing the user experience of the Python frontend.

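Clearly, the goal of the C++ frontend is to offer an interface close to the Python one that can be used where the overhead of the Python interpreter is unacceptable, without sacrificing the development experience (that is, it works much like the Python implementation, so users can pick it up easily).

Accordingly, the C++ frontend was developed around two philosophies: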

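① Closely model the Python frontend in its design

The design stays as close as possible to the Python frontend, which means the C++ frontend follows it in both functionality and conventions. The two frontends may differ in places (e.g., where the C++ frontend drops deprecated features or fixes "warts" in the Python frontend), but the guarantee is that porting a Python model to C++ should only require translating language features, not modifying functionality or behavior.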

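② Prioritize flexibility and user-friendliness over micro-optimization

In C++ you can usually get highly optimized code, at the cost of code that is harder to write and debug (typical of a statically typed language). But flexibility and dynamism are the soul of PyTorch, so the C++ frontend tries to preserve them, which means that in some cases it may sacrifice performance to stay flexible and dynamic.

The aim is for researchers who do not write C++ for a living to be able to use the C++ frontend easily.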

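Note: the Python frontend is not necessarily slower than the C++ one!

The Python frontend already hands all computationally expensive operations to C++, because those operations account for most of a program's running time. If you prefer writing Python and are in a position to do so, the recommendation is to use the Python interface to PyTorch. But whatever your reason for choosing it (whether you are a C++ programmer or, like me, simply want to try a new PyTorch 1.0 feature), the C++ frontend is, like the Python one, convenient, flexible, friendly, and intuitive.

Finally, the authors' view is that the two frontends serve different goals but work hand in hand, and neither unconditionally replaces the other.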

1. The PyTorch C++ MNIST Example

At first I took the example from the official site (C++ Mnist minimal example), but it would not run! To get it working, I asked on the official forum.

【PyTorch1.0】 C++ mnist demo Error

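The problem was eventually solved. Below I post the code and environment, together with the pitfalls I hit along the way.

1.1 Environment

- gcc: 5.5+ (I started with gcc 4.8.5, which produced a segmentation fault!)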

- g++: 5.5+

- cuda: 9.0

- system: ubuntu18.04

- libtorch: https://download.pytorch.org/libtorch/nightly/cu90/libtorch-shared-with-deps-latest.zip

- cmake: 3.13

1.2 CMakeLists.txt

    cmake_minimum_required(VERSION 3.1 FATAL_ERROR)
    project(mnist)

    SET(CMAKE_BUILD_TYPE "Release")
    SET(CMAKE_CXX_FLAGS_RELEASE "$ENV{CXXFLAGS} -O3 -Wall")

    find_package(Torch REQUIRED)

    option(DOWNLOAD_MNIST "Download the MNIST dataset from the internet" ON)
    if (DOWNLOAD_MNIST)
      message(STATUS "Downloading MNIST dataset")
      execute_process(
        COMMAND python ${CMAKE_CURRENT_LIST_DIR}/download_mnist.py
          -d ${CMAKE_BINARY_DIR}/data
        ERROR_VARIABLE DOWNLOAD_ERROR)
      if (DOWNLOAD_ERROR)
        message(FATAL_ERROR "Error downloading MNIST dataset: ${DOWNLOAD_ERROR}")
      endif()
    endif()

    add_executable(mnist mnist.cpp)
    target_compile_features(mnist PUBLIC cxx_range_for)
    target_link_libraries(mnist ${TORCH_LIBRARIES})

1.3 The download_mnist.py script

This script downloads the MNIST dataset that the example needs.

    from __future__ import division
    from __future__ import print_function

    import argparse
    import gzip
    import os
    import sys
    import urllib

    try:
        from urllib.error import URLError
        from urllib.request import urlretrieve
    except ImportError:
        from urllib2 import URLError
        from urllib import urlretrieve

    RESOURCES = [
        'train-images-idx3-ubyte.gz',
        'train-labels-idx1-ubyte.gz',
        't10k-images-idx3-ubyte.gz',
        't10k-labels-idx1-ubyte.gz',
    ]


    def report_download_progress(chunk_number, chunk_size, file_size):
        if file_size != -1:
            percent = min(1, (chunk_number * chunk_size) / file_size)
            bar = '#' * int(64 * percent)
            sys.stdout.write('\r0% |{:<64}| {}%'.format(bar, int(percent * 100)))


    def download(destination_path, url, quiet):
        if os.path.exists(destination_path):
            if not quiet:
                print('{} already exists, skipping ...'.format(destination_path))
        else:
            print('Downloading {} ...'.format(url))
            try:
                hook = None if quiet else report_download_progress
                urlretrieve(url, destination_path, reporthook=hook)
            except URLError:
                raise RuntimeError('Error downloading resource!')
            finally:
                if not quiet:
                    # Just a newline.
                    print()


    def unzip(zipped_path, quiet):
        unzipped_path = os.path.splitext(zipped_path)[0]
        if os.path.exists(unzipped_path):
            if not quiet:
                print('{} already exists, skipping ... '.format(unzipped_path))
            return
        with gzip.open(zipped_path, 'rb') as zipped_file:
            with open(unzipped_path, 'wb') as unzipped_file:
                unzipped_file.write(zipped_file.read())
                if not quiet:
                    print('Unzipped {} ...'.format(zipped_path))


    def main():
        parser = argparse.ArgumentParser(
            description='Download the MNIST dataset from the internet')
        parser.add_argument(
            '-d', '--destination', default='.', help='Destination directory')
        parser.add_argument(
            '-q',
            '--quiet',
            action='store_true',
            help="Don't report about progress")
        options = parser.parse_args()

        if not os.path.exists(options.destination):
            os.makedirs(options.destination)

        try:
            for resource in RESOURCES:
                path = os.path.join(options.destination, resource)
                url = 'http://yann.lecun.com/exdb/mnist/{}'.format(resource)
                download(path, url, options.quiet)
                unzip(path, options.quiet)
        except KeyboardInterrupt:
            print('Interrupted')


    if __name__ == '__main__':
        main()
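If training later fails while loading the data, it can help to confirm that the unzipped files are intact before suspecting the C++ side. The following is a minimal sketch, not part of the original script: it assumes the standard IDX layout of the MNIST files (a big-endian 32-bit magic number, 2051 for image files and 2049 for label files, followed by the item count and, for images, the row and column counts). The helper name `read_idx_header` is hypothetical.

```python
import struct

# Magic numbers of the IDX format used by the MNIST files.
IMAGE_MAGIC = 2051  # *-images-idx3-ubyte
LABEL_MAGIC = 2049  # *-labels-idx1-ubyte


def read_idx_header(raw):
    """Parse the header of an unzipped MNIST file given as bytes.

    Returns (kind, count, rows, cols); rows and cols are None for labels.
    This helper is hypothetical and only illustrates the check.
    """
    if len(raw) < 8:
        raise ValueError('file too short to hold an IDX header')
    # Both fields are big-endian 32-bit integers.
    magic, count = struct.unpack('>ii', raw[:8])
    if magic == LABEL_MAGIC:
        return ('labels', count, None, None)
    if magic == IMAGE_MAGIC:
        if len(raw) < 16:
            raise ValueError('image file header is truncated')
        rows, cols = struct.unpack('>ii', raw[8:16])
        return ('images', count, rows, cols)
    raise ValueError('unexpected magic number: {}'.format(magic))


# Synthetic header standing in for the first 16 bytes of an image file.
sample = struct.pack('>iiii', IMAGE_MAGIC, 60000, 28, 28)
print(read_idx_header(sample))
```

In practice one would read the first 16 bytes of each unzipped file in the data directory and pass them to the helper; a `ValueError` then points to a broken or incomplete download rather than a problem in the training code.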

1.4 mnist.cpp

The key training code:

    #include

1.5 Build & Run

Note that the three files above must sit in the same directory. Create a build folder inside that directory and run the usual cmake .. from within it.

2. References
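The build steps can be scripted so they are easy to repeat. The sketch below is an illustration under several assumptions: the libtorch archive has been unpacked somewhere on disk and CMake needs `CMAKE_PREFIX_PATH` to find it for `find_package(Torch REQUIRED)`, the default generator produces Makefiles so that `make` does the compile, and the function names `build_commands` and `build` are hypothetical. The `DOWNLOAD_MNIST` switch is the option defined in the CMakeLists.txt above.

```python
import os
import subprocess


def build_commands(libtorch_dir, download_mnist=True):
    """Return the commands to run from inside the build directory."""
    configure = [
        'cmake',
        # Assumption: libtorch was unpacked at libtorch_dir.
        '-DCMAKE_PREFIX_PATH={}'.format(os.path.abspath(libtorch_dir)),
        # Option defined in CMakeLists.txt; OFF skips the dataset download.
        '-DDOWNLOAD_MNIST={}'.format('ON' if download_mnist else 'OFF'),
        '..',
    ]
    # Assumption: the generator is Unix Makefiles.
    return [configure, ['make']]


def build(source_dir, libtorch_dir, download_mnist=True):
    """Create source_dir/build and run the configure and compile steps."""
    build_dir = os.path.join(source_dir, 'build')
    if not os.path.exists(build_dir):
        os.makedirs(build_dir)
    for command in build_commands(libtorch_dir, download_mnist):
        subprocess.check_call(command, cwd=build_dir)
```

Calling `build('.', '/path/to/libtorch')` from the directory that holds the three files would configure and compile the project, with `/path/to/libtorch` standing in for wherever the archive was actually unpacked. With the download option left on, the dataset lands in the data folder under build, since the CMakeLists.txt passes `${CMAKE_BINARY_DIR}/data` to the script.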

[1] PyTorch1.0: C++ mnist demo Error

[2] TonyzBi: libtorch-mnist example