Sqoop Overview and Basic Practice & Installing sqoop-1.4.6-cdh5.7.0

Contents

- Background

- Differences between Sqoop1 and Sqoop2

- Installing sqoop-1.4.6-cdh5.7.0

- Importing and exporting simple data with Sqoop

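Background

When you want to extract data from an RDBMS into Hadoop, there are several options:

1) Read a file, then load it into a Hive table

2) Write a shell script that pulls the data from the RDBMS, then upload it to HDFS with the HDFS shell put command

3) Use MapReduce to write the RDBMS data to HDFS; this is the most common approach

MapReduce can also be used in the other direction, to load data from Hadoop into an RDBMS.

However, these ways of importing and exporting data have obvious problems:

1. Writing MapReduce jobs is tedious

2. Efficiency is low (for example, every new business line requires yet another MapReduce job, so reuse is poor)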

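Given these problems, how should an extraction framework be designed?

1. RDBMS

Required: driver/username/password/url/database/table/sql, etc.

2. Hadoop

Required: HDFS path/field delimiter/number of mappers/number of reducers (the number of reducers determines how many output files are written)

3. Requirement 1: when a new business line is onboarded, only the parameters for that business line need to be passed to MapReduce

a. Submit the job with hadoop jar

b. Pass in parameters dynamically according to the needs of each business line

4. Requirement 2: simple and convenient to use

WebUI + DB Configuration ==> UI/UE

Each business line becomes one row in a table, everything is configured in the database, and users only need to work in the UI.

The Sqoop framework grew out of these problems and this vision.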
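
The idea of "one parameter set per business line" can be sketched in shell. This is only an illustration of the design goal, not how Sqoop is implemented: build_import_cmd and the pipe-separated configuration format are hypothetical, the second configuration row uses a made-up target directory, and the commands are printed rather than executed.

```shell
# Sketch only: turn one line of configuration per business line into a
# Sqoop import command. The helper name and the config format are hypothetical.
build_import_cmd() {
    # $1 = JDBC URL, $2 = user, $3 = table, $4 = HDFS target dir, $5 = mappers
    # -P makes Sqoop prompt for the password instead of taking it as an argument
    printf 'sqoop import --connect %s --username %s -P --table %s --target-dir %s --num-mappers %s\n' \
        "$1" "$2" "$3" "$4" "$5"
}

# Each row is url|user|table|target|mappers; adding a business line means
# adding a row, not writing a new MapReduce job.
while IFS='|' read -r url user table target mappers; do
    build_import_cmd "$url" "$user" "$table" "$target" "$mappers"
done <<'EOF'
jdbc:mysql://localhost:3306/alter_db|root|idxs|/datas/sqoop/input|1
jdbc:mysql://localhost:3306/alter_db|root|tbls|/datas/sqoop/tbls|1
EOF
```

In a real setup the rows would come from the configuration database mentioned above rather than from a here-document.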

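Differences between Sqoop1 and Sqoop2

Sqoop1 and Sqoop2 are not compatible with each other. Compared with Sqoop1, Sqoop2 improves on the following:

- Introduces a Sqoop server that centrally manages connectors and related components

- Multiple access methods: CLI, Web UI, REST API

- Introduces role-based security

Feature comparison between Sqoop1 and Sqoop2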

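| Feature | Sqoop1 | Sqoop2 |
| --- | --- | --- |
| Connectors for all major RDBMS | Supported | Not supported. Workaround: use the generic JDBC connector, which has been tested against Microsoft SQL Server, PostgreSQL, MySQL, and Oracle. This connector should work with any other JDBC-compliant database, but its performance may not match that of the specialized connectors in Sqoop |
| Kerberos security integration | Supported | Not supported |
| Data transfer from RDBMS to Hive or HBase | Supported | Not supported. Workaround: follow this two-step approach. 1. Import the data from the RDBMS into HDFS. 2. Load the data into Hive or HBase manually with the appropriate tools and commands (for example, the LOAD DATA statement in Hive) |
| Data transfer from Hive or HBase to RDBMS | Not supported. Workaround: follow this two-step approach. 1. Extract the data from Hive or HBase into HDFS (as text or Avro files). 2. Use Sqoop to export the output of the previous step to the RDBMS | Not supported. Follow the same workaround as for Sqoop1 |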

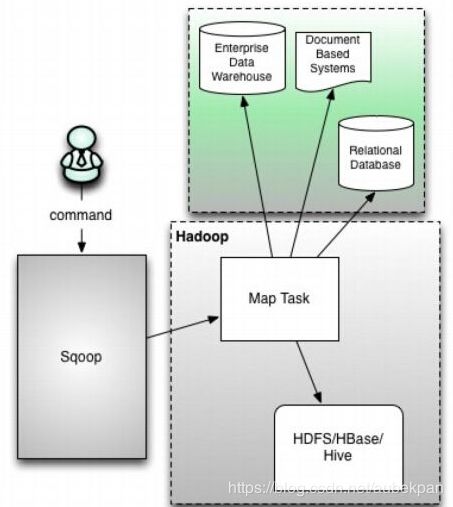

Sqoop1 architecture diagram

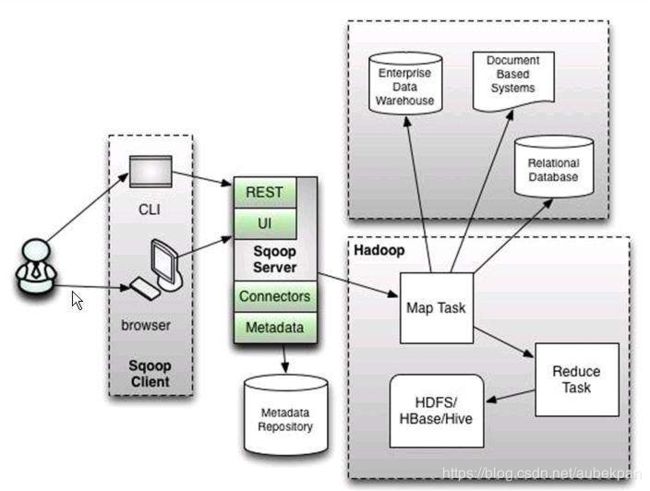

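Sqoop2 architecture diagram

With Sqoop1, jobs are submitted directly from the Sqoop client, and access is through the CLI console.

Sqoop2 introduces a Sqoop server for centralized management and can also be accessed interactively through a web UI.

Sqoop1 is relatively simple to deploy, but it cannot support all data types and its security mechanism is comparatively weak.

Sqoop2 offers more ways to interact, installs all connections on the Sqoop server, has a more complete permission management mechanism, and has standardized connectors that are responsible only for reading and writing data. However, it is tedious to configure and deploy, and its compatibility is poor.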

Installing sqoop-1.4.6-cdh5.7.0

- Download sqoop-1.4.6-cdh5.7.0

1. Extract the archive to the installation directory

[hadoop@192 sqoop-1.4.6-cdh5.7.0]$ pwd

/home/hadoop/app/sqoop-1.4.6-cdh5.7.0

2. Set the environment variables

export SQOOP_HOME=/home/hadoop/app/sqoop-1.4.6-cdh5.7.0

export PATH=$PATH:$SQOOP_HOME/bin
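
The two export lines only apply to the current shell unless they are stored in a profile file. A minimal sketch of adding them once, assuming ~/.bash_profile as the profile (the file actually used is not stated here); add_sqoop_env is a hypothetical helper name.

```shell
# Sketch: append the Sqoop variables to a profile file, but only once.
add_sqoop_env() {
    profile=$1      # assumed location, e.g. "$HOME/.bash_profile"
    sqoop_home=$2   # e.g. /home/hadoop/app/sqoop-1.4.6-cdh5.7.0
    # Skip the append if SQOOP_HOME is already exported in this file
    if grep -q '^export SQOOP_HOME=' "$profile" 2>/dev/null; then
        echo "SQOOP_HOME already set in $profile"
        return 0
    fi
    {
        echo "export SQOOP_HOME=$sqoop_home"
        # Single quotes keep $PATH and $SQOOP_HOME unexpanded in the file
        echo 'export PATH=$PATH:$SQOOP_HOME/bin'
    } >> "$profile"
}
```

Typical use would be add_sqoop_env "$HOME/.bash_profile" /home/hadoop/app/sqoop-1.4.6-cdh5.7.0, followed by source ~/.bash_profile so that the current shell picks up the change.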

3. Verify the installation

[hadoop@192 bin]$ sqoop version

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/02/11 07:55:56 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.0

Sqoop 1.4.6-cdh5.7.0

git commit id

Compiled by jenkins on Wed Mar 23 11:30:51 PDT 2016

[hadoop@192 bin]$ sqoop help

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/02/11 07:55:46 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.0

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

4. Copy the required jars from $HIVE_HOME/lib to $SQOOP_HOME/lib

[hadoop@192 lib]$ pwd

/home/hadoop/app/hive-1.1.0-cdh5.7.0/lib

[hadoop@192 lib]$ cp hive-common-1.1.0-cdh5.7.0.jar $SQOOP_HOME/lib

[hadoop@192 lib]$ cp hive-shims-* $SQOOP_HOME/lib

[hadoop@192 lib]$ cp mysql-connector-java-5.1.46-bin.jar $SQOOP_HOME/lib
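
A quick way to confirm that the three copies above landed in the right place is to look for the jars by pattern. This is a minimal sketch; check_sqoop_jars is a hypothetical helper, and the patterns are simply generalized from the file names in the cp commands.

```shell
# Sketch: report whether the jars copied above are present in a lib directory.
check_sqoop_jars() {
    lib_dir=$1
    missing=0
    for pattern in 'hive-common-*.jar' 'hive-shims-*.jar' 'mysql-connector-java-*.jar'; do
        # $pattern is left unquoted on purpose so the shell expands the glob;
        # ls exits non-zero when nothing matches
        if ls "$lib_dir"/$pattern >/dev/null 2>&1; then
            echo "ok: $pattern"
        else
            echo "missing: $pattern"
            missing=1
        fi
    done
    # Return 0 only when every pattern matched at least one file
    return "$missing"
}
```

For example, check_sqoop_jars "$SQOOP_HOME/lib" prints one line per pattern and returns a non-zero status if any jar is missing.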

5. Connect to MySQL and list the databases

[hadoop@192 bin]$ sqoop list-databases \

> --connect jdbc:mysql://localhost:3306 \

> --username root \

> --password 123456

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/02/11 08:05:12 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.0

19/02/11 08:05:12 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

19/02/11 08:05:12 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

alter_db

mysql

performance_schema

test
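
The WARN line in the output above notes that passing the password on the command line is insecure and suggests -P, which prompts for it interactively. For non-interactive use, Sqoop also accepts --password-file. The sketch below is one possible way to prepare such a file; write_password_file and list_databases_with_password_file are hypothetical helper names, and the file:// prefix assumes the file lives on the local filesystem rather than in HDFS.

```shell
# Sketch: store the password in a file that only its owner can read.
write_password_file() {
    pw_file=$1
    password=$2
    rm -f "$pw_file"
    # printf '%s' writes no trailing newline; Sqoop reads the whole file,
    # so a trailing newline would become part of the password
    ( umask 077 && printf '%s' "$password" > "$pw_file" )
    chmod 400 "$pw_file"
}

# Defined but not called here, because it needs a working Sqoop and MySQL.
list_databases_with_password_file() {
    # $1 = absolute path of the password file (assumed to be a local file)
    sqoop list-databases \
        --connect jdbc:mysql://localhost:3306 \
        --username root \
        --password-file "file://$1"
}
```

With this in place, the same listing as above could be requested without the password appearing in the shell history or the process list.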

6. Connect to a database and list its tables

[hadoop@192 bin]$ sqoop list-tables \

> --connect jdbc:mysql://localhost:3306/alter_db \

> --username root \

> --password 123456

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/app/sqoop-1.4.6-cdh5.7.0/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

19/02/11 08:09:41 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.0

19/02/11 08:09:41 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

19/02/11 08:09:42 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

bucketing_cols

cds

columns_v2

database_params

db_privs

dbs

func_ru

funcs

global_privs

idxs

index_params

part_col_privs

part_col_stats

part_privs

partition_key_vals

partition_keys

partition_params

partitions

roles

sd_params

sds

sequence_table

serde_params

serdes

skewed_col_names

skewed_col_value_loc_map

skewed_string_list

skewed_string_list_values

skewed_values

sort_cols

tab_col_stats

table_params

tbl_col_privs

tbl_privs

tbls

version

Importing and exporting data with Sqoop

1. Import data from MySQL into HDFS with Sqoop

sqoop import \

--connect jdbc:mysql://localhost:3306/alter_db \

--username root \

--password 123456 \

--table idxs \

--num-mappers 1 \

--target-dir /datas/sqoop/input \

--delete-target-dir
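
Once the import has finished, the result can be inspected in HDFS. Because a Sqoop import runs map tasks only, a job with --num-mappers 1 writes a single part-m-00000 file into the target directory. A minimal sketch, assuming the hdfs command is on PATH; run and inspect_import are hypothetical helpers, and DRY_RUN=1 only prints the commands.

```shell
# run: execute a command, or just print it when DRY_RUN=1
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# inspect_import: list the target directory and show the imported rows
inspect_import() {
    target_dir=$1
    run hdfs dfs -ls "$target_dir"
    # With one mapper there is exactly one output file, part-m-00000
    run hdfs dfs -cat "$target_dir/part-m-00000"
}
```

For the import above this would be called as inspect_import /datas/sqoop/input. With more than one mapper there would be several part-m-* files to read instead of one.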

2. Import data from MySQL into Hive with Sqoop

sqoop import \

--connect jdbc:mysql://127.0.0.1:3306/test \

--username root \

--password 123456 \

--table idxs \

--num-mappers 1 \

--fields-terminated-by ',' \

--delete-target-dir \

--hive-database test \

--hive-import \

--hive-table idxs

3. Export data from Hive to MySQL with Sqoop

sqoop export \

--connect jdbc:mysql://localhost:3306/alter_db \

--username root \

--password 123456 \

--table idxs \

--num-mappers 1 \

--fields-terminated-by ',' \

--export-dir /user/hive/warehouse/test.db/idxs
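
sqoop export writes into an existing table, so the target table has to exist in MySQL before the job runs, and the field delimiter has to match the one used in the Hive files. One way to check the result afterwards is the eval tool listed in the help output earlier. A minimal sketch; verify_export is a hypothetical helper, and DRY_RUN=1 only prints the command.

```shell
# Sketch: count the rows in the export target with sqoop eval.
verify_export() {
    jdbc_url=$1
    user=$2
    table=$3
    # Build the command as positional parameters so each argument stays intact;
    # -P prompts for the password instead of passing it on the command line
    set -- sqoop eval --connect "$jdbc_url" --username "$user" -P \
        --query "SELECT COUNT(*) FROM $table"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

For the export above, the call would be verify_export jdbc:mysql://localhost:3306/alter_db root idxs, and the count can then be compared with the number of rows in the Hive table.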