Deep Reinforcement Learning Series (9): Dueling DQN (DDQN), Principles and Implementation

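This post covers a DeepMind paper published at ICML 2016, where it received the Best Paper award. The first author is Ziyu Wang; the fourth author, Hado van Hasselt, is the author of Double Q-learning and Double DQN, both covered in earlier posts of this series. It is fair to say that DeepMind pioneered the DQN family of algorithms (later posts will cover OpenAI's policy gradient algorithms). As usual, we start with the abstract and its conclusions.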

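The algorithm proposed in the paper involves little mathematical derivation; its contribution is an innovation in network architecture. As the abstract describes, the network is split into two parts: a value function and an advantage function (explained in detail below).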

The results show that the method reached state-of-the-art performance on Atari games at the time of publication.

1. Problem Statement

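Earlier posts in this series covered Q-learning, Double Q-learning (which addresses overestimation of the value function), DQN (which addresses large state and action spaces), Double DQN (which addresses overestimation of the value function), and PER-DQN (which addresses how to sample from experience replay). This paper starts from the network architecture instead and improves on existing algorithms, including DQN, Double DQN, and PER.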

2. Algorithm Principles and Procedure

The first section of the paper directly presents the proposed "dueling architecture", as shown in the figure:

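In the figure, the output of the original DQN network is split into two parts, a value function and an advantage function, which together produce Q. Mathematically: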

$$Q(s, a; \theta, \alpha, \beta) = V(s; \theta, \beta) + A(s, a; \theta, \alpha)$$

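Here $\theta$ denotes the parameters of the shared convolutional layers, while $\alpha$ and $\beta$ are the parameters of the two fully connected streams (advantage and value, respectively). As the figure and the formula show, $V$ depends only on the state, whereas $A$ depends on both the state and the action.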
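As a rough illustration of the formula above, the NumPy sketch below computes Q from two streams that share one feature vector. The layer sizes, the random weights, and the helper name `dueling_head_naive` are assumptions made for illustration only; they are not taken from the paper.

```python
import numpy as np


def dueling_head_naive(features, w_v, b_v, w_a, b_a):
    """Naive aggregation Q = V + A, with no correction term."""
    v = features @ w_v + b_v  # shape (batch, 1): one value per state
    a = features @ w_a + b_a  # shape (batch, n_actions): one advantage per action
    return v + a, v, a        # v broadcasts across the action axis


rng = np.random.default_rng(0)
batch, n_hidden, n_actions = 4, 20, 25  # illustrative sizes only
features = rng.normal(size=(batch, n_hidden))
w_v, b_v = rng.normal(size=(n_hidden, 1)), np.zeros((1, 1))
w_a, b_a = rng.normal(size=(n_hidden, n_actions)), np.zeros((1, n_actions))

q, v, a = dueling_head_naive(features, w_v, b_v, w_a, b_a)
print(q.shape)  # (4, 25)
```

The value stream outputs a single column, so adding it to the advantage matrix relies on broadcasting: every action in a given state receives the same $V(s)$.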

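If this formula is used as is, it suffers from an "unidentifiable" problem: adding a constant to V and subtracting the same constant from A yields the same Q, so V and A cannot be uniquely recovered from Q. To solve this, the authors force the advantage function estimator to have zero advantage at the chosen action. In other words, the last module of the network implements the following forward mapping: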

$$Q(s, a; \theta, \alpha, \beta) = V(s; \theta, \beta) + \left( A(s, a; \theta, \alpha) - \max_{a' \in \mathcal{A}} A(s, a'; \theta, \alpha) \right)$$

How should this be understood? Consider the greedy action $a^{*}$, defined as

$$a^{*} = \arg\max_{a' \in \mathcal{A}} Q(s, a'; \theta, \alpha, \beta) = \arg\max_{a' \in \mathcal{A}} A(s, a'; \theta, \alpha)$$

Then we obtain $Q(s, a^{*}; \theta, \alpha, \beta) = V(s; \theta, \beta)$. Hence the stream $V(s; \theta, \beta)$ provides an estimate of the value function, while the other stream produces an estimate of the advantage function. The authors then replace the max operator with the average over actions (the factor $\frac{1}{|\mathcal{A}|}$), which gives:

$$Q(s, a; \theta, \alpha, \beta) = V(s; \theta, \beta) + \left( A(s, a; \theta, \alpha) - \frac{1}{|\mathcal{A}|} \sum_{a'} A(s, a'; \theta, \alpha) \right)$$

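With the average, V and A no longer carry their exact original semantics as the value function and the advantage function (they are off-target by a constant), but optimization becomes more stable. Subtracting the mean also leaves the relative ranking of the A values, and therefore of the Q values, unchanged, so the essential meaning of V and A and the resulting greedy policy are preserved.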
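The properties discussed in this section can be checked numerically. The sketch below is a minimal NumPy illustration with random values standing in for the network outputs; the function names `aggregate_max` and `aggregate_mean` and the constant `c` are hypothetical, chosen only for this example.

```python
import numpy as np


def aggregate_max(v, a):
    """Q = V + (A - max_a' A): the greedy action gets zero advantage."""
    return v + (a - a.max(axis=1, keepdims=True))


def aggregate_mean(v, a):
    """Q = V + (A - mean_a' A): the advantages are centered at zero."""
    return v + (a - a.mean(axis=1, keepdims=True))


rng = np.random.default_rng(0)
v = rng.normal(size=(3, 1))  # stand-in for the value stream output
a = rng.normal(size=(3, 5))  # stand-in for the advantage stream output
c = 7.0                      # arbitrary constant

# Naive sum: (V + c, A - c) produces the same Q, so V and A are not identifiable.
naive_same = np.allclose(v + a, (v + c) + (a - c))

q_max = aggregate_max(v, a)
q_mean = aggregate_mean(v, a)

# With the correction term, a constant added to A no longer reaches Q.
shift_invariant = np.allclose(q_mean, aggregate_mean(v, a + c))
# Max version: Q at the greedy action equals V.
max_pins_v = np.allclose(q_max.max(axis=1, keepdims=True), v)
# Mean version: the average of Q over actions equals V.
mean_pins_v = np.allclose(q_mean.mean(axis=1, keepdims=True), v)
# Both versions select the same greedy action.
same_greedy = np.array_equal(q_max.argmax(axis=1), q_mean.argmax(axis=1))

print(naive_same, shift_invariant, max_pins_v, mean_pins_v, same_greedy)
```

In each state the two aggregators differ only by a constant across actions, which is why the greedy action is the same under both.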

3. Experimental Setup

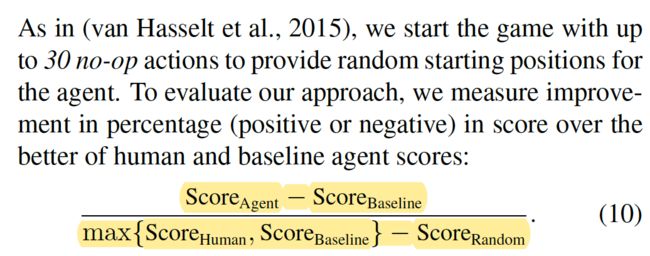

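To keep the experiments comparable, the authors use the same network architecture as DQN, PER, and related work, and on top of that adopt the general evaluation protocol used by van Hasselt et al.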

4. Results

5. Code Reproduction

This section uses Morvan Zhou's code and runs the experiment in the Pendulum-v0 environment.

```python
"""
Tensorflow: 1.0
gym: 0.8.0
"""
import gym
# from RL_brain import DuelingDQN
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf

env = gym.make('Pendulum-v0')
env = env.unwrapped
env.seed(1)
MEMORY_SIZE = 3000   # replay buffer capacity
ACTION_SPACE = 25    # number of discrete actions

np.random.seed(1)
tf.set_random_seed(1)
```

```python
class DuelingDQN:
    def __init__(
            self,
            n_actions,
            n_features,
            learning_rate=0.001,
            reward_decay=0.9,
            e_greedy=0.9,
            replace_target_iter=200,
            memory_size=500,
            batch_size=32,
            e_greedy_increment=None,
            output_graph=False,
            dueling=True,
            sess=None,
    ):
        self.n_actions = n_actions
        self.n_features = n_features
        self.lr = learning_rate
        self.gamma = reward_decay
        self.epsilon_max = e_greedy
        self.replace_target_iter = replace_target_iter
        self.memory_size = memory_size
        self.batch_size = batch_size
        self.epsilon_increment = e_greedy_increment
        # epsilon is the probability of acting greedily; it starts at 0 when annealed
        self.epsilon = 0 if e_greedy_increment is not None else self.epsilon_max

        self.dueling = dueling      # decide to use dueling DQN or not

        self.learn_step_counter = 0
        # each row stores one transition: [s, a, r, s_]
        self.memory = np.zeros((self.memory_size, n_features*2+2))
        self._build_net()
        t_params = tf.get_collection('target_net_params')
        e_params = tf.get_collection('eval_net_params')
        # op that copies the eval network weights into the target network
        self.replace_target_op = [tf.assign(t, e) for t, e in zip(t_params, e_params)]

        if sess is None:
            self.sess = tf.Session()
            self.sess.run(tf.global_variables_initializer())
        else:
            self.sess = sess
        if output_graph:
            tf.summary.FileWriter("logs/", self.sess.graph)
        self.cost_his = []

    def _build_net(self):
        def build_layers(s, c_names, n_l1, w_initializer, b_initializer):
            with tf.variable_scope('l1'):
                w1 = tf.get_variable('w1', [self.n_features, n_l1], initializer=w_initializer, collections=c_names)
                b1 = tf.get_variable('b1', [1, n_l1], initializer=b_initializer, collections=c_names)
                l1 = tf.nn.relu(tf.matmul(s, w1) + b1)

            if self.dueling:
                # Dueling DQN
                with tf.variable_scope('Value'):
                    w2 = tf.get_variable('w2', [n_l1, 1], initializer=w_initializer, collections=c_names)
                    b2 = tf.get_variable('b2', [1, 1], initializer=b_initializer, collections=c_names)
                    self.V = tf.matmul(l1, w2) + b2

                with tf.variable_scope('Advantage'):
                    w2 = tf.get_variable('w2', [n_l1, self.n_actions], initializer=w_initializer, collections=c_names)
                    b2 = tf.get_variable('b2', [1, self.n_actions], initializer=b_initializer, collections=c_names)
                    self.A = tf.matmul(l1, w2) + b2

                with tf.variable_scope('Q'):
                    # Q = V(s) + (A(s,a) - mean over a' of A(s,a'))
                    out = self.V + (self.A - tf.reduce_mean(self.A, axis=1, keep_dims=True))
            else:
                with tf.variable_scope('Q'):
                    w2 = tf.get_variable('w2', [n_l1, self.n_actions], initializer=w_initializer, collections=c_names)
                    b2 = tf.get_variable('b2', [1, self.n_actions], initializer=b_initializer, collections=c_names)
                    out = tf.matmul(l1, w2) + b2

            return out

        # ------------------ build evaluate_net ------------------
        self.s = tf.placeholder(tf.float32, [None, self.n_features], name='s')  # input
        self.q_target = tf.placeholder(tf.float32, [None, self.n_actions], name='Q_target')  # for calculating loss
        with tf.variable_scope('eval_net'):
            c_names, n_l1, w_initializer, b_initializer = \
                ['eval_net_params', tf.GraphKeys.GLOBAL_VARIABLES], 20, \
                tf.random_normal_initializer(0., 0.3), tf.constant_initializer(0.1)  # config of layers

            self.q_eval = build_layers(self.s, c_names, n_l1, w_initializer, b_initializer)

        with tf.variable_scope('loss'):
            self.loss = tf.reduce_mean(tf.squared_difference(self.q_target, self.q_eval))
        with tf.variable_scope('train'):
            self._train_op = tf.train.RMSPropOptimizer(self.lr).minimize(self.loss)

        # ------------------ build target_net ------------------
        self.s_ = tf.placeholder(tf.float32, [None, self.n_features], name='s_')    # input
        with tf.variable_scope('target_net'):
            c_names = ['target_net_params', tf.GraphKeys.GLOBAL_VARIABLES]

            self.q_next = build_layers(self.s_, c_names, n_l1, w_initializer, b_initializer)

    def store_transition(self, s, a, r, s_):
        if not hasattr(self, 'memory_counter'):
            self.memory_counter = 0
        transition = np.hstack((s, [a, r], s_))
        # overwrite the oldest entry once the buffer is full
        index = self.memory_counter % self.memory_size
        self.memory[index, :] = transition
        self.memory_counter += 1

    def choose_action(self, observation):
        observation = observation[np.newaxis, :]
        if np.random.uniform() < self.epsilon:  # choosing action
            actions_value = self.sess.run(self.q_eval, feed_dict={self.s: observation})
            action = np.argmax(actions_value)
        else:
            action = np.random.randint(0, self.n_actions)
        return action

    def learn(self):
        # periodically sync the target network with the eval network
        if self.learn_step_counter % self.replace_target_iter == 0:
            self.sess.run(self.replace_target_op)
            print('\ntarget_params_replaced\n')

        sample_index = np.random.choice(self.memory_size, size=self.batch_size)
        batch_memory = self.memory[sample_index, :]

        q_next = self.sess.run(self.q_next, feed_dict={self.s_: batch_memory[:, -self.n_features:]})  # next observation
        q_eval = self.sess.run(self.q_eval, {self.s: batch_memory[:, :self.n_features]})

        # only the entry of the action actually taken receives a TD target
        q_target = q_eval.copy()

        batch_index = np.arange(self.batch_size, dtype=np.int32)
        eval_act_index = batch_memory[:, self.n_features].astype(int)
        reward = batch_memory[:, self.n_features + 1]

        q_target[batch_index, eval_act_index] = reward + self.gamma * np.max(q_next, axis=1)

        _, self.cost = self.sess.run([self._train_op, self.loss],
                                     feed_dict={self.s: batch_memory[:, :self.n_features],
                                                self.q_target: q_target})
        self.cost_his.append(self.cost)

        self.epsilon = self.epsilon + self.epsilon_increment if self.epsilon < self.epsilon_max else self.epsilon_max
        self.learn_step_counter += 1
```

```python
sess = tf.Session()
with tf.variable_scope('natural'):
    natural_DQN = DuelingDQN(
        n_actions=ACTION_SPACE, n_features=3, memory_size=MEMORY_SIZE,
        e_greedy_increment=0.001, sess=sess, dueling=False)

with tf.variable_scope('dueling'):
    dueling_DQN = DuelingDQN(
        n_actions=ACTION_SPACE, n_features=3, memory_size=MEMORY_SIZE,
        e_greedy_increment=0.001, sess=sess, dueling=True, output_graph=True)

sess.run(tf.global_variables_initializer())


def train(RL):
    acc_r = [0]
    total_steps = 0
    observation = env.reset()
    while True:
        # if total_steps-MEMORY_SIZE > 9000: env.render()

        action = RL.choose_action(observation)

        f_action = (action-(ACTION_SPACE-1)/2)/((ACTION_SPACE-1)/4)   # [-2 ~ 2] float actions
        observation_, reward, done, info = env.step(np.array([f_action]))

        reward /= 10      # scale the reward down by 10, roughly into (-1.6, 0]
        acc_r.append(reward + acc_r[-1])  # accumulated reward

        RL.store_transition(observation, action, reward, observation_)

        # start learning only after the replay buffer has been filled
        if total_steps > MEMORY_SIZE:
            RL.learn()

        if total_steps-MEMORY_SIZE > 15000:
            break

        observation = observation_
        total_steps += 1
    return RL.cost_his, acc_r


c_natural, r_natural = train(natural_DQN)
c_dueling, r_dueling = train(dueling_DQN)

plt.figure(1)
plt.plot(np.array(c_natural), c='r', label='natural')
plt.plot(np.array(c_dueling), c='b', label='dueling')
plt.legend(loc='best')
plt.ylabel('cost')
plt.xlabel('training steps')
plt.grid()

plt.figure(2)
plt.plot(np.array(r_natural), c='r', label='natural')
plt.plot(np.array(r_dueling), c='b', label='dueling')
plt.legend(loc='best')
plt.ylabel('accumulated reward')
plt.xlabel('training steps')
plt.grid()

plt.show()
```
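Two steps of the script above are easy to misread: the mapping from a discrete action index to a continuous torque, and the construction of `q_target` in `learn`. The sketch below isolates both in plain NumPy so they can be checked without TensorFlow or gym. The helper names `to_torque` and `build_q_target`, the `max_torque` parameter, and the toy arrays are assumptions made for illustration; they are not part of the original code.

```python
import numpy as np

ACTION_SPACE = 25  # number of discrete actions, as in the script


def to_torque(action_index, n_actions=ACTION_SPACE, max_torque=2.0):
    """Map index 0..n_actions-1 to evenly spaced torques in [-max_torque, max_torque]."""
    half = (n_actions - 1) / 2
    return (action_index - half) / (half / max_torque)


def build_q_target(q_eval, q_next, actions, rewards, gamma=0.9):
    """Copy q_eval and overwrite only the taken action's entry with r + gamma * max Q_next."""
    q_target = q_eval.copy()
    batch_index = np.arange(q_eval.shape[0])
    q_target[batch_index, actions] = rewards + gamma * q_next.max(axis=1)
    return q_target


torques = [to_torque(i) for i in range(ACTION_SPACE)]
print(torques[0], torques[12], torques[-1])  # -2.0 0.0 2.0

# Toy batch of two transitions with three actions each (illustrative numbers).
q_eval = np.array([[1.0, 2.0, 3.0],
                   [0.5, 0.0, -0.5]])
q_next = np.array([[0.0, 1.0, 2.0],
                   [4.0, 3.0, 2.0]])
actions = np.array([0, 2])
rewards = np.array([-1.0, -0.5])

q_target = build_q_target(q_eval, q_next, actions, rewards)
td_error = q_target - q_eval  # nonzero only at the actions that were taken
print(td_error)
```

Because `q_target` starts as a copy of `q_eval`, the squared-error loss is zero for every action that was not taken, so only the chosen action's Q value is pushed toward its TD target. Note that the sketch, like the script, does not mask terminal states.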

References:

- https://arxiv.org/pdf/1511.06581.pdf

- https://github.com/MorvanZhou