Python Web Scraping for Beginners: 3 Ways to Scrape a Classical Chinese Poetry Site

Goal:

Scrape the classical poems on gushiwen.org (古诗文网) and extract the details of each one. Target page: https://www.gushiwen.org/default.aspx?page=1

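Method 1: BeautifulSoup. Inspecting the page shows that the poems sit in the second div with class "left", each one in its own div with class "sons":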

import json

import requests
from bs4 import BeautifulSoup

poems = []  # empty list that will hold one dict per poem

url = 'https://www.gushiwen.org/default.aspx?page=1'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:77.0) Gecko/20100101 Firefox/77.0'}

text = requests.get(url, headers=headers).text
soup = BeautifulSoup(text, 'lxml')

# the second div with class "left" contains the poem entries
div = soup.find_all('div', {'class': 'left'})[1]
sons = div.find_all('div', class_="sons")

for son in sons:
    dic = {}
    title = son.find('b').text
    dynasty = son.find_all('a')[1].text
    author = son.find_all('a')[2].text
    content = son.find('div', class_="contson").text.strip()
    dic['标题'] = title
    dic['朝代'] = dynasty
    dic['作者'] = author
    dic['内容'] = content
    poems.append(dic)

print(poems)  # print the result

with open('gushi.json', 'w', encoding='utf-8') as f:  # open a JSON file for writing
    json.dump(poems, f, indent=4, ensure_ascii=False)  # write the list to the JSON file

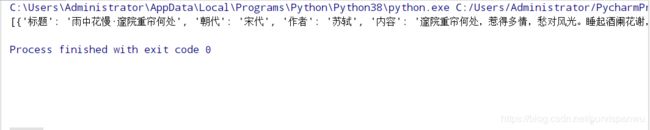

Screenshot of the printed output:

JSON file:

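To make the selector logic of Method 1 easier to follow without hitting the network, here is a minimal offline sketch. The HTML snippet is a hypothetical, simplified stand-in for the page (the real markup may differ), and `parse_poems` is an illustrative helper name that does not appear in the original script. It uses Python's built-in `html.parser` so that `bs4` is the only third-party dependency.

```python
from bs4 import BeautifulSoup

# Hypothetical, simplified markup standing in for one page of the site.
SAMPLE_HTML = """
<div class="left">navigation</div>
<div class="left">
  <div class="sons">
    <p><a href="/shi1"><b>静夜思</b></a></p>
    <p class="source"><a href="/tang">唐代</a><a href="/libai">李白</a></p>
    <div class="contson">床前明月光,<br/>疑是地上霜。</div>
  </div>
</div>
"""


def parse_poems(html):
    """Return one dict per poem found in the second div.left (illustrative helper)."""
    soup = BeautifulSoup(html, "html.parser")
    # Index 1 picks the second div with class "left", as in Method 1.
    container = soup.find_all("div", {"class": "left"})[1]
    poems = []
    for son in container.find_all("div", class_="sons"):
        links = son.find_all("a")  # [0] title link, [1] dynasty, [2] author
        poems.append({
            "标题": son.find("b").text,
            "朝代": links[1].text,
            "作者": links[2].text,
            "内容": son.find("div", class_="contson").text.strip(),
        })
    return poems


poems = parse_poems(SAMPLE_HTML)
print(poems)
```

Calling `find_all('a')` once per poem and indexing into the result avoids searching the same subtree twice, which is the only structural difference from the script above.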

Method 2: XPath parsing (we only scrape the first page)


This time I'll just attach the code:

import json

import requests
from lxml import etree

poems = []  # one dict per poem

url = 'https://www.gushiwen.org/default.aspx?page=1'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:77.0) Gecko/20100101 Firefox/77.0'}

text = requests.get(url, headers=headers).text
html = etree.HTML(text, etree.HTMLParser())

# the second div with class "left" contains the poem entries
div = html.xpath('//div[@class="left"]')[1]
sons = div.xpath('.//div[@class="sons"]')

for son in sons:
    dic = {}
    title = son.xpath('.//b/text()')[0]
    dynasty = son.xpath('.//a[1]/text()')[0]
    auth = son.xpath('.//a[2]/text()')[0]
    # note the // before text(): it also collects text from nested tags
    content = "".join(son.xpath('.//div[@class="contson"]//text()')).strip()
    dic['标题'] = title
    dic['朝代'] = dynasty
    dic['作者'] = auth
    dic['内容'] = content
    poems.append(dic)

print(poems)

with open('gushi.json', 'w', encoding='utf-8') as f:  # open a JSON file for writing
    json.dump(poems, f, indent=4, ensure_ascii=False)  # write the list to the JSON file
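The comment about putting `//` before `text()` is easier to see on a small example. The sketch below compares `/text()` with `//text()` on a hypothetical fragment in which the poem text is wrapped in nested `<p>` tags; the fragment is an assumption made for illustration, not a copy of the real page.

```python
from lxml import etree

# Hypothetical fragment: the poem text sits inside nested <p> tags.
SAMPLE_HTML = '<div class="contson">\n<p>床前明月光,</p><p>疑是地上霜。</p>\n</div>'

root = etree.HTML(SAMPLE_HTML)

# /text() returns only the text nodes that are direct children of the div.
direct = root.xpath('//div[@class="contson"]/text()')
# //text() returns the text nodes at any depth below the div.
nested = root.xpath('//div[@class="contson"]//text()')

direct_text = "".join(direct).strip()
nested_text = "".join(nested).strip()

print(repr(direct_text))
print(repr(nested_text))
```

With this fragment, `direct_text` ends up empty because the div's own text nodes are only whitespace, while `nested_text` contains both lines of the poem. That is why the content field is built with `//text()` and `"".join(...)`.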

Method 3: regular expressions

Code:

import csv
import re

import requests

list1 = []  # cleaned poem texts

url = 'https://www.gushiwen.org/default.aspx?page=1'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:77.0) Gecko/20100101 Firefox/77.0'}

text = requests.get(url, headers=headers).text

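# Each pattern captures one field with a non-greedy group; re.DOTALL lets . match newlines.
# The literal HTML tags that delimit each capture group are missing from the patterns as given here.
title = re.findall(r'(.*?)', text, re.DOTALL)
dynasty = re.findall(r'(.*?)', text, re.DOTALL)
auth = re.findall(r'(.*?)', text, re.DOTALL)
contents = re.findall(r'.*?(.*?)', text, re.DOTALL)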

for content in contents:
    # Strip leftover markup from each poem. This is a bit clumsy; there is probably a better way.
    # The tag strings originally passed to replace() are missing here.
    list1.append(content.strip().replace('', '').replace('', '').replace('', ''))

results = list(zip(title, dynasty, auth, list1))
head = ['标题', '朝代', '作者', '内容']

with open('gushi.csv', 'w', encoding='utf-8', newline='') as f:
    writer = csv.writer(f)
    writer.writerow(head)
    writer.writerows(results)
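As a rough illustration of the regex approach, here is a minimal offline sketch. The sample markup and every tag or class name inside the patterns are assumptions chosen for this example; they are not the patterns used in the script above. The sketch also swaps the chained `replace()` calls for a single `re.sub`, which may be one candidate for the "better way" of cleaning the content.

```python
import re

# Hypothetical, simplified markup; the tag and class names are assumptions.
SAMPLE_HTML = """
<div class="sons">
<p><a href="/shi1"><b>静夜思</b></a></p>
<p class="source"><a href="/tang">唐代</a><a href="/libai">李白</a></p>
<div class="contson"><p>床前明月光,<br />疑是地上霜。</p></div>
</div>
"""

# Non-greedy groups capture the text between the assumed delimiting tags.
titles = re.findall(r'<b>(.*?)</b>', SAMPLE_HTML, re.DOTALL)
sources = re.findall(
    r'<p class="source"><a.*?>(.*?)</a><a.*?>(.*?)</a></p>',
    SAMPLE_HTML,
    re.DOTALL,
)
raw_contents = re.findall(r'<div class="contson">(.*?)</div>', SAMPLE_HTML, re.DOTALL)

# A single substitution removes every remaining tag from the captured content.
contents = [re.sub(r'<[^>]+>', '', raw).strip() for raw in raw_contents]

# Combine the fields into (title, dynasty, author, content) rows.
rows = [(t, d, a, c) for t, (d, a), c in zip(titles, sources, contents)]
print(rows)
```

The resulting `rows` list has the same shape as `results` above, so it could be passed to `writer.writerows` unchanged.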