Maximizing Loan Profit: Classification with Random Forest and Logistic Regression

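1. Loading the data

We have a loan dataset containing loan-related information. The main task is to classify each borrower's loan status (lend in full vs. do not lend) so as to maximize the profit from lending.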

import pandas as pd

loans_2007 = pd.read_csv('LoanStats3a.csv', skiprows=1)

half_count = len(loans_2007) // 2

# Drop columns in which more than half of the values are missing

loans_2007 = loans_2007.dropna(thresh=half_count, axis=1)

# Drop fields that are irrelevant to the analysis

loans_2007 = loans_2007.drop(['desc', 'url'],axis=1)

# Save the cleaned dataset to a new CSV file

loans_2007.to_csv('loans_2007.csv', index=False)
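
To make the `thresh` argument concrete, here is a minimal sketch on a toy frame. The column names and values are hypothetical and are not taken from `LoanStats3a.csv`; the point is only that `dropna(thresh=k, axis=1)` keeps a column when it has at least `k` non-null values.

```python
import numpy as np
import pandas as pd

# Toy frame (hypothetical data): column "b" is mostly missing.
toy = pd.DataFrame({
    "a": [1.0, 2.0, 3.0, 4.0],
    "b": [np.nan, np.nan, np.nan, 1.0],
    "c": [1.0, np.nan, 3.0, 4.0],
})

# Keep only columns that have at least half of their values present.
min_non_null = len(toy) // 2
kept = toy.dropna(thresh=min_non_null, axis=1)

print(list(kept.columns))
```

Column "b" has a single non-null value, which is below the threshold of 2, so it is the only column removed.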

import pandas as pd

loans_2007 = pd.read_csv("loans_2007.csv")

print(loans_2007.iloc[0])

print(loans_2007.shape[1])

The output shows 52 fields in total; some of them are irrelevant and should be dropped.

2. Data cleaning and analysis

loans_2007 = loans_2007.drop(["id", "member_id", "funded_amnt", "funded_amnt_inv", "grade", "sub_grade", "emp_title", "issue_d"], axis=1)

loans_2007 = loans_2007.drop(["zip_code", "out_prncp", "out_prncp_inv", "total_pymnt", "total_pymnt_inv", "total_rec_prncp"], axis=1)

loans_2007 = loans_2007.drop(["total_rec_int", "total_rec_late_fee", "recoveries", "collection_recovery_fee", "last_pymnt_d", "last_pymnt_amnt"], axis=1)

print(loans_2007.iloc[0])

print(loans_2007.shape[1])

After dropping the irrelevant fields, 32 fields remain.

# Count the samples for each loan status (analysis of the label column)

print(loans_2007['loan_status'].value_counts())

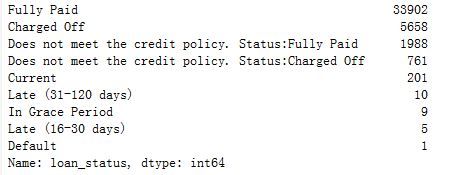

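The counts show 9 loan statuses in total. We analyze only two of them: "Fully Paid" (the loan was repaid in full, so we treat it as a loan worth granting) and "Charged Off" (the loan was written off as a loss, so we treat it as a loan to refuse).

# Keep only the two labels to classify and encode them: "Fully Paid" as 1, "Charged Off" as 0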

loans_2007 = loans_2007[(loans_2007['loan_status'] == "Fully Paid") | (loans_2007['loan_status'] == "Charged Off")]

status_replace = {

"loan_status" : {

"Fully Paid": 1,

"Charged Off": 0,

}

}

loans_2007 = loans_2007.replace(status_replace)
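
The filter-then-encode step above can also be written with `isin` and `map`. This is a sketch on hypothetical rows, not the author's code; it is meant to show the same idea in a form that touches only the label column.

```python
import pandas as pd

# Hypothetical rows; only the loan_status values mirror the real dataset.
toy = pd.DataFrame({
    "loan_amnt": [5000, 2500, 2400, 10000],
    "loan_status": ["Fully Paid", "Charged Off", "Current", "Fully Paid"],
})

label_map = {"Fully Paid": 1, "Charged Off": 0}

# Keep only rows whose status is one of the two labels, then encode them.
binary = toy[toy["loan_status"].isin(list(label_map))].copy()
binary["loan_status"] = binary["loan_status"].map(label_map)

print(binary["loan_status"].value_counts())
```

Rows with any other status (here "Current") are discarded before encoding, so no unmapped value remains in the label column.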

Some fields hold only a single distinct value. They carry no information for classification and should be removed.

orig_columns = loans_2007.columns

drop_columns = []

for col in orig_columns:

    col_series = loans_2007[col].dropna().unique()

    # If every non-null value in the field is the same, mark the field for removal

    if len(col_series) == 1:

        drop_columns.append(col)

loans_2007 = loans_2007.drop(drop_columns, axis=1)

print(drop_columns)

print(loans_2007.shape)

# Save the cleaned data to a new file

loans_2007.to_csv('filtered_loans_2007.csv', index=False)
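
The loop above can be condensed with `Series.nunique()`, which ignores missing values by default and so matches `dropna().unique()`. A minimal sketch on hypothetical data:

```python
import numpy as np
import pandas as pd

# Hypothetical frame: two columns carry a single distinct non-null value.
toy = pd.DataFrame({
    "policy_code": [1, 1, 1],
    "pymnt_plan": ["n", "n", np.nan],
    "loan_amnt": [5000, 2500, 2400],
})

# Collect the columns with exactly one distinct non-null value and drop them.
constant_cols = [c for c in toy.columns if toy[c].nunique() == 1]
reduced = toy.drop(columns=constant_cols)

print(constant_cols)
print(reduced.shape)
```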

import pandas as pd

loans = pd.read_csv('filtered_loans_2007.csv')

loans = loans.drop("pub_rec_bankruptcies", axis=1)

# Drop rows that contain missing values

loans = loans.dropna(axis=0)

# Count the number of fields of each data type

print(loans.dtypes.value_counts())

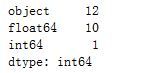

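As the output above shows, 12 columns have the object (string) data type and need to be converted to numeric values.

# Show the fields whose data type is object (string)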

object_columns_df = loans.select_dtypes(include=["object"])

print(object_columns_df.iloc[0])

# Count the occurrences of each value in the string fields

cols = ['home_ownership', 'verification_status', 'emp_length', 'term', 'addr_state']

for c in cols:

    print(loans[c].value_counts())

print(loans["purpose"].value_counts())

print(loans["title"].value_counts())

Further data cleaning

# Map the "emp_length" field from strings to numeric values

mapping_dict = {

"emp_length": {

"10+ years": 10,

"9 years": 9,

"8 years": 8,

"7 years": 7,

"6 years": 6,

"5 years": 5,

"4 years": 4,

"3 years": 3,

"2 years": 2,

"1 year": 1,

"< 1 year": 0,

"n/a": 0

}

}

loans = loans.drop(["last_credit_pull_d", "earliest_cr_line", "addr_state", "title"], axis=1)

# Convert percentage strings to floats

loans["int_rate"] = loans["int_rate"].str.rstrip("%").astype("float")

loans["revol_util"] = loans["revol_util"].str.rstrip("%").astype("float")

loans = loans.replace(mapping_dict)

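# Note: if emp_length is already numeric here (after the mapping above), get_dummies passes it through

# unchanged and the drop below then removes it; leave it out of cat_columns to keep it as a feature.

cat_columns = ["home_ownership", "verification_status", "emp_length", "purpose", "term"]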

dummy_df = pd.get_dummies(loans[cat_columns])

loans = pd.concat([loans, dummy_df], axis=1)

loans = loans.drop(cat_columns, axis=1)

loans = loans.drop("pymnt_plan", axis=1)

loans.to_csv('cleaned_loans2007.csv', index=False)
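
The two conversions used above, stripping the percent sign and one-hot encoding categorical columns, can be checked in isolation. The sketch below uses hypothetical values and only two columns, so it is an illustration rather than a reproduction of the cleaning step.

```python
import pandas as pd

# Hypothetical values in the same textual format as the raw fields.
toy = pd.DataFrame({
    "int_rate": ["10.65%", "15.27%", "7.90%"],
    "term": ["36 months", "60 months", "36 months"],
})

# Percentage strings become floats once the trailing "%" is removed.
toy["int_rate"] = toy["int_rate"].str.rstrip("%").astype(float)

# One-hot encode the categorical column and swap it for its dummy columns.
dummies = pd.get_dummies(toy[["term"]])
encoded = pd.concat([toy.drop(columns=["term"]), dummies], axis=1)

print(encoded.columns.tolist())
```

Each distinct category becomes its own indicator column named `<field>_<value>`, which is why the final dataset contains fields such as `home_ownership_MORTGAGE`.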

import pandas as pd

# Load the fully cleaned data

loans = pd.read_csv("cleaned_loans2007.csv")

print(loans.info())

The final dataset contains the following fields:

| Column | Non-null count and dtype |
|---|---|
| loan_amnt | 38428 non-null float64 |
| installment | 38428 non-null float64 |
| int_rate | 38428 non-null float64 |
| annual_inc | 38428 non-null float64 |
| loan_status | 38428 non-null float64 |
| dti | 38428 non-null float64 |
| delinq_2yrs | 38428 non-null float64 |
| inq_last_6mths | 38428 non-null float64 |
| open_acc | 38428 non-null float64 |
| pub_rec | 38428 non-null float64 |
| revol_bal | 38428 non-null float64 |
| revol_util | 38428 non-null float64 |
| total_acc | 38428 non-null float64 |
| home_ownership_MORTGAGE | 38428 non-null float64 |
| home_ownership_NONE | 38428 non-null float64 |
| ... | ... |

3. Building a logistic regression model to predict whether to lend

Here we build three models and compare their results.

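# Use a logistic regression model to classify the 0/1 label

from sklearn.linear_model import LogisticRegression

# sklearn.cross_validation was removed in scikit-learn 0.20; model_selection replaces it

from sklearn.model_selection import cross_val_predict, KFold

# Assumption: the original omits these definitions; features are all columns except the label

features = loans.drop("loan_status", axis=1)

target = loans["loan_status"]

lr = LogisticRegression()

# 3 unshuffled folds, matching the default of the old KFold(n) call

kf = KFold(n_splits=3)

predictions = cross_val_predict(lr, features, target, cv=kf)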

predictions = pd.Series(predictions)

# False positives: negatives predicted as positive

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

fp = len(predictions[fp_filter])

# True positives: positives predicted as positive

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives: positives predicted as negative

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives: negatives predicted as negative

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Metrics: TPR (higher is better) and FPR (lower is better)

tpr = tp / float((tp + fn))

fpr = fp / float((fp + tn))

print(tpr)

print(fpr)

print(predictions[:20])

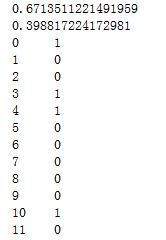

输出如下:

此时tpr和fpr比例均较高,不满足要求;进一步调整参数class_weight,使得正负样本比例得到均衡
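
To see why a high TPR alone says little, the sketch below recomputes both rates in plain Python. The helper name `tpr_fpr` and the label lists are hypothetical; the formulas are the same ones used in the code above, TPR = TP / (TP + FN) and FPR = FP / (FP + TN).

```python
def tpr_fpr(y_true, y_pred):
    """Return (TPR, FPR) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)


# Hypothetical labels: four repaid loans (1) and two charged-off loans (0).
y_true = [1, 1, 1, 1, 0, 0]

# A model that makes some mistakes in both directions.
mixed = tpr_fpr(y_true, [1, 1, 1, 0, 1, 0])

# A model that approves every loan: perfect TPR, but the worst possible FPR.
approve_all = tpr_fpr(y_true, [1, 1, 1, 1, 1, 1])

print(mixed)
print(approve_all)
```

A classifier that predicts 1 for everything reaches a TPR of 1.0 and an FPR of 1.0 at the same time, which is the failure mode an imbalanced dataset tends to push an unweighted model toward.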

# Train the model with balanced class weights and compute the same metrics

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import cross_val_predict, KFold

lr = LogisticRegression(class_weight="balanced")

kf = KFold(n_splits=3)

predictions = cross_val_predict(lr, features, target, cv=kf)

predictions = pd.Series(predictions)

# False positives: negatives predicted as positive

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

fp = len(predictions[fp_filter])

# True positives: positives predicted as positive

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives: positives predicted as negative

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives: negatives predicted as negative

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Metrics: TPR (higher is better) and FPR (lower is better)

tpr = tp / float((tp + fn))

fpr = fp / float((fp + tn))

print(tpr)

print(fpr)

print(predictions[:20])
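
For reference, scikit-learn documents the "balanced" mode as weighting each class by `n_samples / (n_classes * count_of_class)`. The sketch below applies that formula by hand; the helper name and the label counts are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weights from the documented formula n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


# Hypothetical labels: six repaid loans (1) and two charged-off loans (0).
labels = [1] * 6 + [0] * 2
weights = balanced_class_weights(labels)

print(weights)
```

The rarer class receives the larger weight, so each misclassified negative sample costs the model more during training than a misclassified positive one.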

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import cross_val_predict, KFold

# Negative samples are far fewer than positive ones, so we set the class weights by hand and penalize errors on negatives 5 times as heavily

penalty = {

    0: 5,

    1: 1

}

lr = LogisticRegression(class_weight=penalty)

kf = KFold(n_splits=3)

predictions = cross_val_predict(lr, features, target, cv=kf)

predictions = pd.Series(predictions)

# False positives: negatives predicted as positive

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

fp = len(predictions[fp_filter])

# True positives: positives predicted as positive

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives: positives predicted as negative

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives: negatives predicted as negative

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Metrics: TPR (higher is better) and FPR (lower is better)

tpr = tp / float((tp + fn))

fpr = fp / float((fp + tn))

print(tpr)

print(fpr)

print(predictions[:20])
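
The three experiments above repeat the same evaluation code, so it may help to see them side by side behind one helper. The sketch below is an illustration under stated assumptions: it runs on synthetic data from `make_classification` rather than on the loan data, the helper name `evaluate` is hypothetical, and shuffled folds and `max_iter=1000` are choices made here, not settings from the original experiments.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import KFold, cross_val_predict


def evaluate(model, X, y, n_splits=3):
    """Cross-validated (TPR, FPR) for a binary classifier with labels 0 and 1."""
    kf = KFold(n_splits=n_splits, shuffle=True, random_state=1)
    pred = cross_val_predict(model, X, y, cv=kf)
    tn, fp, fn, tp = confusion_matrix(y, pred, labels=[0, 1]).ravel()
    return tp / (tp + fn), fp / (fp + tn)


# Synthetic, imbalanced stand-in data: roughly 15% negatives, 85% positives.
X, y = make_classification(
    n_samples=600, n_features=8, weights=[0.15, 0.85], random_state=1
)

configs = {
    "default": LogisticRegression(max_iter=1000),
    "balanced": LogisticRegression(class_weight="balanced", max_iter=1000),
    "manual 5:1": LogisticRegression(class_weight={0: 5, 1: 1}, max_iter=1000),
}

results = {name: evaluate(model, X, y) for name, model in configs.items()}
for name, (tpr, fpr) in results.items():
    print(f"{name}: TPR={tpr:.3f} FPR={fpr:.3f}")
```

The numbers printed for synthetic data say nothing about the loan dataset itself; the helper is only a compact way to compare weighting schemes on equal footing.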

4. Building a random forest model

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_predict, KFold

rf = RandomForestClassifier(n_estimators=10, class_weight="balanced", random_state=1)

kf = KFold(n_splits=3)

predictions = cross_val_predict(rf, features, target, cv=kf)

predictions = pd.Series(predictions)

# False positives.

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

fp = len(predictions[fp_filter])

# True positives.

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives.

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Rates

tpr = tp / float((tp + fn))

fpr = fp / float((fp + tn))

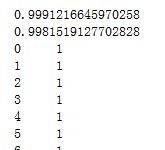

输出结果如下

在这里我们得到随机森林模型效果劣于逻辑回归模型的效果

The dataset is available at: https://pan.baidu.com/s/1CezGYZAR1Zs-8_WIo2-TrA

Extraction code: wmvq