Visualizing PyTorch models and training with matplotlib and TensorBoard

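When building and training deep learning models in PyTorch, it often helps to watch the process visually, for example by plotting the training curves.

For simple curves, matplotlib is enough to draw a basic figure. For richer visualization, PyTorch works with several tools, such as tensorwatch, visdom, and tensorboard. In my own testing, tensorboard turned out to be the most convenient, mature, and stable of these.

(Since version 1.2, PyTorch has officially supported standalone tensorboard, so installing tensorboardX is no longer necessary.)

This article trains a simple linear model to show how to use both visualization methods.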

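Installing dependencies

This walkthrough uses the CPU build of PyTorch 1.5. The matplotlib and tensorboard libraries must be installed separately.

    pip install matplotlib
    pip install tensorboard

Code

Importing modules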

    import torch
    from torch import nn
    import torch.utils.data as Data
    from torch.nn import init
    import numpy as np
    import matplotlib.pyplot as plt

Preparing the data

A simple linear model serves as the example:

    y = w1 * x1 + w2 * x2 + b

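    # real parameter
    true_w = [2, -3.4]
    true_b = 4.2

The model takes two input features and produces one output.

The feature matrix is built from random numbers, and random noise is added to the labels.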

    # prepare data
    input_num = 2    # input feature dim
    output_num = 1   # output result dim
    num_samples = 1000

    # features are uniform in [0, 1); labels follow the linear model above
    features = torch.rand(num_samples, input_num)
    labels = true_w[0] * features[:, 0] + true_w[1] * features[:, 1] + true_b
    # add random noise (cast to float32 so it matches the dtype of labels)
    labels += torch.tensor(np.random.normal(0, 0.01, size=labels.size()), dtype=torch.float32)

    # split into 800 training samples and 200 test samples
    batch_size = 10
    num_trains = 800
    num_tests = 200
    train_features = features[:num_trains, :]
    train_labels = labels[:num_trains]
    test_features = features[num_trains:, :]
    test_labels = labels[num_trains:]
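As a quick sanity check on data generated this way, an ordinary least-squares fit should recover values close to `true_w` and `true_b`, which is also what training is expected to converge to. The sketch below is an illustration only: it regenerates comparable data with NumPy rather than reusing the tensors above, and the fixed seed and the names `design`, `w_hat`, and `b_hat` are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, chosen only for this sketch
true_w = np.array([2.0, -3.4])
true_b = 4.2
num_samples = 1000

# Uniform features in [0, 1) and labels from the linear model plus small noise.
features = rng.random((num_samples, 2))
labels = features @ true_w + true_b
labels += rng.normal(0.0, 0.01, size=num_samples)

# Append a column of ones so the bias is estimated together with the weights.
design = np.hstack([features, np.ones((num_samples, 1))])
solution, *_ = np.linalg.lstsq(design, labels, rcond=None)
w_hat, b_hat = solution[:2], solution[2]
print("estimated w:", w_hat, "estimated b:", b_hat)
```

With noise this small, the estimates should sit very close to the true parameters, so a trained network that ends up far from them points to a problem in the training loop rather than in the data.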

Defining the model

Build a single linear layer from the input and output dimensions.

    # define model
    class LinearNet(nn.Module):
        def __init__(self, n_feature, n_output):
            super(LinearNet, self).__init__()
            self.linear = nn.Linear(n_feature, n_output)

        def forward(self, x):
            y = self.linear(x)
            return y

    net = LinearNet(input_num, output_num)

Defining the training parameters

    lr = 0.03
    loss = nn.MSELoss()
    optimizer = torch.optim.SGD(net.parameters(), lr=lr)
    num_epochs = 100

Visualizing with matplotlib

Save the intermediate results during training, then plot the loss curves with matplotlib.

    # define draw
    def plotCurve(x_vals, y_vals,
                  x_label, y_label,
                  x2_vals=None, y2_vals=None,
                  legend=None,
                  figsize=(3.5, 2.5)):
        # set figsize
        plt.figure(figsize=figsize)
        plt.xlabel(x_label)
        plt.ylabel(y_label)
        # log-scale y axis, so a rapidly shrinking loss stays readable
        plt.semilogy(x_vals, y_vals)
        if x2_vals is not None and y2_vals is not None:
            plt.semilogy(x2_vals, y2_vals, linestyle=':')
        if legend:
            plt.legend(legend)

    # train and visualize
    def train1(net, num_epochs, batch_size,
               train_features, train_labels,
               test_features, test_labels,
               loss, optimizer):
        print("=== train begin ===")
        # data process
        train_dataset = Data.TensorDataset(train_features, train_labels)
        test_dataset = Data.TensorDataset(test_features, test_labels)
        train_iter = Data.DataLoader(train_dataset, batch_size, shuffle=True)
        test_iter = Data.DataLoader(test_dataset, batch_size, shuffle=True)

        # train by step
        train_ls, test_ls = [], []
        for epoch in range(num_epochs):
            for x, y in train_iter:
                ls = loss(net(x).view(-1, 1), y.view(-1, 1))
                optimizer.zero_grad()
                ls.backward()
                optimizer.step()

            # save the loss on the full train and test sets after each epoch
            with torch.no_grad():
                train_ls.append(loss(net(train_features).view(-1, 1), train_labels.view(-1, 1)).item())
                test_ls.append(loss(net(test_features).view(-1, 1), test_labels.view(-1, 1)).item())

            if epoch % 10 == 0:
                print("epoch %d: train loss %f, test loss %f" % (epoch, train_ls[-1], test_ls[-1]))

        print("final epoch: train loss %f, test loss %f" % (train_ls[-1], test_ls[-1]))

        print("plot curves")
        plotCurve(range(1, num_epochs + 1), train_ls,
                  "epoch", "loss",
                  range(1, num_epochs + 1), test_ls,
                  ["train", "test"])

        print("=== train end ===")

    train1(net, num_epochs, batch_size, train_features, train_labels, test_features, test_labels, loss, optimizer)

The output is as follows:

    === train begin ===
    epoch 0: train loss 1.163743, test loss 1.123318
    epoch 10: train loss 0.002227, test loss 0.001833
    epoch 20: train loss 0.000107, test loss 0.000106
    epoch 30: train loss 0.000101, test loss 0.000106
    epoch 40: train loss 0.000100, test loss 0.000104
    epoch 50: train loss 0.000101, test loss 0.000104
    epoch 60: train loss 0.000100, test loss 0.000103
    epoch 70: train loss 0.000101, test loss 0.000102
    epoch 80: train loss 0.000100, test loss 0.000103
    epoch 90: train loss 0.000103, test loss 0.000103
    final epoch: train loss 0.000100, test loss 0.000102
    plot curves
    === train end ===

Inspect the trained parameters; they are close to true_w and true_b:

    print(net.linear.weight)
    print(net.linear.bias)

    Parameter containing:
    tensor([[ 1.9967, -3.3978]], requires_grad=True)
    Parameter containing:
    tensor([4.2014], requires_grad=True)

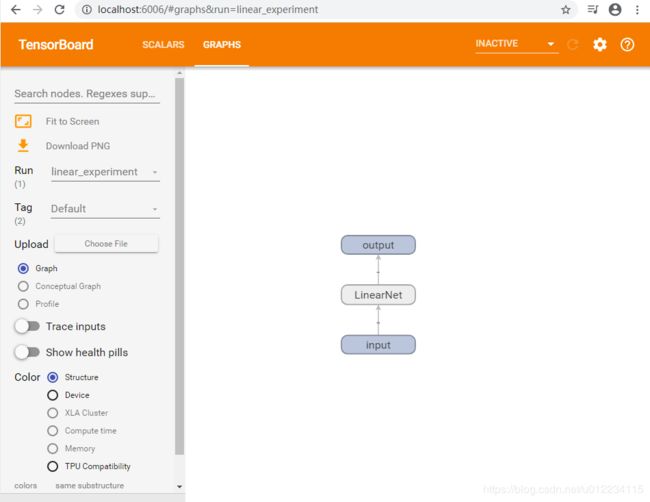
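When the training code runs as a plain script or on a machine without a display, the figure can be written to a file instead of shown inline. The following is a minimal sketch under that assumption: the loss values are made up for illustration, and the output file name `loss_curve.png` and the temporary directory are hypothetical choices, not part of the original code.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is required
import matplotlib.pyplot as plt

# Made-up loss values that shrink by 10x per epoch, for illustration only.
epochs = list(range(1, 6))
train_ls = [10.0 ** -k for k in range(5)]
test_ls = [1.2 * v for v in train_ls]

fig, ax = plt.subplots(figsize=(3.5, 2.5))
ax.semilogy(epochs, train_ls, label="train")               # log-scale y axis
ax.semilogy(epochs, test_ls, linestyle=":", label="test")  # dotted line for test
ax.set_xlabel("epoch")
ax.set_ylabel("loss")
ax.legend()

out_path = os.path.join(tempfile.mkdtemp(), "loss_curve.png")  # hypothetical file name
fig.savefig(out_path, bbox_inches="tight")
plt.close(fig)  # release the figure once it has been saved
print("saved to", out_path)
```

The logarithmic y axis is what keeps the later epochs visible: on a linear axis, a loss that drops from about 1 to about 0.0001 would look flat after the first few epochs.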

Visualizing with TensorBoard

Starting the TensorBoard web page

First import the module:

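    # train with tensorboard
    from torch.utils.tensorboard import SummaryWriter

Define a writer. The intermediate data it records is written to the given directory, where TensorBoard reads it for display.

    writer = SummaryWriter("runs/linear_experiment")  # defaults to a folder under runs/ if no path is specified

Now start the TensorBoard web server from the command line:

    tensorboard --logdir=runs

Then open http://localhost:6006/ to reach the TensorBoard home page.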
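To see what the writer actually leaves on disk, the sketch below logs a single scalar and lists the resulting event file. It is only an illustration: it writes to a throwaway temporary directory instead of `runs/`, and the tag `demo/value` is a made-up name.

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter

# Throwaway directory for this sketch; the article itself logs under runs/.
log_dir = os.path.join(tempfile.mkdtemp(), "linear_experiment")

writer = SummaryWriter(log_dir)
writer.add_scalar("demo/value", 1.0, 0)  # "demo/value" is a made-up tag
writer.close()  # flushes pending events to disk and closes the file

# TensorBoard reads event files like these from the --logdir tree.
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(event_files)
```

If the web page stays empty, checking that such a file exists under the directory passed to `--logdir` is a reasonable first step.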

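Visualizing the model

Given one input sample, TensorBoard can write the model graph to the log, so that the model structure can be viewed on the web page.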

    sample = train_features[0]
    writer.add_graph(net, sample)

Refresh the page to see the graph:

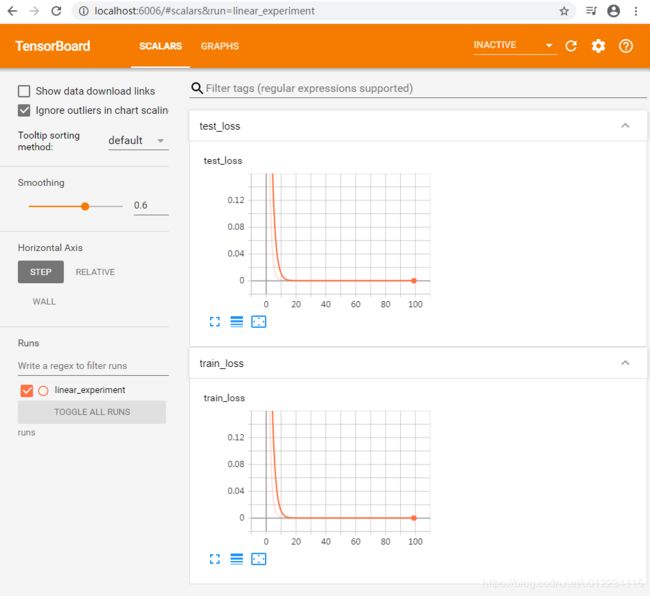
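`add_graph` traces the model with the input it is given, so the input only needs a shape the model accepts. The sketch below passes a batch of one sample (shape `(1, 2)`) rather than a single unbatched vector, which is the more common convention for models that expect a batch dimension. It is an assumption-laden illustration: a bare `nn.Linear` stands in for `LinearNet`, and the log goes to a temporary directory.

```python
import os
import tempfile

import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter

net = nn.Linear(2, 1)            # stand-in for the LinearNet defined earlier
sample_batch = torch.rand(1, 2)  # one sample with an explicit batch dimension

log_dir = tempfile.mkdtemp()     # throwaway directory for this sketch
writer = SummaryWriter(log_dir)
writer.add_graph(net, sample_batch)  # traces net on the sample and logs the graph
writer.close()

event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(event_files)
```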

Visualizing the training process

Define a new training function that writes scalar values during training, so that TensorBoard can draw the curves.

    # train and record scalar
    def train2(net, num_epochs, batch_size,
               train_features, train_labels,
               test_features, test_labels,
               loss, optimizer):
        print("=== train begin ===")
        # data process
        train_dataset = Data.TensorDataset(train_features, train_labels)
        test_dataset = Data.TensorDataset(test_features, test_labels)
        train_iter = Data.DataLoader(train_dataset, batch_size, shuffle=True)
        test_iter = Data.DataLoader(test_dataset, batch_size, shuffle=True)

        # train by step
        for epoch in range(num_epochs):
            for x, y in train_iter:
                ls = loss(net(x).view(-1, 1), y.view(-1, 1))
                optimizer.zero_grad()
                ls.backward()
                optimizer.step()

            # compute the loss on the full train and test sets after each epoch
            with torch.no_grad():
                train_loss = loss(net(train_features).view(-1, 1), train_labels.view(-1, 1)).item()
                test_loss = loss(net(test_features).view(-1, 1), test_labels.view(-1, 1)).item()

            # write to tensorboard (uses the module-level writer defined above)
            writer.add_scalar("train_loss", train_loss, epoch)
            writer.add_scalar("test_loss", test_loss, epoch)

            if epoch % 10 == 0:
                print("epoch %d: train loss %f, test loss %f" % (epoch, train_loss, test_loss))

        print("final epoch: train loss %f, test loss %f" % (train_loss, test_loss))
        print("=== train end ===")

    train2(net, num_epochs, batch_size, train_features, train_labels, test_features, test_labels, loss, optimizer)

The output is as follows:

    === train begin ===
    epoch 0: train loss 0.928869, test loss 0.978139
    epoch 10: train loss 0.000948, test loss 0.000902
    epoch 20: train loss 0.000102, test loss 0.000104
    epoch 30: train loss 0.000101, test loss 0.000105
    epoch 40: train loss 0.000101, test loss 0.000102
    epoch 50: train loss 0.000100, test loss 0.000103
    epoch 60: train loss 0.000101, test loss 0.000105
    epoch 70: train loss 0.000102, test loss 0.000103
    epoch 80: train loss 0.000104, test loss 0.000110
    epoch 90: train loss 0.000100, test loss 0.000103
    final epoch: train loss 0.000101, test loss 0.000104
    === train end ===

Refresh the page to see the loss curves.
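Logging the two losses under separate tags places them in separate charts. One possible variation, not used in the code above, is `SummaryWriter.add_scalars`, which groups several series under a single main tag so they can be compared in one chart. The sketch below uses made-up loss values and a temporary log directory, and closes the writer at the end so that everything is flushed to disk.

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter

log_dir = tempfile.mkdtemp()  # throwaway directory for this sketch
writer = SummaryWriter(log_dir)

for epoch in range(5):
    # Made-up values standing in for the real train and test losses.
    train_loss = 1.0 / (epoch + 1)
    test_loss = 1.1 / (epoch + 1)
    # One main tag, one series per dictionary key.
    writer.add_scalars("loss", {"train": train_loss, "test": test_loss}, epoch)

writer.close()  # flush and close all underlying event files

# add_scalars writes each series into its own sub-directory of log_dir.
sub_dirs = sorted(d for d in os.listdir(log_dir)
                  if os.path.isdir(os.path.join(log_dir, d)))
print(sub_dirs)
```

Because each series is stored as its own run, the TensorBoard run selector gains one entry per series. That keeps the comparison in a single chart at the cost of a longer run list.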

More advanced usage is left for you to explore.