spark-shell fails to start: Yarn application has already ended! It might have been killed or unable to launch...

The first half is adapted from https://www.cnblogs.com/tibit/p/7337045.html (the second half is my own).

```shell
spark-shell --master=yarn --deploy-mode=client
```

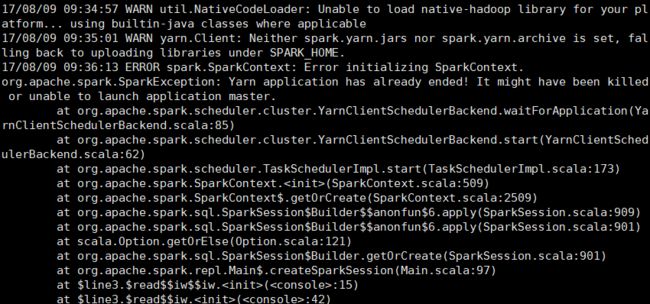

The screenshot shows the startup log and the error messages.

Of these, "Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME" is only a warning. The official documentation explains it as follows:

To make Spark runtime jars accessible from YARN side, you can specify spark.yarn.archive or spark.yarn.jars. For details please refer to Spark Properties. If neither spark.yarn.archive nor spark.yarn.jars is specified, Spark will create a zip file with all jars under $SPARK_HOME/jars and upload it to the distributed cache.

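In short: if neither spark.yarn.jars nor spark.yarn.archive is set, Spark zips up all the jars under $SPARK_HOME/jars and uploads the zip to YARN's distributed cache. Packaging and distribution therefore happen automatically, and leaving both parameters unset is fine. The only cost is that the jars are uploaded again every time an application is launched.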
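If neither property is set, the jars are zipped and uploaded on every launch. To avoid that, you can build the archive once and point spark.yarn.archive at it. The Spark on YARN docs say the jar files must sit at the root of that archive. The following is a minimal Python sketch under that assumption. The function name `build_spark_jars_archive` and the output file name are hypothetical.

```python
import os
import zipfile


def build_spark_jars_archive(spark_home: str, out_path: str) -> list:
    """Zip every jar under <spark_home>/jars into the ROOT of out_path.

    Assumption: spark.yarn.archive expects the jar files at the archive
    root, not inside a subdirectory. Returns the sorted entry names written.
    """
    jars_dir = os.path.join(spark_home, "jars")
    names = sorted(n for n in os.listdir(jars_dir) if n.endswith(".jar"))
    # ZIP_STORED: jars are already zip-compressed, so deflating again gains little
    with zipfile.ZipFile(out_path, "w", compression=zipfile.ZIP_STORED) as zf:
        for name in names:
            # arcname=name places each jar at the archive root
            zf.write(os.path.join(jars_dir, name), arcname=name)
    return names
```

Usage, as an example only:

1. Run `build_spark_jars_archive(os.environ["SPARK_HOME"], "spark-jars.zip")`.
2. Upload the result once, e.g. `hdfs dfs -put spark-jars.zip /spark/`. The HDFS path is illustrative.
3. Add `spark.yarn.archive hdfs:///spark/spark-jars.zip` to spark-defaults.conf.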

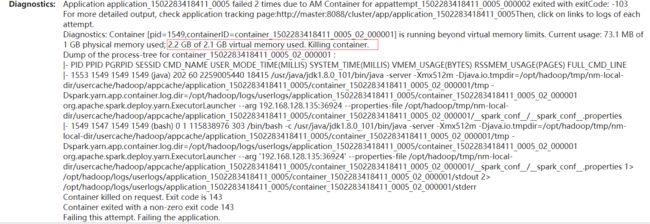

"Yarn application has already ended! It might have been killed or unable to launch application master",这个可是一个异常,打开mr管理页面,我的是 http://192.168.128.130/8088 ,

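The key part is in the red box: the container was using 2.2 GB of virtual memory, over its 2.1 GB limit. That cap is the container's physical memory allocation multiplied by yarn.nodemanager.vmem-pmem-ratio, which defaults to 2.1, so this was presumably a 1 GB container.

In other words, the container exceeded its virtual memory limit and the NodeManager killed it. All the actual work runs inside containers, so once the container is gone, nothing else can run.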
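The arithmetic behind the kill is simple. The following is a minimal Python sketch with hypothetical function names. It assumes a 1 GB container, which is inferred from the 2.1 GB cap and the default ratio rather than read from the screenshot.

```python
def vmem_limit_gb(pmem_gb: float, vmem_pmem_ratio: float = 2.1) -> float:
    """Virtual memory cap for a container: physical allocation times
    yarn.nodemanager.vmem-pmem-ratio (YARN's default ratio is 2.1)."""
    return pmem_gb * vmem_pmem_ratio


def exceeds_vmem_limit(vmem_used_gb: float, pmem_gb: float,
                       vmem_pmem_ratio: float = 2.1) -> bool:
    """True when virtual memory use is above the cap, i.e. the case in which
    the NodeManager's virtual memory check kills the container."""
    return vmem_used_gb > vmem_limit_gb(pmem_gb, vmem_pmem_ratio)


print(vmem_limit_gb(1.0))                 # prints 2.1
print(exceeds_vmem_limit(2.2, 1.0))       # prints True  (2.2 GB > 2.1 GB)
print(exceeds_vmem_limit(2.2, 1.0, 4.0))  # prints False (cap is now 4 GB)
```

With a ratio of 4, the same 1 GB container may use up to 4 GB of virtual memory. Disabling the check skips the comparison altogether.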

Solution

Add the following to yarn-site.xml, inside the `<configuration>` element. You only need one of the two settings:

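```xml
<!-- Option 1: turn off the virtual memory check entirely -->
<property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
    <description>Whether virtual memory limits will be enforced for containers</description>
</property>

<!-- Option 2: keep the check, but raise the virtual-to-physical memory ratio -->
<property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>4</value>
    <description>Ratio between virtual memory to physical memory when setting memory limits for containers</description>
</property>
```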
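You can script the yarn-site.xml change instead of editing the file by hand, for example when pushing it to several NodeManager hosts. The following is a minimal Python sketch using the standard library's ElementTree. `set_yarn_property` is a hypothetical helper.

ElementTree drops XML comments when it rewrites the file, so back up yarn-site.xml first.

```python
import xml.etree.ElementTree as ET


def set_yarn_property(path: str, name: str, value: str) -> None:
    """Add or update a <property> entry in a Hadoop-style XML config file.

    Assumes the root element is <configuration>, as in yarn-site.xml.
    Comments in the original file are not preserved on rewrite.
    """
    tree = ET.parse(path)
    root = tree.getroot()
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == name:
            value_el = prop.find("value")
            if value_el is None:  # malformed entry without <value>
                value_el = ET.SubElement(prop, "value")
            value_el.text = value  # update the existing entry in place
            break
    else:
        # no entry with this name yet: append a new <property>
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    tree.write(path, encoding="utf-8", xml_declaration=True)
```

For example, on Hadoop 2.x the file is typically `$HADOOP_HOME/etc/hadoop/yarn-site.xml`:

```python
set_yarn_property(
    os.path.expandvars("$HADOOP_HOME/etc/hadoop/yarn-site.xml"),
    "yarn.nodemanager.vmem-pmem-ratio",
    "4",
)
```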

After making the change on every NodeManager node, restart Hadoop so that YARN picks it up, then launch spark-shell again.

--------------------------------------------------- Original content below ------------------------------------------------------------

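I installed Spark 2.3 on the YARN master node of an old Spark 1.6 cluster. Local mode started normally, but running Spark 2.3 on YARN failed with the same error as above. The difference was in YARN's error message: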

This time there is no direct, obvious error message, so I dug into the ApplicationMaster container's log: $HADOOP_HOME/logs/userlogs/application_1522048616169_0028/container_1522048616169_0028_01_000001/stderr
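The directory names in that log path follow YARN's ID scheme: the ApplicationMaster normally runs in container 000001 of attempt 01. The following is a small Python sketch that derives the path from an application ID. It assumes:

- the older ID format without an epoch field (IDs like `container_e17_...` on newer clusters are not handled);
- logs are kept under $HADOOP_HOME/logs/userlogs on the node that ran the AM.

The helper names are hypothetical.

```python
import os


def am_container_id(app_id: str, attempt: int = 1) -> str:
    """ApplicationMaster container ID for one attempt of a YARN application.

    Assumes the classic ID format without an epoch field:
    application_<clusterTs>_<seq> -> container_<clusterTs>_<seq>_<attempt>_000001
    """
    parts = app_id.split("_")
    if len(parts) != 3 or parts[0] != "application":
        raise ValueError(f"not an application id: {app_id}")
    _, cluster_ts, seq = parts
    # the AM is normally the first container (000001) of each attempt
    return f"container_{cluster_ts}_{seq}_{attempt:02d}_000001"


def am_stderr_path(hadoop_home: str, app_id: str, attempt: int = 1) -> str:
    """Local stderr path of the AM container, assuming logs are kept under
    $HADOOP_HOME/logs/userlogs on the node that ran the AM."""
    return os.path.join(hadoop_home, "logs", "userlogs", app_id,
                        am_container_id(app_id, attempt), "stderr")
```

If log aggregation is enabled, `yarn logs -applicationId application_1522048616169_0028` fetches the same output without logging into the node.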

The stderr contains the following stack trace:

```
Exception in thread "main" java.lang.UnsupportedClassVersionError: org/apache/spark/network/util/ByteUnit : Unsupported major.minor version 52.0
	at java.lang.ClassLoader.defineClass1(Native Method)
	at java.lang.ClassLoader.defineClass(ClassLoader.java:800)
	at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
	at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)
	at java.net.URLClassLoader.access$100(URLClassLoader.java:71)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:361)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
	at java.security.AccessController.doPrivileged(Native Method)
	at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
	at org.apache.spark.deploy.history.config$.
	at org.apache.spark.deploy.history.config$.
	at org.apache.spark.SparkConf$.
	at org.apache.spark.SparkConf$.
	at org.apache.spark.SparkConf.set(SparkConf.scala:94)
	at org.apache.spark.SparkConf$$anonfun$loadFromSystemProperties$3.apply(SparkConf.scala:76)
	at org.apache.spark.SparkConf$$anonfun$loadFromSystemProperties$3.apply(SparkConf.scala:75)
	at scala.collection.TraversableLike$WithFilter$$anonfun$foreach$1.apply(TraversableLike.scala:733)
	at scala.collection.immutable.HashMap$HashMap1.foreach(HashMap.scala:221)
	at scala.collection.immutable.HashMap$HashTrieMap.foreach(HashMap.scala:428)
	at scala.collection.immutable.HashMap$HashTrieMap.foreach(HashMap.scala:428)
	at scala.collection.immutable.HashMap$HashTrieMap.foreach(HashMap.scala:428)
	at scala.collection.TraversableLike$WithFilter.foreach(TraversableLike.scala:732)
	at org.apache.spark.SparkConf.loadFromSystemProperties(SparkConf.scala:75)
	at org.apache.spark.SparkConf.
	at org.apache.spark.SparkConf.
	at org.apache.spark.deploy.yarn.ApplicationMaster.
	at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:823)
	at org.apache.spark.deploy.yarn.ExecutorLauncher$.main(ApplicationMaster.scala:854)
	at org.apache.spark.deploy.yarn.ExecutorLauncher.main(ApplicationMaster.scala)
```
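"Unsupported major.minor version 52.0" means the class was compiled for Java 8 (class file major version 52) and the JVM loading it is older. To check which Java release a jar needs, you can read the class file header directly. The following is a minimal Python sketch; the helper names are hypothetical.

```python
import struct
import zipfile


def class_major_version(data: bytes) -> int:
    """Major version from the first 8 bytes of a .class file
    (u4 magic 0xCAFEBABE, u2 minor_version, u2 major_version)."""
    if len(data) < 8:
        raise ValueError("too short to be a class file")
    magic, _minor, major = struct.unpack(">IHH", data[:8])
    if magic != 0xCAFEBABE:
        raise ValueError("not a class file")
    return major


def java_release(major: int) -> int:
    """Java release for a class file major version (49 -> 5, 51 -> 7, 52 -> 8)."""
    if major < 49:
        raise ValueError(f"pre-Java 5 class file version: {major}")
    return major - 44


def jar_class_version(jar_path: str, class_name: str) -> int:
    """Major version of one class inside a jar, e.g. the class named in the stack trace."""
    entry = class_name.replace(".", "/") + ".class"
    with zipfile.ZipFile(jar_path) as zf:
        return class_major_version(zf.read(entry))
```

For example, pass the jar that should contain `org.apache.spark.network.util.ByteUnit` to `jar_class_version`:

```python
jar_class_version(
    os.path.expandvars("$SPARK_HOME/jars/spark-network-common_2.11-2.3.0.jar"),
    "org.apache.spark.network.util.ByteUnit",
)
```

The jar file name above is an assumption about the Spark 2.3 layout. For Spark 2.3 the call should return 52, and `java_release(52)` gives 8.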

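So the stack trace points to the JDK. Class file major version 52 means Java 8, and Spark 2.3 requires Java 8, but the old configuration still pointed YARN at JDK 7. So I changed yarn-env.sh: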

```shell
#export JAVA_HOME=/usr/java/jdk1.7.0_55
export JAVA_HOME=/r2/jwb/java/jdk1.8.0_161
```
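The AM and executors start on the NodeManager hosts, so this JDK path must exist and be Java 8 on every one of them. The following is a minimal Python sketch for checking a JAVA_HOME; the helper names are hypothetical. It assumes the JDK ships a `release` file with a JAVA_VERSION line, as standard JDK 8 builds do. Running `$JAVA_HOME/bin/java -version` is the more universal check.

```python
import os


def java_version_from_home(java_home: str) -> str:
    """JAVA_VERSION value from <java_home>/release, e.g. '1.8.0_161'."""
    with open(os.path.join(java_home, "release"), encoding="utf-8") as f:
        for line in f:
            key, _, value = line.strip().partition("=")
            if key == "JAVA_VERSION":
                return value.strip('"')
    raise ValueError(f"no JAVA_VERSION line in {java_home}/release")


def java_major(version: str) -> int:
    """'1.7.0_55' -> 7, '1.8.0_161' -> 8, '11.0.2' -> 11."""
    parts = version.split(".")
    # pre-9 versions use the legacy "1.x" scheme
    return int(parts[1]) if parts[0] == "1" else int(parts[0])
```

On each NodeManager host, the following should evaluate to True:

```python
java_major(java_version_from_home("/r2/jwb/java/jdk1.8.0_161")) >= 8
```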

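I didn't restart YARN at first, so I kept getting the same error... yarn-env.sh is only read when the YARN daemons start. After restarting YARN, I tried again: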

This time a Spark session was created, but something is still off: the "non-zero exit code 1" error is still there. I'll leave it at that for now o(╯□╰)o