pytorch深度学习入门之Logistic回归

Logistic回归——理论篇

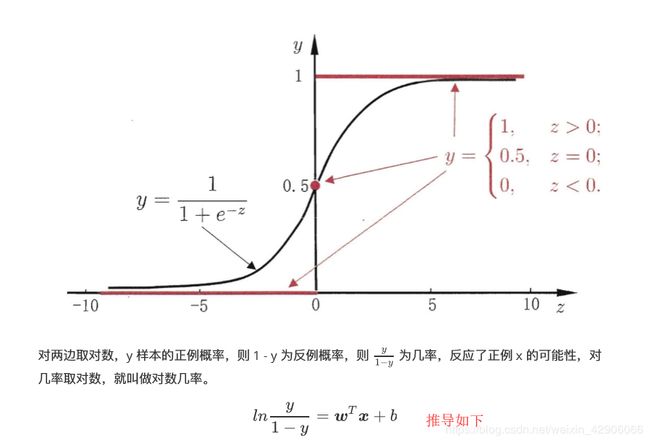

【推导】

y = 1 1 + e − z 1 + e − z = 1 y e − z = 1 − y y e z = y 1 − y z = ln y 1 − y ∴ 对 数 几 率 或 l o g i t 函 数 : l o g i t ( p ) = ln y 1 − y = w ⊤ x + b y=\frac{1}{1+e^{-z}}\\ 1+e^{-z}=\frac{1}{y}\\ e^{-z}=\frac{1-y}{y}\\e^{z}=\frac{y}{1-y}\\z=\ln \frac{y}{1-y}\\ \\\therefore 对数几率或logit函数:logit(p)= \ln \frac{y}{1-y}=w^{\top} x+b y=1+e−z11+e−z=y1e−z=y1−yez=1−yyz=ln1−yy∴对数几率或logit函数:logit(p)=ln1−yy=w⊤x+b

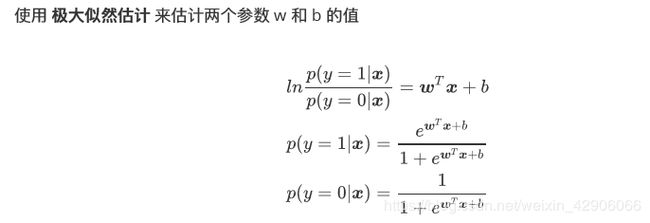

模型的参数估计:

- 用极大似然估计求解对数几率回归中的 w w w 和 b b b .

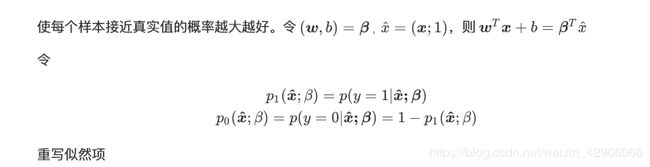

所以似然函数为:

L ( w , b ) = ∏ i = 1 m p ( y i ∣ x i ; w , b ) L(w, b)=\prod_{i=1}^{m} p\left(y_{i} | x_{i} ; w, b\right) L(w,b)=i=1∏mp(yi∣xi;w,b)取对数之后的对数似然函数为:

l ( w , b ) = ∑ i = 1 m ln p ( y i ∣ x i ; w , b ) l(\boldsymbol{w}, b)=\sum_{i=1}^{m} \ln p\left(y_{i} | \boldsymbol{x}_{i} ; \boldsymbol{w}, b\right) l(w,b)=i=1∑mlnp(yi∣xi;w,b)

p ( y i ∣ x i ; w , b ) = [ p 1 ( x ^ i ; β ) ] y i [ p 0 ( x ^ i ; β ) ] 1 − y i p\left(y_{i} | \boldsymbol{x}_{i} ; \boldsymbol{w}, b\right)=\left[p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right]^{y_{i}}\left[p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right]^{1-y_{i}} p(yi∣xi;w,b)=[p1(x^i;β)]yi[p0(x^i;β)]1−yi

其中, y i y_{i} yi取0或者1。

将似然项代入到对数似然函数,得:

ℓ ( β ) = ∑ i = 1 m ln ( [ p 1 ( x ^ i ; β ) ] y i [ p 0 ( x ^ i ; β ) ] 1 − y i ) = ∑ i = 1 m [ y i ln ( p 1 ( x ^ i ; β ) ) + ( 1 − y i ) ln ( p 0 ( x ^ i ; β ) ) ] = ∑ i = 1 m { y i [ ln ( p 1 ( x ^ i ; β ) ) − ln ( p 0 ( x ^ i ; β ) ) ] + ln ( p 0 ( x ^ i ; β ) ) } = ∑ i = 1 m [ y i ln ( p 1 ( x ^ i ; β ) p 0 ( x ^ i ; β ) ) + ln ( p 0 ( x ^ i ; β ) ) ] = ∑ i = 1 m [ y i ln ( e β T x ^ i ) + ln ( 1 1 + e β T x ^ i ) ] = ∑ i = 1 m ( y i β T x ^ i − ln ( 1 + e β T x ^ i ) ) \begin{aligned} \ell(\boldsymbol{\beta}) &=\sum_{i=1}^{m} \ln \left(\left[p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right]^{y_{i}}\left[p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right]^{1-y_{i}}\right) \\ &=\sum_{i=1}^{m}\left[y_{i} \ln \left(p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right)+\left(1-y_{i}\right) \ln \left(p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right)\right] \\ &=\sum_{i=1}^{m}\left\{y_{i}\left[\ln \left(p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right)-\ln \left(p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right)\right]+\ln \left(p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right)\right\} \\ &=\sum_{i=1}^{m}\left[y_{i} \ln \left(\frac{p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)}{p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)}\right)+\ln \left(p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right)\right] \\ &=\sum_{i=1}^{m}\left[y_{i} \ln \left(e^{\boldsymbol{\beta}^{T} \hat{x}_{i}}\right)+\ln \left(\frac{1}{1+e^{\beta^{T} \hat{x}_{i}}}\right)\right] \\ &=\sum_{i=1}^{m}\left(y_{i} \boldsymbol{\beta}^{T} \hat{\boldsymbol{x}}_{i}-\ln \left(1+e^{\boldsymbol{\beta}^{T} \hat{x}_{i}}\right)\right) \end{aligned} ℓ(β)=i=1∑mln([p1(x^i;β)]yi[p0(x^i;β)]1−yi)=i=1∑m[yiln(p1(x^i;β))+(1−yi)ln(p0(x^i;β))]=i=1∑m{yi[ln(p1(x^i;β))−ln(p0(x^i;β))]+ln(p0(x^i;β))}=i=1∑m[yiln(p0(x^i;β)p1(x^i;β))+ln(p0(x^i;β))]=i=1∑m[yiln(eβTx^i)+ln(1+eβTx^i1)]=i=1∑m(yiβTx^i−ln(1+eβTx^i))

由于此式仍为极大似然估计的似然函数,所以最大化似然函数等价于最小化似然函数的相反数,也即在似然函数前添加负号,即可得下式:【西瓜书 p 59 p_{59} p59最终似然函数,公式 ( 3.27 ) (3.27) (3.27)】

l ( β ) = ∑ i = 1 m ( − y i β T x ^ i + ln ( 1 + e β T x ^ i ) ) l(\beta)=\sum_{i=1}^{m}\left(-y_{i} \boldsymbol{\beta}^{T} \hat{x}_{i}+\ln \left(1+e^{\boldsymbol{\beta}^{T} \hat{x}_{i}}\right)\right) l(β)=i=1∑m(−yiβTx^i+ln(1+eβTx^i))

对 l ( β ) l(\beta) l(β)求极小值就能够得到 ( w , b ) = β (w, b) = \beta (w,b)=β 的估计值,可以使用最简单的梯度下降法来求得。

用 l ( β ) l(\beta) l(β) 对 w w w 求导 ,可以得到:【西瓜书 p 60 p_{60} p60,公式 ( 3.30 ) (3.30) (3.30)】

∂ l ( β ) ∂ β = ∑ i = 1 m ( − y i x ^ i + e β T x ^ i ⋅ x ^ i 1 + e β T x ^ i ) = ∑ i = 1 m ( − y i x ^ i + x ^ i p i ( x ^ i ; β ) ) = − ∑ i = 1 m x ^ i ( y i − p 1 ( x ^ i ; β ) ) \begin{aligned} \frac{\partial l(\beta)}{\partial \beta} &=\sum_{i=1}^{m}\left(-y_{i} \hat{x}_{i}+\frac{e^{\beta^{T} \hat{x}_{i}} \cdot \hat{x}_{i}}{1+e^{\beta^{T} \hat{x}_{i}}}\right) \\ &=\sum_{i=1}^{m}\left(-y_{i} \hat{x}_{i}+\hat{x}_{i} p_{i}\left(\hat{x}_{i} ; \beta\right)\right) \\ &=-\sum_{i=1}^{m} \hat{x}_{i}\left(y_{i}-p_{1}\left(\hat{x}_{i} ; \beta\right)\right)\end{aligned} ∂β∂l(β)=i=1∑m(−yix^i+1+eβTx^ieβTx^i⋅x^i)=i=1∑m(−yix^i+x^ipi(x^i;β))=−i=1∑mx^i(yi−p1(x^i;β))

梯度下降公式,如下:

β t + 1 = β t − η ⋅ ∂ l ( β ) ∂ β \beta_{t+1}=\beta_{t}-\eta \cdot \frac{\partial l(\beta)}{\partial \beta} βt+1=βt−η⋅∂β∂l(β)

Reference:

1、《深度学习入门之PyTorch》

2、西瓜书第三章线性模型总结

3、南瓜书公式推导

Logistic回归——实践篇(pytorch)

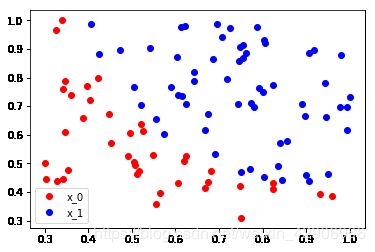

数据集

- 说明: 每个数据点是一行,每一行中前边两个数据表示 x x x 坐标和 y y y 坐标,最后一个数据表示其类别 0 0 0 或 1 1 1。

- 链接

34.62365962451697,78.0246928153624,0

30.28671076822607,43.89499752400101,0

35.84740876993872,72.90219802708364,0

60.18259938620976,86.30855209546826,1

79.0327360507101,75.3443764369103,1

45.08327747668339,56.3163717815305,0

61.10666453684766,96.51142588489624,1

75.02474556738889,46.55401354116538,1

76.09878670226257,87.42056971926803,1

84.43281996120035,43.53339331072109,1

95.86155507093572,38.22527805795094,0

75.01365838958247,30.60326323428011,0

82.30705337399482,76.48196330235604,1

69.36458875970939,97.71869196188608,1

39.53833914367223,76.03681085115882,0

53.9710521485623,89.20735013750205,1

69.07014406283025,52.74046973016765,1

67.94685547711617,46.67857410673128,0

70.66150955499435,92.92713789364831,1

76.97878372747498,47.57596364975532,1

67.37202754570876,42.83843832029179,0

绘图:

# 从 data.txt 中读入点

with open('./datasets/data.txt', 'r') as f:

data_list = [i.split('\n')[0].split(',') for i in f.readlines()]

data = [(float(i[0]), float(i[1]), float(i[2])) for i in data_list]

# 标准化

x0_max = max([i[0] for i in data])

x1_max = max([i[1] for i in data])

data = [(i[0]/x0_max, i[1]/x1_max, i[2]) for i in data]

# filter() 过滤序列,过滤掉不符合条件的元素,返回由符合条件元素组成的新列表

x0 = list(filter(lambda x: x[-1] == 0.0, data)) # 选择第一类的点,类别为0(列表中每一个元组的最后一位为0或者1)

x1 = list(filter(lambda x: x[-1] == 1.0, data)) # 选择第二类的点,类别为1

plot_x0 = [i[0] for i in x0] # 类别为0的点的横坐标

plot_y0 = [i[1] for i in x0] # 类别为0的点的纵坐标

plot_x1 = [i[0] for i in x1] # 类别为1的点的横坐标

plot_y1 = [i[1] for i in x1] # 类别为1的点的纵坐标

plt.plot(plot_x0, plot_y0, 'ro', label='x_0')

plt.plot(plot_x1, plot_y1, 'bo', label='x_1')

plt.legend(loc='best')

完整程序,如下:

import torch

from torch import nn, optim

from torch.autograd import Variable

import numpy as np

import matplotlib.pyplot as plt

import time

class LogisticRegression(nn.Module):

def __init__(self):

super(LogisticRegression, self).__init__()

# self.lr = nn.Linear(2, 1)

# self.sm = nn.Sigmoid() # 增加sigmoid激活函数

self.lr = nn.Sequential(

nn.Linear(2, 1),

nn.Sigmoid())

def forward(self, x):

x = self.lr(x)

# x = self.sm(x)

return x

if __name__ == '__main__':

start = time.time()

with open('./datasets/data.txt', 'r') as f:

data_list = [i.split('\n')[0].split(',') for i in f.readlines()] # 读取数据并去掉换行按,分割

data = [(float(i[0]), float(i[1]), float(i[2])) for i in data_list] # str->float

# 标准化

x0_max = max([i[0] for i in data])

x1_max = max([i[1] for i in data])

data = [(i[0]/x0_max, i[1]/x1_max, i[2]) for i in data] #[(0.34683364331979855, 0.7891689906960261, 0.0),..]

data = torch.Tensor(data) #tensor([[0.3468, 0.7892, 0.0000],...])

x_train = data[:, 0:2] # torch.Size([100, 2])

y_train = data[:, -1].unsqueeze(1) # torch.Size([100, 1])

# 定义模型

if torch.cuda.is_available(): # GPU cuda加速

model = LogisticRegression().cuda()

else: # CPU

model = LogisticRegression()

# 定义损失函数和优化器

criterion = nn.BCELoss()

optimizer = optim.SGD(model.parameters(), lr=5e-3, momentum=0.9) # 提取需优化的参数,学习率为0.001,动量为0.9

# 训练模型

num_epoch = 20000

for epoch in range(num_epoch):

if torch.cuda.is_available():

inputs = Variable(x_train).cuda()

target = Variable(y_train).cuda()

else:

inputs = Variable(x_train)

target = Variable(y_train)

# 前向传播

out = model(inputs)

loss = criterion(out, target)

mask = out.ge(0.5).float() # 以0.5为阈值进行分类, 大于0.5的为1 ,否则为0

correct = (mask == target).sum() # 计算正确预测的样本个数

acc = correct.item() / x_train.size(0) # 计算精度

# 反向传播

optimizer.zero_grad() # 归零梯度

loss.backward() # 反向传播

optimizer.step() # 更新参数

if(epoch + 1) % 1000 == 0:

print('Epoch[{}/{}], loss:{:.4f}, acc:{:.4f}'.format(epoch+1, num_epoch, loss.item(), acc))

during = time.time() - start

print('During Time: {:.3f} s'.format(during))

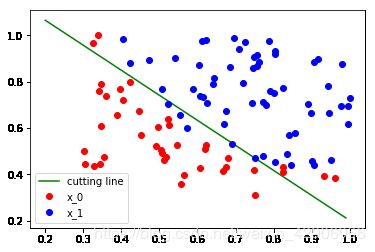

# 结果可视化

w0, w1 = model.lr[0].weight[0]

w0 = w0.item()

w1 = w1.item()

b = model.lr[0].bias.item()

plot_x = np.arange(0.2, 1, 0.01)

plot_y = (-w0 * plot_x - b) / w1

plt.plot(plot_x, plot_y, 'g', label='cutting line') # 拟合直线

# filter() 过滤序列,过滤掉不符合条件的元素,返回由符合条件元素组成的新列表

x0 = list(filter(lambda x: x[-1] == 0.0, data)) # 选择第一类的点,类别为0(列表中每一个元组的最后一位为0或者1)

x1 = list(filter(lambda x: x[-1] == 1.0, data)) # 选择第二类的点,类别为1

plot_x0 = [i[0] for i in x0] # 类别为0的点的横坐标

plot_y0 = [i[1] for i in x0] # 类别为0的点的纵坐标

plot_x1 = [i[0] for i in x1] # 类别为1的点的横坐标

plot_y1 = [i[1] for i in x1] # 类别为1的点的纵坐标

plt.plot(plot_x0, plot_y0, 'ro', label='x_0') # 数据集

plt.plot(plot_x1, plot_y1, 'bo', label='x_1')

plt.legend(loc='best')

plt.show()

输出结果:

Epoch[1000/20000], loss:0.5752, acc:0.6500

Epoch[2000/20000], loss:0.4983, acc:0.7800

Epoch[3000/20000], loss:0.4461, acc:0.8700

Epoch[4000/20000], loss:0.4090, acc:0.9100

Epoch[5000/20000], loss:0.3814, acc:0.9100

Epoch[6000/20000], loss:0.3601, acc:0.9300

Epoch[7000/20000], loss:0.3433, acc:0.9200

Epoch[8000/20000], loss:0.3296, acc:0.9200

Epoch[9000/20000], loss:0.3182, acc:0.9100

Epoch[10000/20000], loss:0.3086, acc:0.9100

Epoch[11000/20000], loss:0.3004, acc:0.9100

Epoch[12000/20000], loss:0.2934, acc:0.9100

Epoch[13000/20000], loss:0.2872, acc:0.9100

Epoch[14000/20000], loss:0.2817, acc:0.9100

Epoch[15000/20000], loss:0.2768, acc:0.9100

Epoch[16000/20000], loss:0.2725, acc:0.9100

Epoch[17000/20000], loss:0.2686, acc:0.9100

Epoch[18000/20000], loss:0.2650, acc:0.9100

Epoch[19000/20000], loss:0.2618, acc:0.9000

Epoch[20000/20000], loss:0.2588, acc:0.9000

During Time: 33.413 s

拟合的直线,如下:

提取层结构及参数:

print(model)

print(model.lr.weight)

print(model.lr.bias)

输出结果:

LogisticRegression(

(lr): Linear(in_features=2, out_features=1, bias=True)

(sm): Sigmoid()

)

Parameter containing:

tensor([[4.1670, 3.7247]], device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-4.6508], device='cuda:0', requires_grad=True)

(new) nn.Sequential() 形式提取层结构及参数:

# nn.Sequential()

print(model)

print(model.lr[0].weight) # 相当于把所有层封装在list里,用下标来找到所在的层

print(model.lr[0].bias)

输出结果:

LogisticRegression(

(lr): Sequential(

(0): Linear(in_features=2, out_features=1, bias=True)

(1): Sigmoid()

)

)

Parameter containing:

tensor([[9.4361, 8.7443]], device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-11.2034], device='cuda:0', requires_grad=True)