pytorch 训练数据以及测试 全部代码(5) 网络

from networks import deeplab_xception, deeplab_resnet

# Network definition

if backbone == 'xception':

net = deeplab_xception.DeepLabv3_plus(nInputChannels=3, n_classes=21, os=16, pretrained=True)

elif backbone == 'resnet':

net = deeplab_resnet.DeepLabv3_plus(nInputChannels=3, n_classes=21, os=16, pretrained=True)

else:

raise NotImplementedError

这里面有两个网络xception和resnet

下面讲解文件deeplab_resnet里面的resnet

class DeepLabv3_plus(nn.Module):

def __init__(self, nInputChannels=3, n_classes=21, os=16, pretrained=False, _print=True):

if _print:

print("Constructing DeepLabv3+ model...")

print("Number of classes: {}".format(n_classes))

print("Output stride: {}".format(os))

print("Number of Input Channels: {}".format(nInputChannels))

super(DeepLabv3_plus, self).__init__()

# Atrous Conv

self.resnet_features = ResNet101(nInputChannels, os, pretrained=pretrained)

# ASPP

if os == 16:

rates = [1, 6, 12, 18]

elif os == 8:

rates = [1, 12, 24, 36]

else:

raise NotImplementedError

self.aspp1 = ASPP_module(2048, 256, rate=rates[0])

self.aspp2 = ASPP_module(2048, 256, rate=rates[1])

self.aspp3 = ASPP_module(2048, 256, rate=rates[2])

self.aspp4 = ASPP_module(2048, 256, rate=rates[3])

self.relu = nn.ReLU()

self.global_avg_pool = nn.Sequential(nn.AdaptiveAvgPool2d((1, 1)),

nn.Conv2d(2048, 256, 1, stride=1, bias=False),

nn.BatchNorm2d(256),

nn.ReLU())

self.conv1 = nn.Conv2d(1280, 256, 1, bias=False)

self.bn1 = nn.BatchNorm2d(256)

# adopt [1x1, 48] for channel reduction.

self.conv2 = nn.Conv2d(256, 48, 1, bias=False)

self.bn2 = nn.BatchNorm2d(48)

self.last_conv = nn.Sequential(nn.Conv2d(304, 256, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, n_classes, kernel_size=1, stride=1))

def forward(self, input):

x, low_level_features = self.resnet_features(input)

x1 = self.aspp1(x)

x2 = self.aspp2(x)

x3 = self.aspp3(x)

x4 = self.aspp4(x)

x5 = self.global_avg_pool(x)

x5 = F.upsample(x5, size=x4.size()[2:], mode='bilinear', align_corners=True)

x = torch.cat((x1, x2, x3, x4, x5), dim=1)

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = F.upsample(x, size=(int(math.ceil(input.size()[-2]/4)),

int(math.ceil(input.size()[-1]/4))), mode='bilinear', align_corners=True)

low_level_features = self.conv2(low_level_features)

low_level_features = self.bn2(low_level_features)

low_level_features = self.relu(low_level_features)

x = torch.cat((x, low_level_features), dim=1)

x = self.last_conv(x)

x = F.upsample(x, size=input.size()[2:], mode='bilinear', align_corners=True)

return x

def freeze_bn(self):

for m in self.modules():

if isinstance(m, nn.BatchNorm2d):

m.eval()

def __init_weight(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

# n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

# m.weight.data.normal_(0, math.sqrt(2. / n))

torch.nn.init.kaiming_normal_(m.weight)

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()继承torch.nn库里面的Module类,我们的模型就是它的子类。模块还可以包含其他模块,允许将它们嵌套在树形结构中。可以将子模块指定为常规属性。forward函数需要我们自己重写

class Module(object):

r"""Base class for all neural network modules.

Your models should also subclass this class.

Modules can also contain other Modules, allowing to nest them in

a tree structure. You can assign the submodules as regular attributes::

import torch.nn as nn

import torch.nn.functional as F

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.conv1 = nn.Conv2d(1, 20, 5)

self.conv2 = nn.Conv2d(20, 20, 5)

def forward(self, x):

x = F.relu(self.conv1(x))

return F.relu(self.conv2(x))

Submodules assigned in this way will be registered, and will have their

parameters converted too when you call `.cuda()`, etc.

"""

dump_patches = False

r"""This allows better BC support for :meth:`load_state_dict`. In

:meth:`state_dict`, the version number will be saved as in the attribute

`_metadata` of the returned state dict, and thus pickled. `_metadata` is a

dictionary with keys follow the naming convention of state dict. See

``_load_from_state_dict`` on how to use this information in loading.

If new parameters/buffers are added/removed from a module, this number shall

be bumped, and the module's `_load_from_state_dict` method can compare the

version number and do appropriate changes if the state dict is from before

the change."""

_version = 1

def __init__(self):

self._backend = thnn_backend

self._parameters = OrderedDict()

self._buffers = OrderedDict()

self._backward_hooks = OrderedDict()

self._forward_hooks = OrderedDict()

self._forward_pre_hooks = OrderedDict()

self._modules = OrderedDict()

self.training = True

def forward(self, *input):

r"""Defines the computation performed at every call.

Should be overridden by all subclasses.

.. note::

Although the recipe for forward pass needs to be defined within

this function, one should call the :class:`Module` instance afterwards

instead of this since the former takes care of running the

registered hooks while the latter silently ignores them.

"""

raise NotImplementedErrorsuper(DeepLabv3_plus, self).__init__()这是对继承自父类的属性进行初始化。而且是用父类的初始化方法来初始化继承的属性。也就是说,子类继承了父类的所有属性和方法,父类属性自然会用父类方法来进行初始化。当然,如果初始化的逻辑与父类的不同,不使用父类的方法,自己重新初始化也是可以的。请参考https://www.imooc.com/qadetail/72165

# Atrous Conv

self.resnet_features = ResNet101(nInputChannels, os, pretrained=pretrained)由上面的初始化可知number of input channels = 3, output stride = 16,这里调用了一个函数如下:

def ResNet101(nInputChannels=3, os=16, pretrained=False):

model = ResNet(nInputChannels, Bottleneck, [3, 4, 23, 3], os, pretrained=pretrained)

return model这里又调用了一个函数ResNet如下:可知这是一个类,则说明上面的mode是该类的一个实例,也就是说self.resnet_features是ResNet的一个实例。

class ResNet(nn.Module):

def __init__(self, nInputChannels, block, layers, os=16, pretrained=False):

def _make_layer(self, block, planes, blocks, stride=1, rate=1):

def _make_MG_unit(self, block, planes, blocks=[1,2,4], stride=1, rate=1):

def forward(self, input):

def _init_weight(self):

def _load_pretrained_model(self):

主线继续!根据输出的大小来确定rate

# ASPP

if os == 16:

rates = [1, 6, 12, 18]

elif os == 8:

rates = [1, 12, 24, 36]

else:

raise NotImplementedError然后更具不同的rate得到不同的块

self.aspp1 = ASPP_module(2048, 256, rate=rates[0])

self.aspp2 = ASPP_module(2048, 256, rate=rates[1])

self.aspp3 = ASPP_module(2048, 256, rate=rates[2])

self.aspp4 = ASPP_module(2048, 256, rate=rates[3])其中ASPP和上面的resnet101一样是一个网络

class ASPP_module(nn.Module):

def __init__(self, inplanes, planes, rate):

def forward(self, x):

def _init_weight(self):

主线继续!定义激活层

self.relu = nn.ReLU()定义全局平均层,这个是一个有序的容器,神经网络模块将按照在传入构造器的顺序依次被添加到计算图中执行,同时以神经网络模块为元素的有序字典也可以作为传入参数。 nn.Sequential可参考https://blog.csdn.net/dss_dssssd/article/details/82980222

self.global_avg_pool = nn.Sequential(nn.AdaptiveAvgPool2d((1, 1)),

nn.Conv2d(2048, 256, 1, stride=1, bias=False),

nn.BatchNorm2d(256),

nn.ReLU())nn.AdaptiveAvgPool2d这个函数如下:是将输入得到给定的大小输出,batch和channel保持不变The output is of size H x W, for any input size.

The number of output features is equal to the number of input planes.

Args:

output_size: the target output size of the image of the form H x W.

Can be a tuple (H, W) or a single H for a square image H x H

H and W can be either a ``int``, or ``None`` which means the size will be the same as that of the input.

Examples:

>>> # target output size of 5x7

>>> m = nn.AdaptiveAvgPool2d((5,7))

>>> input = torch.randn(1, 64, 8, 9) size=(1,64,8,9)

>>> output = m(input) size=(1,6,5,7)

>>> # target output size of 7x7 (square)

>>> m = nn.AdaptiveAvgPool2d(7)

>>> input = torch.randn(1, 64, 10, 9) size=(1,64,10,9)

>>> output = m(input) size=(1,64,7,7)

>>> # target output size of 10x7

>>> m = nn.AdaptiveMaxPool2d((None, 7))

>>> input = torch.randn(1, 64, 10, 9) size=(1,64,10,9)

>>> output = m(input) size=(1,64,10,7)

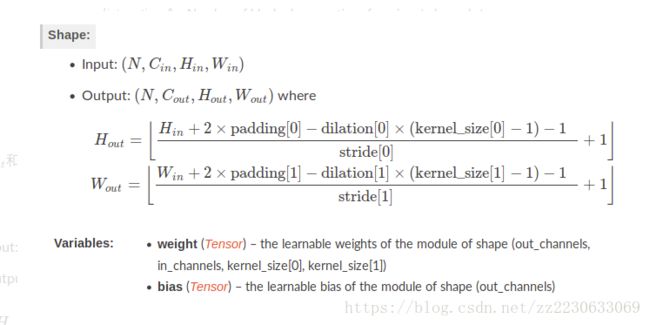

nn.Conv2d这个函数如下:和TensorFlow的卷积有不同之处,可参考https://blog.csdn.net/g11d111/article/details/82665265

class Conv2d(_ConvNd):

def __init__(self, in_channels, out_channels, kernel_size, stride=1,padding=0, dilation=1, groups=1, bias=True) Args:

in_channels (int): Number of channels in the input image

out_channels (int): Number of channels produced by the convolution

kernel_size (int or tuple): Size of the convolving kernel

stride (int or tuple, optional): Stride of the convolution. Default: 1

padding (int or tuple, optional): 输入的每一条边补充0的层数 Default: 0

dilation (int or tuple, optional): 卷积核元素之间的间距 Default: 1

groups (int, optional): Number of blocked connections from input channels to output channels. Default: 1

bias (bool, optional): If ``True``, adds a learnable bias to the output. Default: ``True``

标注:groups: 控制输入和输出之间的连接: group=1,输出是所有的输入的卷积;group=2,此时相当于有并排的两个卷积层,每个卷积层计算输入通道的一半,并且产生的输出是输出通道的一半,随后将这两个输出连接起来。

Shape:

- Input: `(N, C_{in}, H_{in}, W_{in})`

- Output: `(N, C_{out}, H_{out}, W_{out})`

Examples::

>>> # With square kernels and equal stride

>>> m = nn.Conv2d(16, 33, 3, stride=2)

>>> # non-square kernels and unequal stride and with padding

>>> m = nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2))

>>> # non-square kernels and unequal stride and with padding and dilation

>>> m = nn.Conv2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2), dilation=(3, 1))

>>> input = torch.randn(20, 16, 50, 100)

>>> output = m(input)计算方式如下向下取整

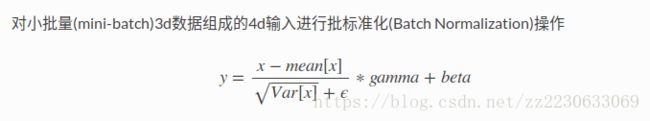

nn.BatchNorm2函数如下:

class torch.nn.BatchNorm2d(num_features, eps=1e-05, momentum=0.1, affine=True):

pass

"""

参数:

num_features: 来自期望输入的特征数,该期望输入的大小为'batch_size x num_features x height x width'

eps: 为保证数值稳定性(分母不能趋近或取0),给分母加上的值。默认为1e-5。

momentum: 动态均值和动态方差所使用的动量。默认为0.1。

affine: 一个布尔值,当设为true,给该层添加可学习的仿射变换参数也就是添加了weight和bias参数(learnable affine parameters)。

Shape: - 输入:(N, C,H, W) - 输出:(N, C, H, W)(输入输出相同)

"""主程序继续!下面定义了卷积,BN,一个时序容器(以他们传入的顺序被添加到容器中)

self.conv1 = nn.Conv2d(1280, 256, 1, bias=False)

self.bn1 = nn.BatchNorm2d(256)

# adopt [1x1, 48] for channel reduction.

self.conv2 = nn.Conv2d(256, 48, 1, bias=False)

self.bn2 = nn.BatchNorm2d(48)

self.last_conv = nn.Sequential(nn.Conv2d(304, 256, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1, bias=False),

nn.BatchNorm2d(256),

nn.ReLU(),

nn.Conv2d(256, n_classes, kernel_size=1, stride=1))接下来就是forward函数了

def forward(self, input):

x, low_level_features = self.resnet_features(input)上面这句得到的是resnet101的forward输出

下面得到四个不同rate的ASPP的forward输出

x1 = self.aspp1(x)

x2 = self.aspp2(x)

x3 = self.aspp3(x)

x4 = self.aspp4(x)然后相同的输入经过一个时序容器

x5 = self.global_avg_pool(x)x5向上采样得到和x4相同的大小(h和w)

x5 = F.upsample(x5, size=x4.size()[2:], mode='bilinear', align_corners=True)将上述所有的特征图堆叠在一起,使用torch.cat()函数,可参见https://blog.csdn.net/xrinosvip/article/details/81164697

在使用这个函数的时候,处理dim这一个维度,其他维度大小要保持一致。

x = torch.cat((x1, x2, x3, x4, x5), dim=1) # 在通道上面叠加接下来的代码不需要解释了:

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = F.upsample(x, size=(int(math.ceil(input.size()[-2]/4)),

int(math.ceil(input.size()[-1]/4))), mode='bilinear', align_corners=True)

low_level_features = self.conv2(low_level_features)

low_level_features = self.bn2(low_level_features)

low_level_features = self.relu(low_level_features)

x = torch.cat((x, low_level_features), dim=1)

x = self.last_conv(x)

x = F.upsample(x, size=input.size()[2:], mode='bilinear', align_corners=True)

return x然后下一个函数 freeze_bn,冻结BN。

def freeze_bn(self):

for m in self.modules():

if isinstance(m, nn.BatchNorm2d):

m.eval()self.modules返回一个包含 当前模型 所有模块的迭代器。NOTE: 重复的模块只被返回一次(children()也是)。 在下面的例子中, submodule 只会被返回一次:

# conv 和 conv1不一样

import torch.nn as nn

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.add_module("conv", nn.Conv2d(10, 20, 4))

self.add_module("conv1", nn.Conv2d(20 ,10, 4))

model = Model()

for module in model.modules():

print(module)

# 下面是输出

Model (

(conv): Conv2d(10, 20, kernel_size=(4, 4), stride=(1, 1))

(conv1): Conv2d(20, 10, kernel_size=(4, 4), stride=(1, 1))

)

Conv2d(10, 20, kernel_size=(4, 4), stride=(1, 1))

Conv2d(20, 10, kernel_size=(4, 4), stride=(1, 1))

# conv 和 conv1 一样

import torch.nn as nn

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

submodule = nn.Conv2d(10, 20, 4)

self.add_module("conv", submodule)

self.add_module("conv1", submodule)

model = Model()

for module in model.modules():

print(module)

#下面是输出

Model (

(conv): Conv2d(10, 20, kernel_size=(4, 4), stride=(1, 1))

(conv1): Conv2d(10, 20, kernel_size=(4, 4), stride=(1, 1))

)

Conv2d(10, 20, kernel_size=(4, 4), stride=(1, 1)) #重复的,复制的模块只返回一个 然后这个eval(): 将模型设置成evaluation模式,仅仅当模型中有Dropout和BatchNorm是才会有影响。

拓展一下, train(mode=True): 将module设置为 training mode。仅仅当模型中有Dropout和BatchNorm是才会有影响。

类DeepLabv3_plus的最后一个函数:

def __init_weight(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

# n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

# m.weight.data.normal_(0, math.sqrt(2. / n))

torch.nn.init.kaiming_normal_(m.weight)

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()可以看到这个函数的命名仅前面有双下划线__,用于对象的数据封装,以此命名的属性或者方法为类的私有属性或者私有方法。如果在外部直接访问私有属性或者方法是不可行的,这就起到了隐藏数据的作用,但是这种实现机制并不是很严格,机制是通过自动"变形"实现的,类中所有以双下划线开头的名称__name都会自动变为"_类名__name"的新名称。当命名一个类属性引起名称冲突时使用,可以参考https://www.cnblogs.com/linxiyue/p/7944871.html

torch.nn.init.kaiming_normal_(m.weight):,Hekaiming 用一个正态分布生成值,填充输入的张量或变量。结果张量中的值采样自均值为0,标准差为sqrt(2/((1 + a^2) * fan_in))的正态分布。

torch.nn.init.kaiming_normal_(tensor, a=0, mode='fan_in', nonlinearity='leaky_relu')

"""

根据He, K等人在“Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification”中

描述的方法,用一个正态分布生成值,填充输入的张量或变量。

结果张量中的值采样自均值为0,标准差为sqrt(2/((1 + a^2) * fan_in))的正态分布。

参数:

tensor – n维的torch.Tensor或 autograd.Variable

a -这层之后使用的rectifier的斜率系数(ReLU的默认值为0)

mode -可以为“fan_in”(默认)或“fan_out”。“fan_in”保留前向传播时权值方差的量级,“fan_out”保留反向传播时的量级。

nonlinearity -非线性函数(`nn.functional` name),建议使用relu或者leaky_relu。其他的还有linear,sigmoid,tanh

例子

>>> w = torch.empty(3, 5)

>>> nn.init.kaiming_normal_(w, mode='fan_out', nonlinearity='relu')

"""m.weight.data.fill_(1)应用了fill_(value)方法,将里面的数值1全部赋值给m.weight这个tensor。

m.bias.data.zero_()应用了zero_()方法,将m.bias这个tensor全部为0