The Binder Mechanism in Android

Reposted from: https://blog.csdn.net/litao55555/article/details/80597677

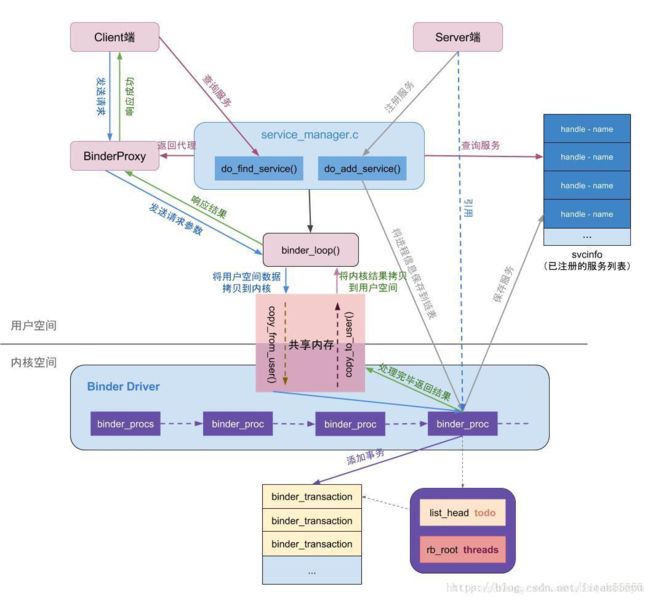

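Preface: I have read many Binder deep dives written by experts, but they tend to be dense and hard for newcomers to follow. To give beginners a rough picture of Binder, I spent about half a month preparing this post. The overall flow described here should be correct, and I hope newcomers get something out of it. The post covers three parts: the ServiceManager startup flow, how a service is registered with ServiceManager, and how a service is looked up through ServiceManager.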

- ServiceManager startup flow

system\core\rootdir\init.rc:

service servicemanager /system/bin/servicemanager //the executable for this service is /system/bin/servicemanager

class core

user system

group system

critical

onrestart restart healthd

onrestart restart zygote

onrestart restart media

onrestart restart surfaceflinger

onrestart restart drm

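Android parses init.rc during boot. From the entry above, the servicemanager service is started from the executable /system/bin/servicemanager, which is built from service_manager.c and binder.c under frameworks/native/cmds/servicemanager.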

frameworks\native\cmds\servicemanager\service_manager.c:

int main(int argc, char **argv)

{

struct binder_state *bs;

bs = binder_open(128*1024); //1. Open the Binder driver and set up a 128 KB (128*1024 bytes) memory mapping

if (binder_become_context_manager(bs)) { //2. Register this process (ServiceManager) as Binder's context manager

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

...

svcmgr_handle = BINDER_SERVICE_MANAGER;

binder_loop(bs, svcmgr_handler); //3. Enter the for loop, act as a server, and wait for client requests

return 0;

}

The binder_state structure:

struct binder_state

{

int fd; //file descriptor of the opened /dev/binder

void *mapped; //start address at which /dev/binder is mapped into the process address space

size_t mapsize; //size of the memory mapping

};

The BINDER_SERVICE_MANAGER macro:

#define BINDER_SERVICE_MANAGER 0U //the handle of Service Manager is 0

1.1 Analyzing binder_open(128*1024)

frameworks/native/cmds/servicemanager/binder.c:

struct binder_state *binder_open(size_t mapsize)

{

struct binder_state *bs;

struct binder_version vers;

bs = malloc(sizeof(*bs));

if (!bs) {

errno = ENOMEM;

return NULL;

}

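bs->fd = open("/dev/binder", O_RDWR); //enters the Binder driver through the open handler of its registered file_operations structure; the later ioctl and mmap calls are dispatched the same way

if (bs->fd < 0) { //a. on success, binder_state.fd holds the file descriptor of /dev/binder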

goto fail_open;

}

if ((ioctl(bs->fd, BINDER_VERSION, &vers) == -1) ||

(vers.protocol_version != BINDER_CURRENT_PROTOCOL_VERSION)) {

goto fail_open;

}

bs->mapsize = mapsize; //b. binder_state.mapsize holds the size of the memory mapping, 128 KB = 128*1024 bytes

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0); //c. binder_state.mapped holds the start address at which /dev/binder is mapped into the process address space

if (bs->mapped == MAP_FAILED) {

fprintf(stderr,"binder: cannot map device (%s)\n",

strerror(errno));

goto fail_map;

}

return bs;

fail_map:

close(bs->fd);

fail_open:

free(bs);

return NULL;

}
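The open, mmap, and goto-based cleanup pattern used by binder_open can be tried without a Binder device. The following is a minimal sketch, assuming an ordinary file instead of /dev/binder; map_state, map_open, and map_close are hypothetical names invented for this illustration and are not part of servicemanager.

```c
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical stand-in for binder_state: the same three fields. */
struct map_state {
    int fd;          /* descriptor of the opened file */
    void *mapped;    /* start address of the mapping */
    size_t mapsize;  /* size of the mapping in bytes */
};

/* Open `path` and map `mapsize` bytes read-only; returns NULL on any failure. */
struct map_state *map_open(const char *path, size_t mapsize)
{
    struct map_state *ms = malloc(sizeof(*ms));
    if (!ms)
        return NULL;

    ms->fd = open(path, O_RDONLY);
    if (ms->fd < 0)
        goto fail_open;

    ms->mapsize = mapsize;
    ms->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, ms->fd, 0);
    if (ms->mapped == MAP_FAILED)
        goto fail_map;

    return ms;

fail_map:
    close(ms->fd);   /* undo open() */
fail_open:
    free(ms);        /* undo malloc() */
    return NULL;
}

/* Release everything in reverse order of acquisition. */
void map_close(struct map_state *ms)
{
    munmap(ms->mapped, ms->mapsize);
    close(ms->fd);
    free(ms);
}
```

The two labels are ordered so that a failure at any step releases exactly what was acquired before it, which is the same reason binder_open places fail_map before fail_open.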

Executing open("/dev/binder", O_RDWR); switches from user mode to kernel mode, where the driver's open handler runs:

drivers/android/binder.c

static int binder_open(struct inode *nodp, struct file *filp)

{

struct binder_proc *proc;

proc = kzalloc(sizeof(*proc), GFP_KERNEL); //a. allocate the binder_proc that holds the information of the calling process (here, service_manager)

if (proc == NULL)

return -ENOMEM;

get_task_struct(current);

proc->tsk = current;

INIT_LIST_HEAD(&proc->todo);

init_waitqueue_head(&proc->wait);

proc->default_priority = task_nice(current);

binder_lock(__func__);

binder_stats_created(BINDER_STAT_PROC);

hlist_add_head(&proc->proc_node, &binder_procs); //binder_procs is a global list head; hlist_add_head inserts proc->proc_node (an hlist_node embedded in binder_proc) at the front of that list

proc->pid = current->group_leader->pid;

INIT_LIST_HEAD(&proc->delivered_death);

filp->private_data = proc; //store the binder_proc in the private_data member of the opened struct file

binder_unlock(__func__);

return 0;

}

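This function creates the binder_proc for the service_manager process, stores the process information in it, and saves the binder_proc in the private_data member of the opened file.

//The function below inserts proc->proc_node at the head of the binder_procs list. New nodes are always pushed at the front, so the binder_proc that was inserted first ends up at the tail of the list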

static inline void hlist_add_head(struct hlist_node *n, struct hlist_head *h)

{

struct hlist_node *first = h->first;

n->next = first;

if (first)

first->pprev = &n->next;

h->first = n;

n->pprev = &h->first;

}
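The head-insertion order is easy to check in user space. The sketch below copies the hlist_add_head body shown above and adds a hypothetical miniature of binder_proc (demo_proc, proc_of, and collect_pids are invented for this illustration) to list the pids in traversal order.

```c
#include <stddef.h>

struct hlist_node { struct hlist_node *next, **pprev; };
struct hlist_head { struct hlist_node *first; };

/* Same logic as the kernel helper quoted above. */
static inline void hlist_add_head(struct hlist_node *n, struct hlist_head *h)
{
    struct hlist_node *first = h->first;
    n->next = first;
    if (first)
        first->pprev = &n->next;
    h->first = n;
    n->pprev = &h->first;
}

/* Hypothetical miniature of binder_proc: only a pid and the list node. */
struct demo_proc {
    int pid;
    struct hlist_node proc_node;
};

/* Recover the enclosing demo_proc from its embedded node. */
struct demo_proc *proc_of(struct hlist_node *n)
{
    return (struct demo_proc *)((char *)n - offsetof(struct demo_proc, proc_node));
}

/* Copy the pids in list order into out[]; returns how many were written. */
int collect_pids(const struct hlist_head *h, int *out, int max)
{
    int n = 0;
    for (struct hlist_node *p = h->first; p && n < max; p = p->next)
        out[n++] = proc_of(p)->pid;
    return n;
}
```

Inserting nodes with pids 100, 200, and 300 in that order and then calling collect_pids yields 300, 200, 100: the most recently opened process sits at the head of the list.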

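What binder_open(128*1024) does:

a. Creates a binder_state structure that holds the /dev/binder file descriptor plus the start address and size of the memory mapping

b. In the driver, creates the binder_proc that holds the service_manager process information and adds its proc_node (an hlist_node) to the global binder_procs list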

1.2 Analyzing binder_become_context_manager(bs)

frameworks\native\cmds\servicemanager\binder.c:

int binder_become_context_manager(struct binder_state *bs)

{

return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0); //issue the BINDER_SET_CONTEXT_MGR command

}

drivers/android/binder.c

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg) //cmd = BINDER_SET_CONTEXT_MGR

{

int ret;

struct binder_proc *proc = filp->private_data; //fetch the binder_proc of the service_manager process from the private_data member of the opened file

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

binder_lock(__func__);

thread = binder_get_thread(proc); //get the binder_thread that describes the calling service_manager thread

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

...

case BINDER_SET_CONTEXT_MGR:

if (binder_context_mgr_node != NULL) { //binder_context_mgr_node is the binder_node of ServiceManager (assigned further down by binder_new_node(proc, NULL, NULL)); a non-NULL value means a context manager is already registered

...

}

ret = security_binder_set_context_mgr(proc->tsk);

if (ret < 0)

goto err;

if (binder_context_mgr_uid != -1) { //binder_context_mgr_uid is the uid of the ServiceManager process

if (binder_context_mgr_uid != current->cred->euid) {

...

}

} else {

binder_context_mgr_uid = current->cred->euid;

}

binder_context_mgr_node = binder_new_node(proc, NULL, NULL); //binder_context_mgr_node is the binder_node of ServiceManager, and binder_node.proc points to the service_manager process

binder_context_mgr_node->local_weak_refs++;

binder_context_mgr_node->local_strong_refs++;

binder_context_mgr_node->has_strong_ref = 1;

binder_context_mgr_node->has_weak_ref = 1;

break;

}

ret = 0;

...

return ret;

}

1.2.1 Analyzing thread = binder_get_thread(proc);

static struct binder_thread *binder_get_thread(struct binder_proc *proc) //proc belongs to the service_manager process

{

struct binder_thread *thread = NULL;

struct rb_node *parent = NULL;

struct rb_node **p = &proc->threads.rb_node;

/*look in the threads tree for the binder_thread node that matches the current thread*/

while (*p) {

parent = *p;

thread = rb_entry(parent, struct binder_thread, rb_node);

if (current->pid < thread->pid)

p = &(*p)->rb_left;

else if (current->pid > thread->pid)

p = &(*p)->rb_right;

else

break;

}

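/*if no match was found, create a binder_thread node*/

if (*p == NULL) { //on the first call the tree is empty, so *p is NULL; on later calls the while loop above finds the existing node

thread = kzalloc(sizeof(*thread), GFP_KERNEL); //b. allocate the binder_thread for the calling service_manager thread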

if (thread == NULL)

return NULL;

binder_stats_created(BINDER_STAT_THREAD);

thread->proc = proc; //binder_thread.proc points back to the binder_proc of the service_manager process

thread->pid = current->pid; //binder_thread.pid records the ID of the calling thread (for the main thread this equals the process PID)

init_waitqueue_head(&thread->wait);

INIT_LIST_HEAD(&thread->todo);

rb_link_node(&thread->rb_node, parent, p); //insert the binder_thread into the red-black tree proc->threads

rb_insert_color(&thread->rb_node, &proc->threads);

thread->looper |= BINDER_LOOPER_STATE_NEED_RETURN;

thread->return_error = BR_OK;

thread->return_error2 = BR_OK;

}

return thread;

}

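This function returns the binder_thread, among all threads of the process described by proc, whose pid equals that of the current thread, creating it if it does not exist yet.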
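The "find it, or create it at the spot where the search ended" idiom relies on walking a pointer to the link (struct rb_node **p) rather than a pointer to the node. A minimal user-space sketch of that idiom follows. It assumes a plain unbalanced binary search tree instead of the kernel red-black tree, and demo_thread, demo_get_thread, and DEMO_NEED_RETURN are hypothetical names with an arbitrary flag value.

```c
#include <stdlib.h>

#define DEMO_NEED_RETURN 0x20  /* stand-in for BINDER_LOOPER_STATE_NEED_RETURN */

/* Hypothetical miniature of binder_thread, keyed by pid. */
struct demo_thread {
    int pid;
    int looper;
    struct demo_thread *left, *right;
};

/* Return the node for `pid`, creating and linking it if it is missing. */
struct demo_thread *demo_get_thread(struct demo_thread **root, int pid)
{
    struct demo_thread **p = root;   /* points at the link we may fill in */

    while (*p) {
        if (pid < (*p)->pid)
            p = &(*p)->left;
        else if (pid > (*p)->pid)
            p = &(*p)->right;
        else
            return *p;               /* already registered */
    }

    /* Not found: *p is the empty link where the new node belongs. */
    struct demo_thread *t = calloc(1, sizeof(*t));
    if (!t)
        return NULL;
    t->pid = pid;
    t->looper |= DEMO_NEED_RETURN;
    *p = t;
    return t;
}
```

Calling demo_get_thread twice with the same pid returns the same pointer, which mirrors why every binder_ioctl call from one thread sees the same binder_thread.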

1.2.2 Analyzing binder_context_mgr_node = binder_new_node(proc, NULL, NULL)

static struct binder_node *binder_new_node(struct binder_proc *proc,

void __user *ptr,

void __user *cookie)

{

struct rb_node **p = &proc->nodes.rb_node;

struct rb_node *parent = NULL;

struct binder_node *node;

while (*p) {

parent = *p;

node = rb_entry(parent, struct binder_node, rb_node);

if (ptr < node->ptr)

p = &(*p)->rb_left;

else if (ptr > node->ptr)

p = &(*p)->rb_right;

else

return NULL;

}

node = kzalloc(sizeof(*node), GFP_KERNEL); //c. allocate the binder_node for the service_manager process

if (node == NULL)

return NULL;

binder_stats_created(BINDER_STAT_NODE);

rb_link_node(&node->rb_node, parent, p); //insert the binder_node into the red-black tree proc->nodes

rb_insert_color(&node->rb_node, &proc->nodes);

node->debug_id = ++binder_last_id;

node->proc = proc; //binder_node.proc points to the owning binder_proc

node->ptr = ptr;

node->cookie = cookie;

node->work.type = BINDER_WORK_NODE;

INIT_LIST_HEAD(&node->work.entry);

INIT_LIST_HEAD(&node->async_todo);

return node;

}

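What binder_become_context_manager(bs) does:

a. Creates the binder_thread for the ServiceManager thread; binder_thread.proc holds the binder_proc of the ServiceManager process and binder_thread.pid holds the ID of the current thread

b. Creates the binder_node of the ServiceManager process; binder_node.proc holds the binder_proc, and the node is stored in the global variable binder_context_mgr_node

c. Reads the binder_proc of the ServiceManager process back from filp->private_data, the private data of the opened file, which binder_open filled in earlier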

1.3 Analyzing binder_loop(bs, svcmgr_handler)

frameworks\native\cmds\servicemanager\binder.c:

void binder_loop(struct binder_state *bs, binder_handler func) //enter the for loop, act as a server, and wait for client requests

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER; //first command word: tell the driver that this thread is entering its loop

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

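bwr.read_buffer = (uintptr_t) readbuf; //read_buffer points at readbuf, whose first word still holds BC_ENTER_LOOPER until the driver overwrites it

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr); //bs->fd is the /dev/binder file descriptor, so this calls the Binder driver's ioctl handler with cmd = BINDER_WRITE_READ, bwr.read_buffer pointing at readbuf, and bwr.write_buffer = 0 (write_size = 0)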

...

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

}

}

1.3.1 Analyzing binder_write(bs, readbuf, sizeof(uint32_t));

int binder_write(struct binder_state *bs, void *data, size_t len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) data; //write_buffer points at data, which holds the single command BC_ENTER_LOOPER

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

return res;

}

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg) //cmd = BINDER_WRITE_READ, bwr.read_size = 0, bwr.write_size = len

{

int ret;

struct binder_proc *proc = filp->private_data; //fetch the binder_proc of the service_manager process from the private_data member of the opened file

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

binder_lock(__func__);

thread = binder_get_thread(proc); //get the binder_thread of the calling thread from the threads of the service_manager process

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

...

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) { //copy the user-supplied argument into the local binder_write_read variable bwr; here bwr.write_buffer points at BC_ENTER_LOOPER and bwr.read_buffer = 0

ret = -EFAULT;

goto err;

}

if (bwr.write_size > 0) { //bwr.write_size = len, so this branch is taken

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed); //bwr.write_buffer points at BC_ENTER_LOOPER, bwr.write_consumed = 0

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) { //bwr.read_size = 0, so the read phase is skipped

...

}

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) { //copy bwr back to user space

ret = -EFAULT;

goto err;

}

break;

}

ret = 0;

...

return ret;

}

int binder_thread_write(struct binder_proc *proc, struct binder_thread *thread, //takes the binder_proc, the binder_thread, and the write half of binder_write_read

void __user *buffer, int size, signed long *consumed) //buffer = bwr.write_buffer, which points at BC_ENTER_LOOPER

{

uint32_t cmd;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

if (get_user(cmd, (uint32_t __user *)ptr)) //cmd = BC_ENTER_LOOPER

return -EFAULT;

ptr += sizeof(uint32_t);

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

binder_stats.bc[_IOC_NR(cmd)]++;

proc->stats.bc[_IOC_NR(cmd)]++;

thread->stats.bc[_IOC_NR(cmd)]++;

}

switch (cmd) { //cmd = BC_ENTER_LOOPER

case BC_ENTER_LOOPER:

...

thread->looper |= BINDER_LOOPER_STATE_ENTERED; //set the BINDER_LOOPER_STATE_ENTERED flag in binder_thread.looper to record that the current ServiceManager thread has entered its loop

break;

}

*consumed = ptr - buffer;

}

return 0;

}
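The write path is a small command interpreter: it reads 32-bit command words from the buffer one at a time, acts on each, and reports how many bytes it consumed. The following user-space sketch mimics that loop under stated assumptions: the DEMO_ constants are made-up values (the real BC_ commands are ioctl-encoded), memcpy stands in for get_user, and demo_thread_write is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command and flag values, for illustration only. */
enum { DEMO_BC_ENTER_LOOPER = 1, DEMO_BC_EXIT_LOOPER = 2 };
enum { DEMO_LOOPER_ENTERED = 0x02, DEMO_LOOPER_EXITED = 0x04 };

/*
 * Consume 32-bit commands from buffer[*consumed .. size).
 * Returns 0 on success, -1 on an unknown command; *consumed is advanced
 * only past commands that were fully handled.
 */
int demo_thread_write(int *looper, const void *buffer, size_t size, size_t *consumed)
{
    const unsigned char *base = buffer;
    const unsigned char *ptr = base + *consumed;
    const unsigned char *end = base + size;

    while ((size_t)(end - ptr) >= sizeof(uint32_t)) {
        uint32_t cmd;
        memcpy(&cmd, ptr, sizeof(cmd));   /* stand-in for get_user() */
        ptr += sizeof(cmd);

        switch (cmd) {
        case DEMO_BC_ENTER_LOOPER:
            *looper |= DEMO_LOOPER_ENTERED;   /* set a flag, keep the others */
            break;
        case DEMO_BC_EXIT_LOOPER:
            *looper |= DEMO_LOOPER_EXITED;
            break;
        default:
            return -1;
        }
        *consumed = (size_t)(ptr - base);
    }
    return 0;
}
```

Because the flag is OR-ed in, a looper value that already carries another bit (such as the NEED_RETURN flag set by binder_get_thread) keeps that bit after the command is handled.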

1.3.2 Analyzing res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg) //cmd = BINDER_WRITE_READ

{

int ret;

struct binder_proc *proc = filp->private_data; //fetch the binder_proc of the service_manager process from the private_data member of the opened file

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

binder_lock(__func__);

thread = binder_get_thread(proc); //get the binder_thread of the calling thread from the threads of the service_manager process

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

...

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) { //copy the user-supplied argument into the local binder_write_read variable bwr; here bwr.read_buffer points at readbuf and bwr.write_buffer = 0

ret = -EFAULT;

goto err;

}

if (bwr.write_size > 0) { //binder_loop set bwr.write_size = 0, so the write phase is skipped

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) { //binder_loop set bwr.read_size = sizeof(readbuf), so this branch is taken

/*read and handle the work items queued on the todo lists; on return the first word of bwr.read_buffer is BR_NOOP*/

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK); //proc and thread are the process and the thread that issued this ioctl

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

break;

}

ret = 0;

...

return ret;

}
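Both ioctl calls use the same BINDER_WRITE_READ command; which phase actually runs depends only on the two size fields. The sketch below isolates that decision. It is a hypothetical model (demo_bwr and demo_write_read are invented names), not driver code.

```c
#include <stddef.h>

/* Hypothetical miniature of struct binder_write_read: sizes only. */
struct demo_bwr {
    size_t write_size;
    size_t read_size;
};

enum { DEMO_RAN_WRITE = 1, DEMO_RAN_READ = 2 };

/* Return a bitmask of the phases a BINDER_WRITE_READ-style call would run. */
int demo_write_read(const struct demo_bwr *bwr)
{
    int ran = 0;

    if (bwr->write_size > 0)
        ran |= DEMO_RAN_WRITE;   /* binder_thread_write would run here */
    if (bwr->read_size > 0)
        ran |= DEMO_RAN_READ;    /* binder_thread_read would run here */
    return ran;
}
```

With the values set by binder_write (write_size = 4, read_size = 0) only the write phase runs, and with the values set inside the binder_loop for loop (write_size = 0, read_size = 128) only the read phase runs.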

1.3.2.1 Analyzing binder_thread_write

int binder_thread_write(struct binder_proc *proc, struct binder_thread *thread,

void __user *buffer, int size, signed long *consumed)

{

...

return 0;

}

1.3.2.2 Analyzing binder_thread_read

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

void __user *buffer, int size, //buffer = bwr.read_buffer (points at readbuf), *consumed = 0

signed long *consumed, int non_block)

{

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr)) //write BR_NOOP into the user buffer at ptr = buffer + *consumed = bwr.read_buffer + bwr.read_consumed = bwr.read_buffer, so the first word of the read buffer becomes BR_NOOP

return -EFAULT;

ptr += sizeof(uint32_t);

}

...

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

if (!list_empty(&thread->todo))

w = list_first_entry(&thread->todo, struct binder_work, entry); //take the first pending work item from the thread->todo queue

else if (!list_empty(&proc->todo) && wait_for_proc_work)

w = list_first_entry(&proc->todo, struct binder_work, entry);

else {

if (ptr - buffer == 4 && !(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN)) /* no data added */

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) { //handle the work item according to its type

...

}

if (t->buffer->target_node) {

struct binder_node *target_node = t->buffer->target_node;

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

t->saved_priority = task_nice(current);

if (t->priority < target_node->min_priority &&

!(t->flags & TF_ONE_WAY))

binder_set_nice(t->priority);

else if (!(t->flags & TF_ONE_WAY) ||

t->saved_priority > target_node->min_priority)

binder_set_nice(target_node->min_priority);

cmd = BR_TRANSACTION; //cmd = BR_TRANSACTION

} else {

...

}

...

if (put_user(cmd, (uint32_t __user *)ptr)) //write cmd = BR_TRANSACTION into the user buffer at ptr, right after BR_NOOP; tr is copied after it, so when binder_thread_read returns the read buffer holds BR_NOOP, BR_TRANSACTION, tr

return -EFAULT;

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

}

return 0;

}
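After binder_thread_read returns, the user buffer is a packed sequence: a BR_NOOP word, then a command word followed by its payload. The sketch below builds and then walks such a buffer in user space. It is an illustration under stated assumptions: the DEMO_ values are made up, demo_tr is a two-field stand-in for binder_transaction_data, and demo_fill_read and demo_parse are hypothetical helpers rather than the real binder_thread_read and binder_parse.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { DEMO_BR_NOOP = 0, DEMO_BR_TRANSACTION = 1 };  /* hypothetical values */

/* Hypothetical miniature of binder_transaction_data. */
struct demo_tr { uint32_t code; uint32_t data; };

/* Fill buf as the read path does: NOOP, then command + payload.
 * Returns the number of bytes written, or 0 if buf is too small. */
size_t demo_fill_read(void *buf, size_t size, const struct demo_tr *tr)
{
    unsigned char *ptr = buf;
    uint32_t cmd = DEMO_BR_NOOP;

    if (size < 2 * sizeof(cmd) + sizeof(*tr))
        return 0;
    memcpy(ptr, &cmd, sizeof(cmd));
    ptr += sizeof(cmd);
    cmd = DEMO_BR_TRANSACTION;
    memcpy(ptr, &cmd, sizeof(cmd));
    ptr += sizeof(cmd);
    memcpy(ptr, tr, sizeof(*tr));
    ptr += sizeof(*tr);
    return (size_t)(ptr - (unsigned char *)buf);
}

/* Walk the buffer; returns the number of transactions seen (the last one
 * is stored in *out), or -1 if the buffer is malformed. */
int demo_parse(const void *buf, size_t len, struct demo_tr *out)
{
    const unsigned char *ptr = buf;
    const unsigned char *end = ptr + len;
    int seen = 0;

    while ((size_t)(end - ptr) >= sizeof(uint32_t)) {
        uint32_t cmd;
        memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);
        if (cmd == DEMO_BR_NOOP)
            continue;                      /* nothing to do for NOOP */
        if (cmd != DEMO_BR_TRANSACTION || (size_t)(end - ptr) < sizeof(*out))
            return -1;
        memcpy(out, ptr, sizeof(*out));    /* payload follows the command */
        ptr += sizeof(*out);
        seen++;
    }
    return seen;
}
```

The leading NOOP word explains the ptr - buffer == 4 test in the driver code above: a buffer that contains only those four bytes carries no real work yet.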

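What binder_loop(bs, svcmgr_handler) does:

a. Before entering the loop, calls binder_write to send BC_ENTER_LOOPER, which the driver handles in binder_thread_write

b. Inside the for (;;) loop, each ioctl runs binder_thread_read, which takes pending work items from the binder_thread->todo queue (or from proc->todo) and handles them; afterwards the read buffer holds BR_NOOP followed by BR_TRANSACTION and its data, which binder_parse then processes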

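Summary:

ServiceManager starts up in three steps

a. Open the Binder driver and set up a 128 KB (128*1024 bytes) memory mapping

b. Register itself (ServiceManager) as Binder's context manager

c. Enter the for loop, act as a server, and wait for client requests

In the Binder driver, three structures are created for ServiceManager: binder_proc, binder_thread, and binder_node

binder_node.proc holds the binder_proc; data for inter-process communication is delivered to the todo list of the binder_proc

A process described by a binder_proc contains many threads, each with its own binder_thread, and each binder_thread is used to handle a client's cross-process request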