VR Series, Oculus Audio SDK Documentation: 1. Introduction to Virtual Reality Audio (Part 2): Localization and the Human Auditory System

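This article explains how humans localize sound sources in three-dimensional space, in terms of both direction and distance.

Direction covers lateral localization and front/back/elevation localization. Lateral localization relies on the delay between a sound's arrival at the two ears or on the difference in level between them. Front/back and elevation localization is considerably harder and relies on spectral modifications caused by the head and body. Together these effects form a direction-selective filter, which can be encoded as a head-related transfer function (HRTF), the basis of 3D sound spatialization. Head motion further assists localization and greatly reduces front/back ambiguity.

Distance is judged by comparing loudness against a familiar reference to estimate how much a sound has weakened from its source, by the initial time delay before the first reflection, by the ratio of direct to reverberant sound, by the motion parallax of moving sources, and by high-frequency attenuation, a relatively unreliable cue because it depends on atmospheric conditions.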

Consider the following: human beings have only two ears, yet they can locate sound sources in three dimensions. That shouldn't be possible. If you were given a stereo recording and asked whether the sound came from above or below the microphones, you would have no way to tell. If you can't do it from a recording, how can you do it in reality?

Humans rely on psychoacoustics and inference to localize sounds in three dimensions, attending to factors such as timing, phase, level, and spectral modifications.

This section summarizes how humans localize sound. Later, we will apply that knowledge to the spatialization problem and learn how developers can take a monophonic sound and transform its signal so that it sounds as if it comes from a specific point in space.

1. Directional Localization

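In this section, we look at the cues humans use to determine the direction of a sound source. The two key components of localization are direction and distance.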

Lateral

Laterally localizing a sound is, as one would expect, the simplest type of localization. When a sound is closer to the left, the left ear hears it before the right ear does, and it sounds louder in the left ear. Generally speaking, the closer the signals at the two ears are to parity, the more centered the sound.

There are, however, some interesting details. First, we may primarily localize a sound based on the delay between its arrival at the two ears, the interaural time difference (ITD), or based on the difference in its level at the two ears, the interaural level difference (ILD). Which technique we rely on depends heavily on the frequency content of the signal.
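To give the ITD a concrete scale, here is a minimal sketch using Woodworth's rigid-sphere approximation, ITD ≈ (r/c)(θ + sin θ). This model is not part of the text above; the head radius of 8.75 cm, the speed of sound of 343 m/s, and the sign convention (positive means the right ear hears first) are assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s, assumed air at about 20 degrees C
HEAD_RADIUS = 0.0875     # m, an assumed "average" head radius

def woodworth_itd(azimuth_deg: float, radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Interaural time difference in seconds for a distant source.

    Woodworth's rigid-sphere approximation: ITD = (r / c) * (theta + sin(theta)).
    Valid for |azimuth| <= 90 degrees (0 = straight ahead, positive = to the right).
    Positive results mean the right ear hears the sound first.
    """
    if abs(azimuth_deg) > 90:
        raise ValueError("this simple form only covers the frontal half-plane")
    theta = math.radians(azimuth_deg)
    return (radius / c) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:3d} deg -> ITD = {woodworth_itd(az) * 1e6:6.1f} us")
```

For a source at 90 degrees the model gives roughly 0.66 ms, its maximum; a source straight ahead gives zero.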

Sounds below a certain frequency (anywhere from 500 to 800 Hz, depending on the source) are difficult to distinguish based on level differences. However, sounds in this frequency range have half wavelengths greater than the dimensions of a typical human head, so we can rely on timing (or phase) information between the ears without ambiguity.

At the other extreme, sounds with frequencies above approximately 1500 Hz have half wavelengths smaller than the typical head, so phase information is no longer reliable for localizing them. At these frequencies, we rely on level differences caused by head shadowing: the attenuation that results when our head obstructs the far ear (see figure below).

We also key on the difference in the signal's onset time. When a sound starts, which ear hears it first is a big part of determining its location. However, this only helps us localize short sounds with transients, not continuous sounds.
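The onset cue can be illustrated with a minimal sketch that finds the first sample in each ear's signal whose magnitude crosses a threshold and converts the index difference to seconds. The threshold detector, the 0.1 threshold, and the function names are hypothetical choices for illustration; a continuous tone gives no usable onset after it starts, which matches the limitation described above.

```python
def onset_index(samples, threshold):
    """Index of the first sample whose magnitude reaches the threshold, or None."""
    for i, x in enumerate(samples):
        if abs(x) >= threshold:
            return i
    return None

def onset_time_difference(left, right, sample_rate, threshold=0.1):
    """Right-channel onset time minus left-channel onset time, in seconds.

    A positive result means the left ear heard the transient first.
    Returns None if either channel never crosses the threshold.
    """
    i_left = onset_index(left, threshold)
    i_right = onset_index(right, threshold)
    if i_left is None or i_right is None:
        return None
    return (i_right - i_left) / sample_rate

# A click that reaches the left ear 20 samples (about 0.42 ms at 48 kHz) before the right ear.
fs = 48_000
click = [0.0] * 5 + [1.0, 0.6, 0.3] + [0.0] * 50
left = click
right = [0.0] * 20 + click[:-20]
print(onset_time_difference(left, right, fs))
```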

There is a transitional zone between roughly 800 Hz and 1500 Hz in which both level differences and time differences are used for localization.
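The frequency boundaries above can be checked with the relation λ/2 = c / (2f). The sketch below compares the half wavelength with an assumed ear-to-ear distance of 17.5 cm and labels which lateral cue dominates; the head width, the 343 m/s speed of sound, and the hard 800 Hz / 1500 Hz cutoffs are simplifying assumptions, since the text describes these boundaries as approximate.

```python
SPEED_OF_SOUND = 343.0   # m/s, assumed room-temperature air
HEAD_WIDTH = 0.175       # m, an assumed typical ear-to-ear distance

def half_wavelength(freq_hz: float, c: float = SPEED_OF_SOUND) -> float:
    """Half of the acoustic wavelength, lambda / 2 = c / (2 f), in metres."""
    return c / (2.0 * freq_hz)

def dominant_lateral_cue(freq_hz: float) -> str:
    """Rough cue regime using the approximate boundaries quoted in the text."""
    if freq_hz < 800:
        return "ITD (timing/phase)"
    if freq_hz <= 1500:
        return "ITD and ILD (transition)"
    return "ILD (head shadowing)"

for f in (500, 800, 1500, 4000):
    hw = half_wavelength(f)
    relation = "larger" if hw > HEAD_WIDTH else "smaller"
    print(f"{f:5d} Hz: lambda/2 = {hw:.3f} m ({relation} than the head), cue: {dominant_lateral_cue(f)}")
```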

Front/Back/Elevation

Front versus back localization is significantly more difficult than lateral localization. We cannot rely on time or level differences, since the interaural time and level differences may both be zero for a sound directly in front of or behind the listener.

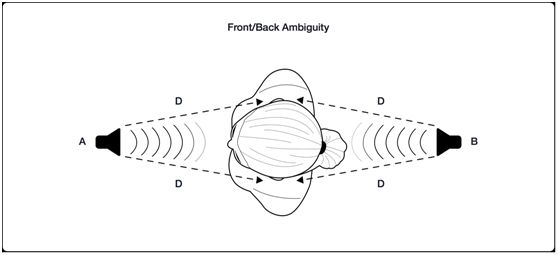

In the figure above, sounds at locations A and B are indistinguishable from each other because they are the same distance from both ears, giving identical level and time differences.
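The ambiguity also holds for sources that are off-center. A source and its mirror image across the line through both ears are the same distance from each ear, so they produce the same arrival-time difference and, by symmetry, the same level difference. The sketch below models the ears as two points and ignores the head itself; the coordinates, ear spacing, and `path_itd` name are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed
EAR_OFFSET = 0.0875     # m; ears modelled as points at x = -r and x = +r (an assumption)

def path_itd(source_xy, ear_offset=EAR_OFFSET, c=SPEED_OF_SOUND):
    """Left-minus-right path delay in seconds for point ears, ignoring the head.

    Coordinates: listener at the origin, +x to the right, +y straight ahead.
    Positive values mean the sound reaches the right ear first.
    """
    sx, sy = source_xy
    d_left = math.hypot(sx + ear_offset, sy)
    d_right = math.hypot(sx - ear_offset, sy)
    return (d_left - d_right) / c

# A: 2 m ahead and slightly to the right; B: its mirror image behind the listener.
a = (0.5, 2.0)
b = (0.5, -2.0)
print(path_itd(a), path_itd(b))  # the two values are identical
```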

Humans rely on spectral modifications of sounds caused by the head and body to resolve this ambiguity. These spectral modifications are filters and reflections of sound caused by the shape and size of the head, neck, shoulders, torso, and especially the outer ears (or pinnae). Because sounds originating from different directions interact with the geometry of our bodies differently, our brains use spectral modification to infer the direction of origin. For example, sounds approaching from the front produce resonances created by the interior of our pinnae, while sounds from the back are shadowed by our pinnae. Similarly, sounds from above may reflect off our shoulders, while sounds from below are shadowed by our torso and shoulders.

All of these reflections and shadowing effects combine to create a direction-selective filter.

Head-Related Transfer Functions (HRTFs)

A direction-selective filter can be encoded as a head-related transfer function (HRTF). The HRTF is the cornerstone of most modern 3D sound spatialization techniques. How we measure and create an HRTF is described in more detail elsewhere in this document.
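An HRTF is commonly applied in the time domain by convolving the mono signal with the corresponding pair of head-related impulse responses (HRIRs), one per ear; convolving with an HRIR is equivalent to multiplying by the HRTF in the frequency domain. The sketch below shows only that filtering step. The two short impulse responses are hypothetical toy values, not measured HRTF data, and `render_binaural` is an illustrative name, not an Oculus Audio SDK function.

```python
def convolve(signal, kernel):
    """Direct-form linear convolution; output length is len(signal) + len(kernel) - 1."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(kernel):
            out[i + j] += x * h
    return out

def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono signal with a left/right head-related impulse response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Toy HRIRs (hypothetical, NOT measured data): the right ear receives the sound
# 3 samples later and attenuated, mimicking a source on the listener's left.
hrir_left = [1.0, 0.3]
hrir_right = [0.0, 0.0, 0.0, 0.5, 0.15]
left, right = render_binaural([1.0, 0.0, 0.0, 0.0], hrir_left, hrir_right)
print(left)
print(right)
```

Direct convolution keeps the example readable; real-time spatializers typically use FFT-based (partitioned) convolution for longer HRIRs.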

Head Motion

HRTFs by themselves may not be enough to localize a sound precisely, so we often rely on head motion to assist with localization. Simply turning our heads changes difficult front/back ambiguity problems into lateral localization problems that we are better equipped to solve.

In the following figure, sounds at A and B are indistinguishable from each other based on level or time differences, since those differences are identical. By turning her head slightly, the listener alters the time and level differences between the ears, helping to disambiguate the location of the sound. D1 is closer than D2, which is a cue that the sound is to the left of (and thus behind) the listener.
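The geometry of this cue can be sketched by rotating the two ear positions and recomputing the path difference. In this model, after a small turn to the left, a source straight ahead reaches the right ear first, while a source straight behind reaches the left ear first, so the sign of the new time difference resolves front from back. Point ears, a 2 m source distance, and the yaw convention are assumptions of this sketch, not values from the text.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed
EAR_OFFSET = 0.0875     # m, assumed half of the ear-to-ear distance

def itd_after_turn(source_xy, yaw_deg, ear_offset=EAR_OFFSET, c=SPEED_OF_SOUND):
    """Left-minus-right path delay in seconds after turning the head by yaw_deg.

    World frame: listener at the origin, +x right, +y the original facing direction.
    Positive yaw turns the head to the left (counter-clockwise seen from above).
    Positive results mean the right ear hears the sound first.
    """
    phi = math.radians(yaw_deg)
    right_ear = (ear_offset * math.cos(phi), ear_offset * math.sin(phi))
    left_ear = (-right_ear[0], -right_ear[1])
    d_left = math.dist(source_xy, left_ear)
    d_right = math.dist(source_xy, right_ear)
    return (d_left - d_right) / c

front, back = (0.0, 2.0), (0.0, -2.0)
for yaw in (0, 10):
    print(yaw, itd_after_turn(front, yaw), itd_after_turn(back, yaw))
```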

2. Distance Localization

ILD, ITD, and HRTFs help us determine the direction of a sound source, but they give relatively sparse cues for determining its distance. To determine distance, we use a combination of factors, including initial time delay, the ratio of direct sound to reverberant sound, and motion parallax.

Loudness

Loudness is the most obvious distance cue, but it can be misleading. If we lack a frame of reference, we can't judge how much the sound has diminished in volume from its source, and thus cannot estimate its distance. Fortunately, we are familiar with many of the sound sources we encounter daily, such as musical instruments, human voices, animals, and vehicles, so we can predict these distances reasonably well.
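Why a frame of reference matters can be seen from the free-field inverse-square law, under which a point source's level drops by 20·log10(d / d_ref) dB, about 6 dB per doubling of distance. Inverting that relation yields a distance only when the level at a reference distance is already known. The inverse-square model and the 60 dB SPL at 1 m reference voice are assumptions of this sketch, not figures from the text.

```python
import math

def level_at_distance(ref_level_db, ref_distance_m, distance_m):
    """Free-field point-source level: drops 20*log10(d / d_ref) dB (about 6 dB per doubling)."""
    return ref_level_db - 20.0 * math.log10(distance_m / ref_distance_m)

def distance_from_level(ref_level_db, ref_distance_m, heard_level_db):
    """Invert the inverse-square law; this needs the known reference level."""
    return ref_distance_m * 10.0 ** ((ref_level_db - heard_level_db) / 20.0)

# Hypothetical reference: a voice assumed to measure 60 dB SPL at 1 m.
print(level_at_distance(60.0, 1.0, 2.0))     # about 54 dB
print(distance_from_level(60.0, 1.0, 48.0))  # about 4 m
```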

For synthetic or unfamiliar sound sources, we have no such frame of reference, and we must rely on other cues or on relative volume changes to predict whether a sound is approaching or receding.

Initial Time Delay

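Initial time delay is the interval between the direct sound and its first reflection; the longer this gap, the closer we assume we are to the sound source. Anechoic (echoless) or open environments such as deserts may not generate appreciable reflections, which makes estimating distances more difficult.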
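The initial time delay (the gap between the direct sound and its first reflection) shrinks as a source moves away, because the reflected path becomes only slightly longer than the direct one. The sketch below uses a single floor reflection and the image-source construction; the geometry (source and listener both 1.7 m above a reflecting floor) and the 343 m/s speed of sound are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def initial_time_delay(distance_m, height_m, c=SPEED_OF_SOUND):
    """Gap in seconds between the direct sound and a single floor reflection.

    Source and listener are both height_m above a reflecting floor and distance_m apart.
    By the image-source construction the reflected path is the hypotenuse to the
    mirrored source: sqrt(distance^2 + (2 * height)^2).
    """
    direct = distance_m
    reflected = math.hypot(distance_m, 2.0 * height_m)
    return (reflected - direct) / c

for d in (1.0, 5.0, 20.0):
    print(f"{d:5.1f} m -> {initial_time_delay(d, 1.7) * 1000:.2f} ms")
```

With these assumptions the gap falls from about 7.4 ms at 1 m to under 1 ms at 20 m.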

Ratio of Direct Sound to Reverberation

In a reverberant environment there is a long, diffuse sound tail consisting of all the late echoes interacting with each other, bouncing off surfaces, and slowly fading away. The more we hear of the direct sound in comparison to the late reverberation, the closer we assume the source is.

This property has been used by audio engineers for decades to move a musical instrument or vocalist "to the front" or "to the back" of a song by adjusting the "wet/dry mix" of an artificial reverb.
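A minimal sketch of how distance could drive a wet/dry mix follows. It assumes a simple diffuse-field room model (direct energy falls as 1/r² while reverberant energy is roughly uniform, so the direct-to-reverberant ratio is 0 dB at the room's critical distance) and an equal-power mapping from that ratio to dry and wet gains. The 2 m critical distance, the mapping, and all function names are hypothetical. Because the equal-power mapping keeps total energy fixed, a separate loudness attenuation would still be applied for distance.

```python
import math

def direct_to_reverb_db(distance_m, critical_distance_m):
    """Direct-to-reverberant ratio (dB) in a simple diffuse-field room model.

    Direct energy falls as 1/r^2 while reverberant energy is treated as uniform,
    so the ratio is 0 dB at the critical distance and drops 6 dB per doubling.
    """
    return 20.0 * math.log10(critical_distance_m / distance_m)

def wet_dry_gains(distance_m, critical_distance_m):
    """Hypothetical mapping from distance to (dry, wet) amplitude gains, dry^2 + wet^2 = 1."""
    energy_ratio = 10.0 ** (direct_to_reverb_db(distance_m, critical_distance_m) / 10.0)
    dry = math.sqrt(energy_ratio / (1.0 + energy_ratio))
    wet = math.sqrt(1.0 / (1.0 + energy_ratio))
    return dry, wet

def mix(dry_signal, wet_signal, dry_gain, wet_gain):
    """Sample-by-sample wet/dry mix of a dry signal and its reverberated copy."""
    return [dry_gain * d + wet_gain * w for d, w in zip(dry_signal, wet_signal)]

for r in (0.5, 2.0, 8.0):
    dry, wet = wet_dry_gains(r, critical_distance_m=2.0)
    print(f"{r:4.1f} m: DRR {direct_to_reverb_db(r, 2.0):+6.1f} dB, dry {dry:.2f}, wet {wet:.2f}")
```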

Motion Parallax

Motion parallax (the apparent movement of a sound source through space) indicates distance, since nearby sounds typically exhibit a greater degree of parallax than far-away sounds. For example, a nearby insect can traverse from the left to the right side of your head very quickly, but a distant airplane may take many seconds to do the same. As a consequence, if a sound source travels quickly relative to a stationary perspective, we tend to perceive it as nearby.
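The insect-versus-airplane example can be quantified with simple geometry. A source moving in a straight line at speed v and passing the listener at closest distance d has an angular speed of v/d at closest approach, and it needs 2·d·tan(α)/v seconds to sweep from -α to +α. The distances and speeds below (an insect 0.5 m away at 1 m/s, an airplane 2 km away at 100 m/s) are hypothetical values chosen for illustration.

```python
import math

def sweep_time(perpendicular_distance_m, speed_m_s, half_angle_deg=45.0):
    """Seconds for a source on a straight path to sweep from -half_angle to +half_angle.

    At bearing theta the along-track position is d * tan(theta), so the sweep
    covers 2 * d * tan(half_angle) metres.
    """
    span = 2.0 * perpendicular_distance_m * math.tan(math.radians(half_angle_deg))
    return span / speed_m_s

def peak_angular_speed(perpendicular_distance_m, speed_m_s):
    """Angular speed (rad/s) at closest approach: v / d."""
    return speed_m_s / perpendicular_distance_m

# Hypothetical numbers: an insect 0.5 m away at 1 m/s vs. an airplane 2 km away at 100 m/s.
print(sweep_time(0.5, 1.0), sweep_time(2000.0, 100.0))
print(peak_angular_speed(0.5, 1.0), peak_angular_speed(2000.0, 100.0))
```

With these numbers the insect sweeps the 90-degree arc in about 1 s and the airplane in about 40 s, even though the airplane moves 100 times faster.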

High Frequency Attenuation

High frequencies attenuate faster than low frequencies, so over long distances we can infer something about distance from how attenuated those high frequencies are. This cue is often overstated in the literature, because sounds must travel hundreds or thousands of feet before high frequencies (i.e., those well above 10 kHz) are noticeably attenuated. The effect also depends on atmospheric conditions such as temperature and humidity.
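The scale of this effect can be estimated with the pure-tone atmospheric absorption formula from ISO 9613-1, which models classical absorption plus oxygen and nitrogen relaxation as functions of frequency, temperature, humidity, and pressure. This standard is not cited in the text; the sketch below is an illustrative transcription of its formula, and the default conditions (20 °C, 50 % relative humidity, sea-level pressure) are assumptions.

```python
import math

def air_absorption_db_per_m(freq_hz, temp_c=20.0, rel_humidity_pct=50.0, pressure_kpa=101.325):
    """Pure-tone atmospheric absorption coefficient in dB/m (ISO 9613-1 formula)."""
    p_ref = 101.325    # kPa, reference atmospheric pressure
    t_ref = 293.15     # K, reference temperature (20 degrees C)
    t_triple = 273.16  # K, triple-point isotherm temperature
    T = temp_c + 273.15
    p_rel = pressure_kpa / p_ref

    # Molar concentration of water vapour (%) derived from relative humidity.
    c_sat = -6.8346 * (t_triple / T) ** 1.261 + 4.6151
    h = rel_humidity_pct * (10.0 ** c_sat) / p_rel

    # Relaxation frequencies of oxygen and nitrogen (Hz).
    fr_o = p_rel * (24.0 + 4.04e4 * h * (0.02 + h) / (0.391 + h))
    fr_n = p_rel * (T / t_ref) ** -0.5 * (
        9.0 + 280.0 * h * math.exp(-4.170 * ((T / t_ref) ** (-1.0 / 3.0) - 1.0)))

    f2 = freq_hz ** 2
    classical = 1.84e-11 / p_rel * (T / t_ref) ** 0.5
    oxygen = 0.01275 * math.exp(-2239.1 / T) / (fr_o + f2 / fr_o)
    nitrogen = 0.1068 * math.exp(-3352.0 / T) / (fr_n + f2 / fr_n)
    return 8.686 * f2 * (classical + (T / t_ref) ** -2.5 * (oxygen + nitrogen))

for f in (1_000, 4_000, 10_000):
    a = air_absorption_db_per_m(f)
    print(f"{f:6d} Hz: {a * 1000:6.1f} dB/km, {a * 300:5.1f} dB over 300 m")
```

Under these conditions the formula gives roughly 5 dB/km at 1 kHz but around 160 dB/km at 10 kHz, so high-frequency loss only becomes pronounced over distances of hundreds of metres.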