Crawling job listings and job descriptions from Lagou (拉勾网) with multiple processes and threads

import requests
import json
import re
from bs4 import BeautifulSoup
import time
import csv
import bs4
from multiprocessing import Pool
import threading

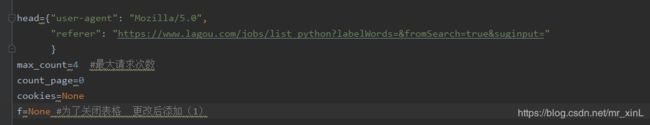

head = {
    "user-agent": "Mozilla/5.0",
    "referer": "https://www.lagou.com/jobs/list_python?labelWords=&fromSearch=true&suginput=",
}

max_count = 4  # maximum number of request attempts per URL
count_page = 0
cookies = None

def get_cookie():
    # Visit the search page once with a session and return its cookies as a dict.
    try:
        url = "https://www.lagou.com/jobs/list_python?labelWords=&fromSearch=true&suginput="
        sses = requests.session()
        sses.get(url, headers=head)
        cookies = sses.cookies
        cookies = cookies.get_dict()
        if cookies:
            return cookies
    except Exception as ex:
        print(ex)

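def getHtml(url, judge=None, count=1):
    # judge truthy: reuse the cached cookies; judge falsy: fetch fresh cookies first.
    global cookies
    if count > max_count:
        print("Failed to fetch the page; the failing URL is %s" % url)
        return None
    try:
        if judge:
            html = requests.get(url, headers=head, cookies=cookies)
        else:
            cookies = get_cookie()
            html = requests.get(url, headers=head, cookies=cookies)
        # The literal below is the rate-limit notice returned by the site.
        if not re.search("您操作太频繁,请稍后再访问", html.text):
            html.raise_for_status()
            html.encoding = "utf-8"
            return html.text
        else:
            print("---- requests too frequent, pausing 2 s ----")
            count += 1
            time.sleep(2)
            # Pass judge through; getHtml(url, count) would bind count to judge
            # and reset the attempt counter to 1 on every retry.
            return getHtml(url, judge, count)
    except Exception as ex:
        print("Exception raised", ex)
        count += 1
        return getHtml(url, judge, count)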
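
The retry logic in `getHtml` can also be written as a loop rather than as a recursive call, which keeps the attempt counter in one place. What follows is only a minimal sketch, not the code used in this post: `fetch_with_retry` and its `fetch` argument are hypothetical names, and `fetch` stands in for the `requests.get(...)` call so that the example runs without network access. Unlike `getHtml`, the sketch also pauses after an exception.

```python
import time

BLOCK_MARKER = "您操作太频繁"  # start of the rate-limit notice that getHtml checks for


def fetch_with_retry(fetch, url, max_attempts=4, delay=2.0):
    """Call fetch(url) up to max_attempts times; return the page text or None.

    `fetch` is a hypothetical callable standing in for an HTTP request.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            text = fetch(url)
        except Exception as ex:  # network errors, bad status codes, ...
            print("attempt %d failed: %s" % (attempt, ex))
        else:
            if BLOCK_MARKER not in text:
                return text
            print("attempt %d was rate limited" % attempt)
        if attempt < max_attempts:
            time.sleep(delay)  # wait before the next attempt
    return None


# Fake fetcher for illustration: rate limited once, then succeeds.
replies = iter(["您操作太频繁,请稍后再访问", "<html>ok</html>"])
print(fetch_with_retry(lambda url: next(replies), "https://example.com", delay=0))
```

Because the attempt number lives in the loop variable, it cannot be passed into the wrong parameter by accident.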

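def parseFirstHtml(content):  # parse a listing page and collect the detail-page ids
    FirstHtmlList = []
    try:
        content = content.split('"positionResult":', 1)[1]  # cut away everything before the JSON object we need
        content = content.rsplit(',"pageSize"', 1)[0]  # cut away everything after it
        content = json.loads(content)["result"]  # parse the JSON and take the result list
        for i in content:  # collect each positionId into FirstHtmlList
            index = i["positionId"]
            FirstHtmlList.append(index)
        FristList = FirstHtmlList
        return FristList  # a fresh list on every call, holding only the 15 ids of this page
    except Exception as ex:
        print(ex, content, "The returned page content is malformed; parsing failed")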
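
`parseFirstHtml` trims the response with `split`/`rsplit` before calling `json.loads`. If the response is a complete JSON document, it may be simpler to parse it whole and walk down to the list. The sketch below assumes the layout `content` → `positionResult` → `result`; the `content` wrapper key is an assumption not shown in this post, `extract_position_ids` is a hypothetical name, and the sample payload is made up for illustration.

```python
import json


def extract_position_ids(raw):
    """Return the positionId values from a listing response, or [] on failure.

    Assumed layout (not confirmed by the post):
    {"content": {"positionResult": {"result": [{"positionId": ...}]}, "pageSize": ...}}
    """
    try:
        data = json.loads(raw)
        results = data["content"]["positionResult"]["result"]
        return [item["positionId"] for item in results]
    except (ValueError, KeyError, TypeError) as ex:
        # ValueError covers invalid JSON; KeyError/TypeError cover an unexpected layout
        print("could not parse listing response:", ex)
        return []


# Made-up sample payload, for illustration only.
sample = json.dumps({
    "content": {
        "positionResult": {"result": [{"positionId": 101}, {"positionId": 102}]},
        "pageSize": 15,
    }
})
print(extract_position_ids(sample))
```

Returning an empty list on failure lets the caller use a plain `if ids:` check without handling `None` separately.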

def parseSecondHtml(secondcontent, url):  # parse a detail page: salary, location, experience, education, job description
    if not secondcontent:  # the fetch failed, so there is nothing to parse
        print("Page could not be loaded", url)
        return None
    soup = BeautifulSoup(secondcontent, "html.parser")
    if isinstance(soup.find("h2", attrs={"class": "title"}), bs4.element.Tag):
        try:
            title = soup.find("h2", attrs={"class": "title"}).get_text()
            introduce = soup.find("div", attrs={"class": "items"}).get_text()  # salary, location, experience, education
            introduce = introduce.strip().split("\n")
            new_intro = []
            for i in introduce:
                if i != "":
                    i = i.strip()
                    new_intro.append(i)
            salary, loc, years, degree = new_intro[0], new_intro[1], new_intro[3], new_intro[4]  # pick the four fields
            work_content = soup.find("div", attrs={"class": "content"}).get_text()
            outList = [salary, loc, years, degree, work_content]  # a local list, not a global one
            return outList
        except Exception as ex:
            print(ex, soup.find("h2", attrs={"class": "title"}), soup)
    else:
        print("The page did not load correctly, reloading", url)
        return None

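def outputHtml(outList):  # append the fields of one detail page to the CSV file
    try:
        # Raw string, so the backslashes in the Windows path are taken literally.
        with open(r"C:\Dsoftdisk\python\scrap_example\lagouwang\second_html.csv", "a", newline="", encoding="utf-8-sig") as f:
            ff = csv.writer(f)
            ff.writerow(outList)
    except Exception as ex:
        print(ex)
    finally:
        # Redundant: the with block has already closed f.
        if f:
            f.close()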

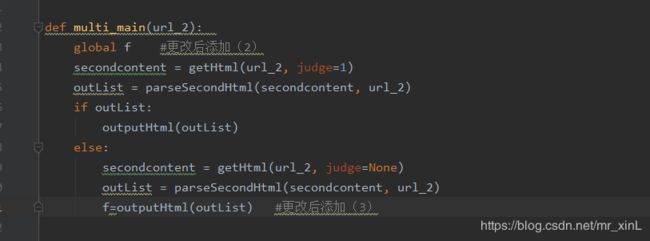

def multi_main(url_2):  # thread worker: fetch, parse, and write one detail page
    secondcontent = getHtml(url_2, judge=1)
    outList = parseSecondHtml(secondcontent, url_2)
    if outList:
        outputHtml(outList)
    else:
        # Retry once with fresh cookies.
        secondcontent = getHtml(url_2, judge=None)
        outList = parseSecondHtml(secondcontent, url_2)
        if outList:  # skip the write if the retry failed as well
            outputHtml(outList)

'''
Multiprocessing
'''

def firstmain(page, count=1):  # process worker: fetch and parse one listing page, then dispatch its detail pages
    global count_page  # each worker process keeps its own copy of this counter
    Firsturl = "https://www.lagou.com/jobs/positionAjax.json?needAddtionalResult=false&pn=" + str(page) + "&kd=python"
    Fristcontent = getHtml(Firsturl)
    if Fristcontent:
        FirstList = parseFirstHtml(Fristcontent)
    else:
        print("Failed to fetch page %s" % Firsturl)
        return  # without this, FirstList would be unbound further down
    try:
        if not re.search("positionId", Fristcontent):
            print("---- warning: failed to get the content ----")
            return
        else:
            count_page += 1
            print("Fetched page=%s; pages fetched so far: %s" % (page, count_page))
    except Exception as ex:
        print(ex, Fristcontent, type(Fristcontent))
    if FirstList:
        # One thread per detail page.
        for i in FirstList:
            url_2 = "https://m.lagou.com/jobs/" + str(i) + ".html"
            time.sleep(0.05)  # short delay; without it the rows written to the CSV came out garbled
            t = threading.Thread(target=multi_main, args=(url_2,))
            t.start()
    else:
        print("None")

'''
Multiprocessing
'''
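
`firstmain` starts one thread per detail page and returns without waiting for them. A possible alternative is a bounded thread pool from `concurrent.futures`, which caps the number of simultaneous requests and waits until every page has been handled. This is a sketch under assumptions, not the approach used in this post: `crawl_detail_pages` is a hypothetical name, `worker` stands in for `multi_main`, and `max_workers=5` is an arbitrary value.

```python
from concurrent.futures import ThreadPoolExecutor


def crawl_detail_pages(position_ids, worker, max_workers=5):
    """Run worker(url) for every id on a bounded thread pool and wait for all of them."""
    urls = ["https://m.lagou.com/jobs/" + str(i) + ".html" for i in position_ids]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # list() consumes the iterator, so an exception raised in a worker surfaces here
        return list(pool.map(worker, urls))


# Stand-in worker for illustration; in the crawler this would be multi_main.
print(crawl_detail_pages([1, 2, 3], worker=lambda url: len(url)))
```

Leaving the `with` block waits for all submitted tasks, so the caller knows the page is fully processed when the function returns.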

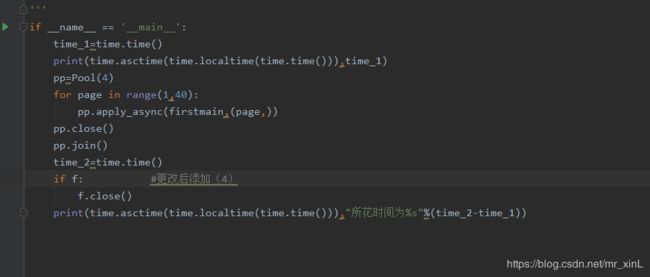

if __name__ == '__main__':
    time_1 = time.time()
    print(time.asctime(time.localtime(time.time())), time_1)
    pp = Pool(4)  # four worker processes
    for page in range(1, 40):  # listing pages 1 through 39
        pp.apply_async(firstmain, (page,))
    pp.close()
    pp.join()
    time_2 = time.time()
    print(time.asctime(time.localtime(time.time())), "elapsed time: %s s" % (time_2 - time_1))

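Code revision, 2020.5.12 23:56

Reason: some rows in the CSV file came out garbled. My analysis is that the code that closes the CSV file was at fault. The `with` statement already closes the file, so the explicit `f.close()` in the `finally` block is redundant. The change below resolved the garbled rows in the CSV.

Before: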

def outputHtml(outList):  # append the fields of one detail page to the CSV file
    try:
        with open(r"C:\Dsoftdisk\python\scrap_example\lagouwang\second_html.csv", "a", newline="", encoding="utf-8-sig") as f:
            ff = csv.writer(f)
            ff.writerow(outList)
    except Exception as ex:
        print(ex)
    finally:
        if f:
            f.close()

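After:

def outputHtml(outList):  # append the fields of one detail page to the CSV file
    try:
        with open(r"C:\Dsoftdisk\python\scrap_example\lagouwang\second_html.csv", "a", newline="", encoding="utf-8-sig") as f:
            ff = csv.writer(f)
            ff.writerow(outList)
    except Exception as ex:
        print(ex)
    # removed in this revision (1): finally:
    # removed in this revision (2): if f:
    # removed in this revision (3): f.close()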
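
Because several threads append to the same CSV file, concurrent writes are another possible source of interleaved rows. One way to rule that out is to serialize the writes with a lock. The sketch below is an assumption-laden illustration, not part of the original script: `write_lock` and `append_row` are hypothetical names, and the demo writes to a temporary file rather than to the path used above.

```python
import csv
import os
import tempfile
import threading

write_lock = threading.Lock()  # hypothetical module-level lock shared by all threads


def append_row(path, row):
    """Append one row to the CSV at `path`; only one thread writes at a time."""
    with write_lock:
        with open(path, "a", newline="", encoding="utf-8-sig") as f:
            csv.writer(f).writerow(row)


# Demo: 20 threads each append one row to a temporary file.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.csv")
threads = [threading.Thread(target=append_row, args=(demo_path, [n, "row"])) for n in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
with open(demo_path, newline="", encoding="utf-8-sig") as f:
    print(len(list(csv.reader(f))))  # number of rows read back
```

A `threading.Lock` only coordinates threads inside one process. With the `Pool` of worker processes used here, a lock shared across processes or one output file per process would be needed for the same guarantee.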