Learning the Spark Source Code: Common RDD Operators (2)

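Preface

The previous post looked at how some RDD operators relate to one another and left two open questions. This post tackles the first: what exactly is iter?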

MapPartitionsRDD

On closer inspection, flatMap simply creates a MapPartitionsRDD, and iter is a parameter of the function passed to it.

def flatMap[U: ClassTag](f: T => TraversableOnce[U]): RDD[U] = withScope {
  val cleanF = sc.clean(f)
  new MapPartitionsRDD[U, T](this, (context, pid, iter) => iter.flatMap(cleanF))
}

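sc.clean(f) cleans up the closure: it removes references to enclosing objects that the function does not actually need, so that serializing the function does not fail on outer variables and no unnecessary data is sent over the network.

The MapPartitionsRDD source is short, so here it is in full.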
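The kind of failure that closure cleaning is meant to guard against can be reproduced without Spark. The sketch below is not Spark's ClosureCleaner; `ClosureSketch`, `Job`, and `serializes` are hypothetical names, and it only assumes that Scala function literals are Java-serializable as long as everything they capture is.

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

object ClosureSketch {
  // Hypothetical driver-side class that is deliberately NOT Serializable.
  class Job(val offset: Int) {
    // Reads the field through `this`, so the closure drags the whole Job along.
    def capturesThis: Int => Int = x => x + offset

    // Copies the field into a local first, so only an Int is captured.
    def capturesLocal: Int => Int = {
      val local = offset
      x => x + local
    }
  }

  /** True if `obj` survives Java serialization, false if something it references cannot. */
  def serializes(obj: AnyRef): Boolean =
    try {
      new ObjectOutputStream(new ByteArrayOutputStream()).writeObject(obj)
      true
    } catch {
      case _: NotSerializableException => false
    }
}
```

Here `capturesThis` needs the enclosing `Job`, so no amount of cleaning can make it shippable; copying the field into a local value, as in `capturesLocal`, is the usual manual fix.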

private[spark] class MapPartitionsRDD[U: ClassTag, T: ClassTag](
    var prev: RDD[T],
    f: (TaskContext, Int, Iterator[T]) => Iterator[U],  // (TaskContext, partition index, iterator)
    preservesPartitioning: Boolean = false)
  extends RDD[U](prev) {

  override val partitioner = if (preservesPartitioning) firstParent[T].partitioner else None

  override def getPartitions: Array[Partition] = firstParent[T].partitions

  override def compute(split: Partition, context: TaskContext): Iterator[U] =
    f(context, split.index, firstParent[T].iterator(split, context))

  override def clearDependencies() {
    super.clearDependencies()
    prev = null
  }
}

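MapPartitionsRDD takes a constructor parameter f, a function that turns a (TaskContext, Int, Iterator[T]) into an Iterator[U]. The class does not implement f itself; compute merely calls it, passing firstParent[T].iterator(split, context) as the iterator argument. This trail does not seem to reveal much more about iter, so let's step into iter's flatMap method, which leads to the following code.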

def flatMap[B](f: A => GenTraversableOnce[B]): Iterator[B] = new AbstractIterator[B] {
  private var cur: Iterator[B] = empty
  private def nextCur() { cur = f(self.next()).toIterator }
  def hasNext: Boolean = {
    // Equivalent to cur.hasNext || self.hasNext && { nextCur(); hasNext }
    // but slightly shorter bytecode (better JVM inlining!)
    while (!cur.hasNext) {
      if (!self.hasNext) return false
      nextCur()
    }
    true
  }
  // ... (remainder of the iterator omitted)

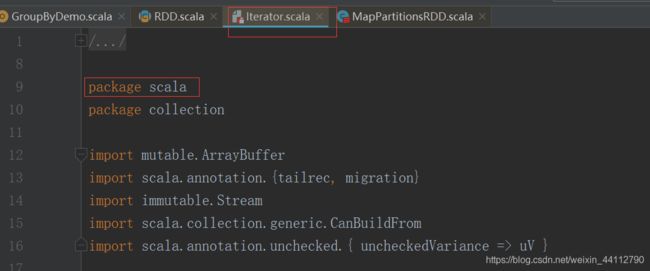

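Now look at where we have landed: this is the Scala standard library source, not Spark.

Given the types that MapPartitionsRDD declares, that makes sense: iter is just an ordinary Scala Iterator[T].

Other operators that also return a MapPartitionsRDD include map and filter.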
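Because iter is a plain Scala Iterator, its flatMap is lazy: the hasNext loop shown above pulls one source element only when the current inner iterator is exhausted. The following minimal sketch makes that visible in plain Scala; `LazyFlatMapDemo` and `wordsWithLog` are hypothetical names used only for illustration.

```scala
import scala.collection.mutable.ArrayBuffer

object LazyFlatMapDemo {
  /** Returns (log of lines pulled from the source so far, lazy iterator of words). */
  def wordsWithLog(lines: List[String]): (ArrayBuffer[String], Iterator[String]) = {
    val pulled = ArrayBuffer.empty[String]
    // Source iterator that records every line it hands out.
    val source = lines.iterator.map { line => pulled += line; line }
    // Nothing is pulled here: flatMap only wraps the source iterator.
    val words = source.flatMap(line => line.split(" ").iterator)
    (pulled, words)
  }
}
```

With the input `List("a b", "c d")`, the log is empty right after the call, contains only `"a b"` after the first `next()`, and gains `"c d"` only once the words of the first line have been consumed.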

def map[U: ClassTag](f: T => U): RDD[U] = withScope {
  val cleanF = sc.clean(f)
  new MapPartitionsRDD[U, T](this, (context, pid, iter) => iter.map(cleanF))
}

def filter(f: T => Boolean): RDD[T] = withScope {
  val cleanF = sc.clean(f)
  new MapPartitionsRDD[T, T](
    this,
    (context, pid, iter) => iter.filter(cleanF),
    preservesPartitioning = true)
}

As you can see, the pattern is the same in each case.

Since MapPartitionsRDD extends RDD, there are presumably other RDD subclasses too. Let's look at a few.
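The shared pattern can be condensed into a toy model that runs without Spark. This is a sketch under simplifying assumptions, not Spark's implementation: `MiniRDD`, `SeqRDD`, and `MiniMapPartitionsRDD` are hypothetical classes, partitions are identified by a bare Int, and TaskContext, ClassTag, and closure cleaning are left out.

```scala
object MiniRddSketch {
  // Hypothetical stand-in for RDD: one Iterator per partition index.
  abstract class MiniRDD[T] {
    def numPartitions: Int
    def compute(index: Int): Iterator[T]

    // Each operator only wraps the parent; the work happens on the Iterator.
    def map[U](f: T => U): MiniRDD[U] =
      new MiniMapPartitionsRDD[U, T](this, (_, iter) => iter.map(f))

    def filter(f: T => Boolean): MiniRDD[T] =
      new MiniMapPartitionsRDD[T, T](this, (_, iter) => iter.filter(f))

    def flatMap[U](f: T => Iterable[U]): MiniRDD[U] =
      new MiniMapPartitionsRDD[U, T](this, (_, iter) => iter.flatMap(f))

    // Evaluates every partition in order and concatenates the results.
    def collect(): List[T] =
      (0 until numPartitions).toList.flatMap(i => compute(i).toList)
  }

  // Leaf RDD backed by in-memory partitions.
  final class SeqRDD[T](parts: Vector[Vector[T]]) extends MiniRDD[T] {
    def numPartitions: Int = parts.length
    def compute(index: Int): Iterator[T] = parts(index).iterator
  }

  // Same shape as MapPartitionsRDD.compute: apply f to the parent's iterator.
  final class MiniMapPartitionsRDD[U, T](
      prev: MiniRDD[T],
      f: (Int, Iterator[T]) => Iterator[U]) extends MiniRDD[U] {
    def numPartitions: Int = prev.numPartitions
    def compute(index: Int): Iterator[U] = f(index, prev.compute(index))
  }
}
```

Chaining `flatMap`, `map`, and `filter` on a `SeqRDD` builds three nested wrappers; nothing is evaluated until `collect()` asks the outermost one for its iterators.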

PartitionwiseSampledRDD

Sampling is also fairly easy to follow: sample returns a PartitionwiseSampledRDD.

def sample(
    withReplacement: Boolean,
    fraction: Double,
    seed: Long = Utils.random.nextLong): RDD[T] = {
  require(fraction >= 0,
    s"Fraction must be nonnegative, but got ${fraction}")

  withScope {
    require(fraction >= 0.0, "Negative fraction value: " + fraction)
    if (withReplacement) {
      new PartitionwiseSampledRDD[T, T](this, new PoissonSampler[T](fraction), true, seed)
    } else {
      new PartitionwiseSampledRDD[T, T](this, new BernoulliSampler[T](fraction), true, seed)
    }
  }
}
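The two branches of sample differ only in the sampler applied to each partition's iterator. The sketch below illustrates the two ideas in plain Scala and is deliberately simplified: `SamplerSketch` and its methods are hypothetical, the Poisson draw uses Knuth's multiplication method, and nothing here claims to match how Spark's BernoulliSampler and PoissonSampler are actually implemented.

```scala
import scala.util.Random

object SamplerSketch {
  /** Without replacement: keep each element independently with probability `fraction`. */
  def bernoulli[T](items: Iterator[T], fraction: Double, rng: Random): Iterator[T] =
    items.filter(_ => rng.nextDouble() < fraction)

  /** Draws k ~ Poisson(mean) with Knuth's multiplication method (adequate for small means). */
  def poissonDraw(mean: Double, rng: Random): Int = {
    val limit = math.exp(-mean)
    var k = 0
    var p = rng.nextDouble()
    while (p > limit) {
      k += 1
      p *= rng.nextDouble()
    }
    k
  }

  /** With replacement: emit each element k times, where k ~ Poisson(fraction). */
  def poisson[T](items: Iterator[T], fraction: Double, rng: Random): Iterator[T] =
    items.flatMap(item => Iterator.fill(poissonDraw(fraction, rng))(item))
}
```

Both functions take and return an Iterator, which is why a sampler fits naturally into the per-partition compute model seen earlier. Passing a seeded `Random` makes a run repeatable.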

CoalescedRDD

coalesce changes the partitioning of an RDD and returns a CoalescedRDD.

def coalesce(numPartitions: Int, shuffle: Boolean = false,
             partitionCoalescer: Option[PartitionCoalescer] = Option.empty)
            (implicit ord: Ordering[T] = null)
    : RDD[T] = withScope {
  require(numPartitions > 0, s"Number of partitions ($numPartitions) must be positive.")
  if (shuffle) {
    /** Distributes elements evenly across output partitions, starting from a random partition. */
    val distributePartition = (index: Int, items: Iterator[T]) => {
      var position = (new Random(index)).nextInt(numPartitions)
      items.map { t =>
        // Note that the hash code of the key will just be the key itself. The HashPartitioner
        // will mod it with the number of total partitions.
        position = position + 1
        (position, t)
      }
    } : Iterator[(Int, T)]

    // include a shuffle step so that our upstream tasks are still distributed
    new CoalescedRDD(
      new ShuffledRDD[Int, T, T](mapPartitionsWithIndex(distributePartition),
      new HashPartitioner(numPartitions)),
      numPartitions,
      partitionCoalescer).values
  } else {
    new CoalescedRDD(this, numPartitions, partitionCoalescer)
  }
}
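The shuffle branch spreads data evenly because distributePartition tags each element with a consecutive Int key, and an Int key modulo the partition count cycles through the output partitions. The sketch below replays that logic in plain Scala; `DistributeSketch`, `targetPartition`, and `partitionSizes` are hypothetical helpers, and `targetPartition` is only a stand-in for what HashPartitioner does with Int keys, as described by the comment in the source.

```scala
import scala.util.Random

object DistributeSketch {
  /** Mirrors distributePartition: tag each element with an increasing Int key. */
  def distribute[T](index: Int, items: Iterator[T], numPartitions: Int): Iterator[(Int, T)] = {
    // Random starting offset, seeded by the input partition index.
    var position = new Random(index).nextInt(numPartitions)
    items.map { t =>
      position = position + 1
      (position, t)
    }
  }

  /** Stand-in for HashPartitioner on Int keys: non-negative key mod numPartitions. */
  def targetPartition(key: Int, numPartitions: Int): Int = {
    val raw = key % numPartitions
    if (raw < 0) raw + numPartitions else raw
  }

  /** Number of elements each output partition would receive from one input partition. */
  def partitionSizes[T](index: Int, items: Seq[T], numPartitions: Int): Vector[Int] = {
    val counts = Array.fill(numPartitions)(0)
    distribute(index, items.iterator, numPartitions).foreach { case (key, _) =>
      counts(targetPartition(key, numPartitions)) += 1
    }
    counts.toVector
  }
}
```

For ten elements and three output partitions, the sizes are 3, 3, and 4 in some order; which partition gets the extra element depends on the random starting offset.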

There is also a repartition operator. It simply calls coalesce with shuffle set to true.

def repartition(numPartitions: Int)(implicit ord: Ordering[T] = null): RDD[T] = withScope {
  coalesce(numPartitions, shuffle = true)
}

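As for how the two differ in practice, here is a blog post I recommend.

Summary

We have now met a few RDD subclasses. For reasons of space I will not cover the others here and will only give a diagram.

You can view this hierarchy in IntelliJ IDEA by pressing Ctrl+H.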