电费敏感数据挖掘三: 构建低敏用户模型

电费敏感数据挖掘一: 数据处理与特征工程

电费敏感数据挖掘二: 文本特征构造

目录:

- 六. 构建XGBoost模型

- 6.1 读取特征

- 6.2 基于选择的词来创建tf-idf,构建模型输入数据

- 6.3 XGBoost

- 七. 保存最终预测

六. 构建XGBoost模型

6.1 读取特征

import pandas as pd

import numpy as np

import pickle

from scipy.sparse import csc_matrix

from sklearn.feature_extraction.text import TfidfVectorizer

import xgboost as xgb

from scipy.sparse import hstack

df = pickle.load(open(r'..\电费\statistical_features_1.pkl', 'rb'))

text = pickle.load(open(r'..\电费\text_features_1.pkl', 'rb'))

df = df.merge(text, on = 'CUST_NO', how = 'left')

train = df.loc[df.label != -1]

test = df.loc[df.label == -1]

print('训练集:',train.shape[0])

print('正样本:',train.loc[train.label == 1].shape[0])

print('负样本:',train.loc[train.label == 0].shape[0])

print('测试集:',test.shape[0])

训练集: 400075

正样本: 13139

负样本: 386936

测试集: 326167

6.2 基于选择的词来创建tf-idf,构建模型输入数据

x_data = train.copy()

x_val = test.copy()

x_data = x_data.sample(frac = 1, random_state = 42).reset_index(drop = True)

delete_columns = ['CUST_NO', 'label', 'contents']

X_train_1 = csc_matrix(x_data.drop(delete_columns, axis = 1).values)

X_val_1 = csc_matrix(x_val.drop(delete_columns, axis = 1).values)

y_train = x_data.label.values

y_val = x_val.label.values

featurenames = list(x_data.drop(delete_columns, axis = 1).columns)

select_words = pickle.load(open(r'..\电费\single_select_words.pkl', 'rb'))

tfidf = TfidfVectorizer(ngram_range = (1, 2), min_df = 3, sublinear_tf = True,

smooth_idf = False, use_idf = False, vocabulary = select_words)

tfidf.fit(x_data.contents)

word_names = tfidf.get_feature_names()

X_train_2 = tfidf.transform(x_data.contents)

X_val_2 = tfidf.transform(x_val.contents)

print('文本特征:{}维.'.format(len(word_names)))

statistic_feature = featurenames.copy()

print('其他特征:{}维.'.format(len(statistic_feature)))

featurenames.extend(word_names)

X_train = hstack((X_train_1, X_train_2)).tocsc()

X_val = hstack((X_val_1, X_val_2)).tocsc()

print('特征数量', X_train.shape[1])

文本特征:341维.

其他特征:85维.

特征数量 426

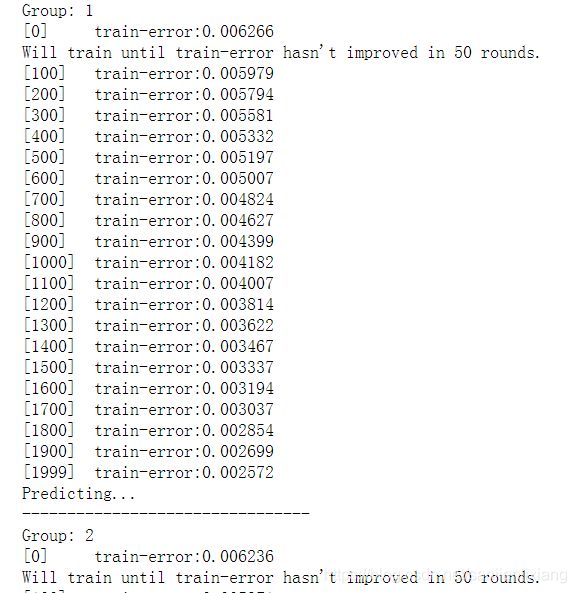

6.3 XGBoost

bagging = []

for i in range(1, 4):

print('Group:', i)

dtrain = xgb.DMatrix(X_train, y_train, feature_names = featurenames)

dval = xgb.DMatrix(X_val, feature_names = featurenames)

params = {'objective': 'binary:logistic', 'eta': 0.1, 'max_depth': 12,

'booster': 'gbtree', 'eval_metric': 'error', 'subsample': 0.8,

'min_child_weight': 3, 'gamma': 0.2, 'lambda': 300,

'colsample_bytree': 1, 'silent': 1, 'seed': i}

watchlist = [(dtrain, 'train')]

model = xgb.train(params, dtrain, 2000, evals = watchlist, early_stopping_rounds = 50, verbose_eval = 100)

print('Predicting...')

y_prob = model.predict(dval, ntree_limit = model.best_ntree_limit)

bagging.append(y_prob)

print('--------------------------------')

print('Done!')

def threshold(y, t):

z = np.copy(y)

z[z >= t] = 1

z[z < t] = 0

return z

t = 0.5

pres = []

for i in bagging:

pres.append(threshold(i, t))

pres = np.array(pres).T.astype('int64')

result = []

for line in pres:

result.append(np.bincount(line).argmax())

myout = test[['CUST_NO']].copy()

myout['pre'] = result

七. 保存最终预测

import os

if not os.path.isdir(r'..\数据挖掘\result'):

os.makedirs(r'..\数据挖掘\result')

myout.loc[myout.pre == 1, 'CUST_NO'].to_csv(r'..\数据挖掘\result\A.csv', index = False)