Joint intent-slot models and their variants

- Attention-based recurrent neural network models for joint intent detection and slot filling. Bing Liu and Ian Lane. InterSpeech, 2016. [Code1] [Code2]

- Encoder-decoder with Focus-mechanism for Sequence Labelling Based Spoken Language Understanding. Su Zhu and Kai Yu. ICASSP, 2017. [Code]

- A Bi-model based RNN Semantic Frame Parsing Model for Intent Detection and Slot Filling. Yu Wang, et al. NAACL 2018.

- Neural Models for Sequence Chunking. Fei Zhai, et al. AAAI, 2017.

- Improving Slot Filling in Spoken Language Understanding with Joint Pointer and Attention. Lin Zhao and Zhe Feng. ACL, 2018.

- End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF. Xuezhe Ma, Eduard Hovy. ACL, 2016.

- A Self-Attentive Model with Gate Mechanism for Spoken Language Understanding. Changliang Li, et al. EMNLP 2018. [from Kingsoft AI Lab]

- Joint Slot Filling and Intent Detection via Capsule Neural Networks. Chenwei Zhang, et al. 2018. [ongoing work]

- Joint Online Spoken Language Understanding and Language Modeling with Recurrent Neural Networks. Bing Liu and Ian Lane. SIGDIAL, 2016. [Code]

- Slot-Gated Modeling for Joint Slot Filling and Intent Prediction. Chih-Wen Goo, Guang Gao, Yun-Kai Hsu, Chih-Li Huo, Tsung-Chieh Chen, Keng-Wei Hsu, Yun-Nung Chen. [Code]

- OneNet: Joint Domain, Intent, Slot Prediction for Spoken Language Understanding

Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling

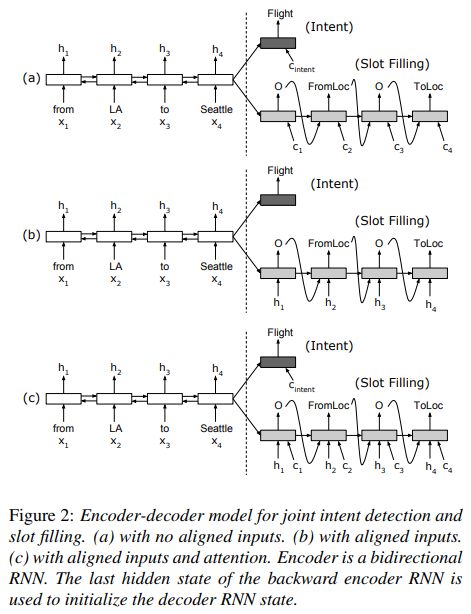

The paper proposes several models that jointly train slot filling and intent detection, as shown in the figure above:

- Encoder-Decoder Model(Left)

- Add an additional decoder for intent detection

- The intent decoder state is a function of the shared initial decoder state $s_0$

- The context vector $c_{intent}$, which indicates part of the source sequence that the intent decoder pays attention to

- Attention-Based(Right)

- There is no decoder network, so the sequence is processed in one pass rather than two (open question: how is $c_i$ computed in this case?)

- For intent detection:

- If attention is not used, we apply mean-pooling over time on the hidden states $h$ followed by logistic regression to perform the intent classification

- If attention is enabled, we instead take the weighted average of the hidden states $h$ over time
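The two intent-pooling options above can be sketched in plain Python. This is a minimal illustration, not the authors' code: `mean_pool`, `attention_pool` and the raw attention `scores` are hypothetical names, and the classifier that would consume the pooled vector is omitted.

```python
import math

def mean_pool(hidden_states):
    """Average the hidden states over time (the no-attention case)."""
    steps = len(hidden_states)
    dim = len(hidden_states[0])
    return [sum(h[k] for h in hidden_states) / steps for k in range(dim)]

def attention_pool(hidden_states, scores):
    """Weighted average of the hidden states; weights are softmax(scores).

    `scores` stands in for whatever alignment scores the model produces
    (an assumption made for illustration).
    """
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(hidden_states[0])
    return [sum(w * h[k] for w, h in zip(weights, hidden_states))
            for k in range(dim)]

hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(mean_pool(hidden))
print(attention_pool(hidden, [0.0, 0.0, 5.0]))
```

With equal scores the attention weights are uniform, so `attention_pool` reduces to `mean_pool`; a large score on one time step pulls the pooled vector toward that step's hidden state.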

Encoder-decoder with Focus-mechanism for Sequence Labelling Based Spoken Language Understanding

See the Seq2Seq paper-notes series (2017).

A Bi-Model Based RNN Semantic Frame Parsing Model for Intent Detection and Slot Filling

- Bi-model structures are proposed to take their cross-impact into account

- Model structure:

- In the model without a decoder, it is unclear where the input $x$ goes

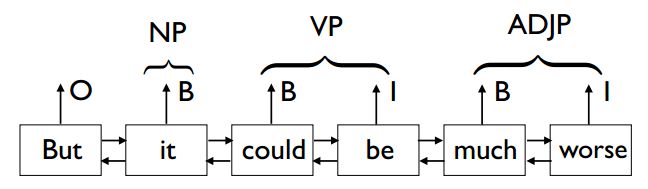

Neural Models for Sequence Chunking

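- The traditional sequence labeling task is split into two subtasks: sequence chunking (segmentation) and chunk labeling

- Why split it into two subtasks:

- Conventional BIO tagging does not mark chunk boundaries explicitly; the boundaries have to be inferred from the BIO tags, so the information in the training data is not fully used

- RNN or LSTM units can encode context, but they do not treat each chunk as a complete unit

- Model 1: a single BiLSTM is used for both segmentation and labeling

- Segmentation uses BIO tags

- For labeling, the hidden states of a chunk are averaged and used as the feature (chunk $j$ starts at word $i$ and has length $l$)

$$\mathrm{Chunk}_j = \mathrm{Average}(\overleftrightarrow{h}_i, \overleftrightarrow{h}_{i+1}, \cdots, \overleftrightarrow{h}_{i+l-1})$$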

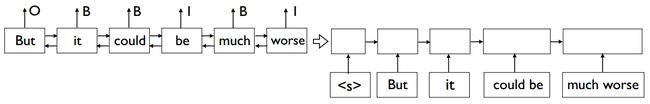
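The chunk feature of Model 1 is simply an element-wise mean over the BiLSTM states inside the chunk. A minimal sketch, assuming the hidden states are already available as lists of floats (`chunk_average` is a hypothetical helper name):

```python
def chunk_average(hidden_states, start, length):
    """Element-wise mean of hidden_states[start : start + length]."""
    span = hidden_states[start:start + length]
    dim = len(span[0])
    return [sum(h[k] for h in span) / len(span) for k in range(dim)]

# Three toy 2-dimensional hidden states; the chunk covers words 1 and 2.
states = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(chunk_average(states, start=1, length=2))  # [4.0, 5.0]
```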

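Model 2: Encoder-Decoder framework

- Model 1 has a fairly simple structure and may not perform well on both subtasks

- In this model the decoder is initialized with the encoder hidden states $[\overrightarrow{h}_T, \overleftarrow{h}_1]$

- The decoder takes a chunk, not a word, as its input feature $C_j$, which has three parts

- CNN + max-pooling over time extracts the word features inside the chunk; $g(\cdot)$ denotes the CNNMax function

$$Cx_j = g(x_i, x_{i+1}, \cdots, x_{i+l-1})$$

- The word embeddings of the chunk's context words are used as the context feature $Cw_j$; the context window size is a hyperparameter

- The averaged hidden states from the encoder BiLSTM (see Model 1) give $Ch_j$

- The decoder update is therefore

$$h^{decoder}_j = \mathrm{LSTM}(Cx_j, Ch_j, Cw_j, h^{decoder}_{j-1}, c^{decoder}_{j-1})$$

Note: in Models 1 and 2 there is no guarantee that an I tag directly follows a B tag; an I tag that appears in the wrong place is treated as a B tag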
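The repair rule for misplaced I tags can be made concrete with a small decoder from segmentation tags to chunk spans. This is an illustrative sketch only: `bio_to_chunks` is a hypothetical helper, tags are assumed to be the bare strings "B", "I" and "O", and "O" is assumed to mean the word belongs to no chunk.

```python
def bio_to_chunks(tags):
    """Return (start, length) spans; a stray 'I' opens a new chunk like 'B'."""
    chunks = []
    start = None  # start index of the chunk currently being built
    for pos, tag in enumerate(tags):
        if tag == "B" or (tag == "I" and start is None):
            # A real 'B', or an 'I' with no open chunk (treated as 'B').
            if start is not None:
                chunks.append((start, pos - start))
            start = pos
        elif tag == "O":
            if start is not None:
                chunks.append((start, pos - start))
            start = None
        # Otherwise: 'I' inside an open chunk, which just extends it.
    if start is not None:
        chunks.append((start, len(tags) - start))
    return chunks

print(bio_to_chunks(["B", "I", "O", "I", "I", "B"]))
# [(0, 2), (3, 2), (5, 1)]
```

The second chunk starts at position 3 even though its first tag is I, which is the behavior the note above describes.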

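Model 3: Encoder-Decoder-Pointer framework

- Using BIO tags has two drawbacks:

- It is hard to use chunk-level features, such as chunk length, for segmentation

- BIO tags do not allow different chunks to be compared directly

- This model is a greedy process: first identify where a chunk ends, then label it, and repeat these two steps until all words have been processed. Because chunks are directly adjacent, once the end point of one chunk is identified, the beginning point of the next chunk is known as well

- For a chunk beginning at time step $b$ ($b$ is an encoder time step), first generate a feature vector for every possible ending position $i$ ($j$ is the decoder time step, i.e. the index of the $j$-th chunk to be produced, $d_j$ is the $j$-th decoder hidden state, $l_m$ is the maximum chunk length, and $LE(\cdot)$ is the length embedding)

$$u^i_j = v_1^T \tanh(W_1 \overleftrightarrow{h}_i + W_2 x_i + W_3 x_b + W_4 d_j) + v_2^T LE(i-b+1), \quad i \in [b, b+l_m)$$

- Then apply a softmax over all $u^i_j$ ($i \in [b, b+l_m)$) to obtain the most probable ending position

- The training objective is the sum of the cross-entropy losses of the two subtasks

$$L(\theta) = L_{segmentation}(\theta) + L_{labeling}(\theta)$$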
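The greedy pointer loop of Model 3 can be sketched as follows. This is a toy illustration under stated assumptions: `score_end(b, i)` is a hypothetical stand-in for the learned score $u^i_j$, the labeling step after each segmentation step is omitted, and spans are returned as inclusive `(begin, end)` pairs.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_segment(num_words, max_len, score_end):
    """Split positions 0..num_words-1 into adjacent chunks, greedily."""
    chunks = []
    b = 0
    while b < num_words:
        # Candidate end positions i in [b, b + max_len), clipped to the sentence.
        candidates = list(range(b, min(b + max_len, num_words)))
        probs = softmax([score_end(b, i) for i in candidates])
        best = max(range(len(candidates)), key=lambda k: probs[k])
        end = candidates[best]
        chunks.append((b, end))
        b = end + 1  # the next chunk begins right after this one ends
    return chunks

def toy_score(b, i):
    """Illustrative scorer only: prefers chunks of exactly two words."""
    return -abs((i - b + 1) - 2)

print(greedy_segment(5, 3, toy_score))  # [(0, 1), (2, 3), (4, 4)]
```

Because each decision is made locally, an early wrong end point shifts every later chunk; that is the cost of the greedy procedure described above.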

Improving Slot Filling in Spoken Language Understanding with Joint Pointer and Attention

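- Pointer Network + attentional Seq2Seq, subject to having only sentence-level slot annotations

- Challenges caused by the sentence-level slot annotation constraint:

- Converting sentence-level slot annotations, such as price=moderate, into word-level slot labels is time-consuming and labor-intensive, and because of noise the value may not align with the words of the utterance

- Treating each (slot, value) pair as a single class and classifying the sentence introduces new problems:

- A slot may have a very large or even unbounded number of values, which causes data sparsity for the classifier

- A slot value may be out of vocabulary (OOV); such values cannot be predefined

- Model structure: a pointer network (PtrNet) is used to handle the OOV problem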

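- $p_t$ is a weight that balances the two components (the pointer network and the attentional Seq2Seq). It is learned at each time step from the decoder input $d_t$, the decoder state $s_t$ and the context vector $c_t$; $\sigma(\cdot)$ is the sigmoid function, and $w_c, w_s, w_d$ are all trainable weights

$$p_t = \sigma(w_c c_t + w_d d_t + w_s s_t + b)$$

- The work above only covers value prediction. To build a complete SLU system, dialog acts and slot types also have to be predicted, and the paper proposes CNN classifiers for this:

- For dialog act prediction, the CNN input is the whole utterance and the output is one or more dialog acts

- For slot type prediction, the input is the predicted slot value together with the whole utterance, and the output is one of the predefined slot types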

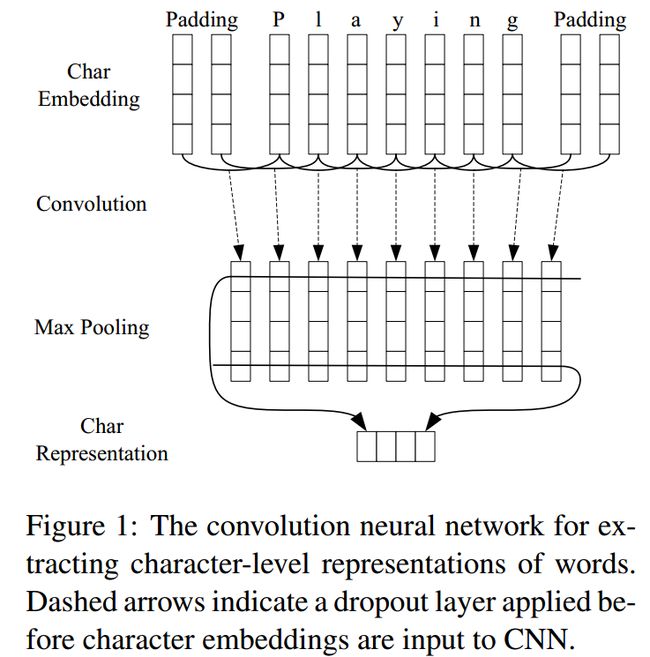

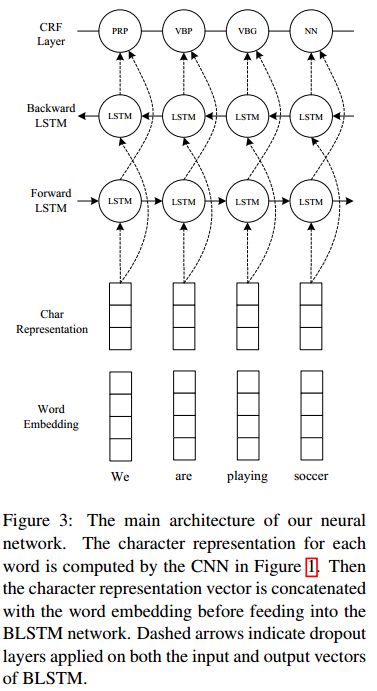
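The gate $p_t$ defined above can be sketched in a few lines. The notes only state that $p_t$ balances the two components, so the mixing rule used in `mix` below (weight $p_t$ on the vocabulary distribution and $1 - p_t$ on the pointer distribution over source words) is an assumption made for illustration, as are all function and variable names.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gate(w_c, c_t, w_d, d_t, w_s, s_t, b):
    """p_t = sigmoid(w_c . c_t + w_d . d_t + w_s . s_t + b)."""
    return sigmoid(dot(w_c, c_t) + dot(w_d, d_t) + dot(w_s, s_t) + b)

def mix(p_t, p_vocab, p_pointer, source_words):
    """ASSUMPTION: final = p_t * vocab dist + (1 - p_t) * pointer dist."""
    final = {word: p_t * p for word, p in p_vocab.items()}
    for word, p in zip(source_words, p_pointer):
        # Source words may be OOV; the pointer can still give them mass.
        final[word] = final.get(word, 0.0) + (1.0 - p_t) * p
    return final

p_t = gate([0.0], [1.0], [0.0], [1.0], [0.0], [1.0], 0.0)  # zero weights give 0.5
dist = mix(p_t, {"cheap": 0.5, "moderate": 0.5}, [0.1, 0.9], ["find", "zizzi"])
print(p_t, dist)
```

In this toy run the word "zizzi" is absent from the vocabulary distribution but still receives probability through the pointer, which is how a pointer network sidesteps OOV values.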

End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF

- Using combination of bidirectional LSTM, CNN and CRF in sequence labeling systems

- Model structure:

- CNN for character-level representation: character embeddings are the input, initialized uniformly from $\left[-\sqrt{\frac{3}{dim}}, +\sqrt{\frac{3}{dim}}\right]$

- concatenate char-level representation with word level representation and feed it into BiLSTM

- on top of BiLSTM, we use a sequential CRF to jointly decode labels for the whole sentence

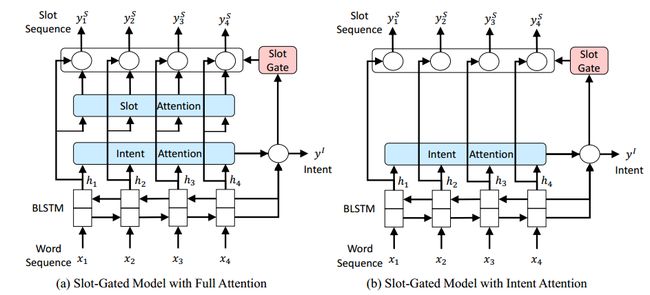
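Joint decoding with a sequential CRF is usually done with the Viterbi algorithm. The sketch below is a generic, minimal Viterbi decoder in plain Python, not the paper's implementation; `emissions` stands in for the per-token label scores produced by the BiLSTM and `transitions` for the CRF label-to-label scores (both names are assumptions).

```python
def viterbi(emissions, transitions):
    """Return the highest-scoring label sequence.

    emissions[t][y]   : score of label y at time step t
    transitions[p][y] : score of moving from label p to label y
    """
    num_labels = len(emissions[0])
    scores = list(emissions[0])
    backpointers = []
    for step in emissions[1:]:
        new_scores, pointers = [], []
        for y in range(num_labels):
            best_prev = max(range(num_labels),
                            key=lambda p: scores[p] + transitions[p][y])
            pointers.append(best_prev)
            new_scores.append(scores[best_prev] + transitions[best_prev][y] + step[y])
        scores = new_scores
        backpointers.append(pointers)
    # Follow the back-pointers from the best final label.
    path = [max(range(num_labels), key=lambda y: scores[y])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path

emissions = [[3.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
print(viterbi(emissions, [[0.0, 0.0], [0.0, 0.0]]))    # [0, 1, 1]
print(viterbi(emissions, [[0.0, -5.0], [-5.0, 0.0]]))  # [0, 0, 0]
```

With zero transition scores the result equals per-token argmax; once label switches are penalized, the decoder prefers a consistent sequence, which is the benefit of decoding the whole sentence jointly.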

Slot-Gated Modeling for Joint Slot Filling and Intent Prediction

- proposes a slot gate that focuses on learning the relationship between intent and slot attention vectors

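- According to the formulas in the paper, the attention computation is a form of self-attention: the alignment model does not take the output position into account. Is that a problem?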

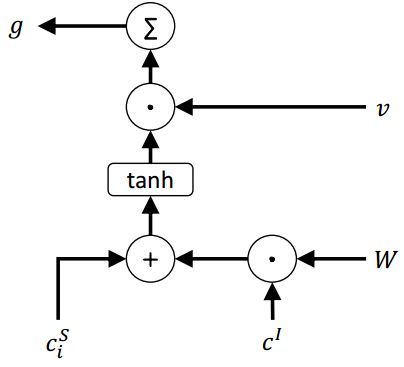

- The additional gate introduces the intent context vector to model the slot-intent relationship:

- First, $c_{intent}$ and $c_i$ are combined ($c_{intent}$ is broadcast along the sequence-length dimension) and passed through a slot gate ($v$ and $W$ are trainable parameters)

$$g = \sum v \cdot \tanh(c_i + W \cdot c_{intent})$$

- The sum runs over the elements within a single time step; $g$ can be seen as a weight feature of the joint context vector ($c_i$ and $c_{intent}$)

- The formula for $y_i$

$$y^{slot}_i = \mathrm{softmax}(W_y(h_i + c_i \cdot g))$$

- A larger $g$ means that the slot context vector and the intent context vector attend to the same part of the sequence (which also infers that the correlation between the slot and the intent is stronger and the context vector is more “reliable” for contributing the prediction results)

- For the model without slot attention (the second figure), simply replace $c_i$ in the formulas above with $h_i$; the slot then shares the intent attention information

- Joint optimization:

$$p(y^{intent}, y^{slot} \mid x) = p(y^{intent} \mid x) \prod_{t=1}^{T} p(y^{slot}_t \mid x), \quad x = (x_1, x_2, \cdots, x_T)$$
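The slot gate can be sketched directly from its formula: project the intent context vector with $W$, add it to the slot context vector, apply tanh, weight by $v$ and sum over the feature dimension. The helper names below are hypothetical, and the final projection $W_y$ and softmax are left out to keep the example short.

```python
import math

def matvec(matrix, vector):
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def slot_gate(c_i, c_intent, v, W):
    """g = sum_k v_k * tanh(c_i[k] + (W c_intent)[k]), a scalar per time step."""
    projected = matvec(W, c_intent)
    return sum(v_k * math.tanh(a + b) for v_k, a, b in zip(v, c_i, projected))

def gated_slot_input(h_i, c_i, g):
    """h_i + c_i * g, the vector that W_y and the softmax would consume."""
    return [h + c * g for h, c in zip(h_i, c_i)]

identity = [[1.0, 0.0], [0.0, 1.0]]
g = slot_gate([0.5, 0.5], [0.5, 0.5], [1.0, 1.0], identity)
print(g)
print(gated_slot_input([1.0, 2.0], [0.5, 0.5], g))
```

For the variant without slot attention, the same functions apply with `h_i` passed in place of `c_i`.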

OneNet: Joint Domain, Intent, Slot Prediction for Spoken Language Understanding

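- Traditional task-oriented dialog systems first perform domain prediction and then intent detection and slot tagging, but this pipeline has the following drawbacks:

- Errors in domain prediction propagate to the downstream tasks

- Domain prediction cannot benefit from, or use information in, the downstream predictions

- It is hard to share domain-invariant features, such as common or similar intents and slots

- The paper proposes a unified model, OneNet, with three parts:

- A shared orthography-sensitive word embedding layer

- A shared bidirectional LSTM layer that produces contextual vector representations

- Three separate output layers, one for each task:

- Domain and intent classification sum the contextual vectors and word embeddings over all time steps and pass the result through a feed-forward network

- Slot tagging adds a CRF layer on top of sequence labeling

- The joint loss is a plain sum of the task losses

- Curriculum learning: in practice the model is pre-trained in stages. First optimize domain classification, then intent detection, then the domain and intent classifiers together, and finally train on the sum of all three losses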
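The curriculum can be expressed as a schedule of loss weights per stage. This is only a sketch of the staging described above: the `STAGES` table, the 0/1 weights and `joint_loss` are illustrative assumptions, and how long each stage runs is not specified in these notes.

```python
# Each stage switches task losses on (1.0) or off (0.0).
STAGES = [
    {"domain": 1.0, "intent": 0.0, "slot": 0.0},  # 1. domain classification
    {"domain": 0.0, "intent": 1.0, "slot": 0.0},  # 2. intent detection
    {"domain": 1.0, "intent": 1.0, "slot": 0.0},  # 3. domain + intent
    {"domain": 1.0, "intent": 1.0, "slot": 1.0},  # 4. all three, summed
]

def joint_loss(losses, stage):
    """Weighted sum of the per-task losses for the given curriculum stage."""
    weights = STAGES[stage]
    return sum(weights[task] * losses[task] for task in weights)

losses = {"domain": 0.5, "intent": 1.0, "slot": 2.0}
for stage in range(len(STAGES)):
    print(stage, joint_loss(losses, stage))
```

The last stage reproduces the plain joint loss, so the earlier stages act purely as pre-training for the shared layers.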