Scraping Specific Content from a Website with Python

Background

My tests needed to simulate browser User-Agent strings, and I found a batch of them on this site: http://www.fynas.com/ua

Analysis

The data is kept in a very ordinary table element.

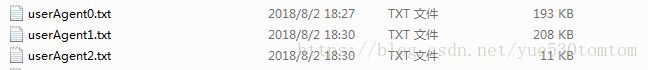
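Because the data sits in a plain table, the cells can also be read with a real HTML parser instead of a regular expression. The following is a minimal sketch using Python 3's standard `html.parser` module (the script in this post is Python 2). The `CellCollector` class and the `SAMPLE` markup are hypothetical; the real page's columns and attributes may differ.

```python
from html.parser import HTMLParser


class CellCollector(HTMLParser):
    """Collect the text of every <td> cell, one list per <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # skip header rows that only contain <th>
                self.rows.append(self._row)
            self._row = None


# Hypothetical markup; the real page's columns may differ.
SAMPLE = """
<table>
  <tr><th>Device</th><th>User-Agent</th></tr>
  <tr><td>PC</td><td>Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36</td></tr>
  <tr><td>Phone</td><td>Mozilla/5.0 (Linux; Android 8.0) AppleWebKit/537.36</td></tr>
</table>
"""

parser = CellCollector()
parser.feed(SAMPLE)
# Assume the User-Agent string is the last cell of each row.
user_agents = [row[-1] for row in parser.rows]
```

Reading whole cells this way keeps the spaces inside each User-Agent string, which a pattern ending in a space would cut off.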

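There are a little over 8K entries. To avoid losing data if the run is interrupted midway, I chose to write each page to disk as soon as it is read. Since a single file might be unwieldy, I chose to put 100 pages of data into each file.

After running it, I found that the results could simply have gone into a single file: the total is only about 2 MB -_-!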
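The write-a-page-at-a-time idea can be sketched on its own, without any network access. This is a Python 3 illustration, not the script's exact code; `bucket_name`, `append_page`, and the sample items are hypothetical names and data. It uses the same integer division of the page number by 100 that the script uses to pick a file.

```python
import os
import tempfile

PAGES_PER_FILE = 100


def bucket_name(page, pages_per_file=PAGES_PER_FILE):
    """Return the output file name for a 1-based page number."""
    return "userAgent%d.txt" % (page // pages_per_file)


def append_page(directory, page, items):
    """Append one page of results and close the file straight away."""
    path = os.path.join(directory, bucket_name(page))
    with open(path, "a", encoding="utf-8") as f:
        f.writelines(item + "\n" for item in items)
    return path


# Demonstration with made-up data in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    append_page(tmp, 1, ["ua-a", "ua-b"])
    append_page(tmp, 99, ["ua-c"])
    append_page(tmp, 100, ["ua-d"])
    written = sorted(os.listdir(tmp))
    with open(os.path.join(tmp, "userAgent0.txt"), encoding="utf-8") as f:
        first_bucket = f.read().splitlines()
```

Because pages start at 1, the first file receives pages 1 to 99 and every later file receives 100 pages. Opening in append mode and closing after each page means an interrupted run loses at most the page in flight.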

Implementation

    # -*- coding:utf8 -*-
    # Python 2 script (urllib2, print statement).

    import urllib2
    import re
    import time
    import random
    import math

    # Pool of User-Agent strings to send with each request; only one entry for now.
    USER_AGENTS = ['Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36']


    class GetUserAgent:

        def __init__(self, url):
            self.myUrl = url
            self.datas = []
            print u"useragent starting...."

        def fetch(self, url):
            # Send a browser-like request and return the raw response body.
            # urllib2 issues a GET by default, so no method header is needed.
            req = urllib2.Request(url)
            req.add_header('User-Agent', random.choice(USER_AGENTS))
            req.add_header('Host', 'www.fynas.com')
            req.add_header('Accept', '*/*')
            return urllib2.urlopen(req).read()

        def fynas(self):
            # Read the first page to get the page count, then crawl every page.
            mypage = self.fetch(self.myUrl).decode("utf8")
            Pagenum = self.page_counter(mypage)
            self.find_data(self.myUrl, Pagenum)

        def page_counter(self, mypage):
            # The page shows the total as "共N条" (N entries in total);
            # the total is divided by 10 entries per page.
            myMatch = re.search(u'共(\d+?)条', mypage, re.S)
            if myMatch:
                Pagenum = int(math.ceil(int(myMatch.group(1)) / 10.0))
                print u"info: %d pages in total" % Pagenum
            else:
                Pagenum = 0
                print u"info:none"
            return Pagenum

        def find_data(self, myurl, Pagenum):
            for i in range(1, Pagenum + 1):
                print i
                print u"info: loading page %d......" % i
                mypage = self.fetch(myurl + "/search?d=&b=&k=&page=" + str(i))
                # NOTE: as written, this captures only up to the first space
                # (e.g. "Mozilla/5.0"); anchor the pattern on the table-cell
                # markup to capture the whole string.
                myItems = re.findall('(Mozilla.*?) ', mypage, re.S)
                self.datas = [item + "\n" for item in myItems]
                # Append each page as soon as it is read; i // 100 puts
                # roughly 100 pages in each file.
                with open('userAgent' + str(i // 100) + '.txt', 'a+') as f:
                    f.writelines(self.datas)
                time.sleep(1)  # pause between requests
            print u"info: files downloaded"


    url = "http://www.fynas.com/ua"
    mySpider = GetUserAgent(url)
    mySpider.fynas()

Output (excerpt):

    ……

    info: loading page 245......
    246
    info: loading page 246......
    247
    info: loading page 247......
    248
    info: loading page 248......
    249
    info: loading page 249......
    250
    info: loading page 250......
    251
    info: loading page 251......
    252
    info: loading page 252......
    253
    info: loading page 253......
    254
    ……
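The number of pages in this run comes from the arithmetic in `page_counter`: the total entry count is read from the text "共N条" and divided by 10, rounding up. That step can be checked offline with a small Python 3 sketch. The function name `page_count` and the sample strings are hypothetical; the real page text may be laid out differently.

```python
import math
import re

PER_PAGE = 10  # the script divides the total by 10.0, i.e. 10 entries per page


def page_count(html, per_page=PER_PAGE):
    """Return the number of result pages, or 0 if no total is found."""
    match = re.search(r"共(\d+)条", html)
    if not match:
        return 0
    return math.ceil(int(match.group(1)) / per_page)


# Hypothetical snippets with made-up totals.
pages = page_count("<span>共8123条</span>")
exact = page_count("共8120条")
missing = page_count("<span>no total here</span>")
```

Rounding up matters when the total is not a multiple of 10: a made-up total of 8123 entries needs 813 pages, while 8120 needs exactly 812.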