Machine Learning (Watermelon Book): Deriving the Formulas for Univariate Linear Regression

Derivation of the Univariate Linear Regression Formulas

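1. Outline of the derivation for the bias $b$: derive the loss function $E(\omega, b)$ from the least squares method → prove that $E(\omega, b)$ is a convex function of $\omega$ and $b$ → take the first-order partial derivative of $E(\omega, b)$ with respect to $b$ → set that derivative equal to $0$ and solve for $b$.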

Deriving the loss function $E(\omega, b)$ from the least squares method:

$$
E_{(\omega,b)} = \sum_{i=1}^{m}(y_i - f(x_i))^2 = \sum_{i=1}^{m}(y_i - (\omega x_i + b))^2 = \sum_{i=1}^{m}(y_i - \omega x_i - b)^2 \tag{$p_{54}.3.4$}
$$
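To make the loss function concrete, here is a minimal sketch that evaluates the sum of squared residuals on a tiny data set. The function name `squared_error` and the toy data are assumptions made up for illustration; they do not come from the book.

```python
def squared_error(w, b, xs, ys):
    """Sum of squared residuals: sum_i (y_i - w*x_i - b)^2."""
    return sum((y - w * x - b) ** 2 for x, y in zip(xs, ys))


# Toy data chosen only for illustration (an assumption, not from the book).
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 7.0]

loss_a = squared_error(2.0, 0.0, xs, ys)  # residuals are 0, 0, 1
loss_b = squared_error(1.0, 1.0, xs, ys)  # residuals are 0, 1, 3

print(loss_a, loss_b)
```

The line $y = 2x$ fits two of the three points exactly, so its loss is much smaller than that of $y = x + 1$. Least squares looks for the $(\omega, b)$ pair that makes this number as small as possible.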

Prove that the loss function $E(\omega, b)$ is a convex function of $\omega$ and $b$. Here $\omega$ and $b$ play the roles of $x$ and $y$ in the two-variable notation $f(x, y)$. First, find $A = f''_{xx}(x, y)$:

$$
\frac{\partial E_{(\omega,b)}}{\partial \omega}
= \frac{\partial}{\partial \omega}\left[\sum_{i=1}^{m}(y_i - \omega x_i - b)^2\right]
= \sum_{i=1}^{m}\frac{\partial}{\partial \omega}(y_i - \omega x_i - b)^2
= \sum_{i=1}^{m} 2 \cdot (y_i - \omega x_i - b) \cdot (-x_i)
= 2\left(\omega\sum_{i=1}^{m}x_i^2 - \sum_{i=1}^{m}(y_i - b)x_i\right)
$$

The expression above is equation (3.5).
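One way to sanity-check the closed form in (3.5) is to compare it with a central finite difference of the loss. The sketch below does that; the helper names, the toy data, and the evaluation point are all assumptions chosen for illustration.

```python
def squared_error(w, b, xs, ys):
    """Sum of squared residuals: sum_i (y_i - w*x_i - b)^2."""
    return sum((y - w * x - b) ** 2 for x, y in zip(xs, ys))


def dE_dw(w, b, xs, ys):
    """Closed form from (3.5): 2 * (w * sum(x_i^2) - sum((y_i - b) * x_i))."""
    sum_x_squared = sum(x * x for x in xs)
    sum_shifted_y_times_x = sum((y - b) * x for x, y in zip(xs, ys))
    return 2 * (w * sum_x_squared - sum_shifted_y_times_x)


# Toy data and evaluation point (assumptions for illustration only).
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 7.0]
w, b, h = 0.5, 0.25, 1e-6

analytic_dw = dE_dw(w, b, xs, ys)
# Central difference approximation of dE/dw at (w, b).
numeric_dw = (squared_error(w + h, b, xs, ys) - squared_error(w - h, b, xs, ys)) / (2 * h)

print(analytic_dw, numeric_dw)
```

Because the loss is quadratic in $\omega$, the central difference agrees with the closed form up to floating-point rounding.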

$$
A = f''_{xx}(x, y) = \frac{\partial^2 E_{(\omega,b)}}{\partial \omega^2}
= \frac{\partial}{\partial \omega}\left(\frac{\partial E_{(\omega,b)}}{\partial \omega}\right)
= \frac{\partial}{\partial \omega}\left[2\left(\omega\sum_{i=1}^{m}x_i^2 - \sum_{i=1}^{m}(y_i - b)x_i\right)\right]
= \frac{\partial}{\partial \omega}\left[2\omega\sum_{i=1}^{m}x_i^2\right]
= 2\sum_{i=1}^{m}x_i^2
$$

Find $B = f''_{xy}(x, y)$:

$$
B = f''_{xy}(x, y) = \frac{\partial^2 E_{(\omega,b)}}{\partial \omega\,\partial b}
= \frac{\partial}{\partial b}\left(\frac{\partial E_{(\omega,b)}}{\partial \omega}\right)
= \frac{\partial}{\partial b}\left[2\left(\omega\sum_{i=1}^{m}x_i^2 - \sum_{i=1}^{m}(y_i - b)x_i\right)\right]
= \frac{\partial}{\partial b}\left[-2\sum_{i=1}^{m}(y_i - b)x_i\right]
= 2\sum_{i=1}^{m}x_i
$$
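The two second-order partials derived so far, $A = 2\sum x_i^2$ and $B = 2\sum x_i$, can be checked numerically by differencing the first derivative $\partial E / \partial \omega$. This is only a sketch: the helper name `dE_dw`, the toy data, and the evaluation point are assumptions for illustration.

```python
def dE_dw(w, b, xs, ys):
    """First partial derivative of the loss with respect to w (closed form)."""
    sum_x_squared = sum(x * x for x in xs)
    sum_shifted_y_times_x = sum((y - b) * x for x, y in zip(xs, ys))
    return 2 * (w * sum_x_squared - sum_shifted_y_times_x)


# Toy data and evaluation point (assumptions for illustration only).
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 7.0]
w, b, h = 0.5, 0.25, 1e-4

# Closed forms from the derivation.
A = 2 * sum(x * x for x in xs)
B = 2 * sum(xs)

# Central differences of dE/dw in the w direction (for A) and the b direction (for B).
numeric_A = (dE_dw(w + h, b, xs, ys) - dE_dw(w - h, b, xs, ys)) / (2 * h)
numeric_B = (dE_dw(w, b + h, xs, ys) - dE_dw(w, b - h, xs, ys)) / (2 * h)

print(A, numeric_A)
print(B, numeric_B)
```

Neither $A$ nor $B$ depends on $\omega$ or $b$, so the same values come out at any evaluation point. Note also that $A \ge 0$ for any data, since it is a sum of squares.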

Find $C = f''_{yy}(x, y)$:

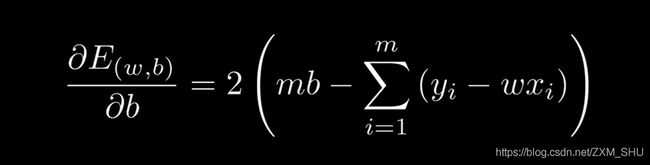

$$
\frac{\partial E_{(\omega,b)}}{\partial b}
= \frac{\partial}{\partial b}\left[\sum_{i=1}^{m}(y_i - \omega x_i - b)^2\right]
= \sum_{i=1}^{m}\frac{\partial}{\partial b}(y_i - \omega x_i - b)^2
= \sum_{i=1}^{m} 2 \cdot (y_i - \omega x_i - b) \cdot (-1)
= 2\left(mb - \sum_{i=1}^{m}(y_i - \omega x_i)\right)
$$

The expression above is equation (3.6).
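The closed form in (3.6) can be checked the same way, and it also previews the last step of the outline: setting the derivative to zero. Solving $2(mb - \sum (y_i - \omega x_i)) = 0$ for $b$ gives $b = \frac{1}{m}\sum (y_i - \omega x_i)$, and the sketch below confirms that the derivative vanishes there. The helper names, toy data, and the fixed value of $\omega$ are assumptions for illustration.

```python
def squared_error(w, b, xs, ys):
    """Sum of squared residuals: sum_i (y_i - w*x_i - b)^2."""
    return sum((y - w * x - b) ** 2 for x, y in zip(xs, ys))


def dE_db(w, b, xs, ys):
    """Closed form from (3.6): 2 * (m*b - sum(y_i - w*x_i))."""
    m = len(xs)
    return 2 * (m * b - sum(y - w * x for x, y in zip(xs, ys)))


# Toy data and evaluation point (assumptions for illustration only).
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 7.0]
w, b, h = 0.5, 0.25, 1e-6

analytic_db = dE_db(w, b, xs, ys)
# Central difference approximation of dE/db at (w, b).
numeric_db = (squared_error(w, b + h, xs, ys) - squared_error(w, b - h, xs, ys)) / (2 * h)

# Setting (3.6) to zero and solving for b, with w held fixed.
b_star = sum(y - w * x for x, y in zip(xs, ys)) / len(xs)
gradient_at_b_star = dE_db(w, b_star, xs, ys)

print(analytic_db, numeric_db)
print(b_star, gradient_at_b_star)
```

With $\omega$ held fixed, `b_star` is simply the mean of the values $y_i - \omega x_i$, which is why the bias can be computed directly once $\omega$ is known.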