Android 8.1 Audio Framework (Part 2): A Worked Example of AudioPolicy Routing Strategy

Overview

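This article uses a Bluetooth headset connecting to a phone as the example scenario, and walks through how the audio routing policy switches devices and manages outputs. Once the Bluetooth headset is connected to the Android system, AudioService's handleDeviceConnection is called. That call eventually reaches setDeviceConnectionState, the core function of AudioPolicyManager.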

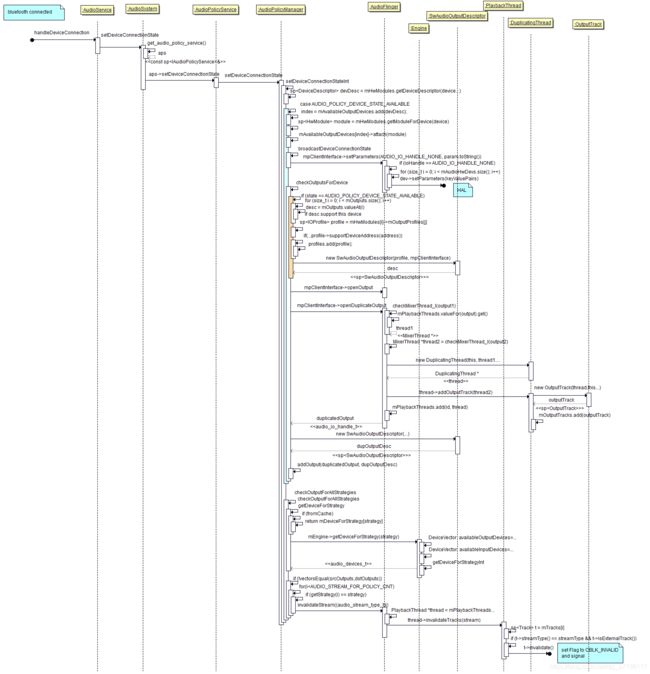

Sequence diagram

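Note: during a connection, setDeviceConnectionState can be broken down into the following steps:

1. Add the device to mAvailableOutputDevices.

2. Notify all modules that a new device has been connected.

3. Create a duplicated (dup) output.

4. Invalidate the affected streams.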
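The four steps above form a "commit, then roll back on failure" sequence. The sketch below models only that control flow for an output device becoming available. Everything in it is hypothetical: `ConnectionModel`, `connect()` and the `checkOutputsForDevice` hook are illustrative names, not AOSP APIs, and devices are plain bitmask values.

```cpp
#include <algorithm>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified model of setDeviceConnectionStateInt() for an
// output device becoming AVAILABLE. None of these names exist in AOSP.
enum class Status { Ok, InvalidOperation };

struct ConnectionModel {
    std::vector<uint32_t> availableOutputDevices;          // stand-in for mAvailableOutputDevices
    std::vector<std::pair<uint32_t, bool>> broadcasts;     // (device, available?) sent to all modules
    std::function<bool(uint32_t)> checkOutputsForDevice;   // stand-in: opens outputs, false on failure
    int strategyChecks = 0;                                // counts the re-routing / invalidation step

    Status connect(uint32_t device) {
        auto it = std::find(availableOutputDevices.begin(), availableOutputDevices.end(), device);
        if (it != availableOutputDevices.end()) {
            return Status::InvalidOperation;               // already connected and handled
        }
        availableOutputDevices.push_back(device);          // step 1: record the device
        broadcasts.emplace_back(device, true);             // step 2: notify every module
        if (!checkOutputsForDevice || !checkOutputsForDevice(device)) {  // step 3: open outputs (maybe a dup)
            // Roll back step 1 and tell the modules the device is unavailable again.
            availableOutputDevices.erase(
                std::remove(availableOutputDevices.begin(), availableOutputDevices.end(), device),
                availableOutputDevices.end());
            broadcasts.emplace_back(device, false);
            return Status::InvalidOperation;
        }
        ++strategyChecks;                                  // step 4: re-route and invalidate streams
        return Status::Ok;
    }
};
```

The rollback branch mirrors what the excerpt below does when checkOutputsForDevice fails: it removes the device and broadcasts AUDIO_POLICY_DEVICE_STATE_UNAVAILABLE.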

The setDeviceConnectionStateInt function

The sequence diagram shows that AudioPolicyManager's setDeviceConnectionStateInt is invoked after handleDeviceConnection is called. Let's analyze this function.

```cpp
status_t AudioPolicyManager::setDeviceConnectionStateInt(audio_devices_t device,
                                                         audio_policy_dev_state_t state,
                                                         const char *device_address,
                                                         const char *device_name)
{
    // Return an error if this is neither an input device nor an output device
    if (!audio_is_output_device(device) && !audio_is_input_device(device)) return BAD_VALUE;

    // mHwModules holds the device descriptors of all modules
    sp<DeviceDescriptor> devDesc =
            mHwModules.getDeviceDescriptor(device, device_address, device_name);

    if (audio_is_output_device(device)) {
        SortedVector <audio_io_handle_t> outputs;
        // mAvailableOutputDevices does not contain this device yet, since it has not been handled
        ssize_t index = mAvailableOutputDevices.indexOf(devDesc);
        // Save a copy of the current outputs; checkOutputForAllStrategies() compares against it later
        mPreviousOutputs = mOutputs;
        switch (state)
        {
        case AUDIO_POLICY_DEVICE_STATE_AVAILABLE: {
            if (index >= 0) { // already connected and already handled
                ALOGW("setDeviceConnectionState() device already connected: %x", device);
                return INVALID_OPERATION;
            }
            index = mAvailableOutputDevices.add(devDesc); // add it to mAvailableOutputDevices
            if (index >= 0) {
                // Find the HwModule that supports this device; here that is the a2dp module
                sp<HwModule> module = mHwModules.getModuleForDevice(device);
                ....
                mAvailableOutputDevices[index]->attach(module); // bind the device to the module
            }
            // Before checking outputs, call setParameters to tell every hardware module that
            // a new device is being handled. Each module reacts in its own way; for example,
            // the primary module checks whether it needs to handle bootcomplete.
            broadcastDeviceConnectionState(device, state, devDesc->mAddress);
            if (checkOutputsForDevice(devDesc, state, outputs, devDesc->mAddress) != NO_ERROR) {
                mAvailableOutputDevices.remove(devDesc);
                broadcastDeviceConnectionState(device, AUDIO_POLICY_DEVICE_STATE_UNAVAILABLE,
                                               devDesc->mAddress);
                return INVALID_OPERATION;
            }
            ....
        }
        }
    }
}
```

checkOutputsForDevice is a very important function. Let's start with its first phase.

```cpp
status_t AudioPolicyManager::checkOutputsForDevice(const sp<DeviceDescriptor>& devDesc,
                                                   audio_policy_dev_state_t state,
                                                   SortedVector<audio_io_handle_t>& outputs,
                                                   const String8& address)
{
    ......
    if (state == AUDIO_POLICY_DEVICE_STATE_AVAILABLE) {
        for (size_t i = 0; i < mOutputs.size(); i++) {
            // Check whether an existing output already supports this device.
            // The current primary output clearly does not support the a2dp device.
        }
        for (size_t i = 0; i < mHwModules.size(); i++) {
            for (size_t j = 0; j < mHwModules[i]->mOutputProfiles.size(); j++) {
                sp<IOProfile> profile = mHwModules[i]->mOutputProfiles[j];
                // Walk every profile of every hardware module and collect
                // the profiles that support this a2dp device
            }
        }
        ALOGV("  found %zu profiles, %zu outputs", profiles.size(), outputs.size());
        // At this point: 1 profile found, 0 outputs
    }
    ...
}
```

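I omitted part of the code here because a similar process was already analyzed in Android 8.1 Audio Framework (Part 1): Initialization Analysis. From that analysis, the first part of checkOutputsForDevice ends up with 1 profile and 0 outputs. Below is the module and profile information printed by dumpsys media.audio_policy, with my annotations: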

```
- HW Module 3:                  // stored in mHwModules[2]
  - name: a2dp                  // audiopolicy uses this name to find and load the audio.a2dp.default.so library
  - handle: 26
  - version: 2.0
  - outputs:
    output 0:                   // there is only one output here
      - name: a2dp output
      - Profiles:
        Profile 0:              // held in an IOProfile; mHwModules[2]->mOutputProfiles[0] is this Profile 0
          - format: AUDIO_FORMAT_PCM_16_BIT
          - sampling rates:44100
          - channel masks:0x0003
      - flags: 0x0000 (AUDIO_OUTPUT_FLAG_NONE)
      - Supported devices:      // three supported devices here
        Device 1:
          - tag name: BT A2DP Out
          - type: AUDIO_DEVICE_OUT_BLUETOOTH_A2DP             // the output device my BT headset uses once connected
        Device 2:
          - tag name: BT A2DP Headphones
          - type: AUDIO_DEVICE_OUT_BLUETOOTH_A2DP_HEADPHONES
        Device 3:
          - tag name: BT A2DP Speaker
          - type: AUDIO_DEVICE_OUT_BLUETOOTH_A2DP_SPEAKER
  - inputs:
    ....
```
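The profile search in phase one is a nested loop over modules and their output profiles. It keeps each profile whose supported-device mask contains the new device. The sketch below reproduces that search on a toy version of the dump above. `ProfileModel`, `HwModuleModel` and `findProfilesForDevice` are hypothetical names. The bit values are meant to mirror the `AUDIO_DEVICE_OUT_*` constants in AOSP's `audio-base.h`, but treat them as illustrative.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Illustrative device bits, intended to mirror AUDIO_DEVICE_OUT_* in audio-base.h.
constexpr uint32_t kOutSpeaker        = 0x2;
constexpr uint32_t kOutA2dp           = 0x80;
constexpr uint32_t kOutA2dpHeadphones = 0x100;
constexpr uint32_t kOutA2dpSpeaker    = 0x200;

struct ProfileModel {           // hypothetical stand-in for IOProfile
    std::string name;
    uint32_t supportedDevices;  // OR of the device bits this profile can drive
};

struct HwModuleModel {          // hypothetical stand-in for HwModule
    std::string name;
    std::vector<ProfileModel> outputProfiles;
};

// Phase one, simplified: collect every output profile of every module that supports `device`.
std::vector<const ProfileModel*> findProfilesForDevice(const std::vector<HwModuleModel>& modules,
                                                       uint32_t device) {
    std::vector<const ProfileModel*> found;
    for (const auto& module : modules) {
        for (const auto& profile : module.outputProfiles) {
            if ((profile.supportedDevices & device) != 0) {
                found.push_back(&profile);
            }
        }
    }
    return found;
}
```

With a primary module that only drives the speaker and an a2dp module shaped like the dump, a query for `kOutA2dp` returns exactly one profile. This matches the "1 profile, 0 outputs" state described above.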

Now let's continue with the second phase of checkOutputsForDevice.

```cpp
status_t AudioPolicyManager::checkOutputsForDevice(const sp<DeviceDescriptor>& devDesc,
                                                   audio_policy_dev_state_t state,
                                                   SortedVector<audio_io_handle_t>& outputs,
                                                   const String8& address)
{
    if (state == AUDIO_POLICY_DEVICE_STATE_AVAILABLE) {
        ......
        for (ssize_t profile_index = 0; profile_index < (ssize_t)profiles.size(); profile_index++) {
            sp<IOProfile> profile = profiles[profile_index];
            desc = new SwAudioOutputDescriptor(profile, mpClientInterface);
            // SwAudioOutputDescriptor carries the profile's parameters; copy them into audio_config_t
            desc->mDevice = device;
            audio_config_t config = AUDIO_CONFIG_INITIALIZER;
            config.sample_rate = desc->mSamplingRate;
            config.channel_mask = desc->mChannelMask;
            config.format = desc->mFormat;
            config.offload_info.sample_rate = desc->mSamplingRate;
            config.offload_info.channel_mask = desc->mChannelMask;
            config.offload_info.format = desc->mFormat;
            audio_io_handle_t output = AUDIO_IO_HANDLE_NONE;
            // Open the output
            status_t status = mpClientInterface->openOutput(profile->getModuleHandle(),
                                                            &output,
                                                            &config,
                                                            &desc->mDevice,
                                                            address,
                                                            &desc->mLatency,
                                                            desc->mFlags);
        }
    }
}
```

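The second phase of checkOutputsForDevice creates a SwAudioOutputDescriptor and then opens an output from the config and the other parameters. This process was covered in Android 8.1 Audio Framework (Part 1): Initialization Analysis, so I won't repeat it here. Next comes the third phase of checkOutputsForDevice, which is the key part.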

```cpp
status_t AudioPolicyManager::checkOutputsForDevice(const sp<DeviceDescriptor>& devDesc,
                                                   audio_policy_dev_state_t state,
                                                   SortedVector<audio_io_handle_t>& outputs,
                                                   const String8& address)
{
    if (state == AUDIO_POLICY_DEVICE_STATE_AVAILABLE) {
        ......
        if (output != AUDIO_IO_HANDLE_NONE) {
            addOutput(output, desc);
            if (device_distinguishes_on_address(device) && address != "0") {
                ......
            } else if (((desc->mFlags & AUDIO_OUTPUT_FLAG_DIRECT) == 0) &&
                    hasPrimaryOutput()) {
                audio_io_handle_t duplicatedOutput = AUDIO_IO_HANDLE_NONE;
                // Create a dup output spanning the primary output and the new output
                duplicatedOutput =
                        mpClientInterface->openDuplicateOutput(output,
                                                               mPrimaryOutput->mIoHandle);
                if (duplicatedOutput != AUDIO_IO_HANDLE_NONE) {
                    // add duplicated output descriptor
                    sp<SwAudioOutputDescriptor> dupOutputDesc =
                            new SwAudioOutputDescriptor(NULL, mpClientInterface);
                    dupOutputDesc->mOutput1 = mPrimaryOutput;
                    dupOutputDesc->mOutput2 = desc;
                    dupOutputDesc->mSamplingRate = desc->mSamplingRate;
                    dupOutputDesc->mFormat = desc->mFormat;
                    dupOutputDesc->mChannelMask = desc->mChannelMask;
                    dupOutputDesc->mLatency = desc->mLatency;
                    addOutput(duplicatedOutput, dupOutputDesc);
                    applyStreamVolumes(dupOutputDesc, device, 0, true);
                }
            }
        }
    }
}
```
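Whether phase three opens a dup output comes down to a few conditions in the excerpt above. The new output must have been opened. The address-based branch must not apply. The output must not carry the DIRECT flag. A primary output must exist. The sketch below condenses those conditions into one predicate. `OpenedOutputInfo` and `shouldOpenDuplicateOutput` are hypothetical names, and `kOutputFlagDirect` is an illustrative stand-in for `AUDIO_OUTPUT_FLAG_DIRECT`.

```cpp
#include <cstdint>

// Illustrative bit standing in for AUDIO_OUTPUT_FLAG_DIRECT.
constexpr uint32_t kOutputFlagDirect = 0x1;

// Hypothetical summary of what phase three knows about the output it just opened.
struct OpenedOutputInfo {
    bool opened;                  // output != AUDIO_IO_HANDLE_NONE
    bool distinguishesOnAddress;  // device_distinguishes_on_address(device) && address != "0"
    uint32_t flags;               // desc->mFlags
};

// Mirrors the branch structure of the excerpt: a dup output is opened only for a successfully
// opened, non-address-based, non-DIRECT output, and only when a primary output exists.
bool shouldOpenDuplicateOutput(const OpenedOutputInfo& out, bool hasPrimaryOutput) {
    if (!out.opened || out.distinguishesOnAddress) {
        return false;  // nothing was opened, or the (elided) address-based branch runs instead
    }
    return (out.flags & kOutputFlagDirect) == 0 && hasPrimaryOutput;
}
```

The a2dp profile in the dump has flags `AUDIO_OUTPUT_FLAG_NONE`, so it satisfies the predicate and gets a dup output.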

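In its second phase, checkOutputsForDevice already created an output for this a2dp profile. Part 1 (Initialization Analysis) showed that openOutput creates a MixerThread. MixerThread derives from PlaybackThread: it drives the mixer and plays the mixed data to the HAL device. So by the third phase there are two PlaybackThreads. One was created for primary during boot initialization, and the other was created for the a2dp output after the Bluetooth headset connected. A PlaybackThread's job is to hand the mixed PCM data to the underlying HAL device for playback.

Android often has special use cases. For example, while headphones are in use, some sounds must play through both the headphones and the speaker. DuplicatingThread exists for this case. It derives from MixerThread, so a DuplicatingThread is essentially a wrapper around MixerThread. A PlaybackThread writes the mixed data to the HAL in threadLoop_write, so let's first see what DuplicatingThread's threadLoop_write does: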

```cpp
ssize_t AudioFlinger::DuplicatingThread::threadLoop_write()
{
    for (size_t i = 0; i < outputTracks.size(); i++) {
        outputTracks[i]->write(mSinkBuffer, writeFrames);
    }
    mStandby = false;
#ifdef AUDIO_FW_PCM_DUMP
    if (NULL != pcmFile) {
        fwrite(mSinkBuffer, 1, mSinkBufferSize, pcmFile);
    }
#endif
    return (ssize_t)mSinkBufferSize;
}
```

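PlaybackThread's threadLoop_write writes audio data to the HAL through calls such as mNormalSink->write. DuplicatingThread's threadLoop_write has no such call; it writes the data into outputTracks instead. That suggests a bold guess: DuplicatingThread owns two outputTracks, one for primary and one for the a2dp output. Through DuplicatingThread, primary and the a2dp output can then play sound at the same time.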

Now let's analyze openDuplicateOutput in detail to check whether this guess is right.

```cpp
audio_io_handle_t AudioFlinger::openDuplicateOutput(audio_io_handle_t output1,
                                                    audio_io_handle_t output2)
{
    Mutex::Autolock _l(mLock);
    MixerThread *thread1 = checkMixerThread_l(output1); // look up the matching, already-created playback thread
    MixerThread *thread2 = checkMixerThread_l(output2); // same lookup for the second output
    ....
    audio_io_handle_t id = nextUniqueId(AUDIO_UNIQUE_ID_USE_OUTPUT);
    // Create the DuplicatingThread
    DuplicatingThread *thread = new DuplicatingThread(this, thread1, id, mSystemReady);
    // Attach the other thread as well
    thread->addOutputTrack(thread2);
    mPlaybackThreads.add(id, thread);
    // notify client processes of the new output creation
    thread->ioConfigChanged(AUDIO_OUTPUT_OPENED);
    return id;
}
```

```cpp
AudioFlinger::MixerThread *AudioFlinger::checkMixerThread_l(audio_io_handle_t output) const
{
    PlaybackThread *thread = checkPlaybackThread_l(output);
    return thread != NULL && thread->type() != ThreadBase::DIRECT ? (MixerThread *) thread : NULL;
}

AudioFlinger::PlaybackThread *AudioFlinger::checkPlaybackThread_l(audio_io_handle_t output) const
{
    return mPlaybackThreads.valueFor(output).get(); // only fetches a cached PlaybackThread; nothing is created
}
```
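The two helpers above only look up threads that already exist, keyed by I/O handle. checkMixerThread_l additionally rejects DIRECT threads. The sketch below reproduces that lookup with a `std::map` in place of `mPlaybackThreads`. `ThreadMap`, `PlaybackThreadModel` and the handle values are hypothetical. The type filter mirrors the excerpt's condition and nothing more.

```cpp
#include <map>
#include <memory>

// Hypothetical model of AudioFlinger's mPlaybackThreads registry.
using IoHandle = int;
enum class ThreadType { Mixer, Direct, Duplicating };

struct PlaybackThreadModel {
    ThreadType type;
};

using ThreadMap = std::map<IoHandle, std::unique_ptr<PlaybackThreadModel>>;

// Like checkPlaybackThread_l(): a pure lookup among existing threads; nothing is created.
PlaybackThreadModel* checkPlaybackThread(const ThreadMap& threads, IoHandle output) {
    auto it = threads.find(output);
    return it == threads.end() ? nullptr : it->second.get();
}

// Like checkMixerThread_l(): the same lookup, but a DIRECT thread is rejected.
PlaybackThreadModel* checkMixerThread(const ThreadMap& threads, IoHandle output) {
    PlaybackThreadModel* thread = checkPlaybackThread(threads, output);
    return (thread != nullptr && thread->type != ThreadType::Direct) ? thread : nullptr;
}
```

Looking up a handle that was never registered yields `nullptr`. openDuplicateOutput relies on this to detect an invalid output (that check sits in the elided `....` part of the excerpt).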

Next, let's look at DuplicatingThread's constructor.

```cpp
class DuplicatingThread : public MixerThread {
    ......
};

AudioFlinger::DuplicatingThread::DuplicatingThread(const sp<AudioFlinger>& audioFlinger,
        AudioFlinger::MixerThread* mainThread, audio_io_handle_t id, bool systemReady)
    :   MixerThread(audioFlinger, mainThread->getOutput(), id, mainThread->outDevice(),
                    systemReady, DUPLICATING),
        mWaitTimeMs(UINT_MAX)
{
    addOutputTrack(mainThread);
}

void AudioFlinger::DuplicatingThread::addOutputTrack(MixerThread *thread)
{
    sp<OutputTrack> outputTrack = new OutputTrack(thread, this, mSampleRate, mFormat, mChannelMask, frameCount, ...);
    mOutputTracks.add(outputTrack); // so this is where the outputTracks used in threadLoop_write come from
}
```

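So DuplicatingThread already creates an OutputTrack for thread1 in its constructor. The second OutputTrack is created by thread->addOutputTrack(thread2);. This confirms the guess: a DuplicatingThread holds one OutputTrack per underlying MixerThread.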
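Put together, the DuplicatingThread works like this: one output track is added for the main thread in the constructor and one for the second thread via addOutputTrack, and each write loop copies the same mixed buffer into every track. The sketch below captures only that fan-out. `MixerThreadModel`, `OutputTrackModel` and `DuplicatingThreadModel` are hypothetical names. Here a "thread" simply records the frames it receives. A real OutputTrack lives on its target MixerThread, which then mixes it like any other track; the sketch does not model that mixing step.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a downstream MixerThread: records what reaches it.
struct MixerThreadModel {
    std::vector<int16_t> received;
};

// Hypothetical stand-in for OutputTrack: forwards frames to one downstream thread.
struct OutputTrackModel {
    MixerThreadModel* target;
    void write(const std::vector<int16_t>& frames) {
        target->received.insert(target->received.end(), frames.begin(), frames.end());
    }
};

// Mirrors the structure of the excerpts: the constructor adds a track for the main thread,
// addOutputTrack() adds one for the second thread, and threadLoopWrite() fans out.
class DuplicatingThreadModel {
public:
    explicit DuplicatingThreadModel(MixerThreadModel* mainThread) { addOutputTrack(mainThread); }

    void addOutputTrack(MixerThreadModel* thread) { mOutputTracks.push_back(OutputTrackModel{thread}); }

    std::size_t trackCount() const { return mOutputTracks.size(); }

    // Like threadLoop_write(): no HAL write; the same mixed buffer goes to every output track.
    std::size_t threadLoopWrite(const std::vector<int16_t>& sinkBuffer) {
        for (auto& track : mOutputTracks) {
            track.write(sinkBuffer);
        }
        return sinkBuffer.size();
    }

private:
    std::vector<OutputTrackModel> mOutputTracks;
};
```

After `DuplicatingThreadModel dup(&a2dp); dup.addOutputTrack(&primary);`, a single `threadLoopWrite` call delivers identical frames to both threads. The mapping of a2dp to the main thread follows the argument order of the `openDuplicateOutput(output, mPrimaryOutput->mIoHandle)` call.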

We have now covered all three phases of checkOutputsForDevice. Let's return to setDeviceConnectionStateInt and continue from there.

```cpp
status_t AudioPolicyManager::setDeviceConnectionStateInt(audio_devices_t device,
                                                         audio_policy_dev_state_t state,
                                                         const char *device_address,
                                                         const char *device_name)
{
    ......
    if (audio_is_output_device(device)) {
        ......
        checkOutputForAllStrategies(true /*doNotMute*/);
    }
}
```

```cpp
void AudioPolicyManager::checkOutputForAllStrategies(bool doNotMute)
{
    if (mEngine->getForceUse(AUDIO_POLICY_FORCE_FOR_SYSTEM) == AUDIO_POLICY_FORCE_SYSTEM_ENFORCED)
        checkOutputForStrategy(STRATEGY_ENFORCED_AUDIBLE, doNotMute);
    checkOutputForStrategy(STRATEGY_PHONE, doNotMute);
    if (mEngine->getForceUse(AUDIO_POLICY_FORCE_FOR_SYSTEM) != AUDIO_POLICY_FORCE_SYSTEM_ENFORCED)
        checkOutputForStrategy(STRATEGY_ENFORCED_AUDIBLE, doNotMute);
    checkOutputForStrategy(STRATEGY_SONIFICATION, doNotMute);
    checkOutputForStrategy(STRATEGY_SONIFICATION_RESPECTFUL, doNotMute);
    checkOutputForStrategy(STRATEGY_ACCESSIBILITY, doNotMute);
    checkOutputForStrategy(STRATEGY_MEDIA, doNotMute);
    checkOutputForStrategy(STRATEGY_DTMF, doNotMute);
    checkOutputForStrategy(STRATEGY_REROUTING, doNotMute);
}
```
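The only data-dependent part of checkOutputForAllStrategies is the position of STRATEGY_ENFORCED_AUDIBLE. It is checked before STRATEGY_PHONE when the system force-use is `AUDIO_POLICY_FORCE_SYSTEM_ENFORCED`, and right after it otherwise. The sketch below returns the visiting order as strings so the two cases can be compared. `strategyCheckOrder` is a hypothetical helper, and the names drop the `STRATEGY_` prefix.

```cpp
#include <initializer_list>
#include <string>
#include <vector>

// Hypothetical helper: the order in which checkOutputForAllStrategies() visits strategies.
std::vector<std::string> strategyCheckOrder(bool systemEnforced) {
    std::vector<std::string> order;
    if (systemEnforced) {
        order.push_back("ENFORCED_AUDIBLE");  // checked first when system audibility is enforced
    }
    order.push_back("PHONE");
    if (!systemEnforced) {
        order.push_back("ENFORCED_AUDIBLE");  // otherwise checked right after PHONE
    }
    for (const char* strategy : {"SONIFICATION", "SONIFICATION_RESPECTFUL", "ACCESSIBILITY",
                                 "MEDIA", "DTMF", "REROUTING"}) {
        order.push_back(strategy);
    }
    return order;
}
```

Both orders visit the same eight strategies; only the first two positions swap.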

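checkOutputForAllStrategies calls checkOutputForStrategy for each strategy. checkOutputForStrategy in turn calls getDeviceForStrategy to obtain the corresponding audio_devices_t, and getDeviceForStrategy calls mEngine->getDeviceForStrategy(strategy). Let's see what that function does: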

```cpp
audio_devices_t Engine::getDeviceForStrategy(routing_strategy strategy) const
{
    // Get availableOutputDevices. The a2dp output device was added to this vector earlier,
    // so it is now visible here.
    DeviceVector availableOutputDevices = mApmObserver->getAvailableOutputDevices();
    DeviceVector availableInputDevices = mApmObserver->getAvailableInputDevices();
    const SwAudioOutputCollection &outputs = mApmObserver->getOutputs();
    return getDeviceForStrategyInt(strategy, availableOutputDevices,
                                   availableInputDevices, outputs);
}
```

```cpp
audio_devices_t Engine::getDeviceForStrategyInt(routing_strategy strategy,
                                                DeviceVector availableOutputDevices,
                                                DeviceVector availableInputDevices,
                                                const SwAudioOutputCollection &outputs) const
{
    uint32_t device = AUDIO_DEVICE_NONE;
    uint32_t availableOutputDevicesType = availableOutputDevices.types();
    switch (strategy) {
    case STRATEGY_TRANSMITTED_THROUGH_SPEAKER:
        device = availableOutputDevicesType & AUDIO_DEVICE_OUT_SPEAKER;
        break;
    // ... (abridged excerpt: other cases and nesting omitted)
    default: // FORCE_NONE
        if (!isInCall() &&
                (mForceUse[AUDIO_POLICY_FORCE_FOR_MEDIA] != AUDIO_POLICY_FORCE_NO_BT_A2DP) &&
                (outputs.getA2dpOutput() != 0)) {
            device = availableOutputDevicesType & AUDIO_DEVICE_OUT_BLUETOOTH_A2DP;
            if (device) break;
            device = availableOutputDevicesType & AUDIO_DEVICE_OUT_BLUETOOTH_A2DP_HEADPHONES;
            if (device) break;
        }
        device = availableOutputDevicesType & AUDIO_DEVICE_OUT_WIRED_HEADPHONE;
        if (device) break;
        device = availableOutputDevicesType & AUDIO_DEVICE_OUT_WIRED_HEADSET;
        if (device) break;
        device = availableOutputDevicesType & AUDIO_DEVICE_OUT_USB_HEADSET;
        if (device) break;
        device = availableOutputDevicesType & AUDIO_DEVICE_OUT_USB_DEVICE;
        ...
    }
    ...
    return device;
}
```

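So checkOutputForStrategy ultimately reaches getDeviceForStrategyInt, which returns a device that depends on the strategy. This is what lets different streams take different paths. For example, the music stream plays only through the a2dp output, while the ringtone stream AUDIO_STREAM_RING plays through primary and the a2dp output at the same time.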
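The excerpt is a priority cascade over a bitmask of available devices. A2DP is preferred when not in a call, not forced off, and an A2DP output exists. After that come wired headphones, a wired headset, a USB headset, and finally a USB device. The sketch below reproduces only that cascade. `RoutingInputs` and `pickDefaultDevice` are hypothetical names. The constants are meant to mirror the `AUDIO_DEVICE_OUT_*` values in `audio-base.h` but are illustrative. The sketch stops where the excerpt stops, whereas the real function falls back to further devices.

```cpp
#include <cstdint>
#include <initializer_list>

// Illustrative device bits, intended to mirror AUDIO_DEVICE_OUT_* in audio-base.h.
constexpr uint32_t kOutSpeaker        = 0x2;
constexpr uint32_t kOutWiredHeadset   = 0x4;
constexpr uint32_t kOutWiredHeadphone = 0x8;
constexpr uint32_t kOutA2dp           = 0x80;
constexpr uint32_t kOutA2dpHeadphones = 0x100;
constexpr uint32_t kOutUsbDevice      = 0x4000;
constexpr uint32_t kOutUsbHeadset     = 0x4000000;

// Hypothetical bundle of the inputs the excerpt consults.
struct RoutingInputs {
    uint32_t available;   // availableOutputDevicesType
    bool inCall;          // isInCall()
    bool forceNoBtA2dp;   // mForceUse[FOR_MEDIA] == AUDIO_POLICY_FORCE_NO_BT_A2DP
    bool hasA2dpOutput;   // outputs.getA2dpOutput() != 0
};

// First available device in the excerpt's priority order; 0 plays the role of AUDIO_DEVICE_NONE.
uint32_t pickDefaultDevice(const RoutingInputs& in) {
    if (!in.inCall && !in.forceNoBtA2dp && in.hasA2dpOutput) {
        for (uint32_t d : {kOutA2dp, kOutA2dpHeadphones}) {
            if (in.available & d) return d;
        }
    }
    for (uint32_t d : {kOutWiredHeadphone, kOutWiredHeadset, kOutUsbHeadset}) {
        if (in.available & d) return d;
    }
    return in.available & kOutUsbDevice;  // last step of the excerpt; may be 0
}
```

Once the a2dp output exists, `{kOutSpeaker | kOutA2dp, false, false, true}` resolves to `kOutA2dp`. During a call the cascade skips A2DP and falls through to the wired devices.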

```cpp
void AudioPolicyManager::checkOutputForStrategy(routing_strategy strategy, bool doNotMute)
{
    audio_devices_t oldDevice = getDeviceForStrategy(strategy, true /*fromCache*/);
    audio_devices_t newDevice = getDeviceForStrategy(strategy, false /*fromCache*/);
    SortedVector<audio_io_handle_t> srcOutputs = getOutputsForDevice(oldDevice, mPreviousOutputs);
    SortedVector<audio_io_handle_t> dstOutputs = getOutputsForDevice(newDevice, mOutputs);
    .....
    // If the outputs used by this strategy changed (e.g. from primary to the a2dp output),
    // move the strategy's tracks
    if (!vectorsEqual(srcOutputs, dstOutputs)) {
        for (size_t i = 0; i < srcOutputs.size(); i++) {
            sp<SwAudioOutputDescriptor> desc = mOutputs.valueFor(srcOutputs[i]);
            if (isStrategyActive(desc, strategy) && !doNotMute) {
                setStrategyMute(strategy, true, desc); // my reading: mute first, otherwise a pop may be heard
                setStrategyMute(strategy, false, desc, MUTE_TIME_MS, newDevice);
            }
            sp<AudioSourceDescriptor> source =
                    getSourceForStrategyOnOutput(srcOutputs[i], strategy); // no source on my platform; probably not implemented there
            if (source != 0) {
                connectAudioSource(source);
            }
        }
        // For STRATEGY_MEDIA, the audio effects must also be moved to the new output
        if (strategy == STRATEGY_MEDIA) {
            selectOutputForMusicEffects();
        }
        for (int i = 0; i < AUDIO_STREAM_FOR_POLICY_CNT; i++) {
            if (getStrategy((audio_stream_type_t)i) == strategy) {
                mpClientInterface->invalidateStream((audio_stream_type_t)i); // invalidate the matching stream
            }
        }
    }
}
```
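Stripped of muting and effect handling, checkOutputForStrategy compares the strategy's old and new output sets and, if they differ, invalidates every stream that maps to the strategy. The sketch below isolates that decision. `streamsToInvalidate` is a hypothetical helper. The stream-to-strategy table passed to it is illustrative and stands in for `getStrategy()`, not the Engine's full mapping.

```cpp
#include <algorithm>
#include <map>
#include <string>
#include <vector>

using IoHandle = int;

// Hypothetical sketch of the decision in checkOutputForStrategy(); muting and effect moves
// are left out. streamToStrategy is an illustrative stand-in for getStrategy().
std::vector<std::string> streamsToInvalidate(std::vector<IoHandle> srcOutputs,
                                             std::vector<IoHandle> dstOutputs,
                                             const std::string& strategy,
                                             const std::map<std::string, std::string>& streamToStrategy) {
    std::vector<std::string> streams;
    // Like vectorsEqual() on sorted output lists: same outputs means nothing has to move.
    std::sort(srcOutputs.begin(), srcOutputs.end());
    std::sort(dstOutputs.begin(), dstOutputs.end());
    if (srcOutputs == dstOutputs) {
        return streams;
    }
    // Otherwise every stream mapped to this strategy is invalidated, so its client
    // recreates the track and the new track lands on the new output.
    for (const auto& entry : streamToStrategy) {
        if (entry.second == strategy) {
            streams.push_back(entry.first);
        }
    }
    return streams;
}
```

With hypothetical handles, moving MEDIA from output 13 (primary) to output 29 (a2dp) yields only the music stream. A strategy whose outputs are unchanged yields nothing.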

Now let's look at what invalidateStream does.

```cpp
status_t AudioFlinger::invalidateStream(audio_stream_type_t stream)
{
    for (size_t i = 0; i < mPlaybackThreads.size(); i++) {
        PlaybackThread *thread = mPlaybackThreads.valueAt(i).get(); // take each PlaybackThread
        thread->invalidateTracks(stream);
    }
    ......
    return NO_ERROR;
}

void AudioFlinger::PlaybackThread::invalidateTracks(audio_stream_type_t streamType)
{
    Mutex::Autolock _l(mLock);
    invalidateTracks_l(streamType);
}

bool AudioFlinger::PlaybackThread::invalidateTracks_l(audio_stream_type_t streamType)
{
    bool trackMatch = false;
    size_t size = mTracks.size();
    for (size_t i = 0; i < size; i++) {
        sp<Track> t = mTracks[i];
        if (t->streamType() == streamType && t->isExternalTrack()) {
            t->invalidate(); // mark the Track as invalid
            trackMatch = true;
        }
    }
    return trackMatch;
}

void AudioFlinger::PlaybackThread::Track::invalidate()
{
    TrackBase::invalidate();
    signalClientFlag(CBLK_INVALID);
}
```

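So invalidateStream only marks the matching Tracks. For example, invalidating the music stream flags each of its Tracks with CBLK_INVALID. The client AudioTrack detects this flag when it calls obtainBuffer (AudioTrack::obtainBuffer and the client proxy's obtainBuffer underneath it) or getPosition. It then calls restoreTrack_l, which calls createTrack_l to recreate the track. The new track is routed to the expected output.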
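The invalidation is a one-way signal: the server side only raises a flag in shared state, and the client discovers it lazily and rebuilds the track. The sketch below models that handshake with a `std::atomic` flag word. `ControlBlockModel`, `serverInvalidate` and `ClientTrackModel` are hypothetical. `kCblkInvalid` is an illustrative bit, not necessarily the real value of `CBLK_INVALID`. The real control block is `audio_track_cblk_t` in shared memory, and the real check happens inside the client proxy.

```cpp
#include <atomic>
#include <cstdint>
#include <memory>

// Illustrative bit standing in for CBLK_INVALID.
constexpr int32_t kCblkInvalid = 0x04;

// Hypothetical stand-in for the shared control block.
struct ControlBlockModel {
    std::atomic<int32_t> flags{0};
};

// Server side, like Track::invalidate() + signalClientFlag(): only a flag is raised.
void serverInvalidate(ControlBlockModel& cblk) {
    cblk.flags.fetch_or(kCblkInvalid);
}

// Client side, loosely like AudioTrack: the flag is noticed on the next buffer request and the
// track is rebuilt (restoreTrack_l() -> createTrack_l() in the real code).
class ClientTrackModel {
public:
    // Returns how many times the track has been recreated so far.
    int obtainBuffer() {
        if (mCblk->flags.load() & kCblkInvalid) {
            restoreTrack();
        }
        return mRestoreCount;
    }

    ControlBlockModel& controlBlock() { return *mCblk; }

private:
    void restoreTrack() {
        // A recreated track comes with a fresh control block; recreating it is what
        // lets the policy place the new track on the newly selected output.
        mCblk = std::make_unique<ControlBlockModel>();
        ++mRestoreCount;
    }

    std::unique_ptr<ControlBlockModel> mCblk = std::make_unique<ControlBlockModel>();
    int mRestoreCount = 0;
};
```

The server never blocks on the client, and the client pays the rebuild cost once, on its next buffer request after the flag is set.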