mtk audio

In earlier versions, AF (AudioFlinger) was started in Main_MediaServer.cpp; in Android N, AF is started in main_audioserver.cpp.

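AudioFlinger::instantiate() is not defined by AudioFlinger itself; it comes from the BinderService template class. Several services, including AudioFlinger and AudioPolicy, inherit from this common Binder service class. The implementation is in BinderService.h: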

// frameworks/native/include/binder/BinderService.h

static status_t publish(bool allowIsolated = false) {

sp<IServiceManager> sm(defaultServiceManager());

// SERVICE is the template parameter defined in this file. AudioFlinger called instantiate(),

// so here SERVICE is AudioFlinger

return sm->addService(

String16(SERVICE::getServiceName()),

new SERVICE(), allowIsolated);

}

static void instantiate() { publish(); }

As shown, publish() obtains a proxy to the ServiceManager, creates an instance of whichever service called instantiate(), and registers it with the ServiceManager.
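The template mechanism described above can be sketched outside Android as follows. This is only an illustration: FakeServiceManager, ServiceBase, BinderServiceSketch and FakeAudioFlinger are hypothetical stand-ins, not the real binder classes. The one detail taken from the platform is the service name string "media.audio_flinger".

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical base type so the registry can hold any service.
struct ServiceBase { virtual ~ServiceBase() = default; };

// Hypothetical stand-in for the ServiceManager: a name -> object registry.
class FakeServiceManager {
public:
    static FakeServiceManager& get() {
        static FakeServiceManager sm;
        return sm;
    }
    // Plays the role of addService(); returns 0 on success.
    int addService(const std::string& name, std::shared_ptr<ServiceBase> svc) {
        mServices[name] = std::move(svc);
        return 0;
    }
    bool has(const std::string& name) const { return mServices.count(name) != 0; }
private:
    std::map<std::string, std::shared_ptr<ServiceBase>> mServices;
};

// Same shape as BinderService<SERVICE>: the template parameter is the
// derived service, so publish() can create and register it by name.
template <typename SERVICE>
class BinderServiceSketch {
public:
    static int publish() {
        return FakeServiceManager::get().addService(
                SERVICE::getServiceName(), std::make_shared<SERVICE>());
    }
    static void instantiate() { publish(); }
};

// Hypothetical service that inherits instantiate() from the template.
class FakeAudioFlinger : public ServiceBase,
                         public BinderServiceSketch<FakeAudioFlinger> {
public:
    static const char* getServiceName() { return "media.audio_flinger"; }
};
```

Calling FakeAudioFlinger::instantiate() registers one FakeAudioFlinger object under its service name, which is the same division of labor the real publish() has with AudioFlinger.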

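So the next step is to analyze the AudioFlinger constructor.

AF is the executor; APS (AudioPolicyService) is the policy planner.

All audio devices are loaded from audio_policy.conf in the AudioPolicyManager constructor.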

void AudioPolicyService::onFirstRef()

{

{

Mutex::Autolock _l(mLock);

// start tone playback thread

mTonePlaybackThread = new AudioCommandThread(String8("ApmTone"), this);

// start audio commands thread

mAudioCommandThread = new AudioCommandThread(String8("ApmAudio"), this);

// start output activity command thread

mOutputCommandThread = new AudioCommandThread(String8("ApmOutput"), this);

mAudioPolicyClient = new AudioPolicyClient(this);

mAudioPolicyManager = createAudioPolicyManager(mAudioPolicyClient);

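This shows that AudioSystem is the intermediary to other components: for example, Bluetooth or a wired headset uses the AudioSystem.setDeviceConnectionState interface to set a device's connection state in the audio system.

Along the way, MTK's own customization object is also created: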

extern "C" AudioPolicyInterface* createAudioPolicyManager(

AudioPolicyClientInterface *clientInterface)

{

audiopolicymanagerMTK = (AudioPolicyManagerCustomInterface*) new AudioPolicyManagerCustomImpl(); // MTK_AUDIO

return new AudioPolicyManager(clientInterface, audiopolicymanagerMTK);

}

mHwModules is collected from the configuration file audio_policy.conf:

for (size_t i = 0; i < mHwModules.size(); i++) {

mHwModules[i]->mHandle = mpClientInterface->loadHwModule(mHwModules[i]->getName());

if (mHwModules[i]->mHandle == 0) {

ALOGW("could not open HW module %s", mHwModules[i]->getName());

continue;

audio_module_handle_t AudioPolicyService::AudioPolicyClient::loadHwModule(const char *name)

{

sp<IAudioFlinger> af = AudioSystem::get_audio_flinger();

if (af == 0) {

ALOGW("%s: could not get AudioFlinger", __func__);

return AUDIO_MODULE_HANDLE_NONE;

}

return af->loadHwModule(name);

}

The AudioPolicyManager constructor goes through the mpClientInterface interface, which ultimately uses AF's loadHwModule:

// loadHwModule_l() must be called with AudioFlinger::mLock held

audio_module_handle_t AudioFlinger::loadHwModule_l(const char *name)

{

for (size_t i = 0; i < mAudioHwDevs.size(); i++) {

if (strncmp(mAudioHwDevs.valueAt(i)->moduleName(), name, strlen(name)) == 0) {

ALOGW("loadHwModule() module %s already loaded", name);

return mAudioHwDevs.keyAt(i);

}

}

sp<DeviceHalInterface> dev;

int rc = mDevicesFactoryHal->openDevice(name, &dev);

This eventually calls:

status_t DevicesFactoryHalLocal::openDevice(const char *name, sp<DeviceHalInterface> *device) {

audio_hw_device_t *dev;

status_t rc = load_audio_interface(name, &dev);

if (rc == OK) {

*device = new DeviceHalLocal(dev);

}

return rc;

}

static status_t load_audio_interface(const char *if_name, audio_hw_device_t **dev)

{

const hw_module_t *mod;

int rc;

rc = hw_get_module_by_class(AUDIO_HARDWARE_MODULE_ID, if_name, &mod);

if (rc) {

ALOGE("%s couldn't load audio hw module %s.%s (%s)", __func__,

AUDIO_HARDWARE_MODULE_ID, if_name, strerror(-rc));

goto out;

}

rc = audio_hw_device_open(mod, dev);

if (rc) {

ALOGE("%s couldn't open audio hw device in %s.%s (%s)", __func__,

AUDIO_HARDWARE_MODULE_ID, if_name, strerror(-rc));

goto out;

}

if ((*dev)->common.version < AUDIO_DEVICE_API_VERSION_MIN) {

ALOGE("%s wrong audio hw device version %04x", __func__, (*dev)->common.version);

rc = BAD_VALUE;

audio_hw_device_close(*dev);

goto out;

}

return OK;

out:

*dev = NULL;

return rc;

}

Load and open the audio_hw_device:

static inline int audio_hw_device_open(const struct hw_module_t* module,

struct audio_hw_device** device)

{

return module->methods->open(module, AUDIO_HARDWARE_INTERFACE,

TO_HW_DEVICE_T_OPEN(device));

}

struct legacy_audio_module HAL_MODULE_INFO_SYM = {

.module = {

.common = {

.tag = HARDWARE_MODULE_TAG,

.module_api_version = AUDIO_MODULE_API_VERSION_0_1,

.hal_api_version = HARDWARE_HAL_API_VERSION,

.id = AUDIO_HARDWARE_MODULE_ID,

.name = "MTK Audio HW HAL",

.author = "MTK",

.methods = &legacy_audio_module_methods,

.dso = NULL,

.reserved = {0},

},

},

static struct hw_module_methods_t legacy_audio_module_methods = {

.open = legacy_adev_open

};

Finally legacy_adev_open runs: it is the open method of this "MTK Audio HW HAL" interface, and it assigns the low-level HAL interface function pointers.
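The open path above is plain function-pointer dispatch: the caller only touches module->methods->open, and the vendor module decides what runs. A minimal sketch, assuming simplified hypothetical types (FakeModule, FakeModuleMethods, FakeDevice) in place of hw_module_t and audio_hw_device_t; the strings "audio" and "audio_hw_if" are the values of AUDIO_HARDWARE_MODULE_ID and AUDIO_HARDWARE_INTERFACE:

```cpp
#include <cstring>

// Simplified, hypothetical mirrors of hw_module_t / audio_hw_device_t.
struct FakeModule;
struct FakeDevice { const char* opened_by; };

struct FakeModuleMethods {
    int (*open)(const FakeModule* module, const char* id, FakeDevice** device);
};

struct FakeModule {
    const char* id;
    const char* name;
    const FakeModuleMethods* methods;
};

// Plays the role of legacy_adev_open(): validates the interface id,
// then fills in the device handed back to the caller.
static int fake_adev_open(const FakeModule* module, const char* id,
                          FakeDevice** device) {
    if (std::strcmp(id, "audio_hw_if") != 0) return -22;  // -EINVAL
    static FakeDevice dev;
    dev.opened_by = module->name;
    *device = &dev;
    return 0;
}

static const FakeModuleMethods kMethods = { fake_adev_open };
static const FakeModule kModule = { "audio", "MTK Audio HW HAL", &kMethods };

// Same shape as audio_hw_device_open(): no knowledge of the vendor code.
inline int fake_audio_hw_device_open(const FakeModule* module,
                                     FakeDevice** device) {
    return module->methods->open(module, "audio_hw_if", device);
}
```

The framework side stays identical for every vendor; only the function stored in the methods table changes.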

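Combined with audio_interfaces in AF, this loads the three main interfaces, their corresponding .so libraries, and the devices under each node: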

#define AUDIO_HARDWARE_MODULE_ID_PRIMARY "primary"

#define AUDIO_HARDWARE_MODULE_ID_A2DP "a2dp"

#define AUDIO_HARDWARE_MODULE_ID_USB "usb"

static const char * const audio_interfaces[] = {

AUDIO_HARDWARE_MODULE_ID_PRIMARY,

AUDIO_HARDWARE_MODULE_ID_A2DP,

AUDIO_HARDWARE_MODULE_ID_USB,

};

together with the configuration file audio_policy.conf.

Traversing the primary node loads audio.primary.mt6580.so:

primary {

global_configuration {

attached_output_devices AUDIO_DEVICE_OUT_SPEAKER|AUDIO_DEVICE_OUT_EARPIECE

default_output_device AUDIO_DEVICE_OUT_SPEAKER

attached_input_devices AUDIO_DEVICE_IN_BUILTIN_MIC|AUDIO_DEVICE_IN_FM_TUNER|AUDIO_DEVICE_IN_VOICE_CALL

audio_hal_version 3.0

}

devices {

headset {

type AUDIO_DEVICE_OUT_WIRED_HEADSET

gains {

gain_1 {

mode AUDIO_GAIN_MODE_JOINT

channel_mask AUDIO_CHANNEL_OUT_

Traversing the a2dp node loads audio.a2dp.default.so:

a2dp {

global_configuration {

audio_hal_version 2.0

}

outputs {

a2dp {

sampling_rates 44100

channel_masks AUDIO_CHANNEL_OUT_STEREO

formats AUDIO_FORMAT_PCM_16_BIT

devices AUDIO_DEVICE_OUT_ALL_A2DP

}

}

inputs {

Traversing the usb node loads audio.usb.mt6580.so:

usb {

global_configuration {

audio_hal_version 2.0

}

outputs {

usb_accessory {

sampling_rates 44100

channel_masks AUDIO_CHANNEL_OUT_STEREO

formats AUDIO_FORMAT_PCM_16_BIT

devices AUDIO_DEVICE_OUT_USB_ACCESSORY

}

usb_device {

sampling_rates dyna

The three main interfaces are loaded in AF:

audio_module_handle_t AudioFlinger::loadHwModule(const char *name)

{

if (name == NULL) {

return AUDIO_MODULE_HANDLE_NONE;

}

if (!settingsAllowed()) {

return AUDIO_MODULE_HANDLE_NONE;

}

Mutex::Autolock _l(mLock);

return loadHwModule_l(name);

}

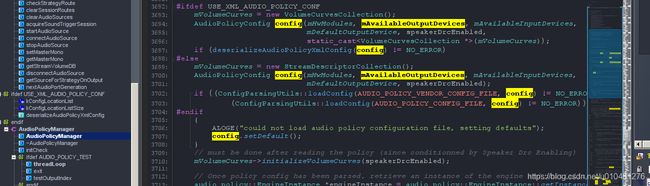

From audio_policy.conf we can see that the system includes audio interfaces such as primary, a2dp, and usb, corresponding to the system's audio.
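The library names seen above (audio.primary.mt6580.so, audio.a2dp.default.so) follow a class.interface.variant pattern. The helper below is a hypothetical sketch of that naming only: halLibraryCandidates is not a platform function, and the real lookup also involves search directories and system properties that are left out here.

```cpp
#include <string>
#include <vector>

// Builds candidate file names in lookup order: the platform variant first,
// then the "default" fallback. classId "audio" corresponds to
// AUDIO_HARDWARE_MODULE_ID; the variant string is supplied by the caller.
std::vector<std::string> halLibraryCandidates(const std::string& classId,
                                              const std::string& interfaceName,
                                              const std::string& variant) {
    const std::string base = classId + "." + interfaceName;
    std::vector<std::string> names;
    if (!variant.empty()) {
        names.push_back(base + "." + variant + ".so");
    }
    names.push_back(base + ".default.so");
    return names;
}
```

With "audio", "primary" and "mt6580" the first candidate is audio.primary.mt6580.so; with no variant, a2dp resolves to audio.a2dp.default.so, matching the names listed earlier.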

audiomanager.setParameters eventually calls AudioFlinger::setParameters:

status_t AudioFlinger::setParameters(audio_io_handle_t ioHandle, const String8& keyValuePairs)

{

ALOGV("setParameters(): io %d, keyvalue %s, calling pid %d",

ioHandle, keyValuePairs.string(), IPCThreadState::self()->getCallingPid());

// check calling permissions

if (!settingsAllowed()) {

return PERMISSION_DENIED;

}

// AUDIO_IO_HANDLE_NONE means the parameters are global to the audio hardware interface

if (ioHandle == AUDIO_IO_HANDLE_NONE) {

Mutex::Autolock _l(mLock);

// result will remain NO_INIT if no audio device is present

status_t final_result = NO_INIT;

{

AutoMutex lock(mHardwareLock);

mHardwareStatus = AUDIO_HW_SET_PARAMETER;

for (size_t i = 0; i < mAudioHwDevs.size(); i++) {

sp<DeviceHalInterface> dev = mAudioHwDevs.valueAt(i)->hwDevice();

// return success if at least one audio device accepts the parameters as not all

// HALs are requested to support all parameters. If no audio device supports the

// requested parameters, the last error is reported.

status_t result = dev->setParameters(keyValuePairs);

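For the logic above the HAL, finding the ID and the corresponding name is enough to locate the module to use. Even with 100 vendors each shipping 100 modules, everything still follows the same well-defined standard. This is a core idea of object-oriented programming: programming to an interface. However the logic changes, the interface must not change, which keeps the software loosely coupled and portable.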
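The interface idea, together with the rule stated in the setParameters comments (success if at least one device accepts, otherwise the last error), can be sketched like this. DeviceSketch, AcceptingDevice, RejectingDevice and the status values are hypothetical; only the aggregation rule is taken from the comments in the listing above.

```cpp
#include <memory>
#include <string>
#include <vector>

// Hypothetical status values for this sketch only.
constexpr int kOk = 0;
constexpr int kNoInit = -19;
constexpr int kInvalid = -38;

// The caller depends only on this interface, never on a vendor class.
struct DeviceSketch {
    virtual ~DeviceSketch() = default;
    virtual int setParameters(const std::string& keyValuePairs) = 0;
};

struct AcceptingDevice : DeviceSketch {
    int setParameters(const std::string&) override { return kOk; }
};

struct RejectingDevice : DeviceSketch {
    int setParameters(const std::string&) override { return kInvalid; }
};

// Success if at least one device accepts; otherwise the last error.
// The result stays kNoInit when there are no devices at all.
int setParametersOnAll(const std::vector<std::unique_ptr<DeviceSketch>>& devs,
                       const std::string& keyValuePairs) {
    int finalResult = kNoInit;
    for (const auto& dev : devs) {
        const int result = dev->setParameters(keyValuePairs);
        if (finalResult != kOk) {
            finalResult = result;  // keep kOk once any device has succeeded
        }
    }
    return finalResult;
}
```

Adding a new vendor device means adding another DeviceSketch subclass; setParametersOnAll does not change.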

.id = AUDIO_HARDWARE_MODULE_ID,

.name = "MTK Audio HW HAL",

vendor/mediatek/proprietary/hardware/audio/common/aud_drv/audio_hw_hal.cpp

ladev->device.set_parameters = adev_set_parameters;

static int adev_set_parameters(struct audio_hw_device *dev, const char *kvpairs) {

#ifdef AUDIO_HAL_PROFILE_ENTRY_FUNCTION

AudioAutoTimeProfile _p(__func__);

#endif

struct legacy_audio_device *ladev = to_ladev(dev);

return ladev->hwif->setParameters(String8(kvpairs));

}

where

ladev->hwif->setParameters(String8(kvpairs));

ladev->hwif = createMTKAudioHardware();

AudioMTKHardwareInterface *AudioMTKHardwareInterface::create() {

/*

* FIXME: This code needs to instantiate the correct audio device

* interface. For now - we use compile-time switches.

*/

AudioMTKHardwareInterface *hw = 0;

char value[PROPERTY_VALUE_MAX];

ALOGV("Creating MTK AudioHardware");

//hw = new android::AudioALSAHardware();

hw = android::AudioALSAHardware::GetInstance();

return hw;

}

extern "C" AudioMTKHardwareInterface *createMTKAudioHardware() {

/*

* FIXME: This code needs to instantiate the correct audio device

* interface. For now - we use compile-time switches.

*/

return AudioMTKHardwareInterface::create();

}

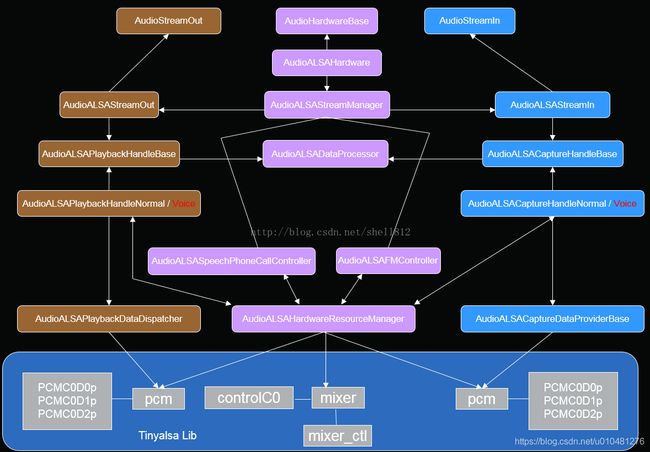

That is, ladev->hwif is the AudioALSAHardware instance, and the methods called are its methods.

So what is ultimately called is:

status_t AudioALSAHardware::setParameters(const String8 &keyValuePairs) {

AudioALSAHardware::setParameters() is the function that finally executes Audio setParameters().
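The chain above (C function pointer, then to_ladev, then hwif->setParameters) is a common way to wrap a C++ object behind a C device table. Below is a minimal sketch with hypothetical names (HardwareSketch, DeviceTable, LegacyDevice); it relies on the table being the first member of the wrapper struct, which is what makes the pointer cast valid.

```cpp
#include <string>

// Hypothetical C++ hardware object, standing in for the vendor class
// that is obtained through a GetInstance()-style singleton.
class HardwareSketch {
public:
    static HardwareSketch* GetInstance() {
        static HardwareSketch hw;
        return &hw;
    }
    int setParameters(const std::string& keyValuePairs) {
        mLast = keyValuePairs;
        return 0;
    }
    const std::string& last() const { return mLast; }
private:
    std::string mLast;
};

// C-style device table, as the framework sees it.
struct DeviceTable {
    int (*set_parameters)(DeviceTable* dev, const char* kvpairs);
};

// Wrapper: the table must be the FIRST member so that a pointer to the
// table is also a pointer to the wrapper.
struct LegacyDevice {
    DeviceTable device;
    HardwareSketch* hwif;
};

static LegacyDevice* toLegacy(DeviceTable* dev) {
    return reinterpret_cast<LegacyDevice*>(dev);
}

// C entry point that forwards to the C++ object.
static int adevSetParametersSketch(DeviceTable* dev, const char* kvpairs) {
    return toLegacy(dev)->hwif->setParameters(kvpairs);
}

// Wires the table to the wrapper function and the singleton instance.
inline LegacyDevice makeLegacyDevice() {
    LegacyDevice ladev{};
    ladev.device.set_parameters = adevSetParametersSketch;
    ladev.hwif = HardwareSketch::GetInstance();
    return ladev;
}
```

The caller holds only a DeviceTable pointer, yet the call lands in the singleton's setParameters, which mirrors how a framework call reaches the vendor hardware class.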

In the following data structure, struct audio_stream_out stream; is the part AF/APS uses; it is platform-independent data.

struct legacy_stream_out {

struct audio_stream_out stream;

AudioMTKStreamOutInterface *legacy_out;

};

vendor/mediatek/proprietary/hardware/audio/common/aud_drv/audio_hw_hal.cpp

out->legacy_out = ladev->hwif->openOutputStreamWithFlags(devices, flags,

(int *) &config->format,

&config->channel_mask,

&config->sample_rate, &status);

AudioALSAHardware.cpp

AudioMTKStreamOutInterface *AudioALSAHardware::openOutputStreamWithFlags(uint32_t devices,

audio_output_flags_t flags,

int *format,

uint32_t *channels,

uint32_t *sampleRate,

status_t *status) {

return mStreamManager->openOutputStream(devices, format, channels, sampleRate, status, flags);

//AudioMTKStreamOutInterface *AudioALSAStreamManager::openOutputStream(

}

Next, in AudioALSAStreamManager.cpp, the following function returns an AudioALSAStreamOut:

AudioMTKStreamOutInterface *AudioALSAStreamManager::openOutputStream(

So the out->legacy_out used by out_write is an AudioALSAStreamOut, and out_write on struct audio_stream_out *stream becomes a call to AudioALSAStreamOut::write():

static ssize_t out_write(struct audio_stream_out *stream, const void *buffer,

size_t bytes) {

#ifdef AUDIO_HAL_PROFILE_ENTRY_FUNCTION

AudioAutoTimeProfile _p(__func__, AUDIO_HAL_FUNCTION_WRITE_NS);

#endif

struct legacy_stream_out *out =

reinterpret_cast<struct legacy_stream_out *>(stream);

return out->legacy_out->write(buffer, bytes);

}

Reposted from https://blog.csdn.net/bberdong/article/details/78346729

Audio write data flow:

AudioTrack->write

AudioFlinger::PlaybackThread::threadLoop_write()

mNormalSink->write

mNormalSink is actually an NBAIO_Sink, and its implementation class is AudioStreamOutSink.

So let's look directly at

frameworks/av/media/libnbaio/AudioStreamOutSink.cpp

// excerpt from AudioStreamOutSink::write

status_t ret = mStream->write(buffer, count * mFrameSize, &written);

//AudioStreamOutSink.h

sp<StreamOutHalInterface> mStream;

Sure enough, the type of mStream has become StreamOutHalInterface (on Android 5.1 it was audio_stream_out).

We also find a new directory under frameworks/av/media/:

libaudiohal

Android.mk DeviceHalLocal.h DevicesFactoryHalLocal.h EffectHalHidl.h EffectsFactoryHalLocal.h StreamHalLocal.h

ConversionHelperHidl.cpp DevicesFactoryHalHidl.cpp EffectBufferHalHidl.cpp EffectHalLocal.cpp HalDeathHandlerHidl.cpp

ConversionHelperHidl.h DevicesFactoryHalHidl.h EffectBufferHalHidl.h EffectHalLocal.h include

DeviceHalHidl.cpp DevicesFactoryHalHybrid.cpp EffectBufferHalLocal.cpp EffectsFactoryHalHidl.cpp StreamHalHidl.cpp

DeviceHalHidl.h DevicesFactoryHalHybrid.h EffectBufferHalLocal.h EffectsFactoryHalHidl.h StreamHalHidl.h

DeviceHalLocal.cpp DevicesFactoryHalLocal.cpp EffectHalHidl.cpp EffectsFactoryHalLocal.cpp StreamHalLocal.cpp

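Judging from the file names, one group ends in Hidl and the other in Local. The Local ones are presumably there for compatibility with the previous approach.

The implementation class of StreamOutHalInterface lives here: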

class StreamOutHalHidl : public StreamOutHalInterface, public StreamHalHidl

Continuing the write flow:

StreamOutHalHidl::write

callWriterThread(WriteCommand::WRITE,…

//StreamOutHalHidl::callWriterThread

if (!mCommandMQ->write(&cmd)) {

ALOGE("command message queue write failed for \"%s\"", cmdName);

return -EAGAIN;

}

if (data != nullptr) {

size_t availableToWrite = mDataMQ->availableToWrite();

if (dataSize > availableToWrite) {

ALOGW("truncating write data from %lld to %lld due to insufficient data queue space",

(long long)dataSize, (long long)availableToWrite);

dataSize = availableToWrite;

}

if (!mDataMQ->write(data, dataSize)) {

ALOGE("data message queue write failed for \"%s\"", cmdName);

}

}

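mDataMQ:

typedef MessageQueue<uint8_t, hardware::kSynchronizedReadWrite> DataMQ;

Look at the top of this file:

#include <fmq/MessageQueue.h>

So it's fmq!

First, look at WriteCommand: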

//StreamHalHidl.h

using WriteCommand = ::android::hardware::audio::V2_0::IStreamOut::WriteCommand;


At this point the traces of binderization are obvious: a cross-process call is about to begin!

fmq (Fast Message Queue) is the key to making this cross-process path work!
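The truncation step in the callWriterThread excerpt (clamp dataSize to availableToWrite, then write) can be tried out with an in-process stand-in. DataQueueSketch and writeTruncated are hypothetical names; the real FMQ lives in shared memory between processes, which this sketch does not attempt to model. It only assumes an all-or-nothing write, as the excerpt's use of availableToWrite() suggests.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// In-process, single-threaded stand-in for a bounded data queue.
class DataQueueSketch {
public:
    explicit DataQueueSketch(size_t capacity) : mCapacity(capacity) {}

    size_t availableToWrite() const { return mCapacity - mData.size(); }
    size_t availableToRead() const { return mData.size(); }

    // All-or-nothing write: fails when there is not enough free space.
    bool write(const uint8_t* data, size_t size) {
        if (size > availableToWrite()) return false;
        mData.insert(mData.end(), data, data + size);
        return true;
    }

    // All-or-nothing read of exactly `size` bytes.
    bool read(uint8_t* out, size_t size) {
        if (size > mData.size()) return false;
        const auto end = mData.begin() + static_cast<std::ptrdiff_t>(size);
        std::copy(mData.begin(), end, out);
        mData.erase(mData.begin(), end);
        return true;
    }

private:
    size_t mCapacity;
    std::vector<uint8_t> mData;
};

// Caller-side truncation: clamp the request to the free space, then write.
// Returns the number of bytes actually queued.
size_t writeTruncated(DataQueueSketch& queue, const uint8_t* data, size_t size) {
    const size_t n = std::min(size, queue.availableToWrite());
    return queue.write(data, n) ? n : 0;
}
```

Writing 6 bytes into a queue with capacity 4 queues only the first 4, which is the situation the "truncating write data" warning reports.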

Build output of the hardware/interfaces/audio module:

Under /out/soong/.intermediates/hardware/interfaces/audio/2.0/android.hardware.audio@2.0_genc++/gen/android/hardware/audio/2.0:

DeviceAll.cpp DevicesFactoryAll.cpp PrimaryDeviceAll.cpp StreamAll.cpp StreamInAll.cpp StreamOutAll.cpp StreamOutCallbackAll.cpp types.cpp

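These files are generated automatically, which lets audioflinger call the HAL in a binderized way through the libaudiohal module!

Now back to:

/hardware/interfaces/audio/2.0/

default already contains a set of implemented code (the server side).

Again using the write interface as an example: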

vendor/mediatek/proprietary/hardware/audio/common/service/2.0/StreamOut.cpp

bool WriteThread::threadLoop() {

// This implementation doesn't return control back to the Thread until it

// decides to stop,

// as the Thread uses mutexes, and this can lead to priority inversion.

while (!std::atomic_load_explicit(mStop, std::memory_order_acquire)) {

uint32_t efState = 0;

mEfGroup->wait(static_cast<uint32_t>(MessageQueueFlagBits::NOT_EMPTY),

&efState);

if (!(efState &

static_cast<uint32_t>(MessageQueueFlagBits::NOT_EMPTY))) {

continue; // Nothing to do.

}

if (!mCommandMQ->read(&mStatus.replyTo)) {

continue; // Nothing to do.

}

switch (mStatus.replyTo) {

case IStreamOut::WriteCommand::WRITE:

ALOGE("zyk IStreamOut::WriteCommand::WRITE: %d", mStatus.replyTo);

doWrite();

break;

//WriteThread::threadLoop

case IStreamOut::WriteCommand::WRITE:

doWrite();

//StreamOut.cpp

ssize_t writeResult = mStream->write(mStream, &mBuffer[0], availToRead);

//StreamOut.h

audio_stream_out_t *mStream;

Next, through function pointers and many twists and turns, we arrive at

vendor/mediatek/proprietary/hardware/audio/common/aud_drv/audio_hw_hal.cpp

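and call out_write. On some platforms, such as Qualcomm, this then calls pcm_write and enters the tinyalsa driver flow, which is not covered here; it should be much the same as before.

In the write function AudioALSAStreamOut::write(), if the stream is found to be in standby mode it is reopened, and open calls the following to start the thread: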

AudioALSAStreamOut->open

./vendor/mediatek/proprietary/hardware/audio/common/V3/aud_drv/AudioALSAStreamManager.cpp

createPlaybackHandler

AudioALSAPlaybackHandlerBTCVSD

createCaptureHandler

AudioALSACaptureHandlerBT

capture from mic

The mixer control used by the mtk6580 mic is

AUDIO_DEVICE_BUILTIN_DUAL_MIC

"builtin_Mic_DualMic"

./device/mediatek/mt6580/audio_device.xml (lines 84-88 and 90-93)

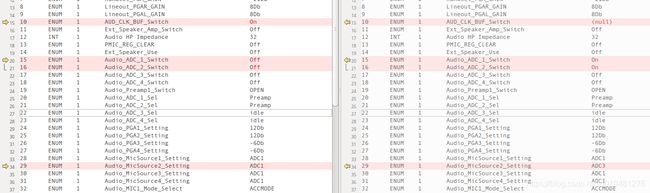

The default ADC value is "ADC1", at index 0:

static const char * const Pmic_Digital_Mux[] = { "ADC1", "ADC2", "ADC3", "ADC4" };
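A mux of this kind is an enumerated control: the stored value is an index into the name table. The helpers below are a hypothetical sketch of that index/name mapping; muxIndexOf and muxNameAt are not driver functions, and only the table contents come from the listing above.

```cpp
#include <cstddef>
#include <cstring>

static const char* const kPmicDigitalMux[] = { "ADC1", "ADC2", "ADC3", "ADC4" };
constexpr size_t kMuxCount = sizeof(kPmicDigitalMux) / sizeof(kPmicDigitalMux[0]);

// Returns the index of `name` in the table, or -1 if it is not an entry.
inline int muxIndexOf(const char* name) {
    for (size_t i = 0; i < kMuxCount; ++i) {
        if (std::strcmp(kPmicDigitalMux[i], name) == 0) {
            return static_cast<int>(i);
        }
    }
    return -1;
}

// Returns the name at `index`, or nullptr when the index is out of range.
inline const char* muxNameAt(size_t index) {
    return index < kMuxCount ? kPmicDigitalMux[index] : nullptr;
}
```

Index 0 maps to "ADC1", which is why an untouched control reads back as ADC1.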

In the figure, the left side is the default state and the right side is the mixer control state after switching to mic recording mode.

ms,(s:271596503415,e:271600163031)

[ 271.600678] <2>-(1)[1611:AudioBTCVSDLoop][name:mt_soc_pcm_capture&]mtk_capture_pcm_pointer, buffer overflow u4DMAReadIdx:78, u4WriteIdx:a8, u4DataRemained:1030, u4BufferSize:1000

[ 271.600697] <2>-(1)[1611:AudioBTCVSDLoop][name:mt_soc_pcm_capture&]mtk_capture_alsa_stop

./sound/soc/mediatek/mt6580/mt_soc_pcm_capture.c:400:static int mtk_capture_alsa_start(struct snd_pcm_substream *substream)

./sound/soc/mediatek/mt6580/mt_soc_pcm_capture.c:402: pr_warn("mtk_capture_alsa_start\n");

./sound/soc/mediatek/mt6580/mt_soc_pcm_capture.c:414: return mtk_capture_alsa_start(substream);

play on speaker

[ 271.602486] <2> (1)[1611:AudioBTCVSDLoop][name:mt_soc_codec_63xx&]mt63xx_codec_prepare set up SNDRV_PCM_STREAM_CAPTURE rate = 8000

./sound/soc/mediatek/mt6580/mt_soc_codec_63xx.c:827:static int mt63xx_codec_prepare(struct snd_pcm_substream *substream, struct snd_soc_dai *Daiport)

./sound/soc/mediatek/mt6580/mt_soc_codec_63xx.c:830: pr_warn("mt63xx_codec_prepare set up SNDRV_PCM_STREAM_CAPTURE rate = %d\n",

./sound/soc/mediatek/mt6580/mt_soc_codec_63xx.c:835: pr_warn("mt63xx_codec_prepare set up SNDRV_PCM_STREAM_PLAYBACK rate = %d\n",

./sound/soc/mediatek/mt6580/mt_soc_codec_63xx.c:859: .prepare = mt63xx_codec_prepare,

Tracing code with a call stack (cpp)

(1). Add the libutils shared library to your module's Android.mk:

LOCAL_SHARED_LIBRARIES :=

…

libutils

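(2). Define an android::CallStack object at the point where you need the native call stack; the stack is then printed to the main log:

#include <utils/CallStack.h>

android::CallStack stack("TAG");

/* add this code at necessary place */

Note: if the code is inside the android namespace, put the #include before the namespace.

For example: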

#define calc_time_diff(x,y) ((x.tv_sec - y.tv_sec )+ (double)( x.tv_nsec - y.tv_nsec ) / (double)1000000000)

#include <utils/CallStack.h> /* add this line */

namespace android {
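The calc_time_diff macro in the example above returns the difference between two struct timespec values in seconds. A small sketch of how it might be used on its own; the macro is repeated with its arguments parenthesized, and elapsedSeconds is a hypothetical helper name.

```cpp
#include <time.h>

// Same arithmetic as the macro in the listing, with arguments parenthesized.
#define calc_time_diff(x, y) \
    (((x).tv_sec - (y).tv_sec) + \
     (double)((x).tv_nsec - (y).tv_nsec) / (double)1000000000)

// Hypothetical helper: elapsed time from `start` to `end`, in seconds.
inline double elapsedSeconds(const struct timespec& start,
                             const struct timespec& end) {
    return calc_time_diff(end, start);
}
```

In practice the two timestamps would typically come from clock_gettime(CLOCK_MONOTONIC, ...) taken before and after the code being measured.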