Spark SQL: sorting by a field and then saving to MySQL

While working with Spark SQL, I found that a DataFrame that was clearly sorted ended up unordered once it had been stored in MySQL.

The code is as follows:

    import org.apache.spark.SparkConf
    import org.apache.spark.sql.{SaveMode, SparkSession}

    object timetest {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf()
          .setAppName("timetest")
          .setMaster("local[*]")
          .set("spark.testing.memory", "2147480000")
        val spark = SparkSession.builder()
          .config(conf)
          .getOrCreate()

        val df1 = spark.read.csv("hdfs://106.12.48.46:9000/sparktest/服创大赛-原始数据.csv").toDF("timestamp","imsi","lac_id","cell_id","phone","timestamp1","tmp0","tmp1","nid","npid")
        // DataFrames are immutable, so the result of dropDuplicates() has to be kept.
        // (The original also called df1.select(df1("timestamp").cast("int")) and
        // discarded the result; that call had no effect and is left out here.)
        val deduped = df1.dropDuplicates()
        deduped.createTempView("test1")

        // Keep the first 10 characters of the timestamp
        val df2 = spark.sql("select substr(timestamp,1,10) as timestamp,imsi,lac_id,cell_id,phone from test1")
        df2.createTempView("test2")
        // Convert the Unix timestamp to a readable date-time string
        val df3 = spark.sql("select from_unixtime(timestamp) as datatime,timestamp,imsi,lac_id,cell_id,phone from test2")
        df3.createTempView("test3")
        val df4 = spark.sql("select datatime,timestamp,imsi,lac_id,cell_id,phone from test3 order by timestamp asc")
        df4.createTempView("test4")
        // Extract the date part (yyyy-MM-dd) so rows can be filtered by day
        val df5 = spark.sql("select substr(datatime,1,10) as data,datatime,timestamp,imsi,lac_id,cell_id,phone from test4")
        df5.createTempView("test5")
        val df6 = spark.sql("select datatime,data,timestamp,imsi,lac_id,cell_id,phone from test5 where data = '2018-10-03' order by timestamp asc")
        // show() returns Unit, so there is nothing to assign; this only prints the sorted rows
        spark.sql("select * from test5 where data = '2018-10-03' order by timestamp asc").show()

        // Write the sorted result to MySQL over JDBC
        val properties = new java.util.Properties()
        properties.setProperty("user","root")
        properties.setProperty("password","123456")
        df6.write.mode(SaveMode.Overwrite).jdbc("jdbc:mysql://111.229.224.174:3306/spark","test",properties)

        spark.stop()
      }
    }

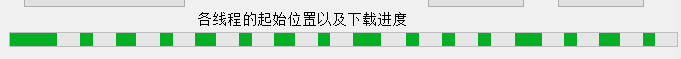

Result after writing to MySQL:

The rows are unordered, even though show() in Spark SQL displayed them in sorted order. Eventually I found the cause.

    val conf = new SparkConf()
      .setAppName("timetest")
      .setMaster("local[*]")
      .set("spark.testing.memory", "2147480000")

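When I created the SparkConf, I called setMaster("local[*]"), which runs Spark with multiple threads. Now think about how a multi-threaded job runs: it does not write the sorted rows out one after another in order. Each thread writes its own partition at the same time, so rows that were sorted in Spark SQL are inserted into MySQL interleaved and end up unordered. The fix is to switch from multi-threaded to single-threaded mode: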

    val conf = new SparkConf()
      .setAppName("timetest")
      .setMaster("local")
      .set("spark.testing.memory", "2147480000")

Removing the [*] from setMaster makes Spark run single-threaded, and when we look at the data in MySQL now, it is in sorted order.
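To make the explanation above more concrete, here is a minimal sketch that shows how a sorted DataFrame is split into partitions, each of which is handled by its own task. Everything in it is made up for illustration: the object name PartitionCheck, the generated stand-in data (1000 numbers in a timestamp column), and the two settings that fix the shuffle at 4 partitions and turn off adaptive execution so the tiny demo data is not merged into one partition.

```scala
import org.apache.spark.sql.SparkSession

object PartitionCheck {
  // Returns (partition index, first timestamp, last timestamp, row count)
  // for every non-empty partition of a sorted DataFrame.
  def partitionSummary(spark: SparkSession): Array[(Int, Long, Long, Int)] = {
    // Hypothetical stand-in for df6: 1000 generated values, sorted ascending.
    val sorted = spark.range(0, 1000).toDF("timestamp").orderBy("timestamp")
    sorted.rdd
      .mapPartitionsWithIndex { (idx, rows) =>
        val ts = rows.map(_.getLong(0)).toVector
        if (ts.isEmpty) Iterator.empty
        else Iterator((idx, ts.head, ts.last, ts.size))
      }
      .collect()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("partition-check")
      .master("local[*]")
      // Demo-only assumptions: keep the sort at 4 partitions and stop
      // adaptive execution from merging the small partitions.
      .config("spark.sql.shuffle.partitions", "4")
      .config("spark.sql.adaptive.enabled", "false")
      .getOrCreate()

    partitionSummary(spark).foreach { case (idx, first, last, n) =>
      println(s"partition $idx: $n rows, timestamps $first to $last")
    }
    spark.stop()
  }
}
```

Each partition holds one contiguous, sorted slice of the data. The JDBC writer inserts every partition from its own task, so with local[*] several slices are inserted concurrently and their rows interleave in the table. With plain local only one task runs at a time.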

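Of course, single-threaded mode is not an option when developing on a real Spark cluster, so I recommend sorting by the field you need when you read the data back. A SELECT without ORDER BY does not guarantee any row order in MySQL anyway.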
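A minimal sketch of that recommendation, assuming the table was written by the code above and that a MySQL JDBC driver is on the classpath. The object name ReadSorted and the helper sortByTimestamp are hypothetical names chosen for this example. The URL, table name and credentials are the ones from the original code.

```scala
import java.util.Properties

import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col

object ReadSorted {
  // Sort at read time, so the order in which rows were inserted no longer matters.
  def sortByTimestamp(df: DataFrame): DataFrame =
    df.orderBy(col("timestamp").asc)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("read-sorted")
      .master("local[*]") // multi-threaded is fine here
      .getOrCreate()

    val properties = new Properties()
    properties.setProperty("user", "root")
    properties.setProperty("password", "123456")

    // Load the table that the job above wrote, in whatever order MySQL returns it.
    val stored = spark.read.jdbc("jdbc:mysql://111.229.224.174:3306/spark", "test", properties)

    // Apply the ordering when the data is consumed.
    sortByTimestamp(stored).show()

    spark.stop()
  }
}
```

The same idea applies to a query issued from any MySQL client: add ORDER BY timestamp to the SELECT instead of relying on insertion order.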