Installing Hadoop 3.3.0 on CentOS 6

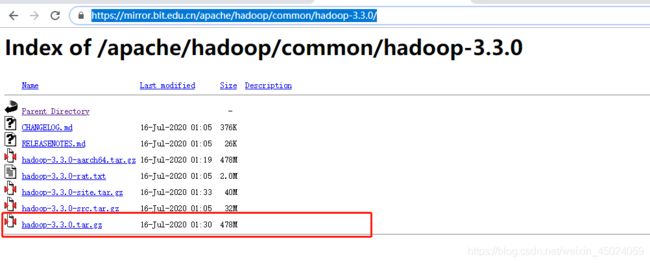

First, download Hadoop from the official site:

https://mirror.bit.edu.cn/apache/hadoop/common/hadoop-3.3.0/

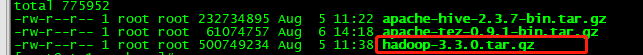
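Before unpacking, it can be worth checking the download against the SHA-512 digest that Apache publishes next to each release tarball. A minimal sketch, assuming `sha512sum` from GNU coreutils is installed; the function name `verify_sha512` is made up for illustration, and the expected digest has to be copied from the published `.sha512` file:

```shell
# verify_sha512 FILE EXPECTED_HEX
# Succeeds when FILE's SHA-512 digest equals EXPECTED_HEX (case-insensitive).
verify_sha512() {
  actual=$(sha512sum "$1" | awk '{print $1}')
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  [ "$actual" = "$expected" ]
}

# Example call (the digest placeholder must be replaced by the published value):
# verify_sha512 hadoop-3.3.0.tar.gz "<digest from the .sha512 file>" && echo "checksum OK"
```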

Upload the tarball to the virtual machine and extract it:

tar -zxvf hadoop-3.3.0.tar.gz -C /new/software/

cd /new/software/hadoop-3.3.0/

cd /new/software/hadoop-3.3.0/etc/hadoop

vim core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://yts1:8020</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/export/installed/hadoop-2.6.0-cdh5.14.0/hadoopDatas/tempDatas</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>4096</value>
  </property>
  <property>
    <name>fs.trash.interval</name>
    <value>10080</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>
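To confirm that an edited file still contains what you expect, a property can be read back with a few lines of awk. This is only a rough sketch: `get_prop` is a hypothetical helper, it assumes each `<name>` and `<value>` element sits on a single line, and it is not a real XML parser. On a working installation, `hdfs getconf -confKey fs.defaultFS` is the more reliable check.

```shell
# get_prop FILE NAME: print the <value> that follows <name>NAME</name> in FILE.
get_prop() {
  awk -v key="$2" '
    /<name>/ {
      n = $0
      sub(/.*<name>/, "", n)
      sub(/<\/name>.*/, "", n)
      found = (n == key)          # remember whether this is the wanted property
    }
    /<value>/ && found {
      v = $0
      sub(/.*<value>/, "", v)
      sub(/<\/value>.*/, "", v)
      print v
      exit
    }
  ' "$1"
}

# Example call:
# get_prop /new/software/hadoop-3.3.0/etc/hadoop/core-site.xml fs.defaultFS
```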

vim hdfs-site.xml

<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>yts1:50090</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>yts1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///new/software/hadoop-3.3.0/hadoopDatas/namenodeDatas,file:///new/software/hadoop-3.3.0/hadoopDatas/namenodeDatas2</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///new/software/hadoop-3.3.0/hadoopDatas/datanodeDatas,file:///new/software/hadoop-3.3.0/hadoopDatas/datanodeDatas2</value>
  </property>
  <property>
    <name>dfs.namenode.edits.dir</name>
    <value>file:///new/software/hadoop-3.3.0/hadoopDatas/nn/edits</value>
  </property>
  <property>
    <name>dfs.namenode.checkpoint.dir</name>
    <value>file:///new/software/hadoop-3.3.0/hadoopDatas/snn/name</value>
  </property>
  <property>
    <name>dfs.namenode.checkpoint.edits.dir</name>
    <value>file:///new/software/hadoop-3.3.0/hadoopDatas/dfs/snn/edits</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.permissions.enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>134217728</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
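The `dfs.blocksize` value is given in bytes: 134217728 is 128 MiB. The sketch below shows that conversion and a ceiling division for how many blocks a file of a given size occupies; `blocks_for` is an illustrative helper, not a Hadoop command.

```shell
BLOCKSIZE=134217728                 # dfs.blocksize from hdfs-site.xml, in bytes
echo $((BLOCKSIZE / 1024 / 1024))   # prints 128 (block size in MiB)

# blocks_for FILE_BYTES [BLOCK_BYTES]: number of HDFS blocks (ceiling division).
blocks_for() {
  size=$1
  bs=${2:-$BLOCKSIZE}
  echo $(( (size + bs - 1) / bs ))
}

blocks_for 1073741824               # a 1 GiB file: prints 8
```

With `dfs.replication` set to 1 as above, each of those blocks is stored exactly once.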

vim mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.job.ubertask.enable</name>
    <value>true</value>
  </property>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>yts1:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>yts1:19888</value>
  </property>
</configuration>

vim yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>yts1</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log.server.url</name>
    <value>http://yts1:19888/jobhistory/logs</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>20480</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>2048</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-pmem-ratio</name>
    <value>2.1</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
</configuration>
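The memory settings interact: with `yarn.nodemanager.resource.memory-mb` at 20480 and `yarn.scheduler.minimum-allocation-mb` at 2048, a NodeManager can host at most ten minimum-size containers by memory alone. The retention period of 604800 seconds is seven days. A small sketch of that arithmetic, plus a check of the host's physical memory that assumes a Linux `/proc/meminfo`:

```shell
NM_MEMORY_MB=20480     # yarn.nodemanager.resource.memory-mb
MIN_ALLOC_MB=2048      # yarn.scheduler.minimum-allocation-mb
RETAIN_SECONDS=604800  # yarn.log-aggregation.retain-seconds

echo "max minimum-size containers per node: $((NM_MEMORY_MB / MIN_ALLOC_MB))"
echo "log retention in days: $((RETAIN_SECONDS / 86400))"

# Warn when the node has less physical RAM than YARN is told it may hand out.
if [ -r /proc/meminfo ]; then
  total_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
  if [ "${total_mb:-0}" -lt "$NM_MEMORY_MB" ]; then
    echo "warning: host has ${total_mb} MB RAM, less than ${NM_MEMORY_MB} MB"
  fi
fi
```

On a small virtual machine the warning is likely to fire, in which case lowering `yarn.nodemanager.resource.memory-mb` to fit the host is one option.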

cp hadoop-env.sh hadoop-env.sh.bak

vim hadoop-env.sh

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are

# optional. When running a distributed configuration it is best to

# set JAVA_HOME in this file, so that it is correctly defined on

# remote nodes.

# The java implementation to use.

export JAVA_HOME=/new/software/jdk1.8.0_141

# The jsvc implementation to use. Jsvc is required to run secure datanodes

# that bind to privileged ports to provide authentication of data transfer

# protocol. Jsvc is not required if SASL is configured for authentication of

# data transfer protocol using non-privileged ports.

#export JSVC_HOME=${JSVC_HOME}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

# Extra Java CLASSPATH elements. Automatically insert capacity-scheduler.

for f in $HADOOP_HOME/contrib/capacity-scheduler/*.jar; do
  if [ "$HADOOP_CLASSPATH" ]; then
    export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$f
  else
    export HADOOP_CLASSPATH=$f
  fi
done

# The maximum amount of heap to use, in MB. Default is 1000.

#export HADOOP_HEAPSIZE=

#export HADOOP_NAMENODE_INIT_HEAPSIZE=""

# Extra Java runtime options. Empty by default.

export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true"

# Command specific options appended to HADOOP_OPTS when specified

export HDFS_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HDFS_NAMENODE_OPTS"

export HDFS_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HDFS_DATANODE_OPTS"

export HDFS_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HDFS_SECONDARYNAMENODE_OPTS"

export HADOOP_NFS3_OPTS="$HADOOP_NFS3_OPTS"

export HADOOP_PORTMAP_OPTS="-Xmx512m $HADOOP_PORTMAP_OPTS"

# The following applies to multiple commands (fs, dfs, fsck, distcp etc)

export HADOOP_CLIENT_OPTS="-Xmx512m $HADOOP_CLIENT_OPTS"

#HADOOP_JAVA_PLATFORM_OPTS="-XX:-UsePerfData $HADOOP_JAVA_PLATFORM_OPTS"

# On secure datanodes, user to run the datanode as after dropping privileges.

# This **MUST** be uncommented to enable secure HDFS if using privileged ports

# to provide authentication of data transfer protocol. This **MUST NOT** be

# defined if SASL is configured for authentication of data transfer protocol

# using non-privileged ports.

export HDFS_DATANODE_SECURE_USER=${HDFS_DATANODE_SECURE_USER}

# Where log files are stored. $HADOOP_HOME/logs by default.

#export HADOOP_LOG_DIR=${HADOOP_LOG_DIR}/$USER

# Where log files are stored in the secure data environment.

export HADOOP_SECURE_LOG_DIR=${HADOOP_LOG_DIR}/${HADOOP_HDFS_USER}

###

# HDFS Mover specific parameters

###

# Specify the JVM options to be used when starting the HDFS Mover.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HADOOP_MOVER_OPTS=""

###

# Advanced Users Only!

###

# The directory where pid files are stored. /tmp by default.

# NOTE: this should be set to a directory that can only be written to by

# the user that will run the hadoop daemons. Otherwise there is the

# potential for a symlink attack.

export HADOOP_PID_DIR=${HADOOP_PID_DIR}

export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

# A string representing this instance of hadoop. $USER by default.

export HADOOP_IDENT_STRING=$USER
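Since `JAVA_HOME` is the one setting this file requires, a quick check that the path really contains a JVM can save a failed startup later. A sketch, with `check_java_home` as a hypothetical helper name:

```shell
# check_java_home DIR: succeed only if DIR/bin/java exists and is executable.
check_java_home() {
  if [ -x "$1/bin/java" ]; then
    echo "ok: $1/bin/java"
  else
    echo "error: no executable java under $1" >&2
    return 1
  fi
}

# Example call with the path used in this guide:
# check_java_home /new/software/jdk1.8.0_141
```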

cp yarn-env.sh yarn-env.sh.bak

vim yarn-env.sh

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# User for YARN daemons

export HADOOP_YARN_USER=${HADOOP_YARN_USER:-yarn}

# resolve links - $0 may be a softlink

export HADOOP_CONF_DIR="${HADOOP_CONF_DIR:-$HADOOP_YARN_HOME/etc/hadoop/}"

# some Java parameters

export JAVA_HOME=/new/software/jdk1.8.0_141

if [ "$JAVA_HOME" != "" ]; then

#echo "run java in $JAVA_HOME"

JAVA_HOME=/new/software/jdk1.8.0_141

fi

if [ "$JAVA_HOME" = "" ]; then

echo "Error: JAVA_HOME is not set."

exit 1

fi

JAVA=$JAVA_HOME/bin/java

JAVA_HEAP_MAX=-Xmx1000m

# For setting YARN specific HEAP sizes please use this

# Parameter and set appropriately

# YARN_HEAPSIZE=1000

# check envvars which might override default args

if [ "$YARN_HEAPSIZE" != "" ]; then

JAVA_HEAP_MAX="-Xmx""$YARN_HEAPSIZE""m"

fi

# Resource Manager specific parameters

# Specify the max Heapsize for the ResourceManager using a numerical value

# in the scale of MB. For example, to specify an jvm option of -Xmx1000m, set

# the value to 1000.

# This value will be overridden by an Xmx setting specified in either HADOOP_OPTS

# and/or YARN_RESOURCEMANAGER_OPTS.

# If not specified, the default value will be picked from either YARN_HEAPMAX

# or JAVA_HEAP_MAX with YARN_HEAPMAX as the preferred option of the two.

#export YARN_RESOURCEMANAGER_HEAPSIZE=1000

# Specify the max Heapsize for the timeline server using a numerical value

# in the scale of MB. For example, to specify an jvm option of -Xmx1000m, set

# the value to 1000.

# This value will be overridden by an Xmx setting specified in either HADOOP_OPTS

# and/or YARN_TIMELINESERVER_OPTS.

# If not specified, the default value will be picked from either YARN_HEAPMAX

# or JAVA_HEAP_MAX with YARN_HEAPMAX as the preferred option of the two.

#export YARN_TIMELINESERVER_HEAPSIZE=1000

# Specify the JVM options to be used when starting the ResourceManager.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#export YARN_RESOURCEMANAGER_OPTS=

# Node Manager specific parameters

# Specify the max Heapsize for the NodeManager using a numerical value

# in the scale of MB. For example, to specify an jvm option of -Xmx1000m, set

# the value to 1000.

# This value will be overridden by an Xmx setting specified in either YARN_OPTS

# and/or YARN_NODEMANAGER_OPTS.

# If not specified, the default value will be picked from either YARN_HEAPMAX

# or JAVA_HEAP_MAX with YARN_HEAPMAX as the preferred option of the two.

#export YARN_NODEMANAGER_HEAPSIZE=1000

# Specify the JVM options to be used when starting the NodeManager.

# These options will be appended to the options specified as YARN_OPTS

# and therefore may override any similar flags set in YARN_OPTS

#export YARN_NODEMANAGER_OPTS=

# so that filenames w/ spaces are handled correctly in loops below

IFS=

# default log directory & file

if [ "$HADOOP_LOG_DIR" = "" ]; then

HADOOP_LOG_DIR="$HADOOP_YARN_HOME/logs"

fi

if [ "$HADOOP_LOGFILE" = "" ]; then

HADOOP_LOGFILE='yarn.log'

fi

# default policy file for service-level authorization

if [ "$YARN_POLICYFILE" = "" ]; then

YARN_POLICYFILE="hadoop-policy.xml"

fi

# restore ordinary behaviour

unset IFS

HADOOP_OPTS="$HADOOP_OPTS -Dhadoop.log.dir=$HADOOP_LOG_DIR"

HADOOP_OPTS="$HADOOP_OPTS -Dyarn.log.dir=$HADOOP_LOG_DIR"

HADOOP_OPTS="$HADOOP_OPTS -Dhadoop.log.file=$HADOOP_LOGFILE"

HADOOP_OPTS="$HADOOP_OPTS -Dyarn.log.file=$HADOOP_LOGFILE"

HADOOP_OPTS="$HADOOP_OPTS -Dyarn.home.dir=$YARN_COMMON_HOME"

HADOOP_OPTS="$HADOOP_OPTS -Dyarn.id.str=$YARN_IDENT_STRING"

HADOOP_OPTS="$HADOOP_OPTS -Dhadoop.root.logger=${YARN_ROOT_LOGGER:-INFO,console}"

HADOOP_OPTS="$HADOOP_OPTS -Dyarn.root.logger=${YARN_ROOT_LOGGER:-INFO,console}"

if [ "x$JAVA_LIBRARY_PATH" != "x" ]; then

HADOOP_OPTS="$HADOOP_OPTS -Djava.library.path=$JAVA_LIBRARY_PATH"

fi

HADOOP_OPTS="$HADOOP_OPTS -Dyarn.policy.file=$YARN_POLICYFILE"

vim workers

yts1

yts2

yts3

cd /new/software/

scp -r ./hadoop-3.3.0/ yts2:/new/software/

scp -r ./hadoop-3.3.0/ yts3:/new/software/
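The two `scp` commands can also be driven from the `workers` file so that the host list lives in one place. A sketch under stated assumptions: `push_to_workers` is a made-up helper, it skips the local machine by comparing each entry against `hostname`, and it only prints the commands unless `RUN=1` is set.

```shell
# push_to_workers WORKERS_FILE SRC_DIR DEST_DIR
push_to_workers() {
  self=$(hostname)
  while IFS= read -r host || [ -n "$host" ]; do
    # skip blank lines and the machine we are copying from
    [ -z "$host" ] && continue
    [ "$host" = "$self" ] && continue
    if [ "${RUN:-0}" = "1" ]; then
      # stdin is redirected so scp cannot swallow the rest of the host list
      scp -r "$2" "$host:$3" < /dev/null
    else
      echo "scp -r $2 $host:$3"
    fi
  done < "$1"
}

# Dry run first, then set RUN=1 to actually copy:
# push_to_workers /new/software/hadoop-3.3.0/etc/hadoop/workers ./hadoop-3.3.0/ /new/software/
```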

vim /etc/profile

Add the Hadoop paths:

#hadoop

export HADOOP_HOME=/new/software/hadoop-3.3.0

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_CONF_DIR=/new/software/hadoop-3.3.0/etc/hadoop
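If this step may be rerun, appending blindly would duplicate the lines in `/etc/profile`. One way to keep it idempotent, sketched with a hypothetical `append_once` helper built on `grep -qxF`:

```shell
# append_once FILE LINE: add LINE to FILE only if no identical line exists yet.
append_once() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example calls (run as root; commented out so nothing is modified by accident):
# append_once /etc/profile 'export HADOOP_HOME=/new/software/hadoop-3.3.0'
# append_once /etc/profile 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
# append_once /etc/profile 'export HADOOP_CONF_DIR=/new/software/hadoop-3.3.0/etc/hadoop'
```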

scp /etc/profile yts2:/etc/profile

scp /etc/profile yts3:/etc/profile

# Run source on all three machines

source /etc/profile

Create the data directories:

mkdir -p /new/software/hadoop-3.3.0/hadoopDatas/namenodeDatas

mkdir -p /new/software/hadoop-3.3.0/hadoopDatas/namenodeDatas2

mkdir -p /new/software/hadoop-3.3.0/hadoopDatas/datanodeDatas

mkdir -p /new/software/hadoop-3.3.0/hadoopDatas/datanodeDatas2

mkdir -p /new/software/hadoop-3.3.0/hadoopDatas/nn/edits

mkdir -p /new/software/hadoop-3.3.0/hadoopDatas/snn/name

mkdir -p /new/software/hadoop-3.3.0/hadoopDatas/dfs/snn/edits
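The same directories can be created in one loop, which makes it easier to keep the list in step with `hdfs-site.xml`. A sketch; `make_data_dirs` and its base-directory argument are illustrative:

```shell
# make_data_dirs BASE: create every storage directory referenced in hdfs-site.xml.
make_data_dirs() {
  base=$1
  for d in namenodeDatas namenodeDatas2 datanodeDatas datanodeDatas2 \
           nn/edits snn/name dfs/snn/edits; do
    mkdir -p "$base/$d" || return 1
  done
}

# Example call with the base path used in this guide:
# make_data_dirs /new/software/hadoop-3.3.0/hadoopDatas
```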

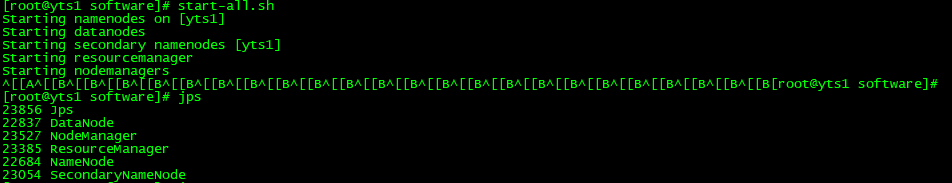

Edit both start-dfs.sh and stop-dfs.sh (in $HADOOP_HOME/sbin) and add the following lines to each:

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

Edit both start-yarn.sh and stop-yarn.sh (in $HADOOP_HOME/sbin) and add the following lines to each:

YARN_RESOURCEMANAGER_USER=root

HDFS_DATANODE_SECURE_USER=yarn

YARN_NODEMANAGER_USER=root
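These assignments need to be in place before the scripts launch any daemon, so a common placement is directly after the `#!` line. The sketch below inserts a block of lines after the first line of a script unless a line starting with a given variable name is already present; `add_after_shebang` is a hypothetical helper. Hadoop 3 also picks up the same `*_USER` variables from `hadoop-env.sh`, which avoids editing the start and stop scripts at all.

```shell
# add_after_shebang SCRIPT MARKER BLOCK
# Insert BLOCK after line 1 of SCRIPT unless a line starting with MARKER exists.
add_after_shebang() {
  _script=$1
  _marker=$2
  _block=$3
  if grep -q "^$_marker" "$_script"; then
    return 0                      # already patched, nothing to do
  fi
  _tmp=$(mktemp) || return 1
  {
    head -n 1 "$_script"          # keep the shebang first
    printf '%s\n' "$_block"
    tail -n +2 "$_script"         # then the rest of the original script
  } > "$_tmp" && cat "$_tmp" > "$_script"   # cat keeps the script's permissions
  rm -f "$_tmp"
}
```

For example, `add_after_shebang start-dfs.sh HDFS_NAMENODE_USER "$dfs_users"`, where the illustrative variable `dfs_users` holds the HDFS lines listed earlier, patches the script once and leaves it unchanged on later runs.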