Reference

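1. Hardware configuration (all nodes are actually virtual machines)

| Hostname | IP | Specs | Distribution |
| --- | --- | --- | --- |
| k8s1 | 172.16.186.132 | 2 cores / 4 GB RAM / 150 GB disk | CentOS 7.4.1708 |
| k8s2 | 172.16.186.133 | 2 cores / 4 GB RAM / 150 GB disk | CentOS 7.4.1708 |
| k8s3 | 172.16.186.134 | 2 cores / 4 GB RAM / 150 GB disk | CentOS 7.4.1708 |

Kubernetes uses three networks: the service network, the node network, and the pod network. Pod addresses are assigned by a third-party plugin such as flannel (which defaults to 10.244.0.0/16), so the pod network passed to Kubernetes at deployment time must match the network configured for flannel.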

2. Test environment setup

(1) Preliminary settings (run on all nodes)

sed -i "s/SELINUX=enforcing/SELINUX=disabled/" /etc/selinux/config

setenforce 0

systemctl stop firewalld && systemctl disable firewalld

(2) Hostname resolution

[root@k8s1 ~]# vim /etc/hosts

172.16.186.132 k8s1

172.16.186.133 k8s2

172.16.186.134 k8s3

[root@k8s1 ~]# scp /etc/hosts root@k8s2:/etc/

[root@k8s1 ~]# scp /etc/hosts root@k8s3:/etc/
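Editing /etc/hosts by hand is easy to repeat by accident. The following is a minimal sketch, not part of the original procedure, of a helper that appends an entry only when the hostname is not already mapped. The function name `add_host_entry` is hypothetical.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME (hypothetical helper):
# append "IP NAME" to FILE unless NAME is already mapped on a non-comment line.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # awk exits 0 only when NAME appears as a whole field after the address column.
    if ! awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

Running `add_host_entry /etc/hosts 172.16.186.133 k8s2` twice leaves a single k8s2 line in the file.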

(3) Configure passwordless SSH login

[root@k8s1 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

[root@k8s1 ~]# for i in {1..3};do ssh-copy-id k8s$i;done

(4) Configure the yum repositories (run on all nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i 'cd /etc/yum.repos.d && for f in $(ls | grep -Ev "(Base|Media)\.repo$");do mv "$f" "$f.bak";done';done

[root@k8s1 ~]# vim /etc/yum.repos.d/CentOS-Media.repo

[c7-media]

name=CentOS-$releasever - Media

baseurl=file:///mnt/usb1/

# file:///media/cdrom/

# file:///media/cdrecorder/

gpgcheck=0

enabled=1

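Copy the repo file to the other nodes, then create the mount points and mount the installation disc

[root@k8s1 ~]# for i in {2..3};do scp /etc/yum.repos.d/CentOS-Media.repo k8s$i:/etc/yum.repos.d/;done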

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i mkdir /mnt/usb{1..3};done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i mount /dev/sr0 /mnt/usb1;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum -y install vim wget;done

(5) Disable swap (run on all nodes)

Disable temporarily:

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i swapoff -a;done

Disable permanently (comment out the swap line in /etc/fstab on each node):

[root@k8s1 ~]# vim /etc/fstab

[root@k8s2 ~]# vim /etc/fstab

[root@k8s3 ~]# vim /etc/fstab
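Instead of opening /etc/fstab in an editor on every node, the swap line can be commented out with sed. This is a sketch under the assumption that the swap entry contains the word "swap" as a whitespace-delimited field; the function name `comment_swap_entries` is hypothetical.

```shell
#!/bin/sh
# comment_swap_entries FILE (hypothetical helper):
# prefix every active line that has a "swap" field with '#'.
comment_swap_entries() {
    # Lines that are already comments are skipped, so the call is repeatable.
    # -i.bak leaves a backup copy named FILE.bak next to the original.
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}
```

For example, `for i in 1 2 3; do ssh k8s$i "$(typeset -f comment_swap_entries); comment_swap_entries /etc/fstab"; done` would apply it to each node from a bash session; try it on a copy of the file first.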

(6) Enable the ipvs kernel modules (run on all nodes)

[root@k8s1 ~]# vim /etc/sysconfig/modules/ipvs.modules

#!/bin/bash
# Load every ipvs module shipped with the running kernel
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for mod in $(ls $ipvs_mods_dir | grep -o "^[^.]*");do
    # Only load modules that modinfo can resolve
    /sbin/modinfo -F filename $mod &>/dev/null
    if [ $? -eq 0 ];then
        /sbin/modprobe $mod
    fi
done

Distribute the script and make it executable

[root@k8s1 ~]# for i in {2..3};do scp /etc/sysconfig/modules/ipvs.modules k8s$i:/etc/sysconfig/modules/;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i chmod +x /etc/sysconfig/modules/ipvs.modules;done

3. Install Docker

(1) Set up the yum repository

[root@k8s1 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker.repo

[root@k8s1 ~]# for i in {2..3};do scp /etc/yum.repos.d/docker.repo k8s$i:/etc/yum.repos.d/;done

Install Docker (on all nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum -y install docker-ce;done

Start Docker

[root@k8s1 ~]# sed -i "14i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

[root@k8s1 ~]# mkdir -p /etc/docker

[root@k8s1 ~]# tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://bk6kzfqm.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF

[root@k8s1 ~]# for i in {2..3};do scp /usr/lib/systemd/system/docker.service k8s$i:/usr/lib/systemd/system/;done

[root@k8s1 ~]# for i in {2..3};do ssh k8s$i mkdir -p /etc/docker;scp /etc/docker/daemon.json k8s$i:/etc/docker/;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i systemctl daemon-reload;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i "systemctl restart docker && systemctl enable docker";done

Verify

[root@k8s1 ~]# docker info|grep -i driver

Note: on all three nodes, check that the three settings just added (storage driver, logging driver, cgroup driver) appear in the output
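The manual check can be scripted. The sketch below reads `docker info` output on standard input and fails if any of the three values configured in daemon.json is missing. The function name `check_docker_drivers` is hypothetical; the field labels are the ones `docker info` prints.

```shell
#!/bin/sh
# check_docker_drivers (hypothetical helper): read `docker info` output on stdin
# and succeed only if the storage, logging and cgroup drivers match daemon.json.
check_docker_drivers() {
    info=$(cat)
    for want in 'Storage Driver: overlay2' \
                'Logging Driver: json-file' \
                'Cgroup Driver: systemd'; do
        if ! printf '%s\n' "$info" | grep -qF "$want"; then
            echo "missing: $want" >&2
            return 1
        fi
    done
}
```

A possible use from k8s1 is `for i in 1 2 3; do ssh k8s$i docker info 2>/dev/null | check_docker_drivers || echo "k8s$i: check failed"; done`.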

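Install the Kubernetes tools, using a yum mirror inside China

# This file has to be created by hand

[root@k8s1 ~]# cat <<EOF > /etc/yum.repos.d/k8s.repo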

[kubernetes]

name=kubernetes Repository

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

EOF

[root@k8s1 ~]# for i in {2..3};do scp /etc/yum.repos.d/k8s.repo k8s$i:/etc/yum.repos.d/;done

Install

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum -y install kubelet kubeadm kubectl;done

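Pull the required images on the master

Because of network restrictions in China, the Kubernetes images have to be pulled from mirror sites or from images that Docker Hub users have pushed

[root@k8s1 ~]# kubeadm config images list --kubernetes-version v1.18.6

Pull the images

The Aliyun mirror had not yet been updated to v1.18.6 at the time of writing, so the core images are pulled from Docker Hub instead. The script below has to be run on each node separately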

[root@k8s1 ~]# vim get-k8s-images.sh

#!/bin/bash

KUBE_VERSION=v1.18.6

PAUSE_VERSION=3.2

CORE_DNS_VERSION=1.6.7

ETCD_VERSION=3.4.3-0

# pull kubernetes images from hub.docker.com

docker pull kubeimage/kube-proxy-amd64:$KUBE_VERSION

docker pull kubeimage/kube-controller-manager-amd64:$KUBE_VERSION

docker pull kubeimage/kube-apiserver-amd64:$KUBE_VERSION

docker pull kubeimage/kube-scheduler-amd64:$KUBE_VERSION

# pull aliyuncs mirror docker images

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION

# retag to k8s.gcr.io prefix

docker tag kubeimage/kube-proxy-amd64:$KUBE_VERSION k8s.gcr.io/kube-proxy:$KUBE_VERSION

docker tag kubeimage/kube-controller-manager-amd64:$KUBE_VERSION k8s.gcr.io/kube-controller-manager:$KUBE_VERSION

docker tag kubeimage/kube-apiserver-amd64:$KUBE_VERSION k8s.gcr.io/kube-apiserver:$KUBE_VERSION

docker tag kubeimage/kube-scheduler-amd64:$KUBE_VERSION k8s.gcr.io/kube-scheduler:$KUBE_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION k8s.gcr.io/coredns:$CORE_DNS_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION k8s.gcr.io/etcd:$ETCD_VERSION

# remove the original tags; the image layers themselves are not deleted

docker rmi kubeimage/kube-proxy-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-controller-manager-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-apiserver-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-scheduler-amd64:$KUBE_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION

[root@k8s1 ~]# sh get-k8s-images.sh

[root@k8s1 ~]# for i in {2..3};do scp get-k8s-images.sh k8s$i:~;done

[root@k8s2 ~]# sh get-k8s-images.sh

[root@k8s3 ~]# sh get-k8s-images.sh
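The script above repeats the same pull, tag and untag steps for seven images. A compact variant, sketched here with the same versions and registries, generates the source-to-target mapping in one place. The names `image_map`, `sync_images` and the `DOCKER` override variable are hypothetical and exist only for this illustration.

```shell
#!/bin/sh
# Versions and registries copied from get-k8s-images.sh.
KUBE_VERSION=v1.18.6
PAUSE_VERSION=3.2
CORE_DNS_VERSION=1.6.7
ETCD_VERSION=3.4.3-0
ALIYUN=registry.cn-hangzhou.aliyuncs.com/google_containers

# image_map: print one "source target" pair per line.
image_map() {
    for c in kube-proxy kube-controller-manager kube-apiserver kube-scheduler; do
        echo "kubeimage/$c-amd64:$KUBE_VERSION k8s.gcr.io/$c:$KUBE_VERSION"
    done
    echo "$ALIYUN/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION"
    echo "$ALIYUN/coredns:$CORE_DNS_VERSION k8s.gcr.io/coredns:$CORE_DNS_VERSION"
    echo "$ALIYUN/etcd:$ETCD_VERSION k8s.gcr.io/etcd:$ETCD_VERSION"
}

# sync_images: pull each source image, retag it, then drop the source tag.
# Setting DOCKER=echo (an assumption of this sketch) prints the commands instead.
sync_images() {
    while read -r src dst; do
        ${DOCKER:-docker} pull "$src" &&
            ${DOCKER:-docker} tag "$src" "$dst" &&
            ${DOCKER:-docker} rmi "$src" || return 1
    done <<EOF
$(image_map)
EOF
}
```

`DOCKER=echo sync_images` gives a dry run that lists every command; plain `sync_images` performs the same work as the original script and stops at the first failure.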

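Initialize the cluster

Unless stated otherwise, run the following commands on the master (k8s1)

(1) Initialize the cluster with kubeadm init (set the apiserver address to this machine's IP)

[root@k8s1 ~]# kubeadm init --kubernetes-version=v1.18.6 --apiserver-advertise-address=172.16.186.132 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16

Note: on success, kubeadm reports that the control plane has been initialized and prints a join command

--kubernetes-version=v1.18.6: the control-plane version to deploy; it has to match the images pulled earlier

--pod-network-cidr=10.244.0.0/16: the IP range for pods. Pod-to-pod traffic goes through flannel, whose default manifest expects 10.244.0.0/16

--service-cidr=10.1.0.0/16: the IP range from which Service cluster IPs are allocated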
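The pod, service and node networks should not overlap. The sketch below checks two CIDR blocks with plain shell arithmetic. The function names are hypothetical, and the node network 172.16.186.0/24 used in the usage note is an assumption, since the article gives only the node addresses and not their netmask.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_range CIDR: print the first and last address of the block as integers.
cidr_range() {
    base=$(ip_to_int "${1%/*}")
    bits=${1#*/}
    size=$(( 1 << (32 - bits) ))
    first=$(( base / size * size ))   # round down to the block boundary
    echo "$first $(( first + size - 1 ))"
}

# cidrs_overlap A B: exit 0 if the two blocks share at least one address.
cidrs_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For the values in this article, `cidrs_overlap 10.244.0.0/16 10.1.0.0/16` and `cidrs_overlap 10.244.0.0/16 172.16.186.0/24` both return a non-zero status, meaning the ranges are disjoint.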

After a successful initialization, kubeadm prints a join command like the one below. Do not run it yet; record it for later use.

kubeadm join 172.16.186.132:6443 --token 2qtuzq.ay9cm62unnhiqu0p \

--discovery-token-ca-cert-hash sha256:eda7b133a436ad0cd8556ca5fb27b30c9da2459144ac72f0f0bf1e26a4644e53

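(2) Configure kubectl for the users who need it

# Point kubectl at the admin kubeconfig for the current shell session

[root@k8s1 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

# kubectl reads $HOME/.kube/config by default, so copy the admin kubeconfig there for later sessions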

[root@k8s1 ~]# mkdir -p $HOME/.kube

[root@k8s1 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s1 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

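Configure the cluster network (choose one of the three methods below)

Method 1: install the flannel network (the method used here)

[root@k8s1 ~]# echo "151.101.108.133 raw.githubusercontent.com">>/etc/hosts

[root@k8s1 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Note: make sure the network matches the pod CIDR used at cluster initialization and that the images can be pulled. If the command above fails with "The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?", look up the current IP address of raw.githubusercontent.com and use it in place of 151.101.108.133 in /etc/hosts

Method 2: install the pod network (using the Qiniu Cloud mirror)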

[root@k8s1 ~]# curl -o kube-flannel.yml https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8s1 ~]# sed -i "s/quay.io\/coreos\/flannel/quay-mirror.qiniu.com\/coreos\/flannel/g" kube-flannel.yml

[root@k8s1 ~]# kubectl apply -f kube-flannel.yml

[root@k8s1 ~]# rm -f kube-flannel.yml

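Method 3: install the calico network (recommended)

[root@k8s1 ~]# wget https://docs.projectcalico.org/v3.15/manifests/calico.yaml

[root@k8s1 ~]# vim calico.yaml

## Search for the IP address beginning with 192 (around line 3580), change it to your pod network CIDR (10.244.0.0/16 in this setup), uncomment the entry, then save and exit

Apply calico.yaml

[root@k8s1 ~]# kubectl apply -f calico.yaml

Use the command below to confirm that all pods reach the Running state; this can take a while.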

[root@k8s1 ~]# kubectl get pod --all-namespaces -o wide
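Rather than rerunning the command by hand, the wait can be scripted. This sketch counts pods whose STATUS column is neither Running nor Completed; it assumes the default column layout of `kubectl get pod --all-namespaces` (NAMESPACE, NAME, READY, STATUS, ...), and the function name `count_not_ready` is hypothetical.

```shell
#!/bin/sh
# count_not_ready (hypothetical helper): read `kubectl get pod --all-namespaces`
# output on stdin and print how many pods are neither Running nor Completed.
count_not_ready() {
    # NR > 1 skips the header row; STATUS is the fourth column.
    awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { n++ } END { print n + 0 }'
}

# Example polling loop (commented out because it needs a live cluster):
# until [ "$(kubectl get pod --all-namespaces | count_not_ready)" -eq 0 ]; do
#     sleep 5
# done
```

Note that a pod can be Running while its READY column still shows 0/1, so this is a coarse check only.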

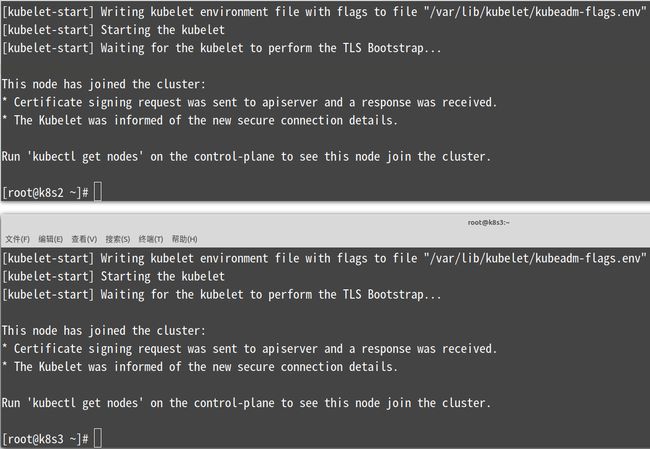

Add worker nodes to the Kubernetes cluster

On k8s2 and k8s3, run the join command that was printed earlier on k8s1

[root@k8s2 ~]# kubeadm join 172.16.186.132:6443 --token 2qtuzq.ay9cm62unnhiqu0p \

--discovery-token-ca-cert-hash sha256:eda7b133a436ad0cd8556ca5fb27b30c9da2459144ac72f0f0bf1e26a4644e53

[root@k8s3 ~]# kubeadm join 172.16.186.132:6443 --token 2qtuzq.ay9cm62unnhiqu0p \

--discovery-token-ca-cert-hash sha256:eda7b133a436ad0cd8556ca5fb27b30c9da2459144ac72f0f0bf1e26a4644e53

========================================================

Note: if you did not record the join command, it can be regenerated as follows

kubeadm token create --print-join-command --ttl=0

========================================================

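Note: the join output suggests running kubectl get nodes to check whether the node has joined the cluster, but the command does not work on the worker yet because the worker has no kubeconfig.

Fix: copy admin.conf from k8s1 to the other nodes and save it as $HOME/.kube/config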

[root@k8s2 ~]# mkdir -p $HOME/.kube

[root@k8s1 ~]# scp /etc/kubernetes/admin.conf k8s2:/root/.kube/config

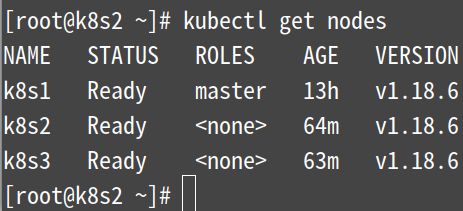

[root@k8s2 ~]# kubectl get nodes

Check the status of the cluster nodes from k8s1

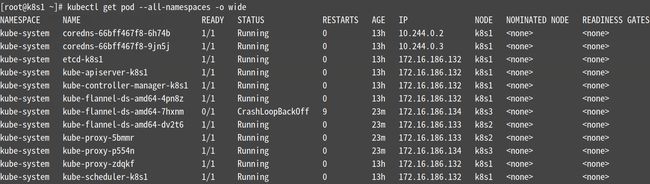

[root@k8s1 ~]# kubectl get pod --all-namespaces -o wide

Note: every pod should be in the Running state

Problem encountered:

One pod stayed in CrashLoopBackOff; restarting all containers on k8s3 (docker restart `docker ps -aq`) resolved it

Enable ipvs in kube-proxy

[root@k8s1 ~]# kubectl get configmap kube-proxy -n kube-system -o yaml > kube-proxy-configmap.yaml

[root@k8s1 ~]# sed -i 's/^\(\s*\)mode: ""/\1mode: "ipvs"/' kube-proxy-configmap.yaml

[root@k8s1 ~]# kubectl apply -f kube-proxy-configmap.yaml

[root@k8s1 ~]# rm -f kube-proxy-configmap.yaml

[root@k8s1 ~]# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'
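A sed substitution silently does nothing when its pattern is not found, so it can be worth confirming that the `mode:` line exists before applying the ConfigMap. The sketch below wraps the edit in a helper with a hypothetical name, `set_proxy_mode`. Anchoring the pattern at the start of the line keeps it from touching other keys whose names merely end in "Mode".

```shell
#!/bin/sh
# set_proxy_mode FILE MODE (hypothetical helper): set the kube-proxy `mode:` field
# in an exported ConfigMap file, failing if the file has no such line.
set_proxy_mode() {
    if ! grep -qE '^[[:space:]]*mode:' "$1"; then
        echo "no mode: line in $1" >&2
        return 1
    fi
    # Keep the original indentation and replace only the value; FILE.bak is a backup.
    sed -i.bak -E "s/^([[:space:]]*mode:).*/\1 \"$2\"/" "$1"
}
```

Usage would be `set_proxy_mode kube-proxy-configmap.yaml ipvs && kubectl apply -f kube-proxy-configmap.yaml`.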

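Deploy kubernetes-dashboard

1. Deploy the Dashboard on the master

[root@k8s1 ~]# kubectl get pods -A -o wide

2. Download and modify the Dashboard manifest (run on the master)

Following the official installation instructions, run on the master:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta5/aio/deploy/recommended.yaml

## If the download fails, create the file directly from the content below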

cat > recommended.yaml<<-EOF
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: kubernetes-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: kubernetes-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: kubernetes-metrics-scraper
    spec:
      containers:
        - name: kubernetes-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.0
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
EOF

Modify recommended.yaml as follows

....
....
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30008 # added
  selector:
    k8s-app: kubernetes-dashboard
....
....

# Many browsers reject the auto-generated certificate, so we create our own and comment out the kubernetes-dashboard-certs Secret declaration

#apiVersion: v1
#kind: Secret
#metadata:
#  labels:
#    k8s-app: kubernetes-dashboard
#  name: kubernetes-dashboard-certs
#  namespace: kubernetes-dashboard
#type: Opaque

3. Create the certificate

[root@k8s1 ~]# mkdir dashboard-certs

[root@k8s1 ~]# cd dashboard-certs/

# Create the namespace

[root@k8s1 dashboard-certs]# kubectl create namespace kubernetes-dashboard

## To delete the namespace: kubectl delete namespace kubernetes-dashboard

# Create the private key file

[root@k8s1 dashboard-certs]# openssl genrsa -out dashboard.key 2048

# Create the certificate signing request

[root@k8s1 dashboard-certs]# openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'

# Self-sign the certificate (-days belongs on this command; openssl req ignores it unless -x509 is given)

[root@k8s1 dashboard-certs]# openssl x509 -req -days 36000 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

# Create the kubernetes-dashboard-certs Secret

[root@k8s1 dashboard-certs]# kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard

[root@k8s1 dashboard-certs]# cd

4. Create the dashboard administrator

(1) Create the account

[root@k8s1 ~]# vim dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard

# Save and exit, then run

[root@k8s1 ~]# kubectl create -f dashboard-admin.yaml

(2) Grant permissions to the account

[root@k8s1 ~]# vim dashboard-admin-bind-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kubernetes-dashboard

# Save and exit, then run

[root@k8s1 ~]# kubectl create -f dashboard-admin-bind-cluster-role.yaml

5. Install the Dashboard

# Install

[root@k8s1 ~]# kubectl create -f ~/recommended.yaml

Note: a notice is printed at this point

# Check the result

[root@k8s1 ~]# kubectl get pods -A -o wide

[root@k8s1 ~]# kubectl get service -n kubernetes-dashboard -o wide

6. View and copy the user token

[root@k8s1 ~]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')

Name: dashboard-admin-token-sb82t

Namespace: kubernetes-dashboard

Labels:

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: c74677cf-c8fc-4fe7-a3ee-ecd60beba6f1

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlRIcjFETmxHNFVJVGVGd0U1SkxGOFdVNHhQZUlPVlJORDd6c29VM2hac2MifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tc2I4MnQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYzc0Njc3Y2YtYzhmYy00ZmU3LWEzZWUtZWNkNjBiZWJhNmYxIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.M-HMHfXcq9trsP0U_RdU5wqYDnLYeAGA7krYJwvP-0lao_kf-ja3xD9EgkPdl1OURg1RXs4GdlaWz3USD1XRUWA89yQiuDyxXo_jUasXKlhTnBfzkt3wHcs0Z-QmLi1v-qBu6ZfeXP-ng0040NST3tUvtd929Wad-hxyEqfeahpsHgism3qivK_sfTmNbQOKG7kbEcyqKkyBDMoK1qXwr2iPY27wPOc6nUVXcSMETagt-48UzZP89EeMjXcUt8fNcTcym8kpcQCLHqt4B8tYT5rXwgUP10ThInXjYYmiwHJ9V6sNni5LrFkKWiWNV_rxm7FJLx78nJRTEzTMaqqpAA
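The token is long and easy to truncate when copying from the terminal. As a small sketch, the value can be isolated from the `describe secret` output with awk; the function name `extract_token` is hypothetical.

```shell
#!/bin/sh
# extract_token (hypothetical helper): read `kubectl describe secret` output on stdin
# and print only the value of the "token:" field.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}
```

Piping the describe command from step 6 into `extract_token` prints the bare token on a single line, ready to paste into the login page.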

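7. Access the Dashboard from a browser

https://172.16.186.132:30008/

Note: on the certificate warning page, choose "Advanced", then "Accept the Risk and Continue"

Select Token, paste the token from step 6 into the Token field, then click Sign in to log in

==============================================

# If the site reports a certificate error, delete the deployment and redo the steps starting from certificate creation

kubectl delete -f ~/recommended.yaml # delete, then recreate the certificate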

==============================================

Next step: deploy applications
