Azure Kinect DK + Ubuntu 18.04: Getting Images and Point Cloud Data from the Camera

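Preface

1. Preparing the camera driver and SDK

To install the Azure Kinect DK depth camera driver and SDK on Ubuntu 18.04, see my other blog post: https://blog.csdn.net/denkywu/article/details/103177559.

At this point the basic preparation is done: with the camera connected, open the Azure Kinect Viewer (run sudo ./k4aviewer), select a camera configuration, and start the camera. It should run normally and show the RGB, IR, and depth images.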

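2. Preparing the development environment

The code below uses OpenCV, so OpenCV has to be installed and configured first. I won't go into the details here (I used OpenCV 3.4.7).

3. Data this article retrieves

The image data retrieved in this article are the RGB image, the IR (infrared) image, and the depth image, plus the xyz point cloud in the same coordinate system as the depth image.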
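To give a feel for how much data each of these frames carries, here is a minimal sketch that computes the size of a tightly packed buffer for each layout. The bytes-per-pixel values come from the format descriptions quoted in the code comments later in this article; the enum and function names are hypothetical helpers of mine, and the sketch assumes no row padding (the real images report their actual stride through get_stride_bytes()).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper enum (not an SDK type) naming the four layouts used here.
enum class PixelLayout { Bgra32, Depth16, Ir16, Xyz16 };

// Bytes per pixel: BGRA32 = 4, DEPTH16 = 2, IR16 = 2, xyz image = 3 * int16_t = 6.
inline std::size_t bytes_per_pixel(PixelLayout layout)
{
    switch (layout)
    {
    case PixelLayout::Bgra32:  return 4;
    case PixelLayout::Depth16: return sizeof(std::uint16_t);
    case PixelLayout::Ir16:    return sizeof(std::uint16_t);
    case PixelLayout::Xyz16:   return 3 * sizeof(std::int16_t);
    }
    return 0; // not reached for valid enum values
}

// Size in bytes of a tightly packed image (assumption: no row padding).
inline std::size_t packed_image_bytes(int width, int height, PixelLayout layout)
{
    assert(width > 0 && height > 0);
    return static_cast<std::size_t>(width) * static_cast<std::size_t>(height) *
           bytes_per_pixel(layout);
}
```

For the configuration used below, a 1080P color frame is 1920 x 1080 pixels and a WFOV 2x2 binned depth frame is 512 x 512 pixels, so packed_image_bytes(1920, 1080, PixelLayout::Bgra32) gives the color frame size and packed_image_bytes(512, 512, PixelLayout::Xyz16) the point cloud size.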

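Getting image data

1. I referred to the code in the blog post https://blog.csdn.net/qq_40936780/article/details/102634734 (thanks), but that code seems to have a problem: depth_out is never declared or defined, so it does not compile.

2. I modified that code and also referred to the examples in the official GitHub repository. My example is as follows: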

// C++
#include <cstdlib>
#include <iostream>
// OpenCV
#include <opencv2/opencv.hpp>
// Kinect DK
#include <k4a/k4a.hpp>

// Macro: set to 1 to enable the extra std::cout debug output
#define DEBUG_std_cout 0

int main(int argc, char* argv[])
{
    /*
        Find and open the Azure Kinect device
    */
    // Number of connected devices
    const uint32_t device_count = k4a::device::get_installed_count();
    if (0 == device_count)
    {
        std::cout << "Error: no K4A devices found. " << std::endl;
        return EXIT_FAILURE;
    }
    else
    {
        std::cout << "Found " << device_count << " connected devices. " << std::endl;
        if (1 != device_count) // more than one device is also reported as an error
        {
            std::cout << "Error: more than one K4A devices found. " << std::endl;
            return EXIT_FAILURE;
        }
        else // this example only works with a single device
        {
            std::cout << "Done: found 1 K4A device. " << std::endl;
        }
    }

    // Open the (default) device
    k4a::device device = k4a::device::open(K4A_DEVICE_DEFAULT);
    std::cout << "Done: open device. " << std::endl;

    /*
        Retrieve Azure Kinect image data
    */
    // Configure and start the device
    k4a_device_configuration_t config = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;
    config.camera_fps = K4A_FRAMES_PER_SECOND_30;
    config.color_format = K4A_IMAGE_FORMAT_COLOR_BGRA32;
    config.color_resolution = K4A_COLOR_RESOLUTION_1080P;
    // config.depth_mode = K4A_DEPTH_MODE_NFOV_UNBINNED;
    config.depth_mode = K4A_DEPTH_MODE_WFOV_2X2BINNED;
    config.synchronized_images_only = true; // ensures that depth and color images are both available in the capture
    device.start_cameras(&config);
    std::cout << "Done: start camera." << std::endl;

    // Warm-up: let the camera settle
    k4a::capture capture;
    int iAuto = 0;      // counts successful captures while auto-exposure settles
    int iAutoError = 0; // counts failed captures during warm-up
    while (true)
    {
        if (device.get_capture(&capture))
        {
            std::cout << iAuto << ". Capture several frames to give auto-exposure" << std::endl;
            // Skip the first n (successful) captures so the camera can settle
            if (iAuto != 30)
            {
                iAuto++;
                continue;
            }
            else
            {
                std::cout << "Done: auto-exposure" << std::endl;
                break; // leave the loop; warm-up is complete
            }
        }
        else
        {
            std::cout << iAutoError << ". K4A_WAIT_RESULT_TIMEOUT." << std::endl;
            if (iAutoError != 30)
            {
                iAutoError++;
                continue;
            }
            else
            {
                std::cout << "Error: failed to give auto-exposure. " << std::endl;
                return EXIT_FAILURE;
            }
        }
    }
    std::cout << "-----------------------------------" << std::endl;
    std::cout << "----- Have Started Kinect DK. -----" << std::endl;
    std::cout << "-----------------------------------" << std::endl;

    // Get captures from the device
    k4a::image rgbImage;
    k4a::image depthImage;
    k4a::image irImage;
    cv::Mat cv_rgbImage_with_alpha;
    cv::Mat cv_rgbImage_no_alpha;
    cv::Mat cv_depth;
    cv::Mat cv_depth_8U;
    cv::Mat cv_irImage;
    cv::Mat cv_irImage_8U;

    while (true)
    // for (size_t i = 0; i < 100; i++)
    {
        // if (device.get_capture(&capture, std::chrono::milliseconds(0)))
        if (device.get_capture(&capture))
        {
            // rgb
            // * Each pixel of BGRA32 data is four bytes. The first three bytes represent Blue, Green,
            // * and Red data. The fourth byte is the alpha channel and is unused in the Azure Kinect APIs.
            rgbImage = capture.get_color_image();
#if DEBUG_std_cout == 1
            std::cout << "[rgb] " << "\n"
                << "format: " << rgbImage.get_format() << "\n"
                << "device_timestamp: " << rgbImage.get_device_timestamp().count() << "\n"
                << "system_timestamp: " << rgbImage.get_system_timestamp().count() << "\n"
                << "height*width: " << rgbImage.get_height_pixels() << ", " << rgbImage.get_width_pixels()
                << std::endl;
#endif
            cv_rgbImage_with_alpha = cv::Mat(rgbImage.get_height_pixels(), rgbImage.get_width_pixels(), CV_8UC4, (void *)rgbImage.get_buffer());
            cv::cvtColor(cv_rgbImage_with_alpha, cv_rgbImage_no_alpha, cv::COLOR_BGRA2BGR);

            // depth
            // * Each pixel of DEPTH16 data is two bytes of little endian unsigned depth data. The unit of the data is in
            // * millimeters from the origin of the camera.
            depthImage = capture.get_depth_image();
#if DEBUG_std_cout == 1
            std::cout << "[depth] " << "\n"
                << "format: " << depthImage.get_format() << "\n"
                << "device_timestamp: " << depthImage.get_device_timestamp().count() << "\n"
                << "system_timestamp: " << depthImage.get_system_timestamp().count() << "\n"
                << "height*width: " << depthImage.get_height_pixels() << ", " << depthImage.get_width_pixels()
                << std::endl;
#endif
            cv_depth = cv::Mat(depthImage.get_height_pixels(), depthImage.get_width_pixels(), CV_16U, (void *)depthImage.get_buffer(), static_cast<size_t>(depthImage.get_stride_bytes()));
            cv_depth.convertTo(cv_depth_8U, CV_8U, 1);

            // ir
            // * Each pixel of IR16 data is two bytes of little endian unsigned depth data. The value of the data represents
            // * brightness.
            irImage = capture.get_ir_image();
#if DEBUG_std_cout == 1
            std::cout << "[ir] " << "\n"
                << "format: " << irImage.get_format() << "\n"
                << "device_timestamp: " << irImage.get_device_timestamp().count() << "\n"
                << "system_timestamp: " << irImage.get_system_timestamp().count() << "\n"
                << "height*width: " << irImage.get_height_pixels() << ", " << irImage.get_width_pixels()
                << std::endl;
#endif
            cv_irImage = cv::Mat(irImage.get_height_pixels(), irImage.get_width_pixels(), CV_16U, (void *)irImage.get_buffer(), static_cast<size_t>(irImage.get_stride_bytes()));
            cv_irImage.convertTo(cv_irImage_8U, CV_8U, 1);

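            // show image
            cv::imshow("color", cv_rgbImage_no_alpha);
            cv::imshow("depth", cv_depth_8U);
            cv::imshow("ir", cv_irImage_8U);
            // Press Esc in an image window to leave the loop; without a break
            // the cleanup code after the loop is never reached.
            if (cv::waitKey(1) == 27)
            {
                break;
            }
            std::cout << "--- test ---" << std::endl;
        }
        else
        {
            std::cout << "false: K4A_WAIT_RESULT_TIMEOUT." << std::endl;
        }
    }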

    cv::destroyAllWindows();

    // Release resources and close the device
    rgbImage.reset();
    depthImage.reset();
    irImage.reset();
    capture.reset();
    device.close();

    // ---------------------------------------------------------------------------------------------------------
    /*
        Test
    */
    // Wait for input so the output above stays visible
    std::cout << "--------------------------------------------" << std::endl;
    std::cout << "Waiting for inputting an integer: ";
    int wd_wait;
    std::cin >> wd_wait;
    std::cout << "----------------------------------" << std::endl;
    std::cout << "------------- closed -------------" << std::endl;
    std::cout << "----------------------------------" << std::endl;
    return EXIT_SUCCESS;
}

(Screenshot of one run of the program.)
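One detail of the example above deserves a note: cv_depth.convertTo(cv_depth_8U, CV_8U, 1) uses a scale factor of 1, and convertTo saturates, so every depth value above 255 mm is displayed as 255. The following is a minimal standalone sketch (plain C++, no OpenCV or SDK required) of scaling depth into 0..255 over a chosen range instead. The function names and the 4000 mm range used in the usage note are illustrative assumptions, not part of the SDK or of the code above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Map one depth sample (millimeters) to 0..255 for display.
// max_depth_mm is an assumed display range chosen by the caller,
// not an SDK constant; deeper values are clamped to 255.
inline std::uint8_t depth_mm_to_gray(std::uint16_t depth_mm, std::uint16_t max_depth_mm)
{
    assert(max_depth_mm > 0);
    const std::uint32_t clamped = std::min<std::uint32_t>(depth_mm, max_depth_mm);
    // Integer division truncates toward zero.
    return static_cast<std::uint8_t>(clamped * 255u / max_depth_mm);
}

// Convert a whole depth frame stored as one uint16_t per pixel.
inline std::vector<std::uint8_t> depth_to_gray(const std::vector<std::uint16_t>& depth_mm,
                                               std::uint16_t max_depth_mm)
{
    std::vector<std::uint8_t> gray(depth_mm.size());
    for (std::size_t i = 0; i < depth_mm.size(); ++i)
    {
        gray[i] = depth_mm_to_gray(depth_mm[i], max_depth_mm);
    }
    return gray;
}
```

With OpenCV the same idea would be roughly cv_depth.convertTo(cv_depth_8U, CV_8U, 255.0 / 4000) for a 4000 mm display range; note that convertTo rounds to the nearest integer while this sketch truncates. The same scaling applies to the IR image, with a maximum chosen for its brightness range.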

Getting point cloud data

Adding the following code to the example above yields the xyz point cloud data. Note that the point cloud corresponds to the depth image and is in the same coordinate system.

1) The key here is defining and using the transformation and calibration objects:

// The usage below follows main.cpp of the transformation example in the GitHub repository
k4a::calibration k4aCalibration = device.get_calibration(config.depth_mode, config.color_resolution); // Get the camera calibration for the entire K4A device, which is used for all transformation functions.
k4a::transformation k4aTransformation = k4a::transformation(k4aCalibration);

2) The point cloud data can then be retrieved:

k4a::image xyzImage;
cv::Mat cv_xyzImage;     // 16-bit signed
cv::Mat cv_xyzImage_32F; // 32-bit float

// Point cloud
/*
    Each pixel of the xyz_image consists of three int16_t values, totaling 6 bytes. The three int16_t values are the X, Y, and Z values of the point.
    Three signed 16-bit coordinate values (in millimeters) are stored per pixel, so the XYZ image stride is width * 3 * sizeof(int16_t).
    The data is pixel-interleaved: X of pixel 0, Y of pixel 0, Z of pixel 0, X of pixel 1, and so on.
    If a pixel cannot be converted to 3D, the function assigns it the value [0,0,0].
*/
xyzImage = k4aTransformation.depth_image_to_point_cloud(depthImage, K4A_CALIBRATION_TYPE_DEPTH);
cv_xyzImage = cv::Mat(xyzImage.get_height_pixels(), xyzImage.get_width_pixels(), CV_16SC3, (void *)xyzImage.get_buffer(), static_cast<size_t>(xyzImage.get_stride_bytes()));
cv_xyzImage.convertTo(cv_xyzImage_32F, CV_32FC3, 1.0/1000, 0); // convert to float and change the unit from mm to m

3) Finally, add:

// Release
k4aTransformation.destroy();

The captured data can then be saved locally; an example is shown below:

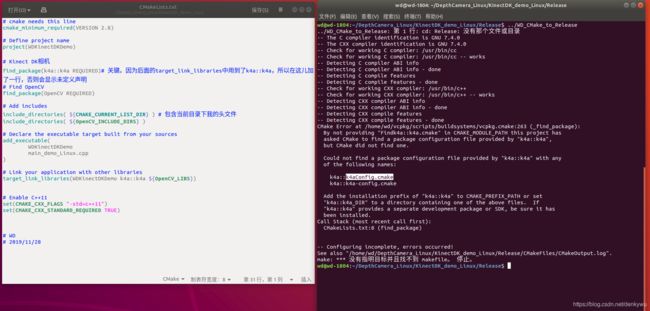
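As a rough illustration of one way to save the point cloud, the sketch below takes the pixel-interleaved int16 xyz values described above, drops the [0,0,0] entries, converts millimeters to meters, and writes the result as ASCII PLY. PointXYZ, xyz_mm_to_points, write_ply_ascii, and to_ply_string are hypothetical names introduced here. The ASCII PLY format is simply a choice for this example and is not necessarily how the data in the screenshot was saved.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

struct PointXYZ { float x, y, z; }; // coordinates in meters

// xyz_mm holds X0,Y0,Z0,X1,Y1,Z1,... in millimeters (pixel-interleaved).
// Pixels equal to [0,0,0] could not be converted to 3D and are skipped.
inline std::vector<PointXYZ> xyz_mm_to_points(const std::vector<std::int16_t>& xyz_mm)
{
    assert(xyz_mm.size() % 3 == 0);
    std::vector<PointXYZ> points;
    points.reserve(xyz_mm.size() / 3);
    for (std::size_t i = 0; i + 2 < xyz_mm.size(); i += 3)
    {
        const std::int16_t x = xyz_mm[i];
        const std::int16_t y = xyz_mm[i + 1];
        const std::int16_t z = xyz_mm[i + 2];
        if (x == 0 && y == 0 && z == 0)
        {
            continue; // invalid pixel
        }
        points.push_back(PointXYZ{x / 1000.0f, y / 1000.0f, z / 1000.0f});
    }
    return points;
}

// Write the points as an ASCII PLY document to any output stream.
inline void write_ply_ascii(std::ostream& out, const std::vector<PointXYZ>& points)
{
    out << "ply\n"
        << "format ascii 1.0\n"
        << "element vertex " << points.size() << "\n"
        << "property float x\n"
        << "property float y\n"
        << "property float z\n"
        << "end_header\n";
    for (const PointXYZ& p : points)
    {
        out << p.x << ' ' << p.y << ' ' << p.z << '\n';
    }
}

// Convenience wrapper that returns the PLY text as a string.
inline std::string to_ply_string(const std::vector<PointXYZ>& points)
{
    std::ostringstream oss;
    write_ply_ascii(oss, points);
    return oss.str();
}
```

In the real program the input vector would be filled from xyzImage.get_buffer() (width * height * 3 values, assuming the stride has no row padding), and write_ply_ascii would be given a std::ofstream opened on the target file.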

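CMakeLists

I use cmake to generate the makefile, which first requires writing a CMakeLists.txt.

1) I only half understand how to write CMakeLists.txt and still need to study it further;

2) So far I have not found a concrete example in Microsoft's official Kinect DK documentation or elsewhere online;

3) I referred to the Kinect DK GitHub repository (https://github.com/microsoft/Azure-Kinect-Sensor-SDK/blob/develop/docs/usage.md), which has the CMakeLists.txt used when building the SDK from source; in addition, each example (folder) under https://github.com/microsoft/Azure-Kinect-Sensor-SDK/tree/develop/examples has its own CMakeLists.txt.

Based on the above, plus some piecing together on my part, I ended up with the CMakeLists.txt example below. I have tested that it works: it compiles and links into an executable, and my code runs.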

# cmake needs this line
cmake_minimum_required(VERSION 2.8)

# Define project name
project(WDKinectDKDemo)

# Kinect DK camera
find_package(k4a REQUIRED) # Key line: target_link_libraries below uses k4a::k4a, so this is required; without it k4a::k4a is reported as undefined

# Find OpenCV
find_package(OpenCV REQUIRED)

# Add includes
include_directories( ${CMAKE_CURRENT_LIST_DIR} ) # my own headers in the current directory
include_directories( ${OpenCV_INCLUDE_DIRS} )

# Declare the executable target built from your sources
add_executable(
    WDKinectDKDemo
    main_demo_Linux.cpp
)

# Link your application with other libraries
target_link_libraries(WDKinectDKDemo k4a::k4a ${OpenCV_LIBS})

# Enable C++11
set(CMAKE_CXX_FLAGS "-std=c++11")
set(CMAKE_CXX_STANDARD_REQUIRED TRUE)

# WD
# 2019/11/29

The figures below show my attempts at writing CMakeLists.txt and the results:

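a) A failed attempt and its error message

b) A successful attempt: compiling and linking complete and the executable is generated

c) The k4a folder found on the local file system, including its .cmake files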

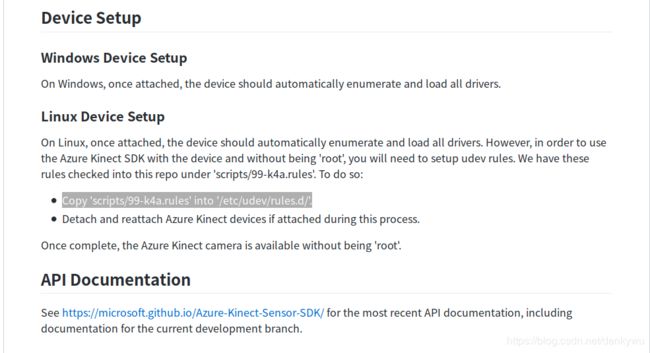

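Another possible problem: USB permissions

1. The problem

Compiling and linking are done and the executable has been generated.

Running the executable then fails with the following error message:

The error looks like a USB permission problem (I don't know much about Linux or USB permissions, so this is only a non-expert guess).

Then I remembered seeing the following in the installation instructions on GitHub (https://github.com/microsoft/Azure-Kinect-Sensor-SDK/blob/develop/docs/usage.md):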

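2. The solution

In the folder where the source code was downloaded, run the following command. Only the file "99-k4a.rules" from GitHub is actually needed; if you have just that file, run the command from the directory where it is stored and adjust the source path accordingly.

sudo cp scripts/99-k4a.rules /etc/udev/rules.d/

OK, problem solved. The same instructions also say to detach and reattach the device if it was connected while the rules were being copied.

The generated executable now runs normally.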
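If it is unclear whether the rules file is in place, a quick check from C++ is possible. The sketch below only tests whether a file can be opened for reading. The function names are hypothetical, and the default path simply combines the target directory and file name from the command above. It does not verify that udev has actually applied the rules.

```cpp
#include <cassert>
#include <fstream>
#include <string>

// Returns true if the file at `path` can be opened for reading.
inline bool file_is_readable(const std::string& path)
{
    assert(!path.empty());
    std::ifstream file(path.c_str());
    return file.good();
}

// Assumed install location: the directory and file name used in the
// `sudo cp` command above. Pass another path if your setup differs.
inline bool k4a_udev_rules_installed(
    const std::string& rules_path = "/etc/udev/rules.d/99-k4a.rules")
{
    return file_is_readable(rules_path);
}
```

A program could call k4a_udev_rules_installed() before opening the device and print a hint about the udev rules when it returns false, instead of failing with a less obvious USB error.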

[End of article]