基于Python3.6编写的jieba分词组件+Scikit-Learn库+朴素贝叶斯算法小型中文自动分类程序

实验主题:大规模数字化(中文)信息资源信息组织所包含的基本流程以及各个环节执行的任务。

基本流程:

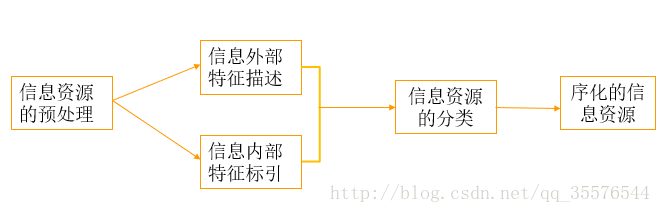

如下图所示,和信息资源信息组织的基本流程类似,大规模数字化(中文)信息资源组织的基本流程也如下:1信息资源的预处理、2信息外部特征描述、3信息内部特征标引、4信息资源的分类、5得到序化的信息资源

图1

1.1在信息资源预处理环节,首先要选择处理文本的范围,建立分类文本语料库:训练集语料(已经分好类的文本资源)和测试集语料(待分类的文本语料)。其次要转化文本格式,使用Python的lxml库去除html标签。最后监测句子边界,标记句子的结束。

1.2在信息外部特征描述环节,大规模数字化信息资源信息组织关注的不多,主要是对信息资源的内容、外部表现形式和物质形态(媒体类型、格式)的特征进行分析、描述、选择和记录的过程。信息外部特征序化的最终结果就是书目。

1.3在信息内部特征标引环节,指在分析信息内容基础上,用某种语言和标识符把信息的主题概念及其具有检索意义的特征标示出来,作为信息分析与检索的基础。而信息内部特征序化的结果就是代表主体概念的标引词集合。数字化信息组织更注重通过细粒度信息内容特征的语义逻辑和统计概率关系。技术上对应文本自动分类中建立向量空间模型和TF/IDF权重矩阵环节,也就是信息资源的自动标引。

1.4在信息资源分类环节,注重基于语料库的统计分类体系,在数据挖掘领域称作文档自动分类,主要任务是从文档数据集中提取描述文档的模型,并把数据集中的每个文档归入到某个已知的文档类中。常用的有朴素贝叶斯分类器和KNN分类器,在深度学习里还有卷积神经网络分类法。

1.5最后便得到了序化好的信息资源,接下来根据目的的不同有两种组织方法,一是基于资源(知识)导航、另一种是搜索引擎。大规模数字化(中文)信息资源信息组织主要是一种基于搜索引擎的信息组织,序化好的信息资源为数字图书馆搜索引擎建立倒排索引打下基础。

图22程序文件的目录层次结构

功能说明:

(1) html_demo.py程序对文本进行预处理,即用lxml去除html标签。

(2) corpus_segment.py程序利用jieba分词库对训练集和测试集分词。

(3) corpus2Bunch.py程序利用的是Scikit-Learn库中的Bunch数据结构将训练集和测试集语料库表示为变量,分别保存在train_word_bag/train_set.dat和test_word_bag/test_set.dat。

(4) TFIDF_space.py程序用于构建TF-IDF词向量空间,将训练集数据转换为TF-IDF词向量空间中的实例(去掉停用词),保存在train_word_bag/tfdifspace.dat,形成权重矩阵; 同时采用同样的训练步骤加载训练集词袋,将测试集产生的词向量映射到训练集词袋的词典中,生成向量空间模型文件test_word_bag/testspace.dat。

(5) NBayes_Predict.py程序采用多项式贝叶斯算法进行预测文本分类。

1.分词程序代码#!/usr/bin/env python

# -*- coding: UTF-8 -*-

import sys

import os

from importlib import reload

import jieba

# 配置utf-8输出环境

reload(sys)

# 保存至文件

def savefile(savepath, content):

with open(savepath, "w", encoding='utf-8', errors='ignore') as fp:

fp.write(content)

# 读取文件

def readfile(path):

with open(path, "r") as fp:

content = fp.read()

return content

def corpus_segment(corpus_path, seg_path):

# 获取每个目录(类别)下所有的文件

for mydir in catelist:

class_path = corpus_path + mydir + "/" # 拼出分类子目录的路径如:train_corpus/art/

seg_dir = seg_path + mydir + "/" # 拼出分词后存贮的对应目录路径如:train_corpus_seg/art/

if not os.path.exists(seg_dir): # 是否存在分词目录,如果没有则创建该目录

os.makedirs(seg_dir)

file_list = os.listdir(class_path) # 获取未分词语料库中某一类别中的所有文本

for file_path in file_list: # 遍历类别目录下的所有文件

fullname = class_path + file_path # 拼出文件名全路径如:train_corpus/art/21.txt

content = readfile(fullname) # 读取文件内容

content = content.replace("\r\n", "") # 删除换行

content = content.replace(" ", "")#删除空行、多余的空格

content_seg = jieba.cut(content) # 为文件内容分词

savefile(seg_dir + file_path, " ".join(content_seg)) # 将处理后的文件保存到分词后语料目录

print ("中文语料分词结束!!!")

if __name__=="__main__":

#对训练集进行分词

corpus_path = "C:/Users/JAdpp/Desktop/数据集/trainingdataset/" # 未分词分类语料库路径

seg_path = "C:/Users/JAdpp/Desktop/数据集/outdataset1/" # 分词后分类语料库路径

corpus_segment(corpus_path, seg_path)2.用Bunch数据结构将训练集和测试集转化为变量的程序代码

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

import sys

from importlib import reload

reload(sys)

import os#python内置的包,用于进行文件目录操作,我们将会用到os.listdir函数

import pickle#导入 pickle包

from sklearn.datasets.base import Bunch

def _readfile(path):

with open(path, "rb") as fp:

content = fp.read()

return content

def corpus2Bunch(wordbag_path,seg_path):

catelist = os.listdir(seg_path)# 获取seg_path下的所有子目录,也就是分类信息

#创建一个Bunch实例

bunch = Bunch(target_name=[], label=[], filenames=[], contents=[])

bunch.target_name.extend(catelist)

# 获取每个目录下所有的文件

for mydir in catelist:

class_path = seg_path + mydir + "/" # 拼出分类子目录的路径

file_list = os.listdir(class_path) # 获取class_path下的所有文件

for file_path in file_list: # 遍历类别目录下文件

fullname = class_path + file_path # 拼出文件名全路径

bunch.label.append(mydir)

bunch.filenames.append(fullname)

bunch.contents.append(_readfile(fullname)) # 读取文件内容

# 将bunch存储到wordbag_path路径中

with open(wordbag_path, "wb") as file_obj:

pickle.dump(bunch, file_obj)

print("构建文本对象结束!!!")

if __name__ == "__main__":

#对训练集进行Bunch化操作:

wordbag_path = "train_word_bag/train_set.dat" # Bunch存储路径

seg_path = "C:/Users/JAdpp/Desktop/数据集/outdataset1/" # 分词后分类语料库路径

corpus2Bunch(wordbag_path, seg_path)

# 对测试集进行Bunch化操作:

wordbag_path = "test_word_bag/test_set.dat" # Bunch存储路径

seg_path = "C:/Users/JAdpp/Desktop/数据集/experimentdataset/" # 分词后分类语料库路径

corpus2Bunch(wordbag_path, seg_path)3.建TF-IDF词向量空间,将训练集和测试集数据转换为TF-IDF词向量空间中的实例(去掉停用词)程序代码

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

import codecs

import sys

from importlib import reload

reload(sys)

from sklearn.datasets.base import Bunch

import pickle

from sklearn.feature_extraction.text import TfidfVectorizer

def _readfile(path):

with open(path, "rb") as fp:

content = fp.read()

return content

def _readbunchobj(path):

with open(path, "rb") as file_obj:

bunch = pickle.load(file_obj)

return bunch

def _writebunchobj(path, bunchobj):

with open(path, "wb") as file_obj:

pickle.dump(bunchobj, file_obj)

def vector_space(stopword_path,bunch_path,space_path,train_tfidf_path=None):

stpwrdlst = _readfile(stopword_path).splitlines()

bunch = _readbunchobj(bunch_path)

tfidfspace = Bunch(target_name=bunch.target_name, label=bunch.label, filenames=bunch.filenames, tdm=[], vocabulary={})

if train_tfidf_path is not None:

trainbunch = _readbunchobj(train_tfidf_path)

tfidfspace.vocabulary = trainbunch.vocabulary

vectorizer = TfidfVectorizer(stop_words=stpwrdlst, sublinear_tf=True, max_df=0.5,vocabulary=trainbunch.vocabulary)

tfidfspace.tdm = vectorizer.fit_transform(bunch.contents)

else:

vectorizer = TfidfVectorizer(stop_words=stpwrdlst, sublinear_tf=True, max_df=0.5)

tfidfspace.tdm = vectorizer.fit_transform(bunch.contents)

tfidfspace.vocabulary = vectorizer.vocabulary_

_writebunchobj(space_path, tfidfspace)

print("if-idf词向量空间实例创建成功!!!")

if __name__ == '__main__':

stopword_path = "train_word_bag/hlt_stop_words.txt"

bunch_path = "train_word_bag/train_set.dat"

space_path = "train_word_bag/tfdifspace.dat"

vector_space(stopword_path,bunch_path,space_path)

bunch_path = "test_word_bag/test_set.dat"

space_path = "test_word_bag/testspace.dat"

train_tfidf_path="train_word_bag/tfdifspace.dat"

vector_space(stopword_path,bunch_path,space_path,train_tfidf_path)4.用多项式贝叶斯算法预测测试集文本分类情况

#!/usr/bin/env python

# -*- coding: UTF-8 -*-

import sys

from importlib import reload

reload(sys)

import pickle

from sklearn.naive_bayes import MultinomialNB # 导入多项式贝叶斯算法

# 读取bunch对象

def _readbunchobj(path):

with open(path, "rb") as file_obj:

bunch = pickle.load(file_obj)

return bunch

# 导入训练集

trainpath = "train_word_bag/tfdifspace.dat"

train_set = _readbunchobj(trainpath)

# 导入测试集

testpath = "test_word_bag/testspace.dat"

test_set = _readbunchobj(testpath)

# 训练分类器:输入词袋向量和分类标签,alpha:0.001 alpha越小,迭代次数越多,精度越高

clf = MultinomialNB(alpha=0.001).fit(train_set.tdm, train_set.label)

# 预测分类结果

predicted = clf.predict(test_set.tdm)

for flabel,file_name,expct_cate in zip(test_set.label,test_set.filenames,predicted):

if flabel != expct_cate:

print(file_name,": 实际类别:",flabel," -->预测类别:",expct_cate)

print("预测完毕!!!")

# 计算分类精度:

from sklearn import metrics

def metrics_result(actual, predict):

print('精度:{0:.3f}'.format(metrics.precision_score(actual, predict,average='weighted')))

print('召回:{0:0.3f}'.format(metrics.recall_score(actual, predict,average='weighted')))

print('f1-score:{0:.3f}'.format(metrics.f1_score(actual, predict,average='weighted')))

metrics_result(test_set.label, predicted)5.分类结果

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file01.txt : 实际类别: experimentdataset -->预测类别: class5

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file02.txt : 实际类别: experimentdataset -->预测类别: class7

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file03.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file04.txt : 实际类别: experimentdataset -->预测类别: class7

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file05.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file06.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file07.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file08.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file09.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file10.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file11.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file12.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file13.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file14.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file15.txt : 实际类别: experimentdataset -->预测类别: class5

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file16.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file17.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file18.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file19.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file20.txt : 实际类别: experimentdataset -->预测类别: class7

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file21.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file22.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file23.txt : 实际类别: experimentdataset -->预测类别: class7

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file24.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file25.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file26.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file27.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file28.txt : 实际类别: experimentdataset -->预测类别: class5

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file29.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file30.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file31.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file32.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file33.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file34.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file35.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file36.txt : 实际类别: experimentdataset -->预测类别: class7

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file37.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file38.txt : 实际类别: experimentdataset -->预测类别: class7

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file39.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file40.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file41.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file42.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file43.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file44.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file45.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file46.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file47.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file48.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file49.txt : 实际类别: experimentdataset -->预测类别: class5

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file50.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file51.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file52.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file53.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file54.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file55.txt : 实际类别: experimentdataset -->预测类别: class7

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file56.txt : 实际类别: experimentdataset -->预测类别: class5

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file57.txt : 实际类别: experimentdataset -->预测类别: class8

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file58.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file59.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file60.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file61.txt : 实际类别: experimentdataset -->预测类别: class2

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file62.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file63.txt : 实际类别: experimentdataset -->预测类别: class3

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file64.txt : 实际类别: experimentdataset -->预测类别: class5

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file65.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file66.txt : 实际类别: experimentdataset -->预测类别: class5

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file67.txt : 实际类别: experimentdataset -->预测类别: class4

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file68.txt : 实际类别: experimentdataset -->预测类别: class1

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file69.txt : 实际类别: experimentdataset -->预测类别: class6

C:/Users/JAdpp/Desktop/数据集/experimentdataset/experimentdataset/file70.txt : 实际类别: experimentdataset -->预测类别: class7

预测完毕!!!

总结:

遇到的问题主要有Python2.7和Python3的语法问题,举例来说,参考代码由于是Python2.7编写的,所以在设置utf-8 unicode环境时需要写“sys.setdefaultencoding('utf-8')”代码,而在Python3字符串默认编码unicode,所以sys.setdefaultencoding也不存在了,需要删除这行。还有print在Python3以后由语句变成了函数,所以需要加上括号。分词环节参考了jieba组件作者在github上的官方教程和说明文档,Scikit-Learn库则参考了官方的技术文档。程序运行成功,但遗留一个小bug,在于由于数据集结构问题,训练集和测试集分类数不同,所以不能评估分类评估结果,召回率、准确率和F-Score。