Fixing response.status_code 418 when scraping with Python

Problem 1: A first fix for response status 418

Scraping the Douban movie site with Python:

```python
import requests

url = 'https://movie.douban.com/top250?start=0&filter='

res = requests.get(url)
print(res.status_code)
```

Response status: 418

A normal response should return status 200.

Reference links used to solve the problem:

- Collection of requests status codes: https://www.cnblogs.com/yitiaodahe/p/9216387.html

In that collection, 418 is listed as `418: ('im_a_teapot', 'teapot', 'i_am_a_teapot')`.

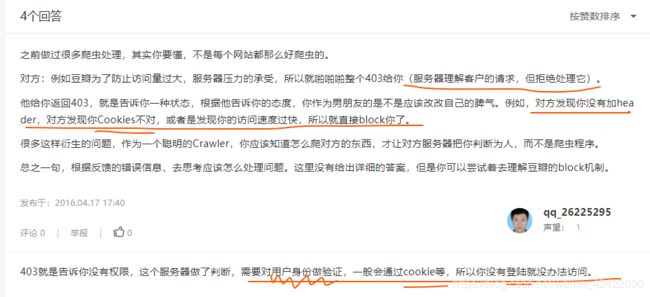
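To check codes like these in code rather than by eye, here is a minimal sketch using only the standard library: `http.HTTPStatus` holds the same table of codes and reason phrases (418 is `HTTPStatus.IM_A_TEAPOT`, available from Python 3.9). `describe_status` and `is_success` are hypothetical helper names used for illustration.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Return 'code phrase' for an HTTP status code, e.g. '200 OK'."""
    try:
        return f"{code} {HTTPStatus(code).phrase}"
    except ValueError:
        # HTTPStatus raises ValueError for codes it does not know
        return f"{code} (unknown status)"


def is_success(code: int) -> bool:
    """2xx means success; 4xx codes such as 403 and 418 mean the server rejected the request."""
    return 200 <= code < 300


for code in (200, 403, 418):
    print(describe_status(code), "-> success" if is_success(code) else "-> rejected")
```

With requests itself, `res.ok` is True for any status below 400, and `requests.codes.teapot` equals 418, so `res.status_code == requests.codes.teapot` flags this particular rejection.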

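What does 418 mean?

https://blog.csdn.net/weixin_43902320/article/details/104342771

It means the site you are scraping has an anti-scraping mechanism: it recognizes that the request comes from a script rather than a browser and refuses it. To get a normal response, we need to add request headers (headers) to our request.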

Why add request headers (headers), and how?

https://blog.csdn.net/ysblogs/article/details/88530124?depth_1-utm_source=distribute.pc_relevant.none-task&utm_source=distribute.pc_relevant.none-task

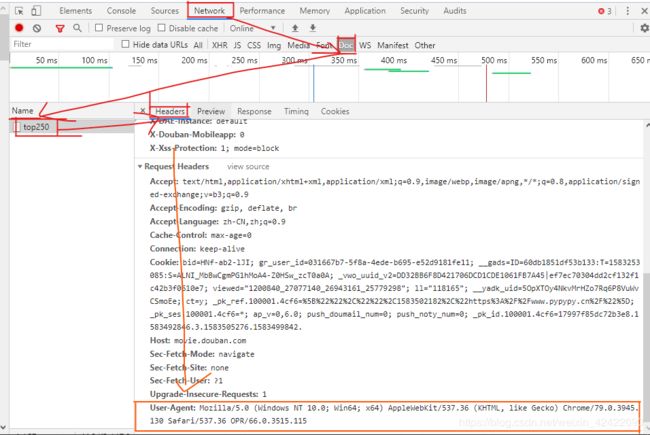

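Right-click the page and choose "Inspect Element", then find the headers as shown in the figure:

- Network
- Doc (if this row does not appear, press F5 to refresh first)
- Under Name, click the HTML of the page you are viewing (or press F5 to refresh again)
- Under Headers, find User-Agent and copy everything in the highlighted box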

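How do you use the User-Agent?

[Write the copied content as a dictionary: the User-Agent before the colon is the key, and everything after the colon, written as a string (remember the quotes), is the value.]

```python
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36 OPR/66.0.3515.115'}
```

Then pass it to requests.get(url) as the keyword argument headers=headers. It has to be passed by keyword: the second positional parameter of requests.get is params, not headers.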
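If you copy several header lines from DevTools at once, converting them to a dictionary by hand gets tedious. Below is a minimal sketch of a hypothetical helper, `headers_from_devtools`, that applies the same rule described above: the part before the first colon becomes the key and the rest becomes the value. Chrome may also list HTTP/2 pseudo-headers such as `:authority`; they start with a colon and are skipped here.

```python
def headers_from_devtools(raw: str) -> dict:
    """Turn 'Name: value' lines copied from DevTools into a headers dict."""
    headers = {}
    for line in raw.splitlines():
        line = line.strip()
        # Skip blank lines, lines without a colon, and pseudo-headers like ':authority'
        if not line or ":" not in line or line.startswith(":"):
            continue
        # Split on the first colon only: values such as URLs contain colons too
        name, value = line.split(":", 1)
        headers[name.strip()] = value.strip()
    return headers


copied = """
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36 OPR/66.0.3515.115
"""
headers = headers_from_devtools(copied)
print(headers)
```

The result is used exactly like the hand-written dictionary: `requests.get(url, headers=headers)`.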

Improved code:

```python
import requests

url = 'https://movie.douban.com/top250?start=0&filter='

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36 OPR/66.0.3515.115'}

res = requests.get(url, headers=headers)
print(res.status_code)
```
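To see the mechanism end to end without hitting Douban, here is a self-contained sketch of the two versions of the code above. It rests on stated assumptions: the local server's rule (answer 418 unless the User-Agent starts with `Mozilla/`) is invented for illustration and is not Douban's actual logic, and it uses the standard library's urllib instead of requests only so it runs without third-party packages. Without a headers argument, urllib identifies itself as `Python-urllib/<version>` (requests does the same with `python-requests/<version>`), which is exactly what such a rule rejects. `PickyHandler` and `fetch_status` are hypothetical names.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class PickyHandler(BaseHTTPRequestHandler):
    """Toy server: answers 418 unless the User-Agent looks like a browser (assumed rule)."""

    def do_GET(self):
        ua = self.headers.get("User-Agent", "")
        status = 200 if ua.startswith("Mozilla/") else 418
        self.send_response(status)
        self.end_headers()
        self.wfile.write(b"ok" if status == 200 else b"no scrapers")

    def log_message(self, *args):
        pass  # keep the console quiet


def fetch_status(url, headers=None):
    """GET url and return its status code, including 4xx codes that urllib raises as errors."""
    req = urllib.request.Request(url, headers=headers or {})
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # no proxy for localhost
    try:
        with opener.open(req, timeout=5) as res:
            return res.status
    except urllib.error.HTTPError as err:
        return err.code


server = HTTPServer(("127.0.0.1", 0), PickyHandler)  # port 0 picks any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/top250"

browser_headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"}
print(fetch_status(url))                   # default Python-urllib User-Agent
print(fetch_status(url, browser_headers))  # browser-like User-Agent
server.shutdown()
server.server_close()
```

The first call gets 418 and the second 200; in requests terms, the second call corresponds to `requests.get(url, headers=headers)`.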

Result of running this code in an online course's web interface:

403

Result of running it in the PyCharm IDE:

200

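So how do we fix the error in the course's interface?

We added request headers, yet the response status is 403. Let's keep looking for the cause and a fix.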

Problem 2: Further fixing the 403 status

First attempt: add the Cookie copied from the browser to headers, next to the User-Agent:

```python
import requests

url = 'https://movie.douban.com/top250?start=0&filter='

headers = {'Cookie': 'bid=HNf-ab2-lJI; gr_user_id=031667b7-5f8a-4ede-b695-e52d9181fe11; __gads=ID=60db1851df53b133:T=1583253085:S=ALNI_MbBwCgmPG1hMoA4-Z0HSw_zcT0a0A; _vwo_uuid_v2=DD32BB6F8D421706DCD1CDE1061FB7A45|ef7ec70304dd2cf132f1c42b3f0610e7; viewed="1200840_27077140_26943161_25779298"; ll="118165"; __yadk_uid=5OpXTOy4NkvMrHZo7Rq6P8VuWvCSmoEe; ct=y; _pk_ref.100001.4cf6=%5B%22%22%2C%22%22%2C1583502182%2C%22https%3A%2F%2Fwww.pypypy.cn%2F%22%5D; _pk_ses.100001.4cf6=*; ap_v=0,6.0; push_doumail_num=0; push_noty_num=0; _pk_id.100001.4cf6=17997f85dc72b3e8.1583492846.3.1583505276.1583499842.',
           'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36 OPR/66.0.3515.115'}

res = requests.get(url, headers=headers)
print(res.status_code)
```

Result:

403

Still no luck!

- So log in to Douban first, refresh the page (F5), and copy the Cookie again:

```python
import requests

url = 'https://movie.douban.com/top250?start=0&filter='

headers = {'Cookie': 'bid=HNf-ab2-lJI; gr_user_id=031667b7-5f8a-4ede-b695-e52d9181fe11; __gads=ID=60db1851df53b133:T=1583253085:S=ALNI_MbBwCgmPG1hMoA4-Z0HSw_zcT0a0A; _vwo_uuid_v2=DD32BB6F8D421706DCD1CDE1061FB7A45|ef7ec70304dd2cf132f1c42b3f0610e7; viewed="1200840_27077140_26943161_25779298"; ll="118165"; __yadk_uid=5OpXTOy4NkvMrHZo7Rq6P8VuWvCSmoEe; ct=y; ap_v=0,6.0; push_doumail_num=0; push_noty_num=0; _pk_ref.100001.4cf6=%5B%22%22%2C%22%22%2C1583508410%2C%22https%3A%2F%2Fwww.pypypy.cn%2F%22%5D; _pk_ses.100001.4cf6=*; dbcl2="131182631:x7xeSw+G5a8"; ck=9iia; _pk_id.100001.4cf6=17997f85dc72b3e8.1583492846.4.1583508446.1583505298.',
           'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36 OPR/66.0.3515.115'}

res = requests.get(url, headers=headers)
print(res.status_code)
```

Result:

200

Now the page can be scraped!
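Comparing the Cookie strings in the failing and the working versions above, the working one contains exactly two names the first lacks: `dbcl2` and `ck`. That suggests, as an inference from this comparison rather than anything Douban documents, that these carry the login state. Below is a minimal sketch of a hypothetical helper, `parse_cookie_header`, that splits a copied Cookie string into a dict so two copies can be compared; the cookie strings in it are shortened excerpts with placeholder values.

```python
def parse_cookie_header(raw: str) -> dict:
    """Split a copied 'Cookie' header ('a=1; b=2') into a {name: value} dict."""
    cookies = {}
    for part in raw.split(";"):
        # Split on the first '=' only: values such as __gads contain '=' themselves
        name, sep, value = part.strip().partition("=")
        if sep:  # skip empty or malformed pieces
            cookies[name] = value
    return cookies


# Shortened excerpts of the two Cookie strings above (placeholder values)
before_login = 'bid=xxx; ll="118165"; ct=y; ap_v=0,6.0'
after_login = 'bid=xxx; ll="118165"; ct=y; ap_v=0,6.0; dbcl2="xxx"; ck=xxx'

new_names = parse_cookie_header(after_login).keys() - parse_cookie_header(before_login).keys()
print(sorted(new_names))
```

requests can also take such a dictionary directly through its cookies parameter, `requests.get(url, headers=headers, cookies=parse_cookie_header(raw))`, instead of a 'Cookie' entry in headers.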

---

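>> Going further: implementing site login

- Scraping pages that are only visible after logging in (the web version requires logging in first)

There are two ways for a scraper to log in to a site and scrape the pages behind the login: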

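1. Add the post-login Cookie, as described above. This is the simplest method, but it has limitations:

After you log in, the cookie does not change as long as you do not log out of the current account (even if you close the browser); if you log out, the cookie will be different the next time you log in.

It only works on this computer; on a different computer, this Cookie is invalid.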
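Because the copied Cookie changes whenever you log out and back in, one option (not part of the original method) is to keep it out of the script, for example in an environment variable, so that only that value needs refreshing. A minimal sketch; `DOUBAN_COOKIE` and `cookie_header_from_env` are hypothetical names.

```python
import os


def cookie_header_from_env(var: str = "DOUBAN_COOKIE") -> dict:
    """Return {'Cookie': ...} read from an environment variable (hypothetical name)."""
    cookie = os.environ.get(var, "").strip()
    if not cookie:
        # Fail loudly instead of silently sending a request that ends in 403
        raise RuntimeError(f"Set {var} to the Cookie header copied from the browser after logging in")
    return {"Cookie": cookie}


headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36 OPR/66.0.3515.115"}
try:
    headers.update(cookie_header_from_env())
except RuntimeError as err:
    print(err)
```

After logging in again, you update the variable (for example `export DOUBAN_COOKIE='bid=...; dbcl2=...'` in a Unix shell) and the script itself stays unchanged.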

2. requests.Session() plus a submitted POST request:

Code (using Douban as an example):

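```python
import requests

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36 OPR/66.0.3515.115'}

# Your account name and password. Note: entering them here does not mean you can skip logging in on the web page first
data = {'user_name': '********', 'password': '********'}

# Login entry URL: right-click the page, open Elements, and look for the link containing the account login
url_login = 'https://accounts.douban.com/passport/login_popup?login_source=anony'

# A Session keeps the cookies the server sets and sends them with every later request
session = requests.Session()
session.post(url_login, headers=headers, data=data)

# The Douban page to open after logging in
url = 'https://www.douban.com'
res = session.get(url, headers=headers)

# 200 only means the page loaded; check res.text (e.g. for your user name) to confirm you are logged in
print(res.status_code)
```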

Status code after running:

200
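The session approach can be tried out locally without a Douban account. Below is a minimal, self-contained sketch under stated assumptions: a toy server with hypothetical paths `/login` and `/home`, demo credentials `demo`/`secret`, and a cookie named `session` stands in for the real site (Douban's actual login flow is not reproduced here). It uses the standard library instead of requests: `urllib.request` with an `http.cookiejar.CookieJar` plays the role of `requests.Session()`, storing the cookie set by the login response and sending it back on later requests. The form field names `user_name` and `password` are taken from the code above; `LoginHandler` and `open_status` are hypothetical names.

```python
import threading
import urllib.error
import urllib.parse
import urllib.request
from http.cookiejar import CookieJar
from http.server import BaseHTTPRequestHandler, HTTPServer


class LoginHandler(BaseHTTPRequestHandler):
    """Toy site (assumed behaviour): POST /login sets a cookie, GET /home requires it."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        form = urllib.parse.parse_qs(self.rfile.read(length).decode())
        ok = form.get("user_name") == ["demo"] and form.get("password") == ["secret"]
        self.send_response(200 if ok else 403)
        if ok:
            self.send_header("Set-Cookie", "session=abc123; Path=/")
        self.end_headers()

    def do_GET(self):
        logged_in = "session=abc123" in self.headers.get("Cookie", "")
        self.send_response(200 if logged_in else 403)
        self.end_headers()

    def log_message(self, *args):
        pass  # keep the console quiet


def open_status(opener, url, data=None):
    """Send a GET (or a form POST when data is given) and return the status code."""
    body = urllib.parse.urlencode(data).encode() if data is not None else None
    try:
        with opener.open(url, data=body, timeout=5) as res:
            return res.status
    except urllib.error.HTTPError as err:
        return err.code


server = HTTPServer(("127.0.0.1", 0), LoginHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f"http://127.0.0.1:{server.server_address[1]}"

# The cookie jar stores Set-Cookie values and sends them back automatically,
# which is what requests.Session() does for you
opener = urllib.request.build_opener(
    urllib.request.ProxyHandler({}),  # talk to localhost directly
    urllib.request.HTTPCookieProcessor(CookieJar()),
)

print(open_status(opener, base + "/home"))  # not logged in yet
print(open_status(opener, base + "/login", {"user_name": "demo", "password": "secret"}))
print(open_status(opener, base + "/home"))  # the login cookie is now sent along
server.shutdown()
server.server_close()
```

The same /home request is rejected before the login and accepted after it, because the login response put its cookie in the jar. With requests, `session.post(...)` followed by `session.get(...)` relies on the same cookie persistence.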

For more detail on what Headers contain, see:

(Repost) Python Scraping: HTTP Request Headers (HEADER) Explained