【Machine Learning-4】Neural Network Algorithm: NeuralNetwork

I. NeuralNetwork

import numpy as np


def tanh(x):
    return np.tanh(x)


def tanh_deriv(x):
    return 1.0 - np.tanh(x) * np.tanh(x)


def logistic(x):
    return 1 / (1 + np.exp(-x))


def logistic_derivative(x):
    return logistic(x) * (1 - logistic(x))

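class NeuralNetwork:
    def __init__(self, layers, activation='tanh'):
        """
        :param layers: a list with the number of neurons in each layer, e.g. [ , , , ];
            each value is the neuron count of one layer. There must be at least
            two layers, not counting the input layer.
        :param activation: the activation function, either 'tanh' or 'logistic';
            defaults to 'tanh' when not specified.
        """
        # __init__ is the constructor, called when the class is instantiated
        # self is the equivalent of `this` in Java
        if activation == 'logistic':
            self.activation = logistic
            self.activation_deriv = logistic_derivative
        elif activation == 'tanh':
            self.activation = tanh
            self.activation_deriv = tanh_deriv
        else:
            raise ValueError("activation must be 'tanh' or 'logistic'")

        # Initialise the weights
        self.weights = []
        # If layers is the list [10, 10, 3], then len(layers) == 3
        # Every layer except the output layer gets randomly generated weights
        for i in range(1, len(layers) - 1):
            # np.random.random draws NumPy random floats in [0, 1)
            # Starting from the second layer, weight the incoming connections
            # (the +1 terms are the bias units); weights lie in [-0.25, 0.25]
            self.weights.append((2 * np.random.random((layers[i - 1] + 1, layers[i] + 1)) - 1) * 0.25)
        # Outgoing connections from the last hidden layer to the output layer
        self.weights.append((2 * np.random.random((layers[i] + 1, layers[i + 1])) - 1) * 0.25)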

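    # Method that trains the neural network
    def fit(self, X, y, learning_rate=0.2, epochs=10000):
        # X: the dataset, forced to 2-D; each row is one instance with its feature values
        X = np.atleast_2d(X)
        # np.ones creates a matrix of the given shape filled with 1s
        # X.shape is a tuple (rows, columns)
        # X.shape[0] is the row count; X.shape[1] + 1 adds one column whose value 1 is the bias input
        temp = np.ones([X.shape[0], X.shape[1] + 1])
        # ":" selects all rows
        # "0:-1" selects the first column up to, but not including, the last column (-1)
        temp[:, 0:-1] = X
        X = temp
        # y: the class labels
        y = np.array(y)

        # k is the index of the current iteration
        for k in range(epochs):
            # Pick a random row index in [0, X.shape[0]) as the training instance
            i = np.random.randint(X.shape[0])
            a = [X[i]]

            # Forward pass; len(self.weights) is the number of layers minus one
            for l in range(len(self.weights)):
                # np.dot(x, y) is equivalent to x.dot(y)
                # Dot a with the weights, then apply the non-linear activation to get the next layer
                # a starts with the input layer and grows by one entry per layer
                a.append(self.activation(np.dot(a[l], self.weights[l])))

            # Error: y[i] is the true label, a[-1] is the predicted class label
            error = y[i] - a[-1]
            # Delta of the output layer, computed from the current output values
            deltas = [error * self.activation_deriv(a[-1])]

            # Backward pass
            # len(a) is the number of layers; the first and last layers are skipped
            # Go from the last hidden layer down to layer 1, stepping by -1
            for l in range(len(a) - 2, 0, -1):
                deltas.append(deltas[-1].dot(self.weights[l].T) * self.activation_deriv(a[l]))
            # reverse puts deltas back into layer order
            deltas.reverse()

            # j is used here so the sample index i is not shadowed
            for j in range(len(self.weights)):
                layer = np.atleast_2d(a[j])
                # delta is the error term that drives the weight update
                delta = np.atleast_2d(deltas[j])
                # layer.T.dot(delta): product of the layer values and the error terms
                self.weights[j] += learning_rate * layer.T.dot(delta)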

    def predict(self, x):
        x = np.array(x)
        # One extra element, left at 1, for the bias input
        temp = np.ones(x.shape[0] + 1)
        # Fill every element except the last with x
        temp[0:-1] = x
        a = temp
        for l in range(0, len(self.weights)):
            a = self.activation(np.dot(a, self.weights[l]))
        return a
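To see how the bias column and the weight shapes line up, the initialisation and a single forward pass can be rebuilt outside the class. This is only a sketch: the helper names `init_weights` and `forward` are made up for illustration, and it assumes the last weight matrix connects the final hidden layer to the output layer.

```python
import numpy as np


def init_weights(layers, rng):
    """Illustrative helper: build weight matrices with the same shapes as the class."""
    weights = []
    # Hidden layers: +1 on both sides for the bias unit
    for i in range(1, len(layers) - 1):
        weights.append((2 * rng.random((layers[i - 1] + 1, layers[i] + 1)) - 1) * 0.25)
    # Output layer: bias on the incoming side only
    weights.append((2 * rng.random((layers[-2] + 1, layers[-1])) - 1) * 0.25)
    return weights


def forward(x, weights):
    """Illustrative helper: append the bias input 1 and propagate through tanh layers."""
    a = np.append(np.asarray(x, dtype=float), 1.0)
    for w in weights:
        a = np.tanh(a @ w)
    return a


rng = np.random.default_rng(0)
weights = init_weights([2, 2, 1], rng)
shapes = [w.shape for w in weights]
print(shapes)      # [(3, 3), (3, 1)]
out = forward([0, 1], weights)
print(out.shape)   # (1,)
```

For a `[2, 2, 1]` network the input vector therefore has three elements (two features plus the bias), and the output is a one-element array.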

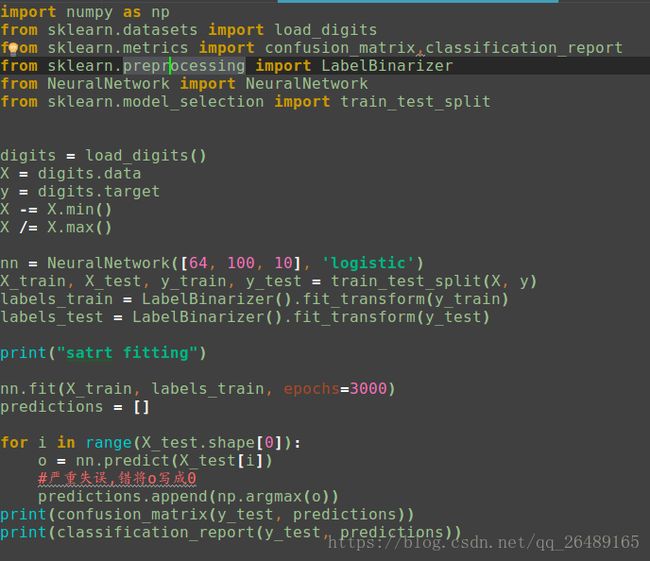
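The two derivative formulas used by the class can be sanity-checked against a central finite difference. The sketch below redefines the functions so that it runs on its own; `numeric_deriv`, the step size and the tolerance are choices made here, not part of the original code.

```python
import numpy as np


def tanh_deriv(x):
    return 1.0 - np.tanh(x) * np.tanh(x)


def logistic(x):
    return 1 / (1 + np.exp(-x))


def logistic_derivative(x):
    return logistic(x) * (1 - logistic(x))


def numeric_deriv(f, x, h=1e-5):
    """Central finite-difference approximation of f'(x) (illustrative helper)."""
    return (f(x + h) - f(x - h)) / (2 * h)


x = np.linspace(-3, 3, 13)
tanh_err = np.max(np.abs(tanh_deriv(x) - numeric_deriv(np.tanh, x)))
logistic_err = np.max(np.abs(logistic_derivative(x) - numeric_deriv(logistic, x)))
print(tanh_err < 1e-8, logistic_err < 1e-8)  # True True
```

Both formulas are derivatives with respect to the function's input x. Note that `fit` passes the stored layer outputs `a[l]` into these functions rather than the pre-activation sums; the check here covers only the formulas themselves.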

二、TestOXR(异或)

#从自定义的NeuralNetwork包中导入NeuralNetwork模块

from NeuralNetwork import NeuralNetwork

import numpy as np

nn=NeuralNetwork([2,2,1],'tanh')

X=np.array([[0,0],[1,0],[0,1],[1,1]])

y=np.array([0,1,1,0])

nn.fit(X,y)

for i in [[0,0],[1,0],[0,1],[1,1]]:

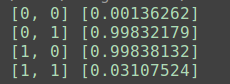

print(i,nn.predict(i))运行结果:

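1. TypeError: object() takes no parameters

Cause: in

def __init__(self,layers,activation='tanh'):

__init__ was typed as _init_. Look closely: the hand-typed version with single underscores is visibly shorter than the correct one.

Fix: spell __init__ correctly, with two underscores on each side.

2. AttributeError: 'NeuralNetwork' object has no attribute 'fit'

Cause:

def fit(self,X,y,learning_rate=0.2,epochs=10000):

was indented as a nested definition inside def __init__(self,layers,activation='tanh'):, so fit could not be found.

Fix: def fit and def __init__ are two definitions at the same level.

3. Pay particular attention to the definition self.weights =[]; it is very easy to mistype weights as weight.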

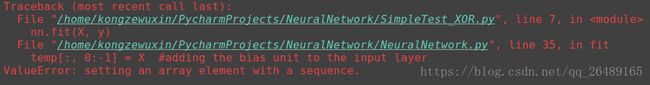

4. ValueError: setting an array element with a sequence.

Cause:

X = np.array([[0, 0], [0, 1], [1.0], [1, 1]])

A comma was typed as a period: [1.0] should be [1, 0].

III. Handwritten digits (HandwrittenDigitsRecognition)

Run results:

【bug-01】

/home/kongzewuxin/anaconda3/lib/python3.5/site-packages/sklearn/cross_validation.py:41: DeprecationWarning: This module was deprecated in version 0.18 in favor of the model_selection module into which all the refactored classes and functions are moved. Also note that the interface of the new CV iterators are different from that of this module. This module will be removed in 0.20.

"This module will be removed in 0.20.", DeprecationWarning)

Fix: change from sklearn.cross_validation import train_test_split to

from sklearn.model_selection import train_test_split
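If the same script has to run on both old and new scikit-learn installations, one option is to try the new module first and fall back to the old one. The sketch below is an assumption about how that could be arranged, not something from the original post; `load_train_test_split` is a hypothetical helper name, and it returns None when neither module can be imported.

```python
import importlib


def load_train_test_split():
    """Return train_test_split from whichever scikit-learn module is importable, else None."""
    for module_name in ("sklearn.model_selection", "sklearn.cross_validation"):
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            # Module (or scikit-learn itself) is missing; try the next candidate
            continue
        return getattr(module, "train_test_split", None)
    return None


train_test_split = load_train_test_split()
print("train_test_split available:", train_test_split is not None)
```

On a current installation the first candidate succeeds, so the deprecated module is never imported and the warning does not appear.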

【bug-02】

UndefinedMetricWarning: Precision are ill-defined and being set to 0.0 in labels with no predicted samples.

'precision', 'predicted', average, warn_for)

Cause analysis: in the following code

for i in range(X_test.shape[0]):
    o = nn.predict(X_test[i])
    # Serious slip: the letter o was mistyped as the digit 0
    predictions.append(np.argmax(o))
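A small sketch of why this typo produces the warning: `np.argmax(0)` treats the scalar 0 as a one-element array and always returns index 0, so every test sample is predicted as class 0 and the other labels receive no predicted samples. The output vector `o` below is a made-up example, not data from the actual run.

```python
import numpy as np

# Hypothetical network output for one test sample (illustration only)
o = np.array([0.1, 0.05, 0.8, 0.05])

wrong = np.argmax(0)   # digit zero: always index 0, whatever the network returned
right = np.argmax(o)   # letter o: index of the largest output
print(wrong, right)    # 0 2
```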

Further reference: http://www.cnblogs.com/albert-yzp/p/9534891.html

Reference: https://blog.csdn.net/akadiao/article/details/78040405