PyTorch Check-in Day 11: The Visualization Tool TensorBoard

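Contents

- Task
- Task overview
- Details
- Key points
- TensorBoard introduction and installation
- Using TensorBoard
- SummaryWriter
- SummaryWriter methods
- 1. add_scalar()
- 2. add_scalars()
- 3. add_histogram()
- 4. add_image()
- 5. add_graph()
- torchvision.utils.make_grid
- torchsummary (very useful)
- Code
- 1. TensorBoard methods: all code in one script
- 2. Visualizing the Loss and Accuracy curves of any network during training, with Train and Valid in the same chart
- 3. Using make_grid to batch-visualize image inputs for training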

Task

TensorBoard can visualize Scalars, Images, Audio, Graphs, Distributions, Histograms, Embeddings, Text and other kinds of data.

Task overview

- Learn how to use scalar and histogram in TensorBoard;
- Learn how to use Image in TensorBoard together with PyTorch's make_grid.

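Details

- Part 1

  Learn the basic attributes of TensorBoard's SummaryWriter class, then how to use add_scalar, add_scalars and add_histogram. Finally, use these functions to monitor and compare the Loss and Accuracy curves during model training, and to visualize the distributions of the parameters and their gradients.

- Part 2

  Learn TensorBoard's add_image method and PyTorch's make_grid function, which builds a grid image for visualizing a batch of images. Finally, use these functions to visualize and analyze the convolution kernels and feature maps of the AlexNet network.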

Key points

TensorBoard introduction and installation

- from torch.utils.tensorboard import SummaryWriter
- TensorBoard: a powerful visualization tool from TensorFlow
- Install: pip install future ; pip install tensorboard
- (Using a mirror: pip install tensorboard -i https://pypi.douban.com/simple)
- Running a script that uses SummaryWriter produces an event file
- In a terminal, run: tensorboard --logdir=./runs
- Open the local address it prints, e.g.: TensorBoard 2.3.0 at http://localhost:6006/ (Press CTRL+C to quit)

# -*- coding:utf-8 -*-
"""
@brief : check that TensorBoard works
"""
import numpy as np
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter(comment='test_tensorboard')

for x in range(100):
    writer.add_scalar('y=2x', x * 2, x)
    writer.add_scalar('y=pow(2, x)', 2 ** x, x)
    writer.add_scalars('data/scalar_group', {"xsinx": x * np.sin(x),
                                             "xcosx": x * np.cos(x),
                                             "arctanx": np.arctan(x)}, x)
writer.close()

Using TensorBoard

# -*- coding:utf-8 -*-
"""
@brief : how to use tensorboard
"""
import os
import torch
import time
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.utils as vutils
from tools.my_dataset import RMBDataset          # local module of the project
from torch.utils.tensorboard import SummaryWriter
from torch.utils.data import DataLoader
from tools.common_tools2 import set_seed          # local module of the project
from model.lenet import LeNet                     # local module of the project
import matplotlib.pyplot as plt
import numpy as np

set_seed(1)

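SummaryWriter

- Function: a high-level interface for creating event files
- Main attributes:

• log_dir: output folder for the event file
• comment: suffix appended to the folder name when log_dir is not specified
• filename_suffix: suffix appended to the event file's name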
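When log_dir is omitted, the folder name is built from the current time, the host name and comment. A minimal sketch of that rule, assuming the folder is runs/<time>_<hostname><comment> with the time formatted like Oct05_14-03-27; default_log_dir is a hypothetical helper, not part of torch.

```python
import os
import socket
from datetime import datetime


def default_log_dir(comment="", now=None):
    """Hypothetical helper mimicking SummaryWriter's default folder name.

    Assumption: runs/<time>_<hostname><comment>, used only when log_dir is not given.
    """
    now = now or datetime.now()
    current_time = now.strftime("%b%d_%H-%M-%S")
    return os.path.join("runs", current_time + "_" + socket.gethostname() + comment)


if __name__ == "__main__":
    # e.g. runs/Oct05_14-03-27_myhost_scales
    print(default_log_dir(comment="_scales"))
```

Since comment only enters this default name, passing it together with an explicit log_dir has no visible effect.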

# ----------------------------------- 0 SummaryWriter
flag = 0
# flag = 1
if flag:

    log_dir = './train_log/test_log_dir'
    # With log_dir given, events go directly into log_dir and comment is ignored:
    # writer = SummaryWriter(log_dir=log_dir, comment='_scales', filename_suffix='12345678')
    # Without log_dir, the folder is runs/<datetime>_<hostname> followed by the comment '_scales':
    writer = SummaryWriter(comment='_scales', filename_suffix='12345678')

    for x in range(100):
        writer.add_scalar('y=pow_2_x', 2 ** x, x)

    writer.close()

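SummaryWriter methods

1. add_scalar()

Function: record a scalar

• tag: label of the chart, its unique identifier
• scalar_value: the scalar to record
• global_step: the x-axis value

2. add_scalars()

Function: record several scalars in the same chart

• main_tag: the tag of the chart
• tag_scalar_dict: a dict whose keys are the tags of the individual series and whose values are the series' values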
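add_scalars puts several curves in one chart, but each key of tag_scalar_dict appears to be written by its own event writer in a subfolder of log_dir named after main_tag (with "/" replaced by "_") plus the key. That would explain why extra runs such as data_scale_group_xsin show up in TensorBoard's run list. The sketch below only reproduces this assumed naming; add_scalars_subdirs is hypothetical.

```python
def add_scalars_subdirs(log_dir, main_tag, tag_scalar_dict):
    """Hypothetical: list the per-series folders add_scalars is assumed to create.

    Assumption: each series is written to <log_dir>/<main_tag with '/' -> '_'>_<key>.
    """
    prefix = main_tag.replace("/", "_")
    return [log_dir + "/" + prefix + "_" + key for key in tag_scalar_dict]


if __name__ == "__main__":
    for d in add_scalars_subdirs("runs/demo", "data/scale_group", {"xsin": 0.0, "xcos": 0.0}):
        print(d)  # runs/demo/data_scale_group_xsin, then runs/demo/data_scale_group_xcos
```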

# ----------------------------------- 1 scalar and scalars
flag = 0
flag = 1
if flag:

    max_epoch = 100

    writer = SummaryWriter(comment='test_comment', filename_suffix='test_suffix')

    for x in range(max_epoch):
        writer.add_scalar('y=2x', 2 * x, x)
        writer.add_scalar('y=pow_2_x', 2 ** x, x)
        writer.add_scalars('data/scale_group', {"xsin": x * np.sin(x),
                                                "xcos": x * np.cos(x)}, x)

    writer.close()

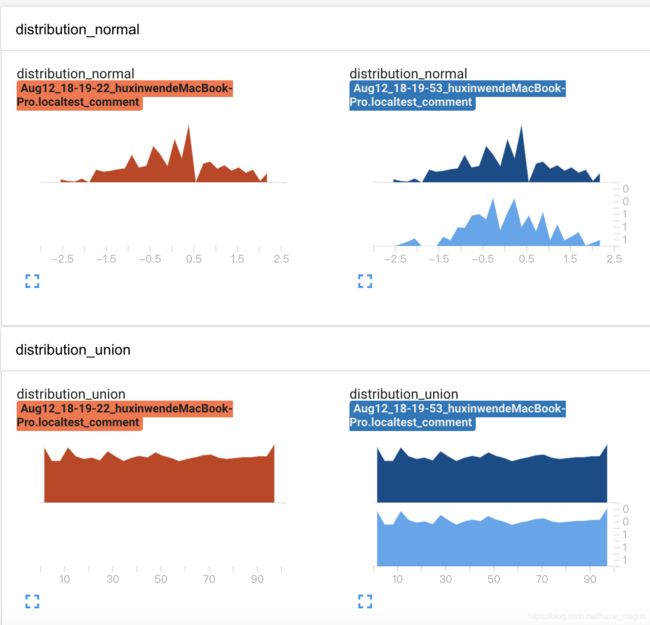

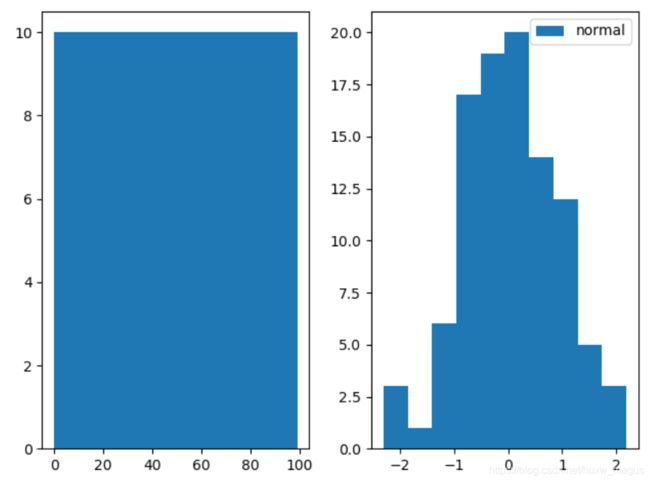

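3. add_histogram()

Function: record a histogram and a multi-quantile line chart of the values

• tag: label of the chart, its unique identifier
• values: the parameter (tensor or array) to summarize
• global_step: the y-axis (histograms from successive steps are stacked along it)
• bins: how the histogram is binned (default 'tensorflow')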
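To see what add_histogram condenses each call into, here is a NumPy sketch computing the statistics a TensorBoard histogram record holds: min, max, count, sum, sum of squares, and the bucket edges and counts. histogram_stats is a hypothetical name, and it uses equal-width bins from np.histogram; the library's default 'tensorflow' binning instead uses geometrically spaced bucket edges.

```python
import numpy as np


def histogram_stats(values, bins=10):
    """Sketch of a histogram summary; equal-width bins, not the library default."""
    values = np.asarray(values, dtype=np.float64).ravel()
    counts, edges = np.histogram(values, bins=bins)
    return {
        "min": float(values.min()),
        "max": float(values.max()),
        "num": int(values.size),
        "sum": float(values.sum()),
        "sum_squares": float(np.square(values).sum()),
        "bucket_limits": edges[1:].tolist(),  # right edge of each bucket
        "bucket_counts": counts.tolist(),
    }


if __name__ == "__main__":
    # the same np.arange(100) data used in the histogram code
    stats = histogram_stats(np.arange(100), bins=10)
    print(stats["bucket_counts"])  # ten buckets of 10 values each
```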

# ----------------------------------- 2 histogram
flag = 0
flag = 1
if flag:

    writer = SummaryWriter(comment="test_comment", filename_suffix='test_suffix')

    for x in range(2):

        np.random.seed(x)

        data_uniform = np.arange(100)             # evenly spread values
        data_normal = np.random.normal(size=100)  # standard normal samples

        writer.add_histogram('distribution uniform', data_uniform, x)
        writer.add_histogram('distribution normal', data_normal, x)

        # the same data drawn with matplotlib for comparison
        plt.subplot(121).hist(data_uniform, label='uniform')
        plt.subplot(122).hist(data_normal, label='normal')
        plt.legend()
        plt.show()

    writer.close()

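4. add_image()

Function: record an image

• tag: label of the chart, its unique identifier
• img_tensor: the image data; mind the value range (float data is expected in [0, 1] and is multiplied by 255, uint8 data is used as is)
• global_step: the step index (chosen with the slider above the image)
• dataformats: layout of the data: CHW (default), HWC or HW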
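The note about the value range matters because float input is multiplied by 255 before being stored as 8-bit pixels. The NumPy sketch below mimics that conversion together with the dataformats handling. to_hwc_uint8 is a hypothetical helper, and clipping to [0, 255] is an assumption: how out-of-range values are treated may differ between versions.

```python
import numpy as np


def to_hwc_uint8(img, dataformats="CHW"):
    """Hypothetical sketch of preparing an image for TensorBoard.

    1. Rearrange to HWC (HW is repeated into 3 identical channels).
    2. Float input is assumed to lie in [0, 1] and is scaled by 255;
       uint8 input is kept as is. Out-of-range values are clipped here.
    """
    img = np.asarray(img)
    if dataformats == "CHW":
        img = img.transpose(1, 2, 0)
    elif dataformats == "HW":
        img = np.stack([img] * 3, axis=-1)
    elif dataformats != "HWC":
        raise ValueError("unsupported dataformats: " + dataformats)
    scale = 1 if img.dtype == np.uint8 else 255
    return np.clip(img.astype(np.float32) * scale, 0, 255).astype(np.uint8)


if __name__ == "__main__":
    ones = np.ones((3, 4, 4), dtype=np.float32)
    print(to_hwc_uint8(ones)[0, 0])  # [255 255 255]: all ones is white
    print(to_hwc_uint8(np.full((4, 4), 0.5), dataformats="HW").shape)  # (4, 4, 3)
```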

# ----------------------------------- 3 image
# flag = 0
flag = 1
if flag:

    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # img 1 random: randn values fall outside the expected [0, 1] range
    fake_img = torch.randn(3, 512, 512)
    writer.add_image("fake_img", fake_img, 1)
    time.sleep(1)

    # img 2 ones: float values are scaled by 255, so all ones shows as white
    fake_img = torch.ones(3, 512, 512)
    time.sleep(1)
    writer.add_image("fake_img", fake_img, 2)

    # img 3 1.1: values above 1 are also outside the expected range
    fake_img = torch.ones(3, 512, 512) * 1.1
    time.sleep(1)
    writer.add_image("fake_img", fake_img, 3)

    # img 4 HW: a single-channel image without a channel dimension
    fake_img = torch.rand(512, 512)
    writer.add_image("fake_img", fake_img, 4, dataformats="HW")

    # img 5 HWC: channel dimension last
    fake_img = torch.rand(512, 512, 3)
    writer.add_image("fake_img", fake_img, 5, dataformats="HWC")

    writer.close()

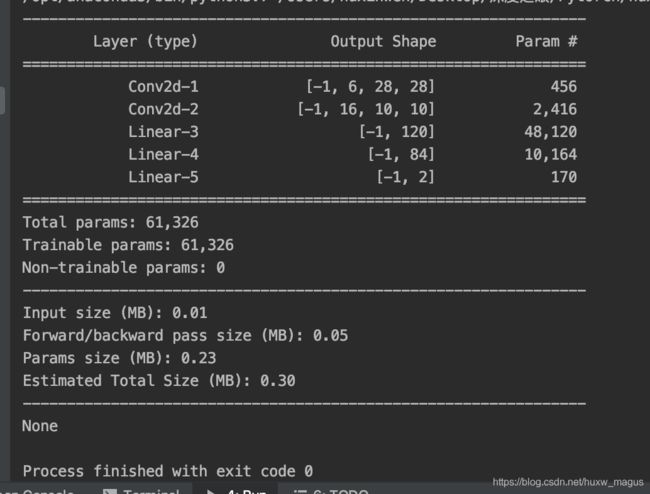

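5. add_graph()

==Running this gave me some errors; my guess is a PyTorch version mismatch.==

Function: visualize the model's computational graph

Note: requires PyTorch 1.3 or later.

• model: the model; must be an nn.Module
• input_to_model: the data fed into the model
• verbose: whether to print the graph structure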

# ----------------------------------- 5 add_graph
flag = 0
flag = 1
if flag:

    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # model and a dummy input (batch of 1, 3 channels, 32x32)
    fake_img = torch.randn(1, 3, 32, 32)
    lenet = LeNet(classes=2)

    writer.add_graph(model=lenet, input_to_model=fake_img)

    writer.close()

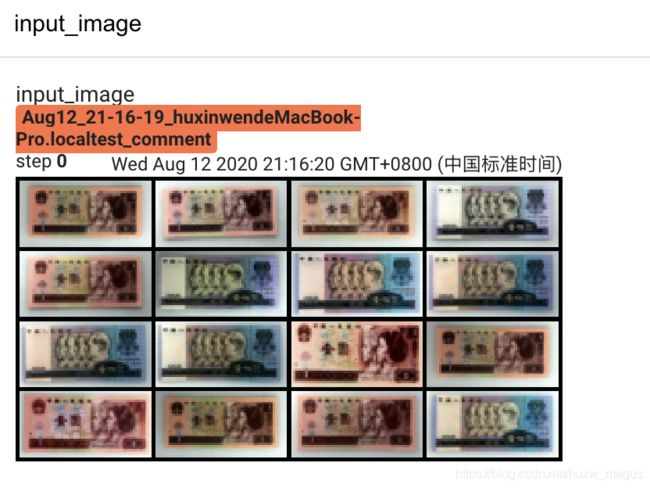

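torchvision.utils.make_grid

- Function: build a grid image

• tensor: image data in B×C×H×W form
• nrow: number of images per row (the number of rows is computed automatically)
• padding: spacing between images, in pixels
• normalize: whether to rescale pixel values to [0, 1]
• range: the (min, max) range used for normalization (called value_range in newer torchvision)
• scale_each: whether to normalize each image separately instead of the whole batch together
• pad_value: pixel value used for the padding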
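To make the meaning of nrow concrete: it is the number of images per row, and the number of rows follows from the batch size. The NumPy sketch below places a B×C×H×W batch on a padded canvas the way make_grid is understood to lay it out, leaving out normalization and the 1-channel to 3-channel expansion; simple_grid is a hypothetical name.

```python
import math

import numpy as np


def simple_grid(batch, nrow=8, padding=2, pad_value=0.0):
    """Hypothetical sketch of make_grid's layout for a (B, C, H, W) array."""
    b, c, h, w = batch.shape
    xmaps = min(nrow, b)          # images per row
    ymaps = math.ceil(b / xmaps)  # rows, derived from the batch size
    cell_h, cell_w = h + padding, w + padding
    grid = np.full((c, cell_h * ymaps + padding, cell_w * xmaps + padding),
                   pad_value, dtype=batch.dtype)
    for k in range(b):
        y, x = divmod(k, xmaps)   # fill row by row
        top, left = y * cell_h + padding, x * cell_w + padding
        grid[:, top:top + h, left:left + w] = batch[k]
    return grid


if __name__ == "__main__":
    # 16 images of 3x32x64, the size produced by Resize((32, 64)) in the make_grid code
    batch = np.random.rand(16, 3, 32, 64).astype(np.float32)
    print(simple_grid(batch, nrow=4).shape)  # (3, 138, 266): 4 rows x 4 columns
```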

# ----------------------------------- 4 make_grid
flag = 0
flag = 1
if flag:
    writer = SummaryWriter(comment='test_comment', filename_suffix='test_suffix')

    split_dir = os.path.join('..', '..', 'data', 'rmb_split')
    train_dir = os.path.join(split_dir, 'train')

    transform_compose = transforms.Compose([
        transforms.Resize((32, 64)),
        transforms.ToTensor()
    ])
    train_data = RMBDataset(data_dir=train_dir, transform=transform_compose)
    train_loader = DataLoader(dataset=train_data, batch_size=16, shuffle=True)
    data_batch, label_batch = next(iter(train_loader))

    # 16 images laid out 4 per row, each rescaled to [0, 1] by its own min/max
    img_grid = vutils.make_grid(data_batch, nrow=4, normalize=True, scale_each=True)

    writer.add_image("input image", img_grid, 0)

    writer.close()

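torchsummary (very useful)

- Function: inspect model information, handy for debugging

• model: the PyTorch model
• input_size: the model's input size (without the batch dimension)
• batch_size: batch size
• device: "cuda" or "cpu"

github: https://github.com/sksq96/pytorch-summary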
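The "Param #" column that torchsummary prints for each layer is the number of weights plus biases, so it can be checked by hand. A small sketch with hypothetical helper names, assuming square kernels and groups=1; the layer sizes in the example (3 to 6 channels with a 5×5 kernel, 400 to 120 features) are illustrative and not read from the project's LeNet.

```python
def conv2d_params(c_in, c_out, k, bias=True):
    """Parameters of a square-kernel Conv2d with groups=1: c_out*c_in*k*k (+ c_out)."""
    return c_out * c_in * k * k + (c_out if bias else 0)


def linear_params(in_features, out_features, bias=True):
    """Parameters of a Linear layer: in*out (+ out)."""
    return in_features * out_features + (out_features if bias else 0)


if __name__ == "__main__":
    print(conv2d_params(3, 6, 5))   # 456
    print(linear_params(400, 120))  # 48120
```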

# ----------------------------------- 6 torchsummary
# flag = 0
flag = 1
if flag:

    lenet = LeNet(classes=2)

    from torchsummary import summary
    # summary() prints the table itself and returns None, so it is not wrapped in print()
    summary(lenet, (3, 32, 32), device='cpu')

Code

1. TensorBoard methods: all code in one script

# -*- coding:utf-8 -*-
"""
@brief : how to use tensorboard
"""
import os
import torch
import time
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.utils as vutils
from tools.my_dataset import RMBDataset          # local module of the project
from torch.utils.tensorboard import SummaryWriter
from torch.utils.data import DataLoader
from tools.common_tools2 import set_seed          # local module of the project
from model.lenet import LeNet                     # local module of the project
import matplotlib.pyplot as plt
import numpy as np

set_seed(1)

# ----------------------------------- 0 SummaryWriter
flag = 0
# flag = 1
if flag:

    log_dir = './train_log/test_log_dir'
    # With log_dir given, events go directly into log_dir and comment is ignored:
    # writer = SummaryWriter(log_dir=log_dir, comment='_scales', filename_suffix='12345678')
    # Without log_dir, the folder is runs/<datetime>_<hostname> followed by the comment '_scales':
    writer = SummaryWriter(comment='_scales', filename_suffix='12345678')

    for x in range(100):
        writer.add_scalar('y=pow_2_x', 2 ** x, x)

    writer.close()

# ----------------------------------- 1 scalar and scalars
flag = 0
# flag = 1
if flag:

    max_epoch = 100

    writer = SummaryWriter(comment='test_comment', filename_suffix='test_suffix')

    for x in range(max_epoch):
        writer.add_scalar('y=2x', 2 * x, x)
        writer.add_scalar('y=pow_2_x', 2 ** x, x)
        writer.add_scalars('data/scale_group', {"xsin": x * np.sin(x),
                                                "xcos": x * np.cos(x)}, x)

    writer.close()

# ----------------------------------- 2 histogram
flag = 0
# flag = 1
if flag:

    writer = SummaryWriter(comment="test_comment", filename_suffix='test_suffix')

    for x in range(2):

        np.random.seed(x)

        data_uniform = np.arange(100)             # evenly spread values
        data_normal = np.random.normal(size=100)  # standard normal samples

        writer.add_histogram('distribution uniform', data_uniform, x)
        writer.add_histogram('distribution normal', data_normal, x)

        # the same data drawn with matplotlib for comparison
        plt.subplot(121).hist(data_uniform, label='uniform')
        plt.subplot(122).hist(data_normal, label='normal')
        plt.legend()
        plt.show()

    writer.close()

# ----------------------------------- 3 image
flag = 0
# flag = 1
if flag:

    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # img 1 random: randn values fall outside the expected [0, 1] range
    fake_img = torch.randn(3, 512, 512)
    writer.add_image("fake_img", fake_img, 1)
    time.sleep(1)

    # img 2 ones: float values are scaled by 255, so all ones shows as white
    fake_img = torch.ones(3, 512, 512)
    time.sleep(1)
    writer.add_image("fake_img", fake_img, 2)

    # img 3 1.1: values above 1 are also outside the expected range
    fake_img = torch.ones(3, 512, 512) * 1.1
    time.sleep(1)
    writer.add_image("fake_img", fake_img, 3)

    # img 4 HW: a single-channel image without a channel dimension
    fake_img = torch.rand(512, 512)
    writer.add_image("fake_img", fake_img, 4, dataformats="HW")

    # img 5 HWC: channel dimension last
    fake_img = torch.rand(512, 512, 3)
    writer.add_image("fake_img", fake_img, 5, dataformats="HWC")

    writer.close()

# ----------------------------------- 4 make_grid
flag = 0
# flag = 1
if flag:
    writer = SummaryWriter(comment='test_comment', filename_suffix='test_suffix')

    split_dir = os.path.join('..', '..', 'data', 'rmb_split')
    train_dir = os.path.join(split_dir, 'train')

    transform_compose = transforms.Compose([
        transforms.Resize((32, 64)),
        transforms.ToTensor()
    ])
    train_data = RMBDataset(data_dir=train_dir, transform=transform_compose)
    train_loader = DataLoader(dataset=train_data, batch_size=16, shuffle=True)
    data_batch, label_batch = next(iter(train_loader))

    img_grid = vutils.make_grid(data_batch, nrow=4, normalize=True, scale_each=True)

    writer.add_image("input image", img_grid, 0)

    writer.close()

# ----------------------------------- 5 add_graph
flag = 0
flag = 1
if flag:

    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # model and a dummy input (batch of 1, 3 channels, 32x32)
    fake_img = torch.randn(1, 3, 32, 32)
    lenet = LeNet(classes=2)

    writer.add_graph(model=lenet, input_to_model=fake_img)

    writer.close()

    from torchsummary import summary
    # summary() prints the table itself and returns None, so it is not wrapped in print()
    summary(lenet, (3, 32, 32), device='cpu')

2. Visualizing the Loss and Accuracy curves of any network during training, with Train and Valid in the same chart

# -*- coding:utf-8 -*-
"""
@brief : monitor loss, accuracy, weights, gradients
"""
import os
import numpy as np
import torch
import torch.nn as nn
from torch.utils.data import DataLoader
import torchvision.transforms as transforms
from torch.utils.tensorboard import SummaryWriter
import torch.optim as optim
from matplotlib import pyplot as plt
from model.lenet import LeNet
from tools.my_dataset import RMBDataset
from tools.common_tools2 import set_seed

set_seed()  # set the random seed
rmb_label = {"1": 0, "100": 1}

# parameter settings
MAX_EPOCH = 10
BATCH_SIZE = 16
LR = 0.01
log_interval = 10
val_interval = 1

# ============================ step 1/5 data ============================

split_dir = os.path.join("..", "data", "rmb_split")
train_dir = os.path.join(split_dir, "train")
valid_dir = os.path.join(split_dir, "valid")

norm_mean = [0.485, 0.456, 0.406]
norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.RandomCrop(32, padding=4),
    transforms.RandomGrayscale(p=0.8),
    transforms.ToTensor(),
    transforms.Normalize(norm_mean, norm_std),
])

valid_transform = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.ToTensor(),
    transforms.Normalize(norm_mean, norm_std),
])

# build the MyDataset instances
train_data = RMBDataset(data_dir=train_dir, transform=train_transform)
valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# build the DataLoaders
train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 model ============================

net = LeNet(classes=2)
net.initialize_weights()

# ============================ step 3/5 loss function ============================

criterion = nn.CrossEntropyLoss()  # choose the loss function

# ============================ step 4/5 optimizer ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9)  # choose the optimizer
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)  # learning-rate decay schedule

# ============================ step 5/5 training ============================

train_curve = list()
valid_curve = list()

iter_count = 0

# build the SummaryWriter
writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

for epoch in range(MAX_EPOCH):

    loss_mean = 0.
    correct = 0.
    total = 0.

    net.train()
    for i, data in enumerate(train_loader):

        iter_count += 1

        # forward
        inputs, labels = data
        outputs = net(inputs)

        # backward
        optimizer.zero_grad()
        loss = criterion(outputs, labels)
        loss.backward()

        # update weights
        optimizer.step()

        # classification statistics
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).squeeze().sum().numpy()

        # print training info
        loss_mean += loss.item()
        train_curve.append(loss.item())
        if (i + 1) % log_interval == 0:
            loss_mean = loss_mean / log_interval
            print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch, MAX_EPOCH, i + 1, len(train_loader), loss_mean, correct / total))
            loss_mean = 0.

        # log to the event file (Train series of the "Loss" and "Accuracy" charts)
        writer.add_scalars("Loss", {"Train": loss.item()}, iter_count)
        writer.add_scalars("Accuracy", {"Train": correct / total}, iter_count)

    # once per epoch, record histograms of the gradients and weights
    for name, param in net.named_parameters():
        writer.add_histogram(name + '_grad', param.grad, epoch)
        writer.add_histogram(name + '_data', param, epoch)

    scheduler.step()  # update the learning rate

    # validate the model
    if (epoch + 1) % val_interval == 0:

        correct_val = 0.
        total_val = 0.
        loss_val = 0.
        net.eval()
        with torch.no_grad():
            for j, data in enumerate(valid_loader):
                inputs, labels = data
                outputs = net(inputs)
                loss = criterion(outputs, labels)

                _, predicted = torch.max(outputs.data, 1)
                total_val += labels.size(0)
                correct_val += (predicted == labels).squeeze().sum().numpy()

                loss_val += loss.item()

            # mean validation loss and accuracy of this epoch (validation counters, not training ones)
            loss_val_mean = loss_val / len(valid_loader)
            valid_curve.append(loss_val_mean)
            print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch, MAX_EPOCH, j + 1, len(valid_loader), loss_val_mean, correct_val / total_val))

            # log to the event file; same iter_count x-axis as training, so Train and Valid share a chart
            writer.add_scalars("Loss", {"Valid": loss_val_mean}, iter_count)
            writer.add_scalars("Accuracy", {"Valid": correct_val / total_val}, iter_count)

writer.close()

train_x = range(len(train_curve))
train_y = train_curve

train_iters = len(train_loader)
# valid_curve holds one loss per validated epoch, so convert its points to iteration positions
valid_x = np.arange(1, len(valid_curve) + 1) * train_iters * val_interval
valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')
plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')
plt.ylabel('loss value')
plt.xlabel('Iteration')
plt.show()
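Training loss is recorded once per iteration while validation loss is recorded once per epoch, so the two curves only line up if each validation point sits at the iteration where its epoch ends. A small sketch of that conversion and of averaging per-batch losses into one epoch value; epoch_mean and valid_x_positions are hypothetical helpers mirroring the valid_x expression above.

```python
def epoch_mean(batch_losses):
    """Average per-batch losses into a single epoch value."""
    return sum(batch_losses) / len(batch_losses)


def valid_x_positions(num_valid_points, train_iters, val_interval=1):
    """Iteration index at the end of each validated epoch."""
    return [(k + 1) * train_iters * val_interval for k in range(num_valid_points)]


if __name__ == "__main__":
    print(epoch_mean([0.9, 0.7, 0.5]))            # about 0.7
    print(valid_x_positions(3, train_iters=10))   # [10, 20, 30]
```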

3. Using make_grid to batch-visualize image inputs for training

# -*- coding:utf-8 -*-
"""
@brief : visualization of convolution kernels and feature maps
"""
import torch.nn as nn
from PIL import Image
import torchvision.transforms as transforms
from torch.utils.tensorboard import SummaryWriter
import torchvision.utils as vutils
from tools.common_tools import set_seed
import torchvision.models as models

set_seed(1)  # set the random seed

# ----------------------------------- kernel visualization -----------------------------------
# flag = 0
flag = 1
if flag:

    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # pretrained=True is deprecated in torchvision >= 0.13; there use weights=models.AlexNet_Weights.DEFAULT
    alexnet = models.alexnet(pretrained=True)

    kernel_num = -1
    vis_max = 1  # visualize conv layers 0 .. vis_max

    for sub_module in alexnet.modules():
        if isinstance(sub_module, nn.Conv2d):
            kernel_num += 1
            if kernel_num > vis_max:
                break
            kernels = sub_module.weight.detach()  # shape: (c_out, c_in, k_h, k_w)
            c_out, c_in, k_h, k_w = tuple(kernels.shape)

            # one grid per output channel, showing its c_in 2-D slices as grayscale tiles
            for o_idx in range(c_out):
                kernel_idx = kernels[o_idx, :, :, :].unsqueeze(1)  # make_grid expects BCHW, so add a C dimension
                kernel_grid = vutils.make_grid(kernel_idx, normalize=True, scale_each=True, nrow=c_in)
                writer.add_image('{}_Convlayer_split_in_channel'.format(kernel_num), kernel_grid, global_step=o_idx)

            # all kernels at once, regrouping every 3 consecutive 2-D slices into one pseudo-RGB tile
            kernel_all = kernels.view(-1, 3, k_h, k_w)  # (N, 3, h, w)
            kernel_grid = vutils.make_grid(kernel_all, normalize=True, scale_each=True, nrow=8)  # (c, h, w)
            writer.add_image('{}_all'.format(kernel_num), kernel_grid, global_step=322)

            print("{}_convlayer shape:{}".format(kernel_num, tuple(kernels.shape)))

    writer.close()
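kernels.view(-1, 3, k_h, k_w) only works when c_out × c_in is divisible by 3, and it regroups every three consecutive 2-D kernel slices into one pseudo-RGB tile; only for the first layer (c_in = 3) does each tile correspond to a whole kernel. A quick NumPy check of how many tiles each layer yields, using AlexNet's first two conv weight shapes as we recall them from torchvision, (64, 3, 11, 11) and (192, 64, 5, 5); pseudo_rgb_tiles is a hypothetical helper.

```python
import numpy as np


def pseudo_rgb_tiles(weight_shape):
    """Number of 3-channel tiles produced by weight.reshape(-1, 3, k_h, k_w)."""
    _, _, k_h, k_w = weight_shape
    weight = np.zeros(weight_shape, dtype=np.float32)  # placeholder values
    return weight.reshape(-1, 3, k_h, k_w).shape[0]


if __name__ == "__main__":
    print(pseudo_rgb_tiles((64, 3, 11, 11)))  # 64: one tile per kernel
    print(pseudo_rgb_tiles((192, 64, 5, 5)))  # 4096: slices regrouped in threes
```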

# ----------------------------------- feature map visualization -----------------------------------
# flag = 0
flag = 1
if flag:

    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    # data
    path_img = "./lena.png"  # your path to image
    normMean = [0.49139968, 0.48215827, 0.44653124]
    normStd = [0.24703233, 0.24348505, 0.26158768]

    norm_transform = transforms.Normalize(normMean, normStd)
    img_transforms = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        norm_transform
    ])

    img_pil = Image.open(path_img).convert('RGB')
    if img_transforms is not None:
        img_tensor = img_transforms(img_pil)
    img_tensor.unsqueeze_(0)  # chw --> bchw

    # model (pretrained=True is deprecated in torchvision >= 0.13; there use weights=models.AlexNet_Weights.DEFAULT)
    alexnet = models.alexnet(pretrained=True)

    # forward through the first conv layer only; detach because no gradients are needed
    convlayer1 = alexnet.features[0]
    fmap_1 = convlayer1(img_tensor).detach()

    # preprocessing: treat each of the 64 channels as a separate single-channel image
    fmap_1.transpose_(0, 1)  # bchw=(1, 64, 55, 55) --> (64, 1, 55, 55)
    fmap_1_grid = vutils.make_grid(fmap_1, normalize=True, scale_each=True, nrow=8)

    writer.add_image('feature map in conv1', fmap_1_grid, global_step=322)
    writer.close()
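The (1, 64, 55, 55) shape in the comment follows from the convolution output-size formula given in the nn.Conv2d documentation, which for dilation 1 reduces to out = floor((in + 2·padding − kernel) / stride) + 1. A quick check, assuming the first AlexNet layer in torchvision uses kernel 11, stride 4 and padding 2 on a 224×224 input; conv_out_size is a hypothetical helper.

```python
def conv_out_size(size, kernel, stride=1, padding=0, dilation=1):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


if __name__ == "__main__":
    print(conv_out_size(224, kernel=11, stride=4, padding=2))  # 55
```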