cv2.copyMakeBorder()&cv2.warpAffine()&cv2.warpPerspective()

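In object tracking, the following operations are often needed:

- For example, MDNet crops positive/negative examples around the ground-truth (GT) bounding box, then resizes and pads them into fixed-resolution patches (regions).

- For example, SiamFC/SiamFC++ crop around the target position from the previous frame and resize the result to obtain the search image.

In object tracking, whether in the training phase or the tracking phase, getting a patch always involves a crop -> resize/padding step. The implementations are summarized below: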

cv2.copyMakeBorder()

SiamFC uses the cv2.copyMakeBorder() function (official link and usage example):

dst = cv2.copyMakeBorder( src, top, bottom, left, right, borderType[, dst[, value]] )

- src: input image

- top, bottom, left, right: number of pixels to pad on each of the four sides

- borderType: border type; usually a constant border, e.g. cv2.BORDER_CONSTANT

- dst: output image

- value: the fill value used when borderType is cv2.BORDER_CONSTANT

Note that this function only pads around the image; see the example below:

```python
import cv2
from matplotlib import pyplot as plt

img = cv2.imread('E:\\PycharmProjects\\py-MDNet-master\\datasets\\OTB\\MotorRolling\\img\\0001.jpg')
imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; matplotlib displays RGB

# pad 20 px on every side with a constant color; (255, 0, 0) is red in RGB
constant = cv2.copyMakeBorder(imgRGB, 20, 20, 20, 20, cv2.BORDER_CONSTANT, value=(255, 0, 0))

plt.subplot(121), plt.imshow(imgRGB), plt.title('ORIGINAL')
plt.subplot(122), plt.imshow(constant), plt.title('CONSTANT')
plt.show()
```

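Running it adds a 20-pixel red border on all four sides of the frame.

Because the function only pads, it has to be combined with other operations to implement crop and resize. SiamFC's crop_and_resize function is one example: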

```python
import cv2
import numpy as np


def crop_and_resize(img, center, size, out_size,
                    border_type=cv2.BORDER_CONSTANT,
                    border_value=(0, 0, 0),
                    interp=cv2.INTER_LINEAR):
    # center is a numpy array in (y, x) order, matching img rows/cols
    # convert box to corners (0-indexed): (y1, x1, y2, x2), end-exclusive
    size = round(size)
    corners = np.concatenate((
        np.round(center - (size - 1) / 2),
        np.round(center - (size - 1) / 2) + size))
    corners = np.round(corners).astype(int)

    # pad image if necessary: how far the window sticks out past each border
    pads = np.concatenate((
        -corners[:2], corners[2:] - img.shape[:2]))
    npad = max(0, int(pads.max()))
    if npad > 0:
        img = cv2.copyMakeBorder(
            img, npad, npad, npad, npad,
            border_type, value=border_value)

    # crop image patch (shift corners into the padded image's coordinates)
    corners = (corners + npad).astype(int)
    patch = img[corners[0]:corners[2], corners[1]:corners[3]]

    # resize to out_size
    patch = cv2.resize(patch, (out_size, out_size),
                       interpolation=interp)
    return patch
```
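The sketch below traces the corner and padding arithmetic from crop_and_resize on made-up numbers, so you can see when the padding branch runs. It assumes a 120×80 image and a 101-pixel window centered at row 50, column 30; these values are only for illustration. The window's left edge lands at x = -20, so a uniform 20-pixel pad is needed before slicing.

```python
import numpy as np

img_shape = (120, 80)            # (H, W) of a hypothetical image
center = np.array([50.0, 30.0])  # (y, x), the order crop_and_resize expects
size = 101

# same corner computation as crop_and_resize: (y1, x1, y2, x2), end-exclusive
corners = np.concatenate((
    np.round(center - (size - 1) / 2),
    np.round(center - (size - 1) / 2) + size)).astype(int)
print(corners.tolist())  # [0, -20, 101, 81]

# how far the window sticks out past each border (negative = inside)
pads = np.concatenate((-corners[:2], corners[2:] - np.array(img_shape)))
print(pads.tolist())  # [0, 20, -19, 1]

# one uniform pad covers the worst side; the padded image becomes 160x120
npad = max(0, int(pads.max()))
print(npad)  # 20
print((corners + npad).tolist())  # [20, 0, 121, 101]
```

A single `npad` for all four sides is simpler than per-side padding, at the cost of padding more than strictly necessary.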

cv2.warpAffine()

This function is used in SiamFC++, where it is called inside get_subwindow_tracking:

```python
import cv2
import numpy as np

# cxywh2xyxy() is the helper defined in the demo further below


def get_subwindow_tracking(im,
                           pos,
                           model_sz,
                           original_sz,
                           avg_chans=(0, 0, 0),
                           mask=None):
    r"""
    Get subwindow via cv2.warpAffine

    Arguments
    ---------
    im: numpy.array
        original image, (H, W, C)
    pos: numpy.array
        subwindow position (cx, cy)
    model_sz: int
        output size
    original_sz: int
        subwindow range on the original image
    avg_chans: tuple
        average values per channel
    mask: numpy.array
        mask, (H, W)

    Returns
    -------
    numpy.array
        image patch within _original_sz_ in _im_ and resized to _model_sz_, padded by _avg_chans_
        (model_sz, model_sz, 3)
    """
    crop_cxywh = np.concatenate(
        [np.array(pos), np.array((original_sz, original_sz))], axis=-1)
    crop_xyxy = cxywh2xyxy(crop_cxywh)
    # warpAffine transform matrix (used with WARP_INVERSE_MAP, i.e. it maps dst -> src)
    M_13 = crop_xyxy[0]
    M_23 = crop_xyxy[1]
    M_11 = (crop_xyxy[2] - M_13) / (model_sz - 1)  # w/output_size
    M_22 = (crop_xyxy[3] - M_23) / (model_sz - 1)  # h/output_size
    mat2x3 = np.array([M_11, 0, M_13, 0, M_22, M_23,]).reshape(2, 3)
    im_patch = cv2.warpAffine(im,
                              mat2x3, (model_sz, model_sz),
                              flags=(cv2.INTER_LINEAR | cv2.WARP_INVERSE_MAP),
                              borderMode=cv2.BORDER_CONSTANT,
                              borderValue=tuple(map(int, avg_chans)))
    if mask is not None:
        mask_patch = cv2.warpAffine(mask,
                                    mat2x3, (model_sz, model_sz),
                                    flags=(cv2.INTER_NEAREST
                                           | cv2.WARP_INVERSE_MAP))
        return im_patch, mask_patch
    return im_patch
```
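Here is why these matrix entries work. With cv2.WARP_INVERSE_MAP, output pixel (x, y) samples the source at (M_11·x + M_13, M_22·y + M_23). A quick numeric check confirms that output pixel (0, 0) reads the crop's top-left corner and (model_sz-1, model_sz-1) reads its bottom-right corner. The values of cx, cy, original_sz and model_sz are made up for illustration. The xyxy conversion is inlined with the same formula as the cxywh2xyxy helper. `sample_point` is a hypothetical helper, not an OpenCV function.

```python
import numpy as np

# illustrative values, not taken from a real sequence
cx, cy = 200.0, 150.0
original_sz, model_sz = 128.0, 127

# crop box in xyxy (same formula as cxywh2xyxy)
x1 = cx - (original_sz - 1) / 2
y1 = cy - (original_sz - 1) / 2
x2 = cx + (original_sz - 1) / 2
y2 = cy + (original_sz - 1) / 2

# matrix built the same way as in get_subwindow_tracking
M = np.array([[(x2 - x1) / (model_sz - 1), 0, x1],
              [0, (y2 - y1) / (model_sz - 1), y1]])


def sample_point(M, x, y):
    # hypothetical helper: with WARP_INVERSE_MAP, dst(x, y) reads src at M @ [x, y, 1]
    return M @ np.array([x, y, 1.0])


top_left = sample_point(M, 0, 0)
bottom_right = sample_point(M, model_sz - 1, model_sz - 1)
print(top_left, (x1, y1))
print(bottom_right, (x2, y2))
```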

To understand it, we need to look up the usage of cv2.warpAffine() in the official documentation (official link and usage example):

cv2.warpAffine(src, M, dsize[, dst[, flags[, borderMode[, borderValue]]]]) → dst

- src: input image

- M: 2×3 affine transformation matrix

- dsize: size of the output image, given as (width, height)

- dst: output image

- flags: interpolation method, e.g. cv2.INTER_LINEAR. If cv2.WARP_INVERSE_MAP is added, M is treated as the inverse transformation (output -> input) and the mapping is $\operatorname{dst}(x, y)=\operatorname{src}\left(M_{11} x+M_{12} y+M_{13},\ M_{21} x+M_{22} y+M_{23}\right)$. Without the flag, OpenCV first inverts M and then applies the same formula.

- borderMode: same as borderType above, e.g. cv2.BORDER_CONSTANT

- borderValue: the fill value used when borderMode is cv2.BORDER_CONSTANT

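The relationship here, and what the function actually does, is hard to grasp at first. It helps to first look at the translation, rotation, affine and perspective transformation examples in the usage example linked above, and then study the example below: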

```python
import cv2
import numpy as np
from matplotlib import pyplot as plt

img = cv2.imread('E:\\PycharmProjects\\py-MDNet-master\\datasets\\OTB\\MotorRolling\\img\\0001.jpg')
imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
rows, cols = imgRGB.shape[:2]  # (360, 640) for this frame

M1 = np.float32([[1, 0, 100], [0, 1, 50]])    # translation only
M2 = np.float32([[2, 0, 100], [0, 1, 50]])    # x scale 2 + translation
M3 = np.float32([[0.5, 0, 100], [0, 1, 50]])  # x scale 0.5 + translation

# without cv2.WARP_INVERSE_MAP: M maps src -> dst
dst1 = cv2.warpAffine(imgRGB, M1, (cols, rows), flags=cv2.INTER_LINEAR)
dst2 = cv2.warpAffine(imgRGB, M2, (cols, rows), flags=cv2.INTER_LINEAR)
# with cv2.WARP_INVERSE_MAP: M maps dst -> src
dst3 = cv2.warpAffine(imgRGB, M1, (cols, rows), flags=(cv2.INTER_LINEAR | cv2.WARP_INVERSE_MAP))
dst4 = cv2.warpAffine(imgRGB, M2, (cols, rows), flags=(cv2.INTER_LINEAR | cv2.WARP_INVERSE_MAP))
dst5 = cv2.warpAffine(imgRGB, M3, (cols, rows), flags=(cv2.INTER_LINEAR | cv2.WARP_INVERSE_MAP))

fig = plt.figure('subplot demo')
panels = [(imgRGB, 'Original'),
          (dst1, 'Translation'),
          (dst2, 'Expand + Translation'),
          (dst3, 'Inverse Translation'),
          (dst4, 'Shrink + Inverse Translation'),
          (dst5, 'Expand + Inverse Translation')]
for i, (im, title) in enumerate(panels, start=1):
    fig.add_subplot(3, 2, i)
    plt.imshow(im)
    plt.title(title, fontsize=7)
plt.tight_layout()
plt.show()
```

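- Without cv2.WARP_INVERSE_MAP in flags (M maps the source to the output):

    - For positive values the image moves from the top-left toward the bottom-right; $M_{13}$ is the translation in $x$ and $M_{23}$ the translation in $y$.

    - $M_{11}$ and $M_{22}$ are the scale factors in $x$ and $y$; the image is multiplied by these factors.

    - Translation and scaling are independent of each other.

- With cv2.WARP_INVERSE_MAP in flags (M maps the output back to the source):

    - For positive values the image moves from the bottom-right toward the top-left; $M_{13}$ is the translation in $x$ and $M_{23}$ the translation in $y$.

    - $M_{11}$ and $M_{22}$ are the scale factors in $x$ and $y$; the image is divided by these factors.

    - The translation is scaled as well (the content shifts by $-M_{13}/M_{11}$ in $x$ and $-M_{23}/M_{22}$ in $y$), so translation and scaling affect each other.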

In practice you only need to remember how to use it, as shown above. Putting everything together gives the following demo:

import cv2

import numpy as np

from matplotlib import pyplot as plt

context_amount = 0.5

z_size = 127

def cxywh2xyxy(box):

box = np.array(box, dtype=np.float32)

return np.concatenate([

box[..., [0]] - (box[..., [2]] - 1) / 2, box[..., [1]] -

(box[..., [3]] - 1) / 2, box[..., [0]] +

(box[..., [2]] - 1) / 2, box[..., [1]] + (box[..., [3]] - 1) / 2

],

axis=-1)

img_path = 'E:\\PycharmProjects\\py-MDNet-master\\datasets\\OTB\\MotorRolling\\img\\0001.jpg'

gt_path = 'E:\\PycharmProjects\\py-MDNet-master\\datasets\\OTB\\MotorRolling\\groundtruth_rect.txt'

img = cv2.imread(img_path, cv2.IMREAD_COLOR)

avg_chans = np.mean(img, axis=(0, 1))

with open(gt_path) as f:

gt = np.loadtxt((x.replace('\t', ',') for x in f), delimiter=',')

box = gt[0] # xywh

wt, ht = box[2:]

wt_ = wt + context_amount * (wt + ht)

ht_ = ht + context_amount * (wt + ht)

st = np.sqrt(wt_ * ht_)

cx = box[0] + (box[2] - 1) / 2

cy = box[1] + (box[3] - 1) / 2

# print(tuple(map(int, [box[0]+box[2], box[1]+box[3]])))

patch = get_subwindow_tracking(img, pos=(cx, cy), model_sz=z_size, original_sz=st, avg_chans=avg_chans)

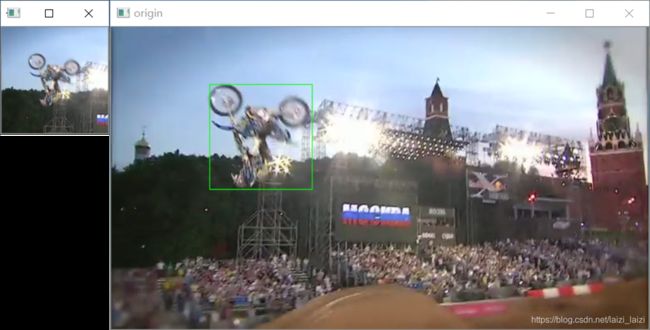

cv2.rectangle(img, pt1=tuple(map(int, box[:2])), pt2=tuple(map(int, [box[0]+box[2], box[1]+box[3]])), color=(0,255,0))

cv2.imshow("origin", img)

cv2.imshow("patch", patch)

cv2.waitKey(0)

cv2.destroyAllWindows()
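The context step in the demo pads the target box by context_amount·(w + h) in each dimension. It then crops a square whose side st is the geometric mean of the padded width and height, and get_subwindow_tracking resizes that square to z_size. The worked example below traces the arithmetic for a made-up 100×50 box; the box values are hypothetical.

```python
import numpy as np

context_amount = 0.5
z_size = 127
box = np.array([10.0, 20.0, 100.0, 50.0])  # hypothetical x, y, w, h

wt, ht = box[2:]
wt_ = wt + context_amount * (wt + ht)  # 100 + 75 = 175
ht_ = ht + context_amount * (wt + ht)  # 50 + 75 = 125
st = np.sqrt(wt_ * ht_)                # side of the square crop on the original image
cx = box[0] + (box[2] - 1) / 2         # 59.5
cy = box[1] + (box[3] - 1) / 2         # 44.5

scale = z_size / st  # factor by which the crop is resized to z_size
print(round(float(st), 2), round(float(scale), 4))
```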

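Update (2020/08/06): pysot, another tracking framework, also uses cv2.warpAffine(), in its _crop_roi function. It is written differently from SiamFC++, but the result is the same. Here is _crop_roi; its input bbox is exactly the crop_xyxy from get_subwindow_tracking: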

```python
import cv2
import numpy as np


def _crop_roi(image, bbox, out_sz, padding=(0, 0, 0)):
    # bbox area you want to crop, format: xyxy
    bbox = [float(x) for x in bbox]
    a = (out_sz - 1) / (bbox[2] - bbox[0])  # output_size/w
    b = (out_sz - 1) / (bbox[3] - bbox[1])  # output_size/h
    c = -a * bbox[0]
    d = -b * bbox[1]
    # forward matrix (src -> dst), so no WARP_INVERSE_MAP flag is needed
    # np.float was removed in NumPy 1.24; np.float64 is the equivalent
    mapping = np.array([[a, 0, c],
                        [0, b, d]]).astype(np.float64)
    crop = cv2.warpAffine(image, mapping, (out_sz, out_sz),
                          borderMode=cv2.BORDER_CONSTANT,
                          borderValue=padding)
    return crop
```

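Because the cv2.WARP_INVERSE_MAP flag is not used, the 2×3 affine transformation matrix M is different (it is the inverse of the SiamFC++ matrix). The result is exactly the same, so either version can serve as a template.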

cv2.warpPerspective()

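Its parameters are similar to those of cv2.warpAffine(), and cv2.warpAffine() can be regarded as a special case of cv2.warpPerspective(). The difference is that cv2.warpPerspective() takes a 3×3 transformation matrix. When the bottom row of that matrix is [0, 0, 1], the result is the same as cv2.warpAffine() with the top two rows, so it is not covered further here. See the official link and usage example.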
