SA-SSD 代码阅读

文章目录

- 一. SingleStageDetector

- 1. 初始化

- 2. 前向传递

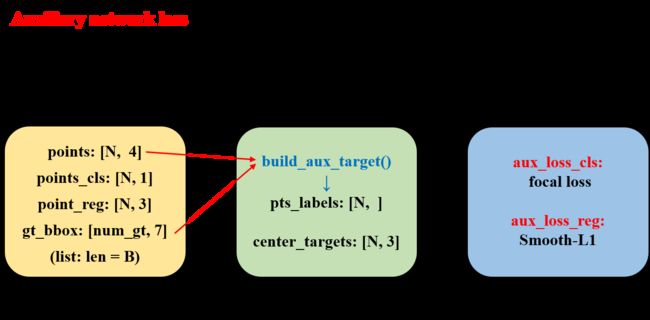

- 二. Auxiliary Network

- 1. 生成 label

- 2. loss 构建

- 三. SSDRotateHead

- 1. 前向传递

- 2. loss 构建

- 3. 生成 label

关于网络的细节也可以看这篇博客,作者介绍的很详细:

- 小白科研笔记:简析CVPR2020论文SA-SSD的网络搭建细节

一. SingleStageDetector

这个是 SA-SSD 的整体网络,由这几个部分组成:

- backbone

- neck

- head

- extra-head

在之后会详细分析每个部分,先来看一下整体的网络:(先看一下有哪些函数,具体的函数内容先省去了)

class SingleStageDetector(BaseDetector, RPNTestMixin, BBoxTestMixin,

MaskTestMixin):

def __init__(self,

backbone,

neck=None,

bbox_head=None,

extra_head=None,

train_cfg=None,

test_cfg=None,

pretrained=None):

super(SingleStageDetector, self).__init__()

self.backbone = builder.build_backbone(backbone)

if neck is not None:

self.neck = builder.build_neck(neck)

else:

raise NotImplementedError

if bbox_head is not None:

self.rpn_head = builder.build_single_stage_head(bbox_head)

if extra_head is not None:

self.extra_head = builder.build_single_stage_head(extra_head)

self.train_cfg = train_cfg

self.test_cfg = test_cfg

self.init_weights(pretrained)

@property

def with_rpn(self):

return hasattr(self, 'rpn_head') and self.rpn_head is not None

def init_weights(self, pretrained=None):

if isinstance(pretrained, str):

logger = logging.getLogger()

load_checkpoint(self, pretrained, strict=False, logger=logger)

def merge_second_batch(self, batch_args):

return ret

def forward_train(self, img, img_meta, **kwargs):

return losses

def forward_test(self, img, img_meta, **kwargs):

return results

1. 初始化

代码分析:

def __init__(self,

backbone,

neck=None,

bbox_head=None,

extra_head=None,

train_cfg=None,

test_cfg=None,

pretrained=None):

super(SingleStageDetector, self).__init__()

# 初始化 Backbone

self.backbone = builder.build_backbone(backbone)

# 初始化 neck

if neck is not None:

self.neck = builder.build_neck(neck)

else:

raise NotImplementedError

# 初始化 head

if bbox_head is not None:

self.rpn_head = builder.build_single_stage_head(bbox_head)

# 初始化 extra-head

if extra_head is not None:

self.extra_head = builder.build_single_stage_head(extra_head)

# 传入 cfg 中的参数

self.train_cfg = train_cfg

self.test_cfg = test_cfg

# 初始化权重

self.init_weights(pretrained)

初始化部分都是一样的,点进去这些函数,就会发现其实都是通过 cfg 文件中的配置 分别初始化这些部分,最后都会进到这个 obj_from_dict 函数。

# 根据字典型变量info去指定初始化一个parrent类对象

# 说白了,就是字典型变量中储存了类的初始化变量。核心调用是getattr

# 总之,obj_from_dict是一种做指定初始化的功能函数

def obj_from_dict(info, parent=None, default_args=None):

"""Initialize an object from dict.

The dict must contain the key "type", which indicates the object type, it

can be either a string or type, such as "list" or ``list``. Remaining

fields are treated as the arguments for constructing the object.

Args:

info (dict): Object types and arguments.

parent (:class:`module`): Module which may containing expected object

classes.

default_args (dict, optional): Default arguments for initializing the

object.

Returns:

any type: Object built from the dict.

"""

# 首先,判断info是不是字典,而且里面必须包含type关键字

# 默认参数也要检查是字典或者为None

assert isinstance(info, dict) and 'type' in info

assert isinstance(default_args, dict) or default_args is None

args = info.copy()

obj_type = args.pop('type')

if mmcv.is_str(obj_type):

if parent is not None:

obj_type = getattr(parent, obj_type)

else:

obj_type = sys.modules[obj_type]

elif not isinstance(obj_type, type):

raise TypeError('type must be a str or valid type, but '

f'got {type(obj_type)}')

if default_args is not None:

for name, value in default_args.items():

args.setdefault(name, value)

return obj_type(**args) # 传入arg里面的参数 相当于实例化了这个类

刚开始看这个函数没整明白,细细看了一下,起始就是根据 cfg 中 设置,找到所要初始化的类,然后再传进去 cfg 中的参数,举个栗子:

neck=dict(

type='SpMiddleFHD',

output_shape=[40, 1600, 1408],

num_input_features=4,

num_hidden_features=64 * 5,

),

这是初始化 neck ,cfg 文件中的配置,首先根据 type='SpMiddleFHD' 找到 SpMiddleFHD 这个类,然后再根据 cfg 中的 参数 实例化这个类。此时

return obj_type(**args)

就相当于:

return SpMiddleFHD(output_shape=[40, 1600, 1408], num_input_features=4, num_hidden_features=64 * 5)

ok, 其他的部分的初始化以此类推,都是这么实现的。应该本身代码是基于 mmdetection 实现的,然后 mmdetection 中就是这么实现的,恩,看懂了就行,以后自己再写代码的时候,也可以这么写,也很方便简洁。

2. 前向传递

然后看一下前向传递的函数:注释也在代码里面了

# img.shape [B, 3, 384, 1248]

# img_meta: dict

# img_meta[0]:

# img_shape : tuple (375, 1242, 3)

# sample_idx

# calib

# kwargs:

# 1. anchors list: len(anchors) = B

# 2. voxels list: len(voxels) = B

# 3. coordinates list: len(coordinates) = B

# 4. num_points list: len(num_points) = B

# 5. anchor_mask list: len(anchor_mask) = B

# 6. gt_labels list: len(gt_labels) = B

# 7. gt_bboxes list: len(gt_bboxes) = B

def forward_train(self, img, img_meta, **kwargs):

# --------------------------------------------------------------------------

# from mmdet.datasets.kitti_utils import draw_lidar

# f = draw_lidar(kwargs["voxels"][0].cpu().numpy(), show=True) # 显示 所有点云

# --------------------------------------------------------------------------

batch_size = len(img_meta) # B

ret = self.merge_second_batch(kwargs)

# vx 就是 ret['voxels']

vx = self.backbone(ret['voxels'], ret['num_points'])

# x.shape = [2, 256, 200, 176]

# conv6.shape = [2, 256, 200, 176]

# point_misc : tuple, shape = 3

# : 1. point_mean : shape [N,4] , [:,0] 是 Batch number

# : 2. point_cls : shape [N,1]

# : 3. point_reg : shape [N.3]

(x, conv6), point_misc = self.neck(vx, ret['coordinates'], batch_size)

losses = dict()

aux_loss = self.neck.aux_loss(*point_misc, gt_bboxes=ret['gt_bboxes'])

losses.update(aux_loss)

# RPN forward and loss

if self.with_rpn:

# rpn_outs : tuple, size = 3

# : 1. box_preds : shape [N, 200, 176, 14]

# : 2. cls_preds : shape [N, 200, 176, 2]

# : 3. dir_cls_preds : shape [N, 200, 176, 4]

rpn_outs = self.rpn_head(x)

# rpn_outs : tuple, shape = 8

rpn_loss_inputs = rpn_outs + (ret['gt_bboxes'], ret['gt_labels'], ret['anchors'], ret['anchors_mask'], self.train_cfg.rpn)

rpn_losses = self.rpn_head.loss(*rpn_loss_inputs)

losses.update(rpn_losses)

# guided_anchors.shape :

# [num_of_guided_anchors, 7]

# + [num_of_gt_bboxes, 7]

# ----------------------------

# = [all_num, 7]

guided_anchors = self.rpn_head.get_guided_anchors(*rpn_outs, ret['anchors'], ret['anchors_mask'], ret['gt_bboxes'], thr=0.1)

else:

raise NotImplementedError

# bbox head forward and loss

if self.extra_head:

bbox_score = self.extra_head(conv6, guided_anchors)

refine_loss_inputs = (bbox_score, ret['gt_bboxes'], ret['gt_labels'], guided_anchors, self.train_cfg.extra)

refine_losses = self.extra_head.loss(*refine_loss_inputs)

losses.update(refine_losses)

return losses

首先传进来的参数 会经过 merge_second_batch() 这个函数,看一下:

def merge_second_batch(self, batch_args):

ret = {}

for key, elems in batch_args.items():

if key in [

'voxels', 'num_points',

]:

ret[key] = torch.cat(elems, dim=0)

elif key == 'coordinates':

coors = []

for i, coor in enumerate(elems): # coor.shape : torch.Size([19480, 3])

coor_pad = F.pad(

coor, [1, 0, 0, 0],

mode='constant',

value=i) # 理解 https://blog.csdn.net/jorg_zhao/article/details/105295686

coors.append(coor_pad)

ret[key] = torch.cat(coors, dim=0)

elif key in [

'img_meta', 'gt_labels', 'gt_bboxes',

]:

ret[key] = elems

else:

ret[key] = torch.stack(elems, dim=0)

return ret

主要就是根据 key 把 batch 合并了,这个没什么问题,注意有这么一步:

coor_pad = F.pad(

coor, [1, 0, 0, 0],

mode='constant',

value=i)

coors.append(coor_pad)

这里 F.pad 的用法见: F.pad

目的就是给 coordinates 多加一个维度 (eg: i = 0,1, …),来保存 Batch

然后就是构建 loss 了,总共由三部分组成 :

l o s s _ a l l = a u g _ l o s s + r p n _ l o s s + e x t r a _ h e a d _ l o s s loss\_all =aug\_loss + rpn\_loss + extra\_head\_loss loss_all=aug_loss+rpn_loss+extra_head_loss

之后每部分 loss 的 具体组成 在后面也会具体分析。

二. Auxiliary Network

1. 生成 label

在 Auxiliary Network 中, 需要分割出 前景点 和 背景点,首先需要生成前景点和背景点的 label

def pts_in_boxes3d(pts, boxes3d):

N = len(pts)

M = len(boxes3d)

pts_in_flag = torch.IntTensor(M, N).fill_(0)

reg_target = torch.FloatTensor(N, 3).fill_(0)

points_op_cpu.pts_in_boxes3d(pts.contiguous(), boxes3d.contiguous(), pts_in_flag, reg_target)

return pts_in_flag, reg_target

其中:

pts_in_flag : [M, N] , pts 在 bbox 中,则 mask = 1

疑惑 :reg_target : [N, 3], 值是什么?又是怎么得到的?

需要解决上面一个疑惑,就需要弄懂这个函数 points_op_cpu.pts_in_boxes3d 。这个函数在 mmdet / ops / points_op / src / points_op.cpp 中,来看一下:

int pts_in_boxes3d_cpu(at::Tensor pts, at::Tensor boxes3d, at::Tensor pts_flag, at::Tensor reg_target){

// param pts: (N, 3)

// param boxes3d: (M, 7) [x, y, z, h, w, l, ry]

// param pts_flag: (M, N)

// param reg_target: (N, 3), center offsets

CHECK_CONTIGUOUS(pts_flag);

CHECK_CONTIGUOUS(pts);

CHECK_CONTIGUOUS(boxes3d);

CHECK_CONTIGUOUS(reg_target);

long boxes_num = boxes3d.size(0);

long pts_num = pts.size(0);

int * pts_flag_flat = pts_flag.data<int>();

float * pts_flat = pts.data<float>();

float * boxes3d_flat = boxes3d.data<float>();

float * reg_target_flat = reg_target.data<float>();

// memset(assign_idx_flat, -1, boxes_num * pts_num * sizeof(int));

// memset(reg_target_flat, 0, pts_num * sizeof(float));

// 这里相当于把 tensor 给展开了遍历 (或者说铺平了?更好理解。懂就好)

int i, j, cur_in_flag;

for (i = 0; i < boxes_num; i++){

for (j = 0; j < pts_num; j++){

cur_in_flag = pt_in_box3d_cpu(pts_flat[j * 3], pts_flat[j * 3 + 1], pts_flat[j * 3 + 2], boxes3d_flat[i * 7],

boxes3d_flat[i * 7 + 1], boxes3d_flat[i * 7 + 2], boxes3d_flat[i * 7 + 3],

boxes3d_flat[i * 7 + 4], boxes3d_flat[i * 7 + 5], boxes3d_flat[i * 7 + 6]);

pts_flag_flat[i * pts_num + j] = cur_in_flag;

if(cur_in_flag==1){

reg_target_flat[j*3] = pts_flat[j*3] - boxes3d_flat[i*7];

reg_target_flat[j*3+1] = pts_flat[j*3+1] - boxes3d_flat[i*7+1];

reg_target_flat[j*3+2] = pts_flat[j*3+2] - (boxes3d_flat[i*7+2] + boxes3d_flat[i*7+3] / 2.0);

}

}

}

return 1;

}

其实已经可以大致理解这个函数在干啥了,通过两层循环遍历,判断点云中的所有点是否在所给定的 bbox 中,如果在 bbox 中, 那就将 该点的值 - bbox 中心点的值 ,就是 reg_target, 用公式表示就是:

r e g _ t a r g e t = P i ( x , y , z ) − P c e n t e r ( x , y , z ) reg\_target =P_{i}(x, y, z) -P_{center}(x,y,z) reg_target=Pi(x,y,z)−Pcenter(x,y,z)

ok,上面的疑问也解开了

2. loss 构建

三. SSDRotateHead

这部分是整个网络的 head 部分,先简单列出来,然后来具体分析一下。

class SSDRotateHead(nn.Module):

def __init__(self,

num_class=1,

num_output_filters=768,

num_anchor_per_loc=2,

use_sigmoid_cls=True,

encode_rad_error_by_sin=True,

use_direction_classifier=True,

box_coder='GroundBox3dCoder',

box_code_size=7,

):

super(SSDRotateHead, self).__init__()

self._num_class = num_class

self._num_anchor_per_loc = num_anchor_per_loc

self._use_direction_classifier = use_direction_classifier

self._use_sigmoid_cls = use_sigmoid_cls

self._encode_rad_error_by_sin = encode_rad_error_by_sin

self._use_direction_classifier = use_direction_classifier

self._box_coder = getattr(boxCoders, box_coder)()

self._box_code_size = box_code_size

self._num_output_filters = num_output_filters

if use_sigmoid_cls: # True

num_cls = num_anchor_per_loc * num_class # 2 * 1

else:

num_cls = num_anchor_per_loc * (num_class + 1)

self.conv_cls = nn.Conv2d(num_output_filters, num_cls, 1)

self.conv_box = nn.Conv2d(

num_output_filters, num_anchor_per_loc * box_code_size, 1)

if use_direction_classifier:

self.conv_dir_cls = nn.Conv2d(

num_output_filters, num_anchor_per_loc * 2, 1)

def add_sin_difference(self, boxes1, boxes2):

def get_direction_target(self, anchors, reg_targets, use_one_hot=True):

def prepare_loss_weights(self, labels,

pos_cls_weight=1.0,

neg_cls_weight=1.0,

loss_norm_type='NormByNumPositives',

dtype=torch.float32):

def create_loss(self,

box_preds, # torch.Size([2, 200, 176, 14])

cls_preds, # torch.Size([2, 200, 176, 2])

cls_targets, # torch.Size([2, 70400])

cls_weights, # torch.Size([2, 70400])

reg_targets, # torch.Size([2, 70400, 7])

reg_weights, # torch.Size([2, 70400])

num_class, # 1

use_sigmoid_cls=True, # True

encode_rad_error_by_sin=True, # True

box_code_size=7): # 7

def forward(self, x):

def get_guided_anchors(self, box_preds, cls_preds, dir_cls_preds, anchors, anchors_mask, gt_bboxes, thr=.1):

1. 前向传递

首先看一下 前向传递 forward 函数 :

def forward(self, x): # torch.Size([2, 256, 200, 176])

box_preds = self.conv_box(x)

cls_preds = self.conv_cls(x)

# [N, C, y(H), x(W)]

box_preds = box_preds.permute(0, 2, 3, 1).contiguous() # torch.Size([2, 200, 176, 14])

cls_preds = cls_preds.permute(0, 2, 3, 1).contiguous() # torch.Size([2, 200, 176, 2])

if self._use_direction_classifier:

dir_cls_preds = self.conv_dir_cls(x)

dir_cls_preds = dir_cls_preds.permute(0, 2, 3, 1).contiguous() # torch.Size([2, 200, 176, 4])

return box_preds, cls_preds, dir_cls_preds

输入就是经过 backbone 得到的 feature map , 然后分成两支,分别预测bbox和物体的类别。

2. loss 构建

看一下,loss 是怎么构建的:

# input

# box_preds : torch.Size([2, 200, 176, 14])

# cls_preds : torch.Size([2, 200, 176, 2])

# gt_bboxes : list:len(gt_bboxes) = B , gt_bboxes[0].shape = torch.Size([num_of_gt_bboxes, 7])

# anchor : torch.Size([2, 70400, 7])

# anchor_mask : torch.Size([2, 70400])

# cfg : from car_cfg.py / train_cfg

def loss(self, box_preds, cls_preds, dir_cls_preds, gt_bboxes, gt_labels, anchors, anchors_mask, cfg):

batch_size = box_preds.shape[0]

# ADD----------------------------------------------------------------------------------------------

add_for_test = False

add_for_pkl = False

# for show gt_bboxes

if add_for_test == True:

bbox3d_for_test = gt_bboxes[0].cpu().numpy()

draw_gt_boxes3d_for_test(center_to_corner_box3d(bbox3d_for_test), draw_text=True, show=True)

# for vis anchor

if add_for_pkl == True:

pkl_data = {}

pkl_data['anchors'] = anchors

pkl_data['anchors_mask'] = anchors_mask

import pickle

with open("/home/seivl/pkl_data.pkl", 'wb') as fo:

pickle.dump(pkl_data, fo)

#-----------------------------------------------------------------------------------------------

# 第一个 create_target_torch 是函数

# 后面变量相当于传参数 进这个函数

# targets 是 reg 的 target

labels, targets, ious = multi_apply(create_target_torch,

anchors, gt_bboxes,

anchors_mask, gt_labels,

similarity_fn=getattr(iou3d_utils, cfg.assigner.similarity_fn)(),

box_encoding_fn = second_box_encode,

matched_threshold=cfg.assigner.pos_iou_thr,

unmatched_threshold=cfg.assigner.neg_iou_thr,

box_code_size=self._box_code_size)

labels = torch.stack(labels,)

targets = torch.stack(targets)

# 生成 cls 和 reg 的权重

cls_weights, reg_weights, cared = self.prepare_loss_weights(labels)

# 生成 cls 的 target

cls_targets = labels * cared.type_as(labels)

# 构建 loss

# 具体解析见下

loc_loss, cls_loss = self.create_loss(

box_preds=box_preds,

cls_preds=cls_preds,

cls_targets=cls_targets,

cls_weights=cls_weights,

reg_targets=targets,

reg_weights=reg_weights,

num_class=self._num_class,

encode_rad_error_by_sin=self._encode_rad_error_by_sin,

use_sigmoid_cls=self._use_sigmoid_cls,

box_code_size=self._box_code_size,

)

loc_loss_reduced = loc_loss / batch_size

loc_loss_reduced *= 2 # loc_loss 的权重

cls_loss_reduced = cls_loss / batch_size

cls_loss_reduced *= 1

loss = loc_loss_reduced + cls_loss_reduced

if self._use_direction_classifier:

# 生成与 dir_cls_preds 对应的真值 dir_labels

dir_labels = self.get_direction_target(anchors, targets, use_one_hot=False).view(-1)

dir_logits = dir_cls_preds.view(-1, 2)

# 设置权值是为了仅仅考虑 labels > 0 的目标(即车这一类)

weights = (labels > 0).type_as(dir_logits)

weights /= torch.clamp(weights.sum(-1, keepdim=True), min=1.0)

# 使用交叉熵做朝向预测的误差损失函数

dir_loss = weighted_cross_entropy(dir_logits, dir_labels,

weight=weights.view(-1),

avg_factor=1.)

dir_loss_reduced = dir_loss / batch_size

dir_loss_reduced *= .2

loss += dir_loss_reduced

return dict(rpn_loc_loss=loc_loss_reduced, rpn_cls_loss=cls_loss_reduced, rpn_dir_loss=dir_loss_reduced)

里面有一个很重要的函数 create_target_torch,是用来生成 label 用的, 具体分析在后面。

具体的 loss 构建函数:

def create_loss(self,

box_preds, # torch.Size([2, 200, 176, 14])

cls_preds, # torch.Size([2, 200, 176, 2])

cls_targets, # torch.Size([2, 70400])

cls_weights, # torch.Size([2, 70400])

reg_targets, # torch.Size([2, 70400, 7])

reg_weights, # torch.Size([2, 70400])

num_class, # 1

use_sigmoid_cls=True, # True

encode_rad_error_by_sin=True, # True

box_code_size=7): # 7

batch_size = int(box_preds.shape[0]) # B = 2

box_preds = box_preds.view(batch_size, -1, box_code_size) # torch.Size([2, 70400, 7])

if use_sigmoid_cls:

cls_preds = cls_preds.view(batch_size, -1, num_class) # torch.Size([2, 70400, 1])

else:

cls_preds = cls_preds.view(batch_size, -1, num_class + 1)

one_hot_targets = one_hot(

cls_targets, depth=num_class + 1, dtype=box_preds.dtype) # torch.Size([2, 70400, 2])

if use_sigmoid_cls:

one_hot_targets = one_hot_targets[..., 1:] # torch.Size([2, 70400, 1])

if encode_rad_error_by_sin:

box_preds, reg_targets = self.add_sin_difference(box_preds, reg_targets)

# torch.Size([2, 70400, 7])

# torch.Size([2, 70400, 7])

loc_losses = weighted_smoothl1(box_preds, reg_targets, beta=1 / 9., \

weight=reg_weights[..., None], avg_factor=1.)

cls_losses = weighted_sigmoid_focal_loss(cls_preds, one_hot_targets, \

weight=cls_weights[..., None], avg_factor=1.)

return loc_losses, cls_losses

3. 生成 label

主要在 create_target_torch 这个函数中,注释和解析如下,

这段代码的作用 主要是为了:

生成 anchor 的 labelbbox 回归的 target- 同时返回 每个 anchor 和 每个 gt_bbox 的 iou

# all_anchors : torch.Size([70400, 7])

# gt_boxes : torch.Size([num_of_gt_bbox, 7])

# anchor_mask : torch.Size(70400,)

# gt_classes : num_of_gt_bbox eg: 14

# similarity_fn : ok 未完待续。