Chap-3: TCP/IP in Embedded Systems

Two guiding principles allow protocol stacks to be implemented as shown in the OSI model: information hiding and encapsulation.

The physical layer (PHY) is responsible for the modulation and electrical details of data transmission.

One of the responsibilities of the data link layer is to provide an error-free transmission channel known as Connection Oriented (CO) service. Another function of the link layer is to establish the type of framing to be used when the IP packet is transmitted.

The network layer (IP layer) contains the knowledge of network topology. It includes the routing protocols and understands the network addressing scheme. Although the main responsibility of this layer is routing of packets, it also provides fragmentation to break large packets into smaller pieces so they can be transmitted across an interface that has a small Maximum Transmission Unit (MTU). Another function of IP is the capability to demultiplex incoming packets to the transport protocol each one is destined for.
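
The fragmentation arithmetic can be sketched in a few lines of userspace C. This is only an illustration, not kernel code: the function names are made up for these notes, and it relies on the fact that the IP fragment offset field counts in 8-byte units, so every fragment except the last must carry a multiple of 8 bytes.

```c
#include <stddef.h>

/* Largest payload a non-final fragment can carry: the MTU minus the IP
 * header, rounded down to a multiple of 8 (fragment offsets are counted
 * in 8-byte units). Hypothetical helper, not a kernel function. */
size_t frag_payload_max(size_t mtu, size_t ip_hdr_len)
{
    if (mtu <= ip_hdr_len)
        return 0;
    return ((mtu - ip_hdr_len) / 8) * 8;
}

/* Number of IP packets needed to carry payload_len bytes across a link
 * with the given MTU. Returns 0 if the payload cannot be carried at all. */
size_t frag_count(size_t payload_len, size_t mtu, size_t ip_hdr_len)
{
    size_t last_max, per_frag;

    if (mtu <= ip_hdr_len)
        return 0;
    last_max = mtu - ip_hdr_len;        /* the final fragment need not be 8-aligned */
    if (payload_len <= last_max)
        return 1;                       /* fits without fragmentation */
    per_frag = frag_payload_max(mtu, ip_hdr_len);
    if (per_frag == 0)
        return 0;
    /* one final fragment plus enough full-size fragments for the rest */
    return 1 + (payload_len - last_max + per_frag - 1) / per_frag;
}
```

Assuming a 20-byte IP header without options and an Ethernet MTU of 1500, a 4000-byte payload goes out as three fragments carrying 1480, 1480 and 1040 bytes.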

Differentiating among the classes of addresses is a significant function of the IP layer. There are three fundamental types of IP addresses: unicast addresses for sending a packet to an individual destination, multicast addresses for sending data to multiple destinations, and broadcast addresses for sending packets to everyone within reach.
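
A minimal sketch of how the three address types can be told apart for IPv4. The enum and function names are invented for this example. It only recognises the limited broadcast address 255.255.255.255; detecting a subnet-directed broadcast would also need the interface's netmask, which is left out here.

```c
#include <stdint.h>

enum ip_addr_type { IP_UNICAST, IP_MULTICAST, IP_BROADCAST };

/* addr is an IPv4 address in host byte order,
 * e.g. 0xC0A80001 for 192.168.0.1. */
enum ip_addr_type classify_ipv4(uint32_t addr)
{
    if (addr == 0xFFFFFFFFu)
        return IP_BROADCAST;            /* limited broadcast 255.255.255.255 */
    if ((addr & 0xF0000000u) == 0xE0000000u)
        return IP_MULTICAST;            /* 224.0.0.0/4 */
    return IP_UNICAST;                  /* everything else in this simplified view */
}
```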

The purpose of ARP is to determine the physical (hardware) destination address that corresponds to a given destination IP address.

The transport layer in TCP/IP consists of two major protocols. It contains a connection-oriented, reliable service, otherwise known as a streaming service, provided by the TCP protocol. In addition, TCP/IP includes an individual packet transmission service known as an unreliable or datagram service, which is provided by UDP.

TCP divides the data stream into segments. The sequence number and acknowledgment number fields are byte pointers that keep track of the position of the segments within the data stream.
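
Because the sequence number is a 32-bit byte pointer, it wraps around on long-lived connections. The following sketch (helper names are made up here) shows how the next sequence number follows from the payload length, and how two sequence numbers can be compared safely across the wrap. It ignores the fact that SYN and FIN each consume one sequence number.

```c
#include <stdint.h>

/* Sequence number of the first byte after a segment that starts at seq
 * and carries payload_len bytes. Unsigned arithmetic wraps modulo 2^32,
 * which is exactly the behaviour TCP sequence space needs. */
uint32_t tcp_next_seq(uint32_t seq, uint32_t payload_len)
{
    return seq + payload_len;
}

/* Non-zero if a comes before b in sequence space, tolerating wraparound.
 * The signed view of the difference is negative when a is "behind" b. */
int tcp_seq_before(uint32_t a, uint32_t b)
{
    return (int32_t)(a - b) < 0;
}
```

A receiver that has accepted a segment starting at 1000 with 500 bytes of data would acknowledge 1500, the next byte it expects.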

A main advantage of sockets in the Unix or Linux environment is that the socket is treated as a file descriptor, and all the standard IO functions work on sockets in the same way they work on a local file.
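
A small sketch of this point: the function below (its name is invented for the example) pushes a message through a connected pair of sockets using nothing but write(), read() and close(), the same calls that work on an ordinary file. A local AF_UNIX socketpair is used so the example needs no network setup; that choice is an assumption of the sketch, not something the book prescribes.

```c
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Send msg through a connected socket pair using only generic file
 * descriptor calls. Copies what arrives into out (NUL-terminated) and
 * returns the number of bytes read, or -1 on error. */
ssize_t echo_through_socket(const char *msg, char *out, size_t out_size)
{
    int sv[2];
    ssize_t n;
    size_t len = strlen(msg);

    if (out_size == 0)
        return -1;
    if (len == 0) {                     /* nothing to send; avoid a blocking read */
        out[0] = '\0';
        return 0;
    }
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) < 0)
        return -1;

    if (write(sv[0], msg, len) != (ssize_t)len) {
        n = -1;
    } else {
        n = read(sv[1], out, out_size - 1);   /* plain read(), as on a file */
        if (n >= 0)
            out[n] = '\0';
    }
    close(sv[0]);
    close(sv[1]);
    return n;
}
```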

The session layer can be thought of as analogous to a signaling protocol where information is exchanged between end points about how to set up a session.

One widely used session layer protocol is the Telnet protocol.

Specific Requirements for Embedded OSs:

Timer facility;

Concurrency and multitasking;

Buffer management;

Link layer facility;

Low latency;

Minimal data copying

Chap-4: Linux Networking Interfaces and Device Drivers

The TCP/IP stack provides a registration mechanism between the device drivers and the layer above. This mechanism allows the output routines in the networking layer, such as IP, to call the driver's transmit function for a specific interface port without needing to know the driver's internal details.

The net_device structure, defined in file linux/include/linux/netdevice.h, is the data structure that defines an instance of a network interface. It tracks the state information of all the network interface devices attached to the TCP/IP stack.

Network Device Initialization:

When the network interface driver's initialization or probe function is called, the first thing it does is allocate the driver's private data structure. Next, it must set a few key fields in the structure, calling dev_alloc_name to set up the name string, and then directly initializing the other device-specific fields in the net_device structure.

alloc_etherdev calls alloc_netdev and passes it a pointer to a setup function (ether_setup for Ethernet devices) as the third argument, after the private data size and the name template.

The initialization function in each driver must allocate the net_device structure, which is used to connect the network interface driver with the network layer protocols.

Once the net_device structure is initialized, we can register it.

    if ((rc = register_netdev(dev)) != 0) {
        goto err_dealloc;
    }

    struct pci_driver {
        struct list_head node;
        char *name;
        const struct pci_device_id *id_table;   /* pointer to the PCI configuration space information;
                                                   must be non-NULL for probe to be called */
        int  (*probe)(struct pci_dev *dev, const struct pci_device_id *id);   /* New device inserted */
        void (*remove)(struct pci_dev *dev);    /* Device removed (NULL if not a hot-plug capable driver) */
        int  (*suspend)(struct pci_dev *dev, pm_message_t state);   /* Device suspended */
        int  (*suspend_late)(struct pci_dev *dev, pm_message_t state);
        int  (*resume_early)(struct pci_dev *dev);
        int  (*resume)(struct pci_dev *dev);    /* Device woken up */
        void (*shutdown)(struct pci_dev *dev);
        struct pci_error_handlers *err_handler;
        struct device_driver driver;
        struct pci_dynids dynids;
    };

After all the initialization of the net device structure and the associated private data structure is complete, the driver can be registered as a networking device.

Network device registration consists of putting the driver’s net_device structure on a linked list.

Most of the functions involved in network device registration use the name field in the net_device structure. This is why driver writers should use the dev_alloc_name function to ensure that the name field is formatted properly.


The list of net devices is protected by the netlink mutex locking and unlocking functions, rtnl_lock and rtnl_unlock. The list of devices should not be manipulated without taking this lock; otherwise the device list can become corrupted, or two devices that try to register in parallel can be assigned the same name.

    int register_netdev(struct net_device *dev)
    {
        int err;

        rtnl_lock();

        /*
         * If the name is a format string the caller wants us to do a
         * name allocation.
         */
        if (strchr(dev->name, '%')) {
            err = dev_alloc_name(dev, dev->name);
            if (err < 0)
                goto out;
        }

        err = register_netdevice(dev);
    out:
        rtnl_unlock();
        return err;
    }
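
The name allocation step can be pictured with a small userspace model. This is not the kernel's dev_alloc_name; the function name, the limit of 100 indexes and the plain array of names in use are all assumptions made for the sketch. It shows the idea only: a template such as "eth%d" is expanded with the lowest index that no registered device is using yet.

```c
#include <stdio.h>
#include <string.h>

#define MODEL_IFNAMSIZ 16   /* mirrors the kernel's IFNAMSIZ */

/* Expand a template ending in "%d" (e.g. "eth%d") to the first name that
 * is not in the in_use list. Writes the name to out and returns the
 * chosen index, or -1 if the template is unsupported or no index is free.
 * Hypothetical model, only a trailing "%d" is handled. */
int model_alloc_name(const char *tmpl, const char *const in_use[], int n_in_use,
                     char out[MODEL_IFNAMSIZ])
{
    const char *pct = strchr(tmpl, '%');
    int prefix_len, i, j;

    if (pct == NULL || strcmp(pct, "%d") != 0)
        return -1;
    prefix_len = (int)(pct - tmpl);

    for (i = 0; i < 100; i++) {         /* arbitrary upper bound for the model */
        int taken = 0;

        snprintf(out, MODEL_IFNAMSIZ, "%.*s%d", prefix_len, tmpl, i);
        for (j = 0; j < n_in_use; j++) {
            if (strcmp(out, in_use[j]) == 0) {
                taken = 1;
                break;
            }
        }
        if (!taken)
            return i;
    }
    return -1;
}
```

With eth0 and eth1 already registered, the template "eth%d" yields eth2. In the real kernel this search runs under rtnl_lock, which is what prevents two devices registering in parallel from ending up with the same name.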

Network Device Registration Utility Functions:

The first function dev_get_by_name finds a device by name. It can be called from any context because it does its own locking. It returns a pointer to a net_device based on the string name.

struct net_device * dev_get_by_name(const char *name);

When a device is unregistered, we notify any interested protocols that this device is about to be destroyed by calling notifier_call_chain:

notifier_call_chain (&netdev_chain , NETDEV_UNREGISTER , dev );

    int register_netdev(struct net_device *dev);
    int register_netdevice(struct net_device *dev);
    void unregister_netdev(struct net_device *dev);
    struct net_device *alloc_etherdev(int sizeof_priv);

Network Interface Driver Service Functions:

The driver's open function is called through the open field in the net_device structure.

int (*open) (struct net_device *dev);

Open is called by the generic dev_open function in linux/net/core/dev.c.

    int dev_open(struct net_device *dev);

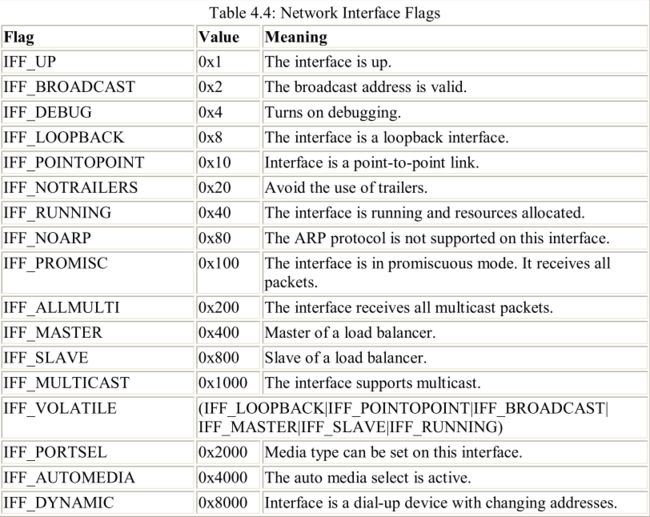

First, dev_open checks to see if the device has already been activated by checking for IFF_UP in the flags field of the net_device structure, and if the driver is already up, we simply return a zero. Next, it checks to see if the physical device is present by calling netif_device_present, which checks the link state bits in the state field of the network device structure. If all this succeeds, dev_open calls the driver through the open field in the net_device structure.

Most drivers use the open function to initialize their internal data structures prior to accepting and transmitting packets. These structures may include the internal queues, watchdog timers, and lists of internal buffers. Next, the driver generally starts up the transmit queue by calling netif_start_queue, defined in linux/include/linux/netdevice.h, which starts the queue by clearing the __LINK_STATE_XOFF bit in the state field of the net_device structure. The states are listed in Table 4.1 and are used by the queuing layer to control the transmit queues for the device. See Section 4.8 for a description of how the packet queuing layer works. Right up to the point where the queuing is started, the driver can change the device's queuing discipline. Chapter 6 has more detail about Linux's capability to work with multiple queuing disciplines for packet transmission queues.

If everything is OK, IFF_UP is set in the flags field and __LINK_STATE_START is set in the state field to indicate that the network link is active and ready to receive packets. Next, dev_open calls dev_mc_upload to set up the list of multicast addresses for this device. Finally, dev_open calls dev_activate, which sets up a default queuing discipline for the device, typically pfifo_fast for hardware devices and none for pseudo or software devices.
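
The control flow of dev_open described above can be modelled in userspace as follows. Everything prefixed with model_ is invented for this sketch. The real function works on struct net_device and also handles the state bits, multicast list and queuing discipline, all of which are left out here.

```c
#include <errno.h>

#define MODEL_IFF_UP 0x1    /* same value as the real IFF_UP flag */

/* Cut-down stand-in for struct net_device. */
struct model_dev {
    unsigned int flags;
    int present;                            /* stands in for netif_device_present() */
    int (*open)(struct model_dev *dev);     /* driver's open callback, may be NULL */
    int open_calls;                         /* bookkeeping for the demonstration */
};

int model_dev_open(struct model_dev *dev)
{
    int ret = 0;

    if (dev->flags & MODEL_IFF_UP)
        return 0;                   /* already up: nothing to do */
    if (!dev->present)
        return -ENODEV;             /* the hardware is not there */
    if (dev->open)
        ret = dev->open(dev);       /* let the driver bring itself up */
    if (ret == 0)
        dev->flags |= MODEL_IFF_UP; /* mark the interface as running */
    return ret;
}

/* Example driver callback that only counts how often it is invoked. */
int model_driver_open(struct model_dev *dev)
{
    dev->open_calls++;
    return 0;
}
```

Calling model_dev_open twice on the same device invokes the driver callback only once, because the second call returns early on the IFF_UP check.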

The set_multicast_list driver service function initializes the list of multicast addresses for the interface.

void (*set_multicast_list )(struct net_device *dev );

Device multicast addresses are contained in a generic structure, dev_mc_list, which allows the interface to support one or more link layer multicast addresses.

    struct dev_mc_list
    {
        struct dev_mc_list *next;
        __u8 dmi_addr[MAX_ADDR_LEN];
        unsigned char dmi_addrlen;
        int dmi_users;
        int dmi_gusers;
    };

The hard_start_xmit network interface service function starts the transmission of an individual packet or queue of packets.

    int (*hard_start_xmit)(struct sk_buff *skb, struct net_device *dev);

This function is called from the network queuing layer when a packet is ready for transmission. The first thing the driver must do in hard_start_xmit is ensure that there are hardware resources available for transmitting the packet and there is a sufficient number of available buffers.
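
The resource check can be illustrated with a toy transmit ring in userspace. The structure, the ring size of 4 and the return values 0 and 1 are assumptions of this sketch, not the driver API. The queue_stopped field stands in for what a real driver does with netif_stop_queue and netif_wake_queue.

```c
#define MODEL_TX_RING_SIZE 4    /* power of two, tiny on purpose */

struct model_tx_ring {
    const void *slots[MODEL_TX_RING_SIZE];
    unsigned int head;          /* next slot to fill */
    unsigned int tail;          /* next slot the hardware will complete */
    int queue_stopped;          /* stands in for netif_stop_queue() */
};

/* Returns 0 if the packet was queued, 1 if no descriptor was free. */
int model_start_xmit(struct model_tx_ring *ring, const void *pkt)
{
    if (ring->head - ring->tail == MODEL_TX_RING_SIZE) {
        ring->queue_stopped = 1;
        return 1;                       /* busy: caller must retry later */
    }
    ring->slots[ring->head % MODEL_TX_RING_SIZE] = pkt;
    ring->head++;
    if (ring->head - ring->tail == MODEL_TX_RING_SIZE)
        ring->queue_stopped = 1;        /* no room left for the next packet */
    return 0;
}

/* Called when the hardware reports one finished transmission. */
void model_tx_complete(struct model_tx_ring *ring)
{
    if (ring->head != ring->tail) {
        ring->tail++;
        ring->queue_stopped = 0;        /* stands in for netif_wake_queue() */
    }
}
```

Stopping the queue as soon as the ring fills means the queuing layer normally stops calling the transmit function before it would have to be refused.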

The change_mtu network interface service function changes the Maximum Transmission Unit (MTU) of a device.

    int (*change_mtu)(struct net_device *dev, int new_mtu);

get_stats returns a pointer to the network device statistics.

struct net_device_stats* (* get_stats)(struct net_device *dev);

do_ioctl implements any device-specific socket IO control (ioctl) functions.

int (*do_ioctl )(struct net_device *dev , struct ifreq *ifr , int cmd );

Receiving Packets:

As is the case with any hardware device driver in other operating systems, the first step in packet reception occurs when the device responds to an interrupt from the network interface hardware.

If we detect a receive interrupt, we know that a received packet is available for processing, so we can begin to perform the steps necessary for packet reception. One of the first things we must do is gather the buffer containing the raw received packet into a socket buffer or sk_buff. Most efficient drivers will avoid copying the data at this step. In Linux, the socket buffers are used to contain network data packets, and they can be set up to point directly to the DMA space.

Generally, network interface drivers maintain a list of sk_buffs in their private data structure, and once the interrupt indicates that input DMA is complete, we can place the socket buffer containing the new packet on a queue of packets ready for processing by the protocol’s input function.

The netif_rx function declared in file linux/include/linux/netdevice.h is called by the ISR to invoke the input side of packet processing and queue up the packet for processing by the packet receive softirq, NET_RX_SOFTIRQ.

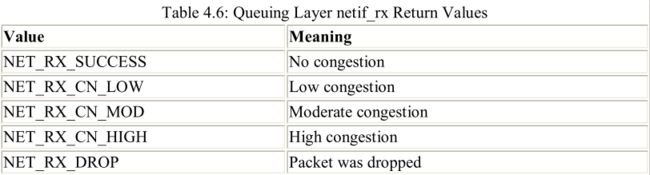

The netif_rx function returns a value indicating the amount of network congestion detected by the queuing layer or whether the packet was dropped altogether. Table 4.6 shows the return values for netif_rx.
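
A rough userspace model of the queue-or-drop decision is shown below. The names, the backlog limit of 3 and the two return codes are assumptions of the sketch. The congestion levels from Table 4.6 are not modelled, only success and drop.

```c
#define MODEL_RX_SUCCESS  0
#define MODEL_RX_DROP     1
#define MODEL_MAX_BACKLOG 3     /* tiny limit for the demonstration */

struct model_backlog {
    int queued;     /* packets waiting for the receive softirq */
    int dropped;    /* packets refused because the queue was full */
};

/* Called from the (modelled) ISR for each received packet. */
int model_netif_rx(struct model_backlog *q)
{
    if (q->queued >= MODEL_MAX_BACKLOG) {
        q->dropped++;
        return MODEL_RX_DROP;
    }
    q->queued++;                /* the real code would also raise NET_RX_SOFTIRQ */
    return MODEL_RX_SUCCESS;
}

/* Stand-in for the softirq handler: process at most budget packets and
 * return how many were handled. */
int model_rx_softirq(struct model_backlog *q, int budget)
{
    int done = q->queued < budget ? q->queued : budget;

    q->queued -= done;
    return done;
}
```

The point of the split is that the interrupt handler only queues the packet and returns quickly, while the protocol processing happens later in softirq context.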

netif_rx is the main function called from interrupt service routines in network interface drivers. It is defined in file linux/net/core/dev.c.

netif_rx places incoming packets on a per-CPU softnet_data structure. Starting with Linux version 2.4, this structure includes a copy of a pseudo net device structure called blog_dev, otherwise known as the backlog device.

    /*
     * Incoming packets are placed on per-cpu queues so that
     * no locking is needed.
     */
    struct softnet_data {
        struct Qdisc *output_queue;
        struct sk_buff_head input_pkt_queue;
        struct list_head poll_list;
        struct sk_buff *completion_queue;
        struct napi_struct backlog;
    };

    struct napi_struct {
        /* The poll_list must only be managed by the entity which
         * changes the state of the NAPI_STATE_SCHED bit. This means
         * whoever atomically sets that bit can add this napi_struct
         * to the per-cpu poll_list, and whoever clears that bit
         * can remove from the list right before clearing the bit.
         */
        struct list_head poll_list;
        unsigned long state;
        int weight;
        int (*poll)(struct napi_struct *, int);
    #ifdef CONFIG_NETPOLL
        spinlock_t poll_lock;
        int poll_owner;
    #endif
        unsigned int gro_count;
        struct net_device *dev;
        struct list_head dev_list;
        struct sk_buff *gro_list;
        struct sk_buff *skb;
    };

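The former softnet_data has been split into two parts (softnet_data and napi_struct), but the functionality it implements is essentially the same.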

Backlog_dev is used by the packet queuing layer to store the packet queues for most nonpolling network interface drivers. The blog_dev device is used instead of the "real" net_device structure to hold the queues, but the actual device is still used to keep track of the network interface from which the packet arrived as the packet is processed by the upper layer protocols.

Transmitting Packets:

Packet transmission is controlled by the upper layers, not by the network interface driver.

The driver's hard_start_xmit function is actually called from the packet queuing layer when there are one or more packets in a socket buffer ready to transmit. In most drivers, when it is called from the queuing layer, hard_start_xmit will put the sk_buff on a local queue in the driver's private data structure and enable the transmit available interrupt.

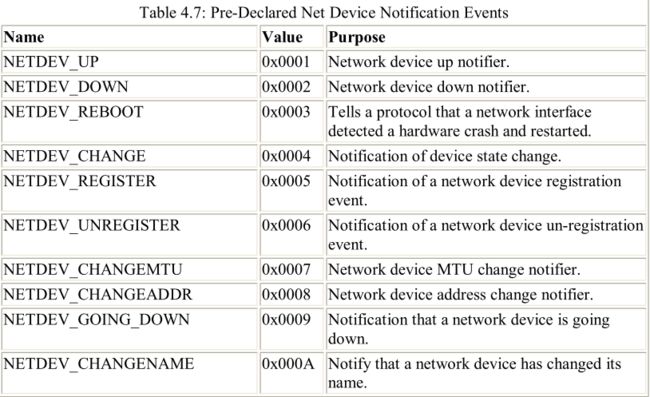

Linux provides a mechanism for device status change notification called notifier chains.

Each location in the linked list is defined by an instance of the notifier_block structure.

    /*
     * Notifier chains are of four types:
     *
     *  Atomic notifier chains: Chain callbacks run in interrupt/atomic
     *      context. Callouts are not allowed to block.
     *  Blocking notifier chains: Chain callbacks run in process context.
     *      Callouts are allowed to block.
     *  Raw notifier chains: There are no restrictions on callbacks,
     *      registration, or unregistration. All locking and protection
     *      must be provided by the caller.
     *  SRCU notifier chains: A variant of blocking notifier chains, with
     *      the same restrictions.
     *
     * atomic_notifier_chain_register() may be called from an atomic context,
     * but blocking_notifier_chain_register() and srcu_notifier_chain_register()
     * must be called from a process context. Ditto for the corresponding
     * _unregister() routines.
     *
     * atomic_notifier_chain_unregister(), blocking_notifier_chain_unregister(),
     * and srcu_notifier_chain_unregister() _must not_ be called from within
     * the call chain.
     *
     * SRCU notifier chains are an alternative form of blocking notifier chains.
     * They use SRCU (Sleepable Read-Copy Update) instead of rw-semaphores for
     * protection of the chain links. This means there is _very_ low overhead
     * in srcu_notifier_call_chain(): no cache bounces and no memory barriers.
     * As compensation, srcu_notifier_chain_unregister() is rather expensive.
     * SRCU notifier chains should be used when the chain will be called very
     * often but notifier_blocks will seldom be removed. Also, SRCU notifier
     * chains are slightly more difficult to use because they require special
     * runtime initialization.
     */
    struct notifier_block {
        int (*notifier_call)(struct notifier_block *, unsigned long, void *);
        struct notifier_block *next;
        int priority;
    };

The first of these, notifier_chain_register, registers a notifier_block with the event notification facility.

    int notifier_chain_register(struct notifier_block **list, struct notifier_block *n);

To pass an event into the notification call chain, the function notifier_call_chain is called with a pointer to the notifier_block list, n, an event value, val, and an optional generic argument, v.

    int notifier_call_chain(struct notifier_block **n, unsigned long val, void *v);

int register_netdevice_notifier( struct notifier_block * nb);

A notification function will be called through the notifier_call field in nb for all the event types in Table 4.7.

int (*notifier_call )(struct notifier_block *self , unsigned long, void *);
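
To make the mechanism concrete, here is a small userspace model of a notifier chain. The model_ names are invented, there is no locking, and unlike the kernel's notifier_call_chain (which returns the last callback's return value) this version returns how many callbacks were invoked, purely so the behaviour is easy to observe. What it keeps from the original is the shape of the notifier_block structure, the priority-ordered insertion and the walk over the linked list.

```c
#include <stddef.h>

#define MODEL_NOTIFY_DONE 0     /* keep walking the chain */
#define MODEL_NOTIFY_STOP 1     /* stop after this callback */

struct model_notifier {
    int (*call)(struct model_notifier *self, unsigned long event, void *data);
    struct model_notifier *next;
    int priority;
};

/* Insert n so that blocks with higher priority are called first; a block
 * with equal priority goes after the ones already registered. */
void model_chain_register(struct model_notifier **list, struct model_notifier *n)
{
    while (*list && (*list)->priority >= n->priority)
        list = &(*list)->next;
    n->next = *list;
    *list = n;
}

/* Pass event and data to every block in order. Returns the number of
 * callbacks invoked (a simplification made for this model). */
int model_call_chain(struct model_notifier **list, unsigned long event, void *data)
{
    struct model_notifier *nb = *list;
    int calls = 0;

    while (nb) {
        struct model_notifier *next = nb->next;

        calls++;
        if (nb->call(nb, event, data) == MODEL_NOTIFY_STOP)
            break;
        nb = next;
    }
    return calls;
}

/* Example callback: adds the event value to the counter passed in data. */
int model_count_event(struct model_notifier *self, unsigned long event, void *data)
{
    (void)self;
    *(unsigned long *)data += event;
    return MODEL_NOTIFY_DONE;
}
```

A protocol interested in device events would fill in one such block with its own callback and register it once. After that, every event passed into the chain reaches it without the sender knowing who is listening.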

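Note: This series of study notes mainly follows the book "The Linux TCP/IP Stack Networking for Embedded Systems". I find the book's line of explanation relatively easy to follow, but its content targets early 2.6 kernels. While studying, I read it alongside the 2.6.34 kernel source and found that the kernel protocol stack has changed in many places. The main line is essentially unchanged; mostly the kernel developers have refactored the code and improved efficiency. These notes follow the book's main thread while referring mainly to the 2.6.34 kernel source. This approach may not be entirely appropriate, and I welcome corrections and guidance from readers.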