Configuring dm-cache on CentOS

dm-cache concepts:

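dm-cache was the first SSD caching solution merged into the mainline kernel (in 3.9), and it is currently the most stable one. In the 3.10 kernel, dm-cache uses the new smq eviction policy (upstream, smq was merged in Linux 4.2), which reduces memory consumption and improves performance.

Like flashcache, dm-cache is built on the device-mapper framework.

In kernel 2.6.x, dm-cache used the same set-associative hash as flashcache. In 3.x the code was refactored: that algorithm was removed entirely and replaced by mq (multiqueue), and after 3.10 dm-cache uses smq (stochastic multiqueue). smq fixes several of mq's problems, for example memory usage: mq needs 88 bytes per cache block, while smq needs only 25 bytes.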
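To make the per-block figures concrete, here is a rough estimate of the policy's in-memory footprint. It assumes the 50 GiB SSD and the 256 KiB (512-sector) cache blocks used in the configuration below, i.e. 204800 cache blocks, and it only multiplies the block count by the quoted per-block cost; any other overhead is ignored. The function name policy_mem_bytes is hypothetical.

```shell
# Rough in-memory footprint of the cache policy:
#   number of cache blocks * bytes of policy state per block.
# Assumption: 50 GiB SSD and 256 KiB (512-sector) cache blocks,
# as in the configuration steps.
SSD_BYTES=53687091200
BLOCK_BYTES=262144

# policy_mem_bytes <bytes per cache block>
policy_mem_bytes() {
    nr_blocks=$((SSD_BYTES / BLOCK_BYTES))   # 204800 cache blocks
    echo $((nr_blocks * $1))
}

# policy_mem_bytes 88   # mq
# policy_mem_bytes 25   # smq
```

With these assumptions mq needs 18022400 bytes (about 17 MiB) and smq 5120000 bytes (about 4.9 MiB).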

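dm-cache configuration:

To give the slow backing HDD a fast cache, we set up caching on the SSD and create a virtual device that is used for caching.

1. Check the size (in bytes) of the SSD used for caching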

blockdev --getsize64 /dev/sdb1

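2. Calculate the metadata size from the SSD size

The metadata device needs about 4 MiB plus 16 bytes per cache block. With a cache block size of 512 sectors (512 * 512 = 262144 bytes, i.e. 256 KiB), the 50 GiB SSD (53687091200 bytes) holds 53687091200 / 262144 = 204800 cache blocks:

4194304 (4 MiB) + (16 * 204800) = 7471104 bytes

7471104 / 512 = 14592 sectors

So 14592 sectors (512-byte blocks) of the SSD are allocated to the metadata.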
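The calculation in step 2 can be scripted. This is a minimal POSIX shell sketch, assuming the same rule of thumb (4 MiB + 16 bytes per cache block) and 512-sector cache blocks; the function name metadata_sectors is hypothetical.

```shell
# Sketch: metadata size for dm-cache using the rule of thumb
# 4 MiB + 16 bytes per cache block.
# Assumption: cache block size = 512 sectors (256 KiB), as in the table used later.
BLOCK_SECTORS=512      # cache block size in 512-byte sectors
SECTOR_BYTES=512

# metadata_sectors <ssd size in bytes>
# Prints the number of 512-byte sectors to reserve for metadata.
metadata_sectors() {
    ssd_bytes=$1
    block_bytes=$((BLOCK_SECTORS * SECTOR_BYTES))   # 262144 bytes per cache block
    nr_blocks=$((ssd_bytes / block_bytes))          # cache blocks on the SSD
    meta_bytes=$((4194304 + 16 * nr_blocks))        # 4 MiB + 16 bytes per block
    # Round up to whole sectors.
    echo $(( (meta_bytes + SECTOR_BYTES - 1) / SECTOR_BYTES ))
}

# Usage: metadata_sectors "$(blockdev --getsize64 /dev/sdb1)"
```

For the 50 GiB SSD this prints 14592. The rounding up only matters when the byte count is not a multiple of 512; in the example it divides evenly.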

3. First create the ssd-metadata device (on the SSD)

dmsetup create ssd-metadata --table '0 14592 linear /dev/sdb1 0'

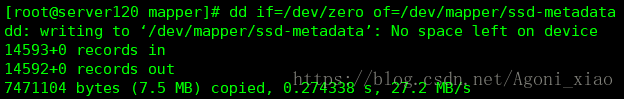

Once it has been created, zero out the new ssd-metadata device:

dd if=/dev/zero of=/dev/mapper/ssd-metadata

4. Create ssd-cache and check it

Calculate how many sectors remain on the SSD after the metadata area has been carved out:

(53687091200 /512) - 14592 = 104843008

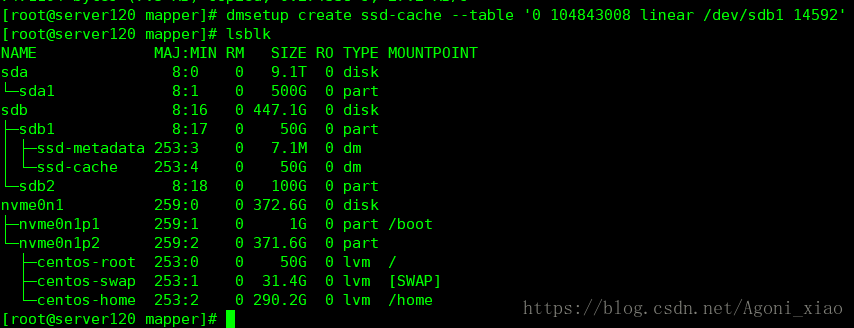

dmsetup create ssd-cache --table '0 104843008 linear /dev/sdb1 14592'
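Steps 3 and 4 split the SSD into two linear ranges: the metadata at the start and the cache blocks right after it. A small sketch that prints both tables from the SSD size in bytes and the metadata size in sectors; ssd_tables is a hypothetical helper name.

```shell
# Sketch: derive both linear tables used to split the SSD.
# Line 1: ssd-metadata table, line 2: ssd-cache table (starts after the metadata).
# ssd_tables <ssd device> <ssd size in bytes> <metadata sectors>
ssd_tables() {
    dev=$1
    total=$(( $2 / 512 ))      # SSD size in 512-byte sectors
    meta=$3
    echo "0 $meta linear $dev 0"
    echo "0 $((total - meta)) linear $dev $meta"
}
```

For example: dmsetup create ssd-cache --table "$(ssd_tables /dev/sdb1 53687091200 14592 | sed -n 2p)" reproduces the command above.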

After creating it, use lsblk to check ssd-metadata and ssd-cache.

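5. Get the number of sectors on the hard disk (blockdev --getsz reports the size in 512-byte sectors, the unit the table below expects)

blockdev --getsz /dev/sda1

Link the cache to the backing disk and create the virtual device hdd-origin: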

dmsetup create hdd-origin --table '0 1048576000 cache /dev/mapper/ssd-metadata /dev/mapper/ssd-cache /dev/sda1 512 1 writeback default 0'

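When creating the device you have to supply the policy, the cache device, the metadata device and other parameters. Below is the reference material I found on this: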


sudo blockdev --getsz /dev/sdd # the data device

In the example this command returns 8388608.

The following command sets up the cache:

sudo dmsetup create device_name --table '0 8388608 cache /dev/sde1 /dev/sde2 /dev/sdd 512 1 writeback default 0'

Meaning of the fields, from left to right:

0 : first sector of the device
8388608 : length of the device in sectors
cache : target type
/dev/sde1 : metadata device
/dev/sde2 : cache device
/dev/sdd : data device (origin)
512 : block size in sectors (512 * 512 bytes = 256 KiB)
1 : number of feature arguments
writeback : feature argument: write-cache mode (writeback or writethrough)
default : caching policy used
0 : number of policy arguments

The resulting device is then available at /dev/mapper/device_name and can be mounted.
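Putting these fields together, a small helper can assemble the table line. This is a sketch assuming what the example uses: start sector 0, a block size of 512 sectors, exactly one write-mode feature argument and no policy arguments. cache_table is a hypothetical name.

```shell
# Sketch: assemble a dm-cache table line from the fields listed above.
# Assumptions: start sector 0, 512-sector blocks, one write-mode
# feature argument, no policy arguments.
# cache_table <length> <metadata dev> <cache dev> <data dev> <writeback|writethrough> [policy]
cache_table() {
    case $5 in
        writeback|writethrough) ;;
        *) echo "unsupported mode: $5" >&2; return 1 ;;
    esac
    echo "0 $1 cache $2 $3 $4 512 1 $5 ${6:-default} 0"
}
```

For the setup in step 5 this would be used as: dmsetup create hdd-origin --table "$(cache_table 1048576000 /dev/mapper/ssd-metadata /dev/mapper/ssd-cache /dev/sda1 writeback)".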

6. Verify that the dm-cache device was created successfully

ls -l /dev/mapper/hdd-origin

dmsetup status /dev/mapper/hdd-origin

What each field means:

Device-mapper reports the current state of the cache through dmsetup status.

sudo dmsetup status /dev/mapper/dmcache_test

Example output:

0 8388608 cache 34/1280 1781667 761103 200117 225373 0 0 3567 1 1 writethrough 2 migration_threshold 2048 4 random_threshold 4 sequential_threshold 512

Meaning of the fields after '0 8388608 cache', from left to right:

34/1280 : <#used metadata blocks>/<#total metadata blocks>
1781667 : <#read hits>
761103 : <#read misses>
200117 : <#write hits>
225373 : <#write misses>
0 : <#demotions>
0 : <#promotions>
3567 : <#blocks in cache>
1 : <#dirty>
1 : <#feature args>
writethrough : feature args
2 : <#core args>
migration_threshold 2048 : core args
4 : <#policy args>
random_threshold 4 sequential_threshold 512 : policy args

The kernel documentation briefly explains the variables:[3]

#used metadata blocks : Number of metadata blocks used

#total metadata blocks : Total number of metadata blocks

#read hits : Number of times a READ bio has been mapped to the cache

#read misses : Number of times a READ bio has been mapped to the origin

#write hits : Number of times a WRITE bio has been mapped to the cache

#write misses : Number of times a WRITE bio has been mapped to the origin

#demotions : Number of times a block has been removed from the cache

#promotions : Number of times a block has been moved to the cache

#blocks in cache : Number of blocks resident in the cache

#dirty : Number of blocks in the cache that differ from the origin

#feature args : Number of feature args to follow

feature args : 'writethrough' (optional)

#core args : Number of core arguments (must be even)

core args : Key/value pairs for tuning the core e.g. migration_threshold

#policy args : Number of policy arguments to follow (must be even)

policy args : Key/value pairs e.g. 'sequential_threshold 1024'
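The raw counters are easier to judge as ratios. A small awk-based sketch; it assumes exactly the field layout of the example output above (read hits in field 5, dirty blocks in field 12). Newer kernels insert block-size and cache-usage fields, which shifts these positions. status_summary is a hypothetical name.

```shell
# Sketch: summarize a 'dmsetup status' cache line in the layout shown above:
# start length cache used/total rh rm wh wm demotions promotions blocks dirty ...
status_summary() {
    awk '$3 == "cache" {
        rh = $5; rm = $6; wh = $7; wm = $8
        rr = (rh + rm) > 0 ? 100 * rh / (rh + rm) : 0   # read hit ratio in percent
        wr = (wh + wm) > 0 ? 100 * wh / (wh + wm) : 0   # write hit ratio in percent
        printf "read hit ratio: %.1f%%\n", rr
        printf "write hit ratio: %.1f%%\n", wr
        printf "blocks in cache: %s\n", $11
        printf "dirty blocks: %s\n", $12
    }'
}

# Usage: sudo dmsetup status /dev/mapper/dmcache_test | status_summary
```

For the example output this reports a read hit ratio of 70.1% and a write hit ratio of 47.0%.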

7. Deactivate dm-cache

umount /dev/mapper/hdd-origin

dmsetup remove hdd-origin

dmsetup remove ssd-metadata

dmsetup remove ssd-cache

Reference material:

Stopping caching

When caching is stopped, all data in the cache must first be written to the data device. The following commands are used for this:

dmsetup suspend device_name

dmsetup reload device_name --table '0 8388608 cache /dev/sde1 /dev/sde2 /dev/sdd 512 0 cleaner 0'

dmsetup resume device_name

dmsetup wait device_name

After that, all data from the cache has been written to the data device, and the cache is removed:

dmsetup suspend device_name

dmsetup clear device_name

dmsetup remove device_name
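The cleaner table above is simply the original table with its feature and policy part replaced by '0 cleaner 0'. A hedged sketch that derives it from 'dmsetup table' output, assuming a single cache target line; cleaner_table is a hypothetical helper name.

```shell
# Sketch: build the 'cleaner' table from the current cache table.
# Keeps start, length, target, the three devices and the block size
# (fields 1-7) and replaces features and policy with '0 cleaner 0'.
cleaner_table() {
    awk '$3 == "cache" { print $1, $2, $3, $4, $5, $6, $7, "0 cleaner 0" }'
}

# Usage (same sequence as above):
#   dmsetup suspend device_name
#   dmsetup reload device_name --table "$(dmsetup table device_name | cleaner_table)"
#   dmsetup resume device_name
#   dmsetup wait device_name
```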

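Finally, lsblk shows that everything created for dm-cache is gone, and hdd-origin no longer exists under /dev/mapper/. If you want to be sure, reboot and run lsblk again to check that everything has been removed.

The write policy can be switched as follows: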

We can see the current write mode with 'dmsetup status' on the top level object, although the output is what they call somewhat hard to interpret:

# dmsetup status testing-test
0 10485760 cache 8 259/128000 128 4412/81920 16168 6650 39569 143311 0 0 0 1 writeback 2 migration_threshold 2048 mq 10 random_threshold 4 sequential_threshold 512 discard_promote_adjustment 1 read_promote_adjustment 4 write_promote_adjustment 8

The important bit is '1 writeback'. This says that the cache is in the potentially dangerous writeback mode. To change the write mode we must redefine the cache, which is a little bit alarming and also requires temporarily suspending and unsuspending the device; the latter may have impacts if, for example, you are actually using it for a mounted filesystem at the time.

DM devices are defined through what the dmsetup manpage describes as 'a table that specifies a target for each sector in the logical device'. Fortunately the tables involved are relatively simple and better yet, we can get dmsetup to give us a starting point:

# dmsetup table testing-test
0 10485760 cache 253:3 253:2 253:4 128 0 default 0

To change the cache mode, we reload an altered table and then suspend and resume the device to activate our newly loaded table. Here is the new table; the only change is that the original ending '0 default 0' becomes '1 writethrough default 0':

# dmsetup reload --table '0 10485760 cache 253:3 253:2 253:4 128 1 writethrough default 0' testing-test
# dmsetup suspend testing-test
# dmsetup resume testing-test

At this point you can rerun 'dmsetup status' to see that the cache device has changed to writethrough.

So let's talk about the DM table we (re)created here. The first two numbers are the logical sector range and the rest of it describes the target specification for that range. The format of this specification is, to quote the big comment in the kernel's drivers/md/dm-cache-target.c:

cache <metadata dev> <cache dev> <origin dev> <block size>
      <#feature args> [<feature arg>]*
      <policy> <#policy args> [<policy arg>]*

The original table's ending of '0 default 0' thus meant 'no feature arguments, default policy, no policy arguments'. Our new version of '1 writethrough default 0' is a change to '1 feature argument of writethrough, still the default policy, no policy arguments'. Also, if you're changing from writethrough back to writeback you don't end the table with '1 writeback default 0' because it turns out that writeback isn't a feature, it's just the default state. So you write the end of the table as '0 default 0' (as it was initially here).
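The table edit described above can be scripted. This sketch follows the author's convention that writeback is written as zero feature arguments, and it assumes the write mode is the only feature in the table (any other feature arguments would be dropped). set_cache_mode is a hypothetical helper name.

```shell
# Sketch: rewrite the <#feature args> [<feature arg>]* part of a cache table.
#   writethrough -> '1 writethrough'
#   writeback    -> '0' (no feature args, the default state)
# Assumption: any other feature arguments in the table are dropped.
set_cache_mode() {
    case $1 in
        writeback|writethrough) ;;
        *) echo "unsupported mode: $1" >&2; return 1 ;;
    esac
    awk -v mode="$1" '$3 == "cache" {
        nf = $8                                      # <#feature args>
        out = $1
        for (i = 2; i <= 7; i++) out = out " " $i    # range, target, devices, block size
        if (mode == "writethrough") out = out " 1 writethrough"
        else out = out " 0"
        for (i = 9 + nf; i <= NF; i++) out = out " " $i   # <policy> <#policy args> [args]
        print out
    }'
}
```

For example, dmsetup table testing-test | set_cache_mode writethrough prints the table used in the reload command above.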

Now it's time for the important disclaimers. The first disclaimer is that I'm not sure what happens to any dirty blocks on your cache device if you switch from writeback to writethrough mode. I assume that they still get flushed back to your real device and that this happens reasonably fast, but I can't prove it from reading the kernel source or my own tests and I can't find any documentation. At the moment I would call this caveat emptor until I know more. In fact I'm not truly confident what happens if you switch between writethrough and writeback in general.

(I do see some indications that there is a flush and it is rapid, but I feel very nervous about saying anything definite until I'm sure of things.)

The second disclaimer is that at the moment the Fedora 20 LVM cannot set up writethrough cache LVs. You can tell it to do this and it will appear to have succeeded, but the actual cache device as created at the DM layer will be writeback. This issue is what prompted my whole investigation of this at the DM level. I have filed Fedora bug #1135639 about this, although I expect it's an upstream issue.

The third disclaimer is that all of this is as of Fedora 20 and its 3.15.10-200.fc20 kernel (on 64-bit x86, in specific). All of this may change over time and probably will, as I doubt that the kernel people consider very much of this to be a stable interface.

Given all of the uncertainties involved, I don't plan to consider using LVM caching until LVM can properly create writethrough caches. Apart from the hassle involved, I'm just not happy with converting live dm-cache setups from one mode to the other right now, not unless someone who really knows this system can tell us more about what's really going on and so on.

(A great deal of my basic understanding of dmsetup usage comes from Kyle Manna's entry SSD caching using dm-cache tutorial.)

Sidebar: forcing a flush of the cache

In theory, if you want to be more sure that the cache is clean in a switch between writeback and writethrough you can explicitly force a cache clean by switching to the cleaner policy first and waiting for it to stabilize.

# dmsetup reload --table '0 10485760 cache 253:3 253:2 253:4 128 0 cleaner 0' testing-test
# dmsetup suspend testing-test
# dmsetup resume testing-test
# dmsetup wait testing-test

I don't know how long you have to wait for this to be safe. If the cache LV (or other dm-cache device) is quiescent at the user level, I assume that you should be okay when IO to the actual devices goes quiet. But, as before, caveat emptor applies; this is not really well documented.
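One way to make the wait less of a guess is to watch the <#dirty> counter in 'dmsetup status' until it reaches zero. This sketch assumes the status layout of the Fedora 20 example above, where <#dirty> is field 14; other kernel versions may place it elsewhere. dirty_blocks and wait_clean are hypothetical helper names.

```shell
# Sketch: read <#dirty> from a 'dmsetup status' cache line.
# Assumption: layout as in the Fedora 20 example, <#dirty> is field 14.
dirty_blocks() {
    awk '$3 == "cache" { print $14 }'
}

# wait_clean <dm device name> [poll interval in seconds]
# Polls until the dirty count is 0; returns 1 if no count can be read.
wait_clean() {
    while :; do
        dirty=$(dmsetup status "$1" | dirty_blocks)
        [ -n "$dirty" ] || return 1
        [ "$dirty" = "0" ] && return 0
        echo "$1: $dirty dirty blocks left" >&2
        sleep "${2:-5}"
    done
}

# Usage: wait_clean testing-test 2
```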

Sidebar: the output of 'dmsetup status'

The output of 'dmsetup status' is mostly explained in a comment in front of the cache_status() function in drivers/md/dm-cache-target.c. To save people looking for it, I will quote it here:

<metadata block size> <#used metadata blocks>/<#total metadata blocks>
<cache block size> <#used cache blocks>/<#total cache blocks>
<#read hits> <#read misses> <#write hits> <#write misses>
<#demotions> <#promotions> <#dirty>
<#features> <features>*
<#core args> <core args>
<policy name> <#policy args> <policy args>*
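Because the tail of the status line consists of count-prefixed lists, it can be decoded by reading each count and skipping that many words. This sketch assumes <#features> is field 15, as in the Fedora 20 status line quoted earlier; status_tail is a hypothetical name.

```shell
# Sketch: decode the variable-length tail of a 'dmsetup status' cache line
# by walking the count-prefixed lists, starting at <#features> (field 15).
status_tail() {
    awk '$3 == "cache" {
        i = 15
        feats = ""
        for (j = 1; j <= $i; j++) feats = feats " " $(i + j)
        i += $i + 1                  # skip <#features> and the features
        core = ""
        for (j = 1; j <= $i; j++) core = core " " $(i + j)
        i += $i + 1                  # skip <#core args> and the core args
        printf "features:%s\n", feats
        printf "core args:%s\n", core
        printf "policy: %s (%d args)\n", $i, $(i + 1)
    }'
}

# Usage: dmsetup status testing-test | status_tail
```

On the status line quoted earlier this reports the writeback feature, migration_threshold 2048 as the core arguments, and the mq policy with 10 policy arguments.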

Unlike with the definition table, 'writeback' is considered a feature here. By cross-referencing this with the earlier 'dmsetup status' output we can discover that mq is the default policy and it actually has a number of arguments, the exact meanings of which I haven't researched.