- nosql数据库技术与应用知识点

皆过客,揽星河

NoSQLnosql数据库大数据数据分析数据结构非关系型数据库

Nosql知识回顾大数据处理流程数据采集(flume、爬虫、传感器)数据存储(本门课程NoSQL所处的阶段)Hdfs、MongoDB、HBase等数据清洗(入仓)Hive等数据处理、分析(Spark、Flink等)数据可视化数据挖掘、机器学习应用(Python、SparkMLlib等)大数据时代存储的挑战(三高)高并发(同一时间很多人访问)高扩展(要求随时根据需求扩展存储)高效率(要求读写速度快)

- ES聚合分析原理与代码实例讲解

光剑书架上的书

大厂Offer收割机面试题简历程序员读书硅基计算碳基计算认知计算生物计算深度学习神经网络大数据AIGCAGILLMJavaPython架构设计Agent程序员实现财富自由

ES聚合分析原理与代码实例讲解1.背景介绍1.1问题的由来在大规模数据分析场景中,特别是在使用Elasticsearch(ES)进行数据存储和检索时,聚合分析成为了一个至关重要的功能。聚合分析允许用户对数据集进行细分和分组,以便深入探索数据的结构和模式。这在诸如实时监控、日志分析、业务洞察等领域具有广泛的应用。1.2研究现状目前,ES聚合分析已经成为现代大数据平台的核心组件之一。它支持多种类型的聚

- WebMagic:强大的Java爬虫框架解析与实战

Aaron_945

Javajava爬虫开发语言

文章目录引言官网链接WebMagic原理概述基础使用1.添加依赖2.编写PageProcessor高级使用1.自定义Pipeline2.分布式抓取优点结论引言在大数据时代,网络爬虫作为数据收集的重要工具,扮演着不可或缺的角色。Java作为一门广泛使用的编程语言,在爬虫开发领域也有其独特的优势。WebMagic是一个开源的Java爬虫框架,它提供了简单灵活的API,支持多线程、分布式抓取,以及丰富的

- 免费的GPT可在线直接使用(一键收藏)

kkai人工智能

gpt

1、LuminAI(https://kk.zlrxjh.top)LuminAI标志着一款融合了星辰大数据模型与文脉深度模型的先进知识增强型语言处理系统,旨在自然语言处理(NLP)的技术开发领域发光发热。此系统展现了卓越的语义把握与内容生成能力,轻松驾驭多样化的自然语言处理任务。VisionAI在NLP界的应用领域广泛,能够胜任从机器翻译、文本概要撰写、情绪分析到问答等众多任务。通过对大量文本数据的

- 如何利用大数据与AI技术革新相亲交友体验

h17711347205

回归算法安全系统架构交友小程序

在数字化时代,大数据和人工智能(AI)技术正逐渐革新相亲交友体验,为寻找爱情的过程带来前所未有的变革(编辑h17711347205)。通过精准分析和智能匹配,这些技术能够极大地提高相亲交友系统的效率和用户体验。大数据的力量大数据技术能够收集和分析用户的行为模式、偏好和互动数据,为相亲交友系统提供丰富的信息资源。通过分析用户的搜索历史、浏览记录和点击行为,系统能够深入了解用户的兴趣和需求,从而提供更

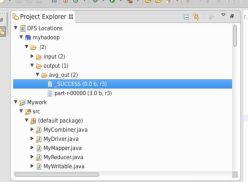

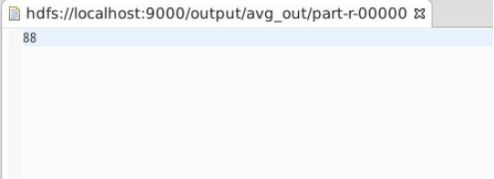

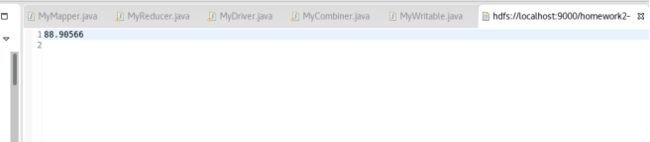

- 浅谈MapReduce

Android路上的人

Hadoop分布式计算mapreduce分布式框架hadoop

从今天开始,本人将会开始对另一项技术的学习,就是当下炙手可热的Hadoop分布式就算技术。目前国内外的诸多公司因为业务发展的需要,都纷纷用了此平台。国内的比如BAT啦,国外的在这方面走的更加的前面,就不一一列举了。但是Hadoop作为Apache的一个开源项目,在下面有非常多的子项目,比如HDFS,HBase,Hive,Pig,等等,要先彻底学习整个Hadoop,仅仅凭借一个的力量,是远远不够的。

- 未来软件市场是怎么样的?做开发的生存空间如何?

cesske

软件需求

目录前言一、未来软件市场的发展趋势二、软件开发人员的生存空间前言未来软件市场是怎么样的?做开发的生存空间如何?一、未来软件市场的发展趋势技术趋势:人工智能与机器学习:随着技术的不断成熟,人工智能将在更多领域得到应用,如智能客服、自动驾驶、智能制造等,这将极大地推动软件市场的增长。云计算与大数据:云计算服务将继续普及,大数据技术的应用也将更加广泛。企业将更加依赖云计算和大数据来优化运营、提升效率,并

- Hadoop

傲雪凌霜,松柏长青

后端大数据hadoop大数据分布式

ApacheHadoop是一个开源的分布式计算框架,主要用于处理海量数据集。它具有高度的可扩展性、容错性和高效的分布式存储与计算能力。Hadoop核心由四个主要模块组成,分别是HDFS(分布式文件系统)、MapReduce(分布式计算框架)、YARN(资源管理)和HadoopCommon(公共工具和库)。1.HDFS(HadoopDistributedFileSystem)HDFS是Hadoop生

- Hadoop架构

henan程序媛

hadoop大数据分布式

一、案列分析1.1案例概述现在已经进入了大数据(BigData)时代,数以万计用户的互联网服务时时刻刻都在产生大量的交互,要处理的数据量实在是太大了,以传统的数据库技术等其他手段根本无法应对数据处理的实时性、有效性的需求。HDFS顺应时代出现,在解决大数据存储和计算方面有很多的优势。1.2案列前置知识点1.什么是大数据大数据是指无法在一定时间范围内用常规软件工具进行捕捉、管理和处理的大量数据集合,

- [转载] NoSQL简介

weixin_30325793

大数据数据库运维

摘自“百度百科”。NoSQL,泛指非关系型的数据库。随着互联网web2.0网站的兴起,传统的关系数据库在应付web2.0网站,特别是超大规模和高并发的SNS类型的web2.0纯动态网站已经显得力不从心,暴露了很多难以克服的问题,而非关系型的数据库则由于其本身的特点得到了非常迅速的发展。NoSQL数据库的产生就是为了解决大规模数据集合多重数据种类带来的挑战,尤其是大数据应用难题。虽然NoSQL流行语

- Kafka详细解析与应用分析

芊言芊语

kafka分布式

Kafka是一个开源的分布式事件流平台(EventStreamingPlatform),由LinkedIn公司最初采用Scala语言开发,并基于ZooKeeper协调管理。如今,Kafka已经被Apache基金会纳入其项目体系,广泛应用于大数据实时处理领域。Kafka凭借其高吞吐量、持久化、分布式和可靠性的特点,成为构建实时流数据管道和流处理应用程序的重要工具。Kafka架构Kafka的架构主要由

- 分享一个基于python的电子书数据采集与可视化分析 hadoop电子书数据分析与推荐系统 spark大数据毕设项目(源码、调试、LW、开题、PPT)

计算机源码社

Python项目大数据大数据pythonhadoop计算机毕业设计选题计算机毕业设计源码数据分析spark毕设

作者:计算机源码社个人简介:本人八年开发经验,擅长Java、Python、PHP、.NET、Node.js、Android、微信小程序、爬虫、大数据、机器学习等,大家有这一块的问题可以一起交流!学习资料、程序开发、技术解答、文档报告如需要源码,可以扫取文章下方二维码联系咨询Java项目微信小程序项目Android项目Python项目PHP项目ASP.NET项目Node.js项目选题推荐项目实战|p

- 疫情,疫情

东山草

2020年,疫情爆发,至今已近三年,反反复复,此起彼伏。不但没被消灭,还自我发展,从德尔塔到奥密克戎,与时俱进的变异着。去年11月,疫情之下,大数据800米范围内,都成为时空伴随者。“你的码儿有没有变颜色”“你绿码还是黄码”成为那段时间的流行语,当然少不了的还有全员核酸。段子手整出来一首歌:我走过你走过的路,这算不算相逢?我吹过你吹过的风,这算不算相拥?800米内我们不曾擦肩而过,你却要我14天相

- 在服务器计算节点中使用 jupyter Lab

ranshan567

程序人生

JupyterLab是一个基于网页的交互式开发环境,用于科学计算、数据分析和机器学.jupyterlab是jupyternotebook的下一代产品,集成了更多功能,使用起来更方便.在进行数据分析及可视化时,个人电脑不能满足大数据的分析需求,就需要用到高性能计算机集群资源,然而计算机集群的计算节点往往没有联网功能,所以在计算机集群中使用jupyterLab需要进行一些配置。具体的步骤如下:

- 大数据真实面试题---SQL

The博宇

大数据面试题——SQL大数据mysqlsql数据库bigdata

视频号数据分析组外包招聘笔试题时间限时45分钟完成。题目根据3张表表结构,写出具体求解的SQL代码(搞笑品类定义:视频分类或者视频创建者分类为“搞笑”)1、表创建语句:createtablet_user_video_action_d(dsint,user_idstring,video_idstring,action_typeint,`timestamp`bigint)rowformatdelimi

- hbase介绍

CrazyL-

云计算+大数据hbase

hbase是一个分布式的、多版本的、面向列的开源数据库hbase利用hadoophdfs作为其文件存储系统,提供高可靠性、高性能、列存储、可伸缩、实时读写、适用于非结构化数据存储的数据库系统hbase利用hadoopmapreduce来处理hbase、中的海量数据hbase利用zookeeper作为分布式系统服务特点:数据量大:一个表可以有上亿行,上百万列(列多时,插入变慢)面向列:面向列(族)的

- Flume:大规模日志收集与数据传输的利器

傲雪凌霜,松柏长青

后端大数据flume大数据

Flume:大规模日志收集与数据传输的利器在大数据时代,随着各类应用的不断增长,产生了海量的日志和数据。这些数据不仅对业务的健康监控至关重要,还可以通过深入分析,帮助企业做出更好的决策。那么,如何高效地收集、传输和存储这些海量数据,成为了一项重要的挑战。今天我们将深入探讨ApacheFlume,它是如何帮助我们应对这些挑战的。一、Flume概述ApacheFlume是一个分布式、可靠、可扩展的日志

- 云服务业界动态简报-20180128

Captain7

一、青云青云QingCloud推出深度学习平台DeepLearningonQingCloud,包含了主流的深度学习框架及数据科学工具包,通过QingCloudAppCenter一键部署交付,可以让算法工程师和数据科学家快速构建深度学习开发环境,将更多的精力放在模型和算法调优。二、腾讯云1.腾讯云正式发布腾讯专有云TCE(TencentCloudEnterprise)矩阵,涵盖企业版、大数据版、AI

- 大数据毕业设计hadoop+spark+hive知识图谱租房数据分析可视化大屏 租房推荐系统 58同城租房爬虫 房源推荐系统 房价预测系统 计算机毕业设计 机器学习 深度学习 人工智能

2401_84572577

程序员大数据hadoop人工智能

做了那么多年开发,自学了很多门编程语言,我很明白学习资源对于学一门新语言的重要性,这些年也收藏了不少的Python干货,对我来说这些东西确实已经用不到了,但对于准备自学Python的人来说,或许它就是一个宝藏,可以给你省去很多的时间和精力。别在网上瞎学了,我最近也做了一些资源的更新,只要你是我的粉丝,这期福利你都可拿走。我先来介绍一下这些东西怎么用,文末抱走。(1)Python所有方向的学习路线(

- 架构评审的自动化与人工智能: 如何提高效率

光剑书架上的书

架构自动化人工智能运维

1.背景介绍架构评审是软件开发过程中的一个关键环节,它旨在确保软件架构的质量、可维护性和可扩展性。传统的架构评审通常是由人工进行,需要大量的时间和精力。随着大数据技术和人工智能的发展,自动化和人工智能技术已经开始应用于架构评审,从而提高评审的效率和准确性。在本文中,我们将讨论如何通过自动化和人工智能技术来提高架构评审的效率。我们将从以下几个方面进行讨论:背景介绍核心概念与联系核心算法原理和具体操作

- 【数字化供应链】数字化供应链架构、全景管理、全流程贯通方案

数字化建设方案

数字化转型数据治理主数据数据仓库供应链数字仓储智慧物流智慧仓储物流园区架构微服务数据挖掘大数据人工智能

原文《数字化供应链架构、全景管理、全流程贯通方案》PPT格式。主要从供应链管理全景、智慧供应链建设总体目标、供应链总体业务流程、供应链总体功能架构、供应链总体技术架构、供应链全流程贯通、供应链全领域管理、供应链数据数据分析、供应链决策中台等进行建设。本文仅对主要内容进行介绍。来源网络公开渠道,旨在交流学习,如有侵权联系速删,更多参考公众号:优享智库基于先进IT技术、大数据能力、物联网应用、区块链平

- 80

鑫_259b

科普一个谈恋爱的方法。在以前,谈恋爱千难万难,就难在对对方不知底细,不知道对方希望自己是一个怎样的人,要耗费大量的时间去试探、再磨合,往往会因为一些小事一些细节,满盘皆输。在一个信息化的时代,在一个大数据近乎变成了流行语的时代,我们要跟上时代的步伐,通过大数据,去寻找异性最希望自己展现出来的形象是什么,才可以在爱情的道路上少走弯路。那这个大数据怎么操作呢?上街发问卷?问别人的择偶标准?一来会被打死

- 解锁企业潜能,Vatee万腾平台引领智能新纪元

自媒体经济说

其他

在数字化转型的浪潮中,企业正站在一个前所未有的十字路口,面对着前所未有的机遇与挑战。解锁企业内在潜能,实现跨越式发展,已成为众多企业的共同追求。而Vatee万腾平台,作为智能科技的先锋,正以其强大的智能赋能能力,引领企业步入一个全新的智能纪元。Vatee万腾平台,是一个集成了人工智能、大数据、云计算等前沿技术的综合性智能服务平台。它不仅仅是一个技术工具,更是企业转型升级的加速器,能够深入企业运营的

- 释放“AI+”新质生产力,深算院如何“把大数据变小”?

YashanDB

YashanDB国产数据库数据库数据库大数据

近期,南都·湾财社推出《新质·中国造》栏目,深入千行百业,遍访湾区企业,解锁湾区新质生产力,共探高质量发展之道。本期对话深圳计算科学研究院YashanDB首席技术官陈志标,探讨国产数据库如何实现创新突围,抢抓数字经济时代的新机遇。以下是专访内容:如何应对AI时代所面临的算力挑战?南都·湾财社:数据、算力和算法是发展人工智能的三要素,深算院做了怎样的前瞻性布局?陈志标:今年,政府工作报告中首次提及开

- 数字化智能工厂数字化供应链架构、全景管理、全流程贯通方案

数字化建设方案

智能制造数字工厂制造业数字化转型工业互联网架构

随着信息技术的飞速发展,数字化转型已成为制造企业提升竞争力的关键途径。数字化智能工厂通过集成先进的物联网(IoT)、大数据、云计算、人工智能(AI)等技术,实现了生产过程的智能化、供应链管理的精准化及决策的科学化。本方案旨在构建一套完善的数字化供应链架构,实现全景管理、全流程贯通、智慧化升级,以数据为驱动,强化技术支撑与安全管理体系,推动企业向智能制造迈进。一、数字化供应链架构1.**集成化平台构

- 日记——我的歌单

静若小猴

又到一年一度大数据汇总的时候了,听歌已经成为很多人生活里的一种乐趣。春夏秋冬,我们都有自己喜欢的歌,歌词歌曲唱出沃尔玛你的心声。还记得大学时候最喜欢听的《春天里》,我有一天单曲回放了30遍,总觉得听着仿佛看到自己声音。还有的歌,初听不知曲中意,再听已经是曲终人,听着歌流泪,听着歌入睡……还记得那些年少的故事吗,总觉得自己才是故事外的人,却不是自己已经入歌。一段时间会喜欢一个人的音乐,一段时间会沉静

- Linux dmesg命令:显示开机信息

fafadsj666

linux数据库数据挖掘机器学习大数据

通过学习《Linux启动管理》一章可以知道,在系统启动过程中,内核还会进行一次系统检测(第一次是BIOS进行加测),但是检测的过程不是没有显示在屏幕上,就是会快速的在屏幕上一闪而过那么,如果开机时来不及查看相关信息,我们是否可以在开机后查看呢?答案是肯定的,使用dmesg命令就可以。无论是系统启动过程中,还是系统运行过程中,只要是内核产生的信息,都会被存储在系统缓冲区中,已经为大家精心准备了大数据

- 大数据新视界 --大数据大厂之揭秘大数据时代 Excel 魔法:大厂数据分析师进阶秘籍

青云交

大数据新视界Excel数据分析函数公式数据透视表图表功能规划求解数据分析工具库大数据新视界数据库

亲爱的朋友们,热烈欢迎你们来到青云交的博客!能与你们在此邂逅,我满心欢喜,深感无比荣幸。在这个瞬息万变的时代,我们每个人都在苦苦追寻一处能让心灵安然栖息的港湾。而我的博客,正是这样一个温暖美好的所在。在这里,你们不仅能够收获既富有趣味又极为实用的内容知识,还可以毫无拘束地畅所欲言,尽情分享自己独特的见解。我真诚地期待着你们的到来,愿我们能在这片小小的天地里共同成长,共同进步。本博客的精华专栏:Ja

- 大数据新视界 --大数据大厂之数据挖掘入门:用 R 语言开启数据宝藏的探索之旅

青云交

大数据新视界数据库大数据数据挖掘R语言算法案例未来趋势应用场景学习建议大数据新视界

亲爱的朋友们,热烈欢迎你们来到青云交的博客!能与你们在此邂逅,我满心欢喜,深感无比荣幸。在这个瞬息万变的时代,我们每个人都在苦苦追寻一处能让心灵安然栖息的港湾。而我的博客,正是这样一个温暖美好的所在。在这里,你们不仅能够收获既富有趣味又极为实用的内容知识,还可以毫无拘束地畅所欲言,尽情分享自己独特的见解。我真诚地期待着你们的到来,愿我们能在这片小小的天地里共同成长,共同进步。本博客的精华专栏:Ja

- 高职人工智能训练师边缘计算实训室解决方案

武汉唯众智创

人工智能训练师边缘计算实训室人工智能训练师实训室边缘计算实训室

一、引言随着物联网(IoT)、大数据、人工智能(AI)等技术的飞速发展,计算需求日益复杂和多样化。传统的云计算模式虽在一定程度上满足了这些需求,但在处理海量数据、保障实时性与安全性、提升计算效率等方面仍面临诸多挑战。在此背景下,边缘计算作为一种新兴的计算模式应运而生,通过将计算能力推向数据生成或用户所在的网络边缘,显著降低了数据传输的延迟,提升了处理效率,并增强了数据安全性。针对高等职业院校的人工

- Spring4.1新特性——综述

jinnianshilongnian

spring 4.1

目录

Spring4.1新特性——综述

Spring4.1新特性——Spring核心部分及其他

Spring4.1新特性——Spring缓存框架增强

Spring4.1新特性——异步调用和事件机制的异常处理

Spring4.1新特性——数据库集成测试脚本初始化

Spring4.1新特性——Spring MVC增强

Spring4.1新特性——页面自动化测试框架Spring MVC T

- Schema与数据类型优化

annan211

数据结构mysql

目前商城的数据库设计真是一塌糊涂,表堆叠让人不忍直视,无脑的架构师,说了也不听。

在数据库设计之初,就应该仔细揣摩可能会有哪些查询,有没有更复杂的查询,而不是仅仅突出

很表面的业务需求,这样做会让你的数据库性能成倍提高,当然,丑陋的架构师是不会这样去考虑问题的。

选择优化的数据类型

1 更小的通常更好

更小的数据类型通常更快,因为他们占用更少的磁盘、内存和cpu缓存,

- 第一节 HTML概要学习

chenke

htmlWebcss

第一节 HTML概要学习

1. 什么是HTML

HTML是英文Hyper Text Mark-up Language(超文本标记语言)的缩写,它规定了自己的语法规则,用来表示比“文本”更丰富的意义,比如图片,表格,链接等。浏览器(IE,FireFox等)软件知道HTML语言的语法,可以用来查看HTML文档。目前互联网上的绝大部分网页都是使用HTML编写的。

打开记事本 输入一下内

- MyEclipse里部分习惯的更改

Array_06

eclipse

继续补充中----------------------

1.更改自己合适快捷键windows-->prefences-->java-->editor-->Content Assist-->

Activation triggers for java的右侧“.”就可以改变常用的快捷键

选中 Text

- 近一个月的面试总结

cugfy

面试

本文是在学习中的总结,欢迎转载但请注明出处:http://blog.csdn.net/pistolove/article/details/46753275

前言

打算换个工作,近一个月面试了不少的公司,下面将一些面试经验和思考分享给大家。另外校招也快要开始了,为在校的学生提供一些经验供参考,希望都能找到满意的工作。

- HTML5一个小迷宫游戏

357029540

html5

通过《HTML5游戏开发》摘抄了一个小迷宫游戏,感觉还不错,可以画画,写字,把摘抄的代码放上来分享下,喜欢的同学可以拿来玩玩!

<html>

<head>

<title>创建运行迷宫</title>

<script type="text/javascript"

- 10步教你上传githib数据

张亚雄

git

官方的教学还有其他博客里教的都是给懂的人说得,对已我们这样对我大菜鸟只能这么来锻炼,下面先不玩什么深奥的,先暂时用着10步干净利索。等玩顺溜了再用其他的方法。

操作过程(查看本目录下有哪些文件NO.1)ls

(跳转到子目录NO.2)cd+空格+目录

(继续NO.3)ls

(匹配到子目录NO.4)cd+ 目录首写字母+tab键+(首写字母“直到你所用文件根就不再按TAB键了”)

(查看文件

- MongoDB常用操作命令大全

adminjun

mongodb操作命令

成功启动MongoDB后,再打开一个命令行窗口输入mongo,就可以进行数据库的一些操作。输入help可以看到基本操作命令,只是MongoDB没有创建数据库的命令,但有类似的命令 如:如果你想创建一个“myTest”的数据库,先运行use myTest命令,之后就做一些操作(如:db.createCollection('user')),这样就可以创建一个名叫“myTest”的数据库。

一

- bat调用jar包并传入多个参数

aijuans

下面的主程序是通过eclipse写的:

1.在Main函数接收bat文件传递的参数(String[] args)

如: String ip =args[0]; String user=args[1]; &nbs

- Java中对类的主动引用和被动引用

ayaoxinchao

java主动引用对类的引用被动引用类初始化

在Java代码中,有些类看上去初始化了,但其实没有。例如定义一定长度某一类型的数组,看上去数组中所有的元素已经被初始化,实际上一个都没有。对于类的初始化,虚拟机规范严格规定了只有对该类进行主动引用时,才会触发。而除此之外的所有引用方式称之为对类的被动引用,不会触发类的初始化。虚拟机规范严格地规定了有且仅有四种情况是对类的主动引用,即必须立即对类进行初始化。四种情况如下:1.遇到ne

- 导出数据库 提示 outfile disabled

BigBird2012

mysql

在windows控制台下,登陆mysql,备份数据库:

mysql>mysqldump -u root -p test test > D:\test.sql

使用命令 mysqldump 格式如下: mysqldump -u root -p *** DBNAME > E:\\test.sql。

注意:执行该命令的时候不要进入mysql的控制台再使用,这样会报

- Javascript 中的 && 和 ||

bijian1013

JavaScript&&||

准备两个对象用于下面的讨论

var alice = {

name: "alice",

toString: function () {

return this.name;

}

}

var smith = {

name: "smith",

- [Zookeeper学习笔记之四]Zookeeper Client Library会话重建

bit1129

zookeeper

为了说明问题,先来看个简单的示例代码:

package com.tom.zookeeper.book;

import com.tom.Host;

import org.apache.zookeeper.WatchedEvent;

import org.apache.zookeeper.ZooKeeper;

import org.apache.zookeeper.Wat

- 【Scala十一】Scala核心五:case模式匹配

bit1129

scala

package spark.examples.scala.grammars.caseclasses

object CaseClass_Test00 {

def simpleMatch(arg: Any) = arg match {

case v: Int => "This is an Int"

case v: (Int, String)

- 运维的一些面试题

yuxianhua

linux

1、Linux挂载Winodws共享文件夹

mount -t cifs //1.1.1.254/ok /var/tmp/share/ -o username=administrator,password=yourpass

或

mount -t cifs -o username=xxx,password=xxxx //1.1.1.1/a /win

- Java lang包-Boolean

BrokenDreams

boolean

Boolean类是Java中基本类型boolean的包装类。这个类比较简单,直接看源代码吧。

public final class Boolean implements java.io.Serializable,

- 读《研磨设计模式》-代码笔记-命令模式-Command

bylijinnan

java设计模式

声明: 本文只为方便我个人查阅和理解,详细的分析以及源代码请移步 原作者的博客http://chjavach.iteye.com/

import java.util.ArrayList;

import java.util.Collection;

import java.util.List;

/**

* GOF 在《设计模式》一书中阐述命令模式的意图:“将一个请求封装

- matlab下GPU编程笔记

cherishLC

matlab

不多说,直接上代码

gpuDevice % 查看系统中的gpu,,其中的DeviceSupported会给出matlab支持的GPU个数。

g=gpuDevice(1); %会清空 GPU 1中的所有数据,,将GPU1 设为当前GPU

reset(g) %也可以清空GPU中数据。

a=1;

a=gpuArray(a); %将a从CPU移到GPU中

onGP

- SVN安装过程

crabdave

SVN

SVN安装过程

subversion-1.6.12

./configure --prefix=/usr/local/subversion --with-apxs=/usr/local/apache2/bin/apxs --with-apr=/usr/local/apr --with-apr-util=/usr/local/apr --with-openssl=/

- sql 行列转换

daizj

sql行列转换行转列列转行

行转列的思想是通过case when 来实现

列转行的思想是通过union all 来实现

下面具体例子:

假设有张学生成绩表(tb)如下:

Name Subject Result

张三 语文 74

张三 数学 83

张三 物理 93

李四 语文 74

李四 数学 84

李四 物理 94

*/

/*

想变成

姓名 &

- MySQL--主从配置

dcj3sjt126com

mysql

linux下的mysql主从配置: 说明:由于MySQL不同版本之间的(二进制日志)binlog格式可能会不一样,因此最好的搭配组合是Master的MySQL版本和Slave的版本相同或者更低, Master的版本肯定不能高于Slave版本。(版本向下兼容)

mysql1 : 192.168.100.1 //master mysq

- 关于yii 数据库添加新字段之后model类的修改

dcj3sjt126com

Model

rules:

array('新字段','safe','on'=>'search')

1、array('新字段', 'safe')//这个如果是要用户输入的话,要加一下,

2、array('新字段', 'numerical'),//如果是数字的话

3、array('新字段', 'length', 'max'=>100),//如果是文本

1、2、3适当的最少要加一条,新字段才会被

- sublime text3 中文乱码解决

dyy_gusi

Sublime Text

sublime text3中文乱码解决

原因:缺少转换为UTF-8的插件

目的:安装ConvertToUTF8插件包

第一步:安装能自动安装插件的插件,百度“Codecs33”,然后按照步骤可以得到以下一段代码:

import urllib.request,os,hashlib; h = 'eb2297e1a458f27d836c04bb0cbaf282' + 'd0e7a30980927

- 概念了解:CGI,FastCGI,PHP-CGI与PHP-FPM

geeksun

PHP

CGI

CGI全称是“公共网关接口”(Common Gateway Interface),HTTP服务器与你的或其它机器上的程序进行“交谈”的一种工具,其程序须运行在网络服务器上。

CGI可以用任何一种语言编写,只要这种语言具有标准输入、输出和环境变量。如php,perl,tcl等。 FastCGI

FastCGI像是一个常驻(long-live)型的CGI,它可以一直执行着,只要激活后,不

- Git push 报错 "error: failed to push some refs to " 解决

hongtoushizi

git

Git push 报错 "error: failed to push some refs to " .

此问题出现的原因是:由于远程仓库中代码版本与本地不一致冲突导致的。

由于我在第一次git pull --rebase 代码后,准备push的时候,有别人往线上又提交了代码。所以出现此问题。

解决方案:

1: git pull

2:

- 第四章 Lua模块开发

jinnianshilongnian

nginxlua

在实际开发中,不可能把所有代码写到一个大而全的lua文件中,需要进行分模块开发;而且模块化是高性能Lua应用的关键。使用require第一次导入模块后,所有Nginx 进程全局共享模块的数据和代码,每个Worker进程需要时会得到此模块的一个副本(Copy-On-Write),即模块可以认为是每Worker进程共享而不是每Nginx Server共享;另外注意之前我们使用init_by_lua中初

- java.lang.reflect.Proxy

liyonghui160com

1.简介

Proxy 提供用于创建动态代理类和实例的静态方法

(1)动态代理类的属性

代理类是公共的、最终的,而不是抽象的

未指定代理类的非限定名称。但是,以字符串 "$Proxy" 开头的类名空间应该为代理类保留

代理类扩展 java.lang.reflect.Proxy

代理类会按同一顺序准确地实现其创建时指定的接口

- Java中getResourceAsStream的用法

pda158

java

1.Java中的getResourceAsStream有以下几种: 1. Class.getResourceAsStream(String path) : path 不以’/'开头时默认是从此类所在的包下取资源,以’/'开头则是从ClassPath根下获取。其只是通过path构造一个绝对路径,最终还是由ClassLoader获取资源。 2. Class.getClassLoader.get

- spring 包官方下载地址(非maven)

sinnk

spring

SPRING官方网站改版后,建议都是通过 Maven和Gradle下载,对不使用Maven和Gradle开发项目的,下载就非常麻烦,下给出Spring Framework jar官方直接下载路径:

http://repo.springsource.org/libs-release-local/org/springframework/spring/

s

- Oracle学习笔记(7) 开发PLSQL子程序和包

vipbooks

oraclesql编程

哈哈,清明节放假回去了一下,真是太好了,回家的感觉真好啊!现在又开始出差之旅了,又好久没有来了,今天继续Oracle的学习!

这是第七章的学习笔记,学习完第六章的动态SQL之后,开始要学习子程序和包的使用了……,希望大家能多给俺一些支持啊!

编程时使用的工具是PLSQL