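HashMap in Depth

Table of contents

- 1. Introduction
- 2. HashMap in Java 7
- 2.1 Question 1: Why is the initial capacity a power of two?
- 2.2 Question 2: Why can a "deadlock" occur?
- 2.3 Question 3: A security problem: long chains degrade performance
- 3. HashMap optimizations in Java 8
- 3.1 Question 1: Why is the list-to-red-black-tree threshold 8 (and the array length at least 64)?
- 3.2 Question 2: The put and resize methods
- 3.3 Question 3: The get method
- 4. Interview question summary
- 4.1 Question 1: How HashMap is implemented
- 4.2 Question 2: HashMap resizing, get, and put
- 4.3 Question 3: Differences between HashMap in JDK 1.7 and JDK 1.8
- 4.4 Question 4: Concurrency issues
- 4.5 Question 5: What do you typically use as a HashMap key?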

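1. Introduction

- At its core: an array of buckets indexed by hash value, with a linked list in each bucket

- Average O(1) lookup, insertion, and deletion (because array reads and writes are O(1))

- The drawback: hash collisions occur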
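To make the bucket-plus-chain idea concrete, here is a minimal sketch of a chained hash table. It assumes a fixed array of 16 buckets and never resizes; the class name ChainedMapSketch and its methods are hypothetical and are not the JDK implementation.

```java
import java.util.Objects;

// Minimal, non-resizing chained hash table; illustrative only.
class ChainedMapSketch<K, V> {
    private static final class Node<K, V> {
        final K key;
        V value;
        Node<K, V> next;

        Node(K key, V value, Node<K, V> next) {
            this.key = key;
            this.value = value;
            this.next = next;
        }
    }

    @SuppressWarnings("unchecked")
    private final Node<K, V>[] buckets = (Node<K, V>[]) new Node[16];
    private int size;

    private int indexOf(Object key) {
        // 16 is a power of two, so masking with 15 always yields 0..15.
        return (key == null) ? 0 : key.hashCode() & (buckets.length - 1);
    }

    public V put(K key, V value) {
        int i = indexOf(key);
        for (Node<K, V> n = buckets[i]; n != null; n = n.next) {
            if (Objects.equals(n.key, key)) {   // same key: overwrite the value
                V old = n.value;
                n.value = value;
                return old;
            }
        }
        // Empty bucket or collision: link the new node at the head of the chain.
        buckets[i] = new Node<>(key, value, buckets[i]);
        size++;
        return null;
    }

    public V get(Object key) {
        for (Node<K, V> n = buckets[indexOf(key)]; n != null; n = n.next) {
            if (Objects.equals(n.key, key)) {
                return n.value;
            }
        }
        return null;
    }

    public int size() {
        return size;
    }
}
```

Lookup cost is one array access plus a walk along one chain, which is why the average case is O(1) only while chains stay short.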

2. HashMap in Java 7

- Implementation: array + linked list (arrays are fast to index; linked lists are cheap to modify)

- From the source code:

/**

* Hash table based implementation of the Map interface.

*/

2.1 Question 1: Why is the initial capacity a power of two?

/**

* The default initial capacity - MUST be a power of two.

*/

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

A newly created HashMap starts with an empty table:

/**

* An empty table instance to share when the table is not inflated.

*/

static final Entry<?,?>[] EMPTY_TABLE = {};

Look at put: if the table is still empty, it is inflated first.

public V put(K key, V value) {

if (table == EMPTY_TABLE) {

inflateTable(threshold); // inflate: allocate the real table

}

if (key == null)

return putForNullKey(value);

int hash = hash(key);

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(hash, key, value, i);

return null;

}

The inflateTable method calls roundUpToPowerOf2, which rounds up to the nearest power of two. For example, a requested initial capacity of 17 is rounded up to 32.

private void inflateTable(int toSize) {

// Find a power of 2 >= toSize

int capacity = roundUpToPowerOf2(toSize);

threshold = (int) Math.min(capacity * loadFactor, MAXIMUM_CAPACITY + 1);

table = new Entry[capacity];

initHashSeedAsNeeded(capacity);

}
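The rounding step can be sketched as follows. This is a minimal version built on Integer.highestOneBit and is assumed to match the behaviour described above; the class name RoundUpDemo is hypothetical.

```java
class RoundUpDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Smallest power of two >= number, capped at MAXIMUM_CAPACITY.
    static int roundUpToPowerOf2(int number) {
        if (number >= MAXIMUM_CAPACITY) {
            return MAXIMUM_CAPACITY;
        }
        // (number - 1) << 1 has its highest set bit exactly at the target power of two.
        return (number > 1) ? Integer.highestOneBit((number - 1) << 1) : 1;
    }

    public static void main(String[] args) {
        System.out.println(roundUpToPowerOf2(17)); // 32
        System.out.println(roundUpToPowerOf2(16)); // 16
        System.out.println(roundUpToPowerOf2(1));  // 1
    }
}
```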

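How can an int value x be mapped into the range 0 to n-1? The obvious answer is the remainder, x % n, but it has two drawbacks:

- Negative values must first be made non-negative (in Java, x % n is negative when x is negative)

- It is slower than a bitwise operation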
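A small sketch of the first drawback, comparing the plain remainder with Math.floorMod and with a bit mask. The helper names are hypothetical, and the mask variant assumes n is a power of two.

```java
class ModuloVsMaskDemo {
    static int byRemainder(int x, int n) {
        return x % n;                 // keeps the sign of x, so it can be negative
    }

    static int byFloorMod(int x, int n) {
        return Math.floorMod(x, n);   // always in 0..n-1 for positive n
    }

    static int byMask(int x, int n) {
        return x & (n - 1);           // always in 0..n-1, but n must be a power of two
    }

    public static void main(String[] args) {
        System.out.println(byRemainder(-7, 16)); // -7
        System.out.println(byFloorMod(-7, 16));  // 9
        System.out.println(byMask(-7, 16));      // 9
    }
}
```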

So let's see how HashMap does it. The put method computes the hash, and indexFor decides which bucket the entry goes into.

public V put(K key, V value) {

//...

int hash = hash(key);

int i = indexFor(hash, table.length);

//...

}

static int indexFor(int h, int length) {

// assert Integer.bitCount(length) == 1 : "length must be a non-zero power of 2";

return h & (length-1);

}

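How exactly is a hash assigned to a bucket?

By bitwise AND. Suppose the low 8 bits of the hash are 10111011. When length is a power of two, say 2^4 = 10000, then length-1 is 1111, and the AND yields exactly the low 4 bits of the hash (1011). If length is not a power of two, some bits of length-1 are 0 (for example, length = 15 = 1111, so length-1 = 1110). ANDing with a 0 bit always gives 0, so every bucket whose index has that bit set stays empty forever, and the distribution is uneven.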
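The effect of a non-power-of-two length can be checked with a short sketch that collects every index the mask can produce. The class and method names are hypothetical.

```java
import java.util.TreeSet;

class BucketCoverageDemo {
    // Collects every index that h & (length - 1) can produce for h in [0, 1024).
    static TreeSet<Integer> reachable(int length) {
        TreeSet<Integer> seen = new TreeSet<>();
        for (int h = 0; h < 1024; h++) {
            seen.add(h & (length - 1));
        }
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(reachable(16)); // all 16 indices, 0..15
        System.out.println(reachable(15)); // even indices only: the low bit of 14 (1110) is 0
    }
}
```

With length 15 only 8 of the 15 buckets can ever be used, so chains are roughly twice as long as they need to be.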

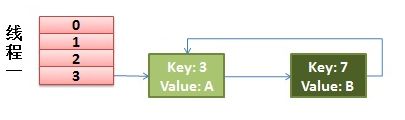

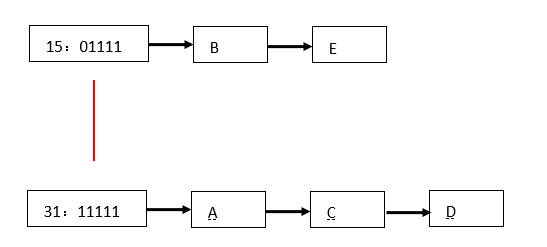

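Before resizing there are 16 buckets (n=16), and the 16th bucket (index 15, low 4 bits 1111) holds 5 elements: A B C D E.

After resizing, n=32 and 32-1=31, which is 11111 in binary, so the AND now covers the low 5 bits. The 5th bit of each hash is either 0 or 1. If it is 0 (01111), the element keeps its original index, 15. If it is 1 (11111), the element moves to the bucket whose high bit is 1, index 31 (15 + 16).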
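As a worked example, the sketch below takes five hash values whose low 4 bits are 1111 and prints their bucket index for n=16 and n=32. The hash values are chosen for illustration only.

```java
class DoublingIndexDemo {
    static int indexFor(int h, int length) {
        return h & (length - 1);
    }

    public static void main(String[] args) {
        // All five share the low 4 bits 1111; they differ in bit 5 and above.
        int[] hashes = {0b0000_1111, 0b0001_1111, 0b0010_1111, 0b0011_1111, 0b1011_1111};
        for (int h : hashes) {
            System.out.println(Integer.toBinaryString(h)
                    + " -> n=16: " + indexFor(h, 16)
                    + ", n=32: " + indexFor(h, 32));
        }
    }
}
```

Every value lands in bucket 15 when n=16; after doubling, each one is in either bucket 15 or bucket 31, depending only on its 5th bit.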

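Continuing with put: each bucket holds Entry nodes. If an Entry with an equal key already exists (same hash, and the keys are == or equals), its value is overwritten and the old value is returned; otherwise a new Entry is added.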

static class Entry<K,V> implements Map.Entry<K,V> {

final K key;

V value;

Entry<K,V> next;

int hash;

//...

}

public V put(K key, V value) {

//...

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(hash, key, value, i);

return null;

}

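addEntry checks whether size has reached threshold (threshold = current capacity * load factor) and whether the target bucket is already occupied. If both hold, it resizes with resize(2 * table.length). As its Javadoc says, resize rehashes the contents of the map: it creates a new, larger bucket array (new Entry[newCapacity]) and moves the existing entries into it (transfer walks every existing entry, recomputes its index, and in some cases its hash, and inserts it).

A load factor of 0.75 balances time against space: raising it saves space but costs time, and lowering it does the opposite. In addition, for a power-of-two table.length of at least 4, the threshold table.length * 3/4 could be computed with cheap shifts as ((table.length >> 2) << 2) - (table.length >> 2) == table.length - (table.length >> 2), although the source quoted below multiplies by the float loadFactor instead.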

/**

* The next size value at which to resize (capacity * load factor).

* @serial

*/

// If table == EMPTY_TABLE then this is the initial capacity at which the

// table will be created when inflated.

int threshold;

void addEntry(int hash, K key, V value, int bucketIndex) {

if ((size >= threshold) && (null != table[bucketIndex])) {

resize(2 * table.length);

hash = (null != key) ? hash(key) : 0;

bucketIndex = indexFor(hash, table.length);

}

createEntry(hash, key, value, bucketIndex);

}

/**

* Rehashes the contents of this map into a new array with a

* larger capacity. This method is called automatically when the

* number of keys in this map reaches its threshold.

*/

void resize(int newCapacity) {

Entry[] oldTable = table;

int oldCapacity = oldTable.length;

if (oldCapacity == MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return;

}

Entry[] newTable = new Entry[newCapacity];

transfer(newTable, initHashSeedAsNeeded(newCapacity));

table = newTable;

threshold = (int)Math.min(newCapacity * loadFactor, MAXIMUM_CAPACITY + 1);

}

void transfer(Entry[] newTable, boolean rehash) {

int newCapacity = newTable.length;

for (Entry<K,V> e : table) {

while(null != e) {

Entry<K,V> next = e.next;

if (rehash) {

e.hash = null == e.key ? 0 : hash(e.key);

}

int i = indexFor(e.hash, newCapacity);

e.next = newTable[i];

newTable[i] = e;

e = next;

}

}

}
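The threshold arithmetic can be checked with a short sketch. It is a simplification under stated assumptions: the Math.min cap against MAXIMUM_CAPACITY + 1 is left out, and the class and method names are hypothetical.

```java
class ThresholdDemo {
    // Float multiplication followed by truncation, as in the quoted source (cap omitted).
    static int thresholdByFloat(int capacity, float loadFactor) {
        return (int) (capacity * loadFactor);
    }

    // Shift-based equivalent for load factor 0.75; exact only when capacity is a multiple of 4.
    static int thresholdByShift(int capacity) {
        return capacity - (capacity >> 2);
    }

    public static void main(String[] args) {
        for (int cap = 4; cap <= 1024; cap <<= 1) {
            System.out.println(cap + " -> " + thresholdByFloat(cap, 0.75f)
                    + " / " + thresholdByShift(cap));
        }
    }
}
```

For the default capacity of 16 both forms give 12, so the first resize is triggered around the 13th insertion.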

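2.2 Question 2: Why can a "deadlock" occur?

This is a usage error: the documentation already states that HashMap is not synchronized.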

/**

* Note that this implementation is not synchronized.

* If multiple threads access a hash map concurrently, and at least one of

* the threads modifies the map structurally, it must be

* synchronized externally.

*/

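Detailed analysis

Before resizing, the chain in the bucket is 3 -> 7. Because Java 7 inserts at the head during transfer, after resizing it becomes 7 -> 3. When two threads resize at the same time, their interleaved updates to next can end up forming a circular list: 7 -> 3 -> 7 -> 3 ... A later get on that bucket always finds a non-null next and spins forever (strictly speaking an infinite loop rather than a deadlock).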
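The order reversal itself can be reproduced in a single thread. The sketch below mirrors only the inner loop of transfer for one bucket, using a simplified hypothetical Entry class; it does not reproduce the race, only the head insertion that makes the race dangerous.

```java
import java.util.ArrayList;
import java.util.List;

class HeadInsertionDemo {
    static final class Entry {
        final int key;
        Entry next;

        Entry(int key, Entry next) {
            this.key = key;
            this.next = next;
        }
    }

    // Each entry is pushed onto the head of the new chain, as in the Java 7 transfer loop.
    static Entry transferByHeadInsertion(Entry oldHead) {
        Entry newHead = null;
        Entry e = oldHead;
        while (e != null) {
            Entry next = e.next;  // a second thread paused here still holds the old links
            e.next = newHead;
            newHead = e;
            e = next;
        }
        return newHead;
    }

    static List<Integer> keys(Entry head) {
        List<Integer> out = new ArrayList<>();
        for (Entry e = head; e != null; e = e.next) {
            out.add(e.key);
        }
        return out;
    }

    public static void main(String[] args) {
        Entry chain = new Entry(3, new Entry(7, null));             // 3 -> 7
        System.out.println(keys(transferByHeadInsertion(chain)));   // [7, 3]
    }
}
```

A thread that was suspended with e = 3 and next = 7 resumes against a chain in which 7 now points to 3, and that stale view is what can close the loop.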

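2.3 Question 3: A security problem: long chains degrade performance

The problem, as described in a Tomcat mailing-list post: Tomcat stores request parameters in a hash table, and if the parameters all have the same hash value, performance suffers badly.

A carefully crafted malicious request can therefore trigger a DoS (denial of service).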

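import java.util.Arrays;
import java.util.HashMap;
import java.util.List;

public class CollisionDemo {
    // Prints 2112 three times: all three keys share one hashCode
    public static void main(String[] args) {
        HashMap<String, String> map = new HashMap<>();
        List<String> list = Arrays.asList("Aa", "BB", "C#");
        for (String s : list) {
            System.out.println(s.hashCode());
            map.put(s, s);
        }
        System.out.println(map.size()); // 3 distinct keys, all in the same bucket
    }
}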
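Colliding keys are easy to mass-produce, which is what makes the attack practical. The sketch below builds on the fact that "Aa" and "BB" have the same length and the same hashCode, so any concatenation of these blocks collides as well; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class CollidingKeysDemo {
    // Builds 2^blocks distinct strings from the blocks "Aa" and "BB"; all share one hashCode.
    static List<String> collidingKeys(int blocks) {
        List<String> keys = new ArrayList<>();
        keys.add("");
        for (int i = 0; i < blocks; i++) {
            List<String> next = new ArrayList<>();
            for (String prefix : keys) {
                next.add(prefix + "Aa");
                next.add(prefix + "BB");
            }
            keys = next;
        }
        return keys;
    }

    public static void main(String[] args) {
        for (String k : collidingKeys(3)) {
            System.out.println(k + " -> " + k.hashCode());
        }
    }
}
```

With n blocks this yields 2^n keys in one bucket, so lookups on that bucket degrade from O(1) to a linear scan of the chain.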

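The mitigation: inflateTable, called from put, initializes a hash seed through initHashSeedAsNeeded. When a hash is computed, int h = hashSeed; if the seed is non-zero and k instanceof String, a different String hash algorithm is used (sun.misc.Hashing.stringHash32 rather than String's default hashCode).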

private void inflateTable(int toSize) {

// Find a power of 2 >= toSize

int capacity = roundUpToPowerOf2(toSize);

threshold = (int) Math.min(capacity * loadFactor, MAXIMUM_CAPACITY + 1);

table = new Entry[capacity];

initHashSeedAsNeeded(capacity);

}

final int hash(Object k) {

int h = hashSeed;

if (0 != h && k instanceof String) {

return sun.misc.Hashing.stringHash32((String) k);

}

h ^= k.hashCode();

// This function ensures that hashCodes that differ only by

// constant multiples at each bit position have a bounded

// number of collisions (approximately 8 at default load factor).

h ^= (h >>> 20) ^ (h >>> 12);

return h ^ (h >>> 7) ^ (h >>> 4);

}

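3. HashMap optimizations in Java 8

3.1 Question 1: Why is the list-to-red-black-tree threshold 8 (and the array length at least 64)?

The explanation in the Java source:

Because tree nodes are about twice the size of regular nodes, a bin is converted to a tree only once it already holds enough nodes, and it is converted back to a list when the tree becomes too small. With well-distributed hashCodes, tree bins are rarely used.

Ideally, with random hashCodes, the number of nodes per bin follows a Poisson distribution, and with the parameter given in the comment the probability of a bin holding 8 nodes is already extremely low (about 0.00000006). A power of two is also convenient for bit operations, so with both considerations in mind 8 is a reasonable fit. The source comment on TREEIFY_THRESHOLD also says the value should be at least 8.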

* Because TreeNodes are about twice the size of regular nodes, we

* use them only when bins contain enough nodes to warrant use

* (see TREEIFY_THRESHOLD). And when they become too small (due to

* removal or resizing) they are converted back to plain bins. In

* usages with well-distributed user hashCodes, tree bins are

* rarely used. Ideally, under random hashCodes, the frequency of

* nodes in bins follows a Poisson distribution

* (http://en.wikipedia.org/wiki/Poisson_distribution) with a

* parameter of about 0.5 on average for the default resizing

* threshold of 0.75, although with a large variance because of

* resizing granularity. Ignoring variance, the expected

* occurrences of list size k are (exp(-0.5) * pow(0.5, k) /

* factorial(k)). The first values are:

*

* 0: 0.60653066

* 1: 0.30326533

* 2: 0.07581633

* 3: 0.01263606

* 4: 0.00157952

* 5: 0.00015795

* 6: 0.00001316

* 7: 0.00000094

* 8: 0.00000006

* more: less than 1 in ten million

static final int TREEIFY_THRESHOLD = 8;

static final int UNTREEIFY_THRESHOLD = 6;

static final int MIN_TREEIFY_CAPACITY = 64;
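The table in the comment can be reproduced directly from the formula exp(-0.5) * pow(0.5, k) / factorial(k). A minimal sketch, with a hypothetical class name:

```java
class PoissonBinDemo {
    // P(bin holds exactly k nodes) = exp(-lambda) * lambda^k / k!
    static double probability(double lambda, int k) {
        double factorial = 1.0;
        for (int i = 2; i <= k; i++) {
            factorial *= i;
        }
        return Math.exp(-lambda) * Math.pow(lambda, k) / factorial;
    }

    public static void main(String[] args) {
        for (int k = 0; k <= 8; k++) {
            System.out.printf("%d: %.8f%n", k, probability(0.5, k));
        }
    }
}
```

At k = 8 the probability is roughly 6 in 100 million, so with well-behaved hashCodes a bin almost never reaches the treeify threshold.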

3.2 Question 2: The put and resize methods

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

Computing the hash: XOR the high 16 bits into the low 16 bits (XOR is addition without carry)

static final int hash(Object key) {

int h;

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}
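Why the high bits matter can be seen with two hash codes that differ only above bit 15. The sketch below applies the same XOR expression to raw int values; the class and helper names are hypothetical.

```java
class HashSpreadDemo {
    // Same expression as the Java 8 hash(Object), applied to a raw int.
    static int spread(int h) {
        return h ^ (h >>> 16);
    }

    static int index(int h, int n) {
        return (n - 1) & h;
    }

    public static void main(String[] args) {
        int a = 0x10000;
        int b = 0x20000;   // a and b differ only above bit 15
        System.out.println(index(a, 16) + " " + index(b, 16));                 // 0 0
        System.out.println(index(spread(a), 16) + " " + index(spread(b), 16)); // 1 2
    }
}
```

Without spreading, a table of 16 buckets sees only the low 4 bits, and both values fall into bucket 0.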

putVal

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

// initialize the table on first use

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

// p is the first node of the target bucket; if the bucket is empty, place the new node there

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

else {

Node<K,V> e; K k;

// the first node has the same key: remember it so its value can be overwritten below

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

// the bucket is a tree: insert via the tree path

else if (p instanceof TreeNode)

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

// the bucket is a linked list

else {

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {

p.next = newNode(hash, key, value, null);

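// the chain reached the treeify threshold: convert this bin to a tree
// (treeifyBin resizes instead while the table is shorter than MIN_TREEIFY_CAPACITY)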

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

break;

}

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

break;

p = e;

}

}

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

}

++modCount;

if (++size > threshold)

resize();

afterNodeInsertion(evict);

return null;

}
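From the caller's side, the return value of putVal and the onlyIfAbsent flag show up as follows. This sketch uses only the public java.util.HashMap API; the class name and the results() helper are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class PutReturnDemo {
    static List<Integer> results() {
        Map<String, Integer> map = new HashMap<>();
        List<Integer> out = new ArrayList<>();
        out.add(map.put("k", 1));          // null: no previous mapping
        out.add(map.put("k", 2));          // 1: old value returned, value replaced
        out.add(map.putIfAbsent("k", 3));  // 2: existing value kept (onlyIfAbsent = true)
        out.add(map.get("k"));             // 2
        return out;
    }

    public static void main(String[] args) {
        System.out.println(results()); // [null, 1, 2, 2]
    }
}
```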

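resize grows the table. Two lines in the method below are worth noting: newThr = oldThr << 1 (the threshold doubles along with the capacity) and the else branch marked // preserve order (the relative order of the elements is kept).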

final Node<K,V>[] resize() {

Node<K,V>[] oldTab = table;

int oldCap = (oldTab == null) ? 0 : oldTab.length;

int oldThr = threshold;

int newCap, newThr = 0;

if (oldCap > 0) {

if (oldCap >= MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return oldTab;

}

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // double threshold

}

else if (oldThr > 0) // initial capacity was placed in threshold

newCap = oldThr;

else { // zero initial threshold signifies using defaults

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];

table = newTab;

if (oldTab != null) {

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

// skip this bucket if its first node is null

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

// single node: recompute its index in the new table and move on

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

// the bucket is a red-black tree: split it via the tree path

else if (e instanceof TreeNode)

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

// the bucket is a linked list

else { // preserve order

Node<K,V> loHead = null, loTail = null; // "lo" list: stays at index j

Node<K,V> hiHead = null, hiTail = null; // "hi" list: moves to index j + oldCap

Node<K,V> next;

do {

next = e.next;

if ((e.hash & oldCap) == 0) { // index unchanged

if (loTail == null)

loHead = e;

else

loTail.next = e; // append at the tail to keep the original order

loTail = e;

}

else { // moves to index j + oldCap

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

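After a resize, JDK 1.7 recomputes every element's index (hash AND length-1). JDK 1.8 optimizes this: it only tests (e.hash & oldCap) == 0. If the result is 0 the element keeps its index j; otherwise it moves to the new slot j + oldCap.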
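The split can be sketched on plain hash values instead of Node objects. The example assumes oldCap = 16 and a bucket at index 15; the class name and the split helper are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class LoHiSplitDemo {
    // Splits the hashes of one old bucket into the "stay" list (index j)
    // and the "move" list (index j + oldCap), keeping relative order.
    static List<List<Integer>> split(List<Integer> bucketHashes, int oldCap) {
        List<Integer> lo = new ArrayList<>();
        List<Integer> hi = new ArrayList<>();
        for (int h : bucketHashes) {
            if ((h & oldCap) == 0) {
                lo.add(h);
            } else {
                hi.add(h);
            }
        }
        List<List<Integer>> out = new ArrayList<>();
        out.add(lo);
        out.add(hi);
        return out;
    }

    public static void main(String[] args) {
        int oldCap = 16;
        int j = 15;
        List<Integer> bucket = Arrays.asList(15, 31, 47, 63, 79);
        List<List<Integer>> parts = split(bucket, oldCap);
        System.out.println("stay at " + j + ": " + parts.get(0));            // [15, 47, 79]
        System.out.println("move to " + (j + oldCap) + ": " + parts.get(1)); // [31, 63]
    }
}
```

A single bit test replaces the full index computation, and because both lists are built by appending, the elements keep their relative order.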

resize is expensive, so when the expected size is known, pass an initial capacity to the constructor, trading space for time.
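One common way to pick that capacity is to divide the expected number of entries by the load factor. A minimal sketch, assuming the default load factor of 0.75; the capacityFor helper is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

class PresizeDemo {
    // Initial capacity large enough that expectedSize entries stay under the
    // threshold at the default load factor of 0.75.
    static int capacityFor(int expectedSize) {
        return (int) (expectedSize / 0.75f) + 1;
    }

    public static void main(String[] args) {
        int expected = 1000;
        Map<Integer, String> map = new HashMap<>(capacityFor(expected));
        for (int i = 0; i < expected; i++) {
            map.put(i, "v" + i);   // the table should not need to grow during this loop
        }
        System.out.println(capacityFor(expected) + " " + map.size());
    }
}
```

For 1000 entries this requests 1334, which HashMap rounds up to a table of 2048 with a threshold of 1536.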

3.3 Question 3: The get method

public V get(Object key) {

Node<K,V> e;

return (e = getNode(hash(key), key)) == null ? null : e.value;

}

final Node<K,V> getNode(int hash, Object key) {

Node<K,V>[] tab; Node<K,V> first, e; int n; K k;

if ((tab = table) != null && (n = tab.length) > 0 &&

(first = tab[(n - 1) & hash]) != null) {

// check the first node

if (first.hash == hash && // always check first node

((k = first.key) == key || (key != null && key.equals(k))))

return first;

// check the rest of the bucket

if ((e = first.next) != null) {

// is the bucket a red-black tree?

if (first instanceof TreeNode)

return ((TreeNode<K,V>)first).getTreeNode(hash, key);

// the bucket is a linked list

do {

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

return e;

} while ((e = e.next) != null);

}

}

return null;

}
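Since get returns null both when getNode finds nothing and when the stored value is itself null, callers that store null values need containsKey to tell the two cases apart. A short sketch using only the public API; the class name and probe() helper are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

class GetNullDemo {
    static boolean[] probe() {
        Map<String, String> map = new HashMap<>();
        map.put("present", null);
        return new boolean[] {
            map.get("present") == null,   // true: mapped to null
            map.get("absent") == null,    // true: no mapping at all
            map.containsKey("present"),   // true
            map.containsKey("absent")     // false
        };
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(probe())); // [true, true, true, false]
    }
}
```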

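4. Interview question summary

4.1 Question 1: How HashMap is implemented

- What is a HashMap: a hash table. Keys that map to the same index share a bucket, and the buckets are stored in an array

- Why array + linked list: arrays are fast to index, linked lists are cheap to insert into and remove from

- What other ways of resolving hash collisions do you know

  - Open addressing (re-probing): when the hash address p = H(key) of a key collides, derive another address p1 from p; if p1 also collides, derive p2 from p, and so on, until a free address pi is found and the element is stored there. Common probe sequences are linear probing, quadratic probing, and random probing

  - Separate chaining: all elements whose hash address is i are linked into a singly linked list, called a synonym chain

  - Rehashing: on a collision, compute the hash again with another hash function, at the cost of extra computation time

  - Common overflow area: split the hash table into a base table and an overflow table; every element that collides in the base table goes into the overflow table

- Could a LinkedList replace the array: yes, but it would be slow

- Do you know TreeMap: it is implemented as a red-black tree

- Do you know ConcurrentHashMap: in JDK 1.8 it uses synchronized plus CAS to guarantee thread safety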
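For contrast with HashMap's chaining, open addressing with linear probing can be sketched as follows. This is a toy table under stated assumptions: String keys, int values, a fixed capacity of 8, and no removal or resizing. The class name is hypothetical.

```java
class LinearProbingSketch {
    private final String[] keys = new String[8];   // fixed capacity, never resized
    private final int[] values = new int[8];
    private int size;

    // Probes p, p+1, p+2, ... (wrapping around) until the key or an empty slot is found.
    private int slotFor(String key) {
        int i = key.hashCode() & (keys.length - 1);
        while (keys[i] != null && !keys[i].equals(key)) {
            i = (i + 1) & (keys.length - 1);
        }
        return i;
    }

    void put(String key, int value) {
        int i = slotFor(key);
        if (keys[i] == null) {
            // Keep one slot free so that probing always terminates.
            if (size == keys.length - 1) {
                throw new IllegalStateException("table full");
            }
            keys[i] = key;
            size++;
        }
        values[i] = value;
    }

    Integer get(String key) {
        int i = slotFor(key);
        return keys[i] == null ? null : Integer.valueOf(values[i]);
    }

    public static void main(String[] args) {
        LinearProbingSketch table = new LinearProbingSketch();
        table.put("Aa", 1);   // "Aa" and "BB" both hash to slot 0
        table.put("BB", 2);   // collides, so it is stored in slot 1
        System.out.println(table.get("Aa") + " " + table.get("BB")); // 1 2
    }
}
```

The colliding entry is stored in the next free array slot rather than in a separate list, which is the key difference from the chaining HashMap uses.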

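4.2 Question 2: HashMap resizing, get, and put

- Why doesn't HashMap use the value returned by hashCode() directly as the table index: hashCode() returns an int, whose range is -2^31 to 2^31 - 1; a table cannot be that large, and an index cannot be negative

- When does HashMap resize: when size exceeds the threshold (load factor * current capacity)

- Why is the capacity always a power of two: so that length-1 is all 1 bits (e.g. 1111) and the bitwise AND spreads entries evenly over the buckets

- Why XOR the high 16 bits into the low 16 bits before computing the index: to reduce hash collisions, since the index only uses the low bits

- Do you know how put works in HashMap

- Do you know how get works in HashMap

- What is a hash function for, and which ones do you know: it maps a large range onto a small one

- Describe how String implements hashCode

String's hashCode is fairly simple: it is a polynomial with base 31 whose coefficients are the char (UTF-16 code unit) values of the string, with natural int overflow standing in for the modulo.

The formula can be written as s[0]*31^(n-1) + s[1]*31^(n-2) + … + s[n-1]

Why 31 as the multiplier? Mainly because 31 is an odd prime, and 31*i = 32*i - i = (i << 5) - i, so the multiplication can be done as a shift and a subtraction, which is cheap.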

public int hashCode() {

int h = hash;

if (h == 0 && value.length > 0) {

char val[] = value;

for (int i = 0; i < value.length; i++) {

h = 31 * h + val[i];

}

hash = h;

}

return h;

}
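The shift-and-subtract form can be checked against String.hashCode() directly. A minimal sketch with a hypothetical manualHash helper:

```java
class StringHashDemo {
    // s[0]*31^(n-1) + s[1]*31^(n-2) + ... + s[n-1], with int overflow acting as mod 2^32.
    static int manualHash(String s) {
        int h = 0;
        for (int i = 0; i < s.length(); i++) {
            h = (h << 5) - h + s.charAt(i);   // (h << 5) - h == 31 * h
        }
        return h;
    }

    public static void main(String[] args) {
        System.out.println(manualHash("Aa") + " " + "Aa".hashCode());       // 2112 2112
        System.out.println(manualHash("HashMap") == "HashMap".hashCode());  // true
    }
}
```

For "Aa" the arithmetic is 65 * 31 + 97 = 2112, and "BB" gives 66 * 31 + 66 = 2112 as well.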

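4.3 Question 3: Differences between HashMap in JDK 1.7 and JDK 1.8

- How does HashMap in JDK 1.8 differ from earlier versions

  - Structure: array + linked list in 1.7; array + linked list + red-black tree in 1.8

  - Head insertion in 1.7, tail insertion in 1.8

  - JDK 1.7 resizes first and then inserts; JDK 1.8 inserts first, then resizes and relocates. This follows from the resize mechanism

- Why not use a red-black tree for collisions from the start, instead of a list first and a tree later: keeping a red-black tree balanced takes many operations, and for a small number of entries a linked list is fast enough

- Could a plain binary search tree replace the red-black tree: in the worst case it degenerates into a linked list

- Once a list has become a red-black tree, when does it turn back into a list

It turns back into a list when the bin shrinks to 6 nodes. The gap left by 7 between the two thresholds prevents frequent conversion between list and tree. Suppose instead that a list became a tree above 8 nodes and a tree became a list below 8 nodes: if a HashMap kept inserting and removing elements so that the bin size hovered around 8, it would convert back and forth between tree and list all the time, which would be very inefficient.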

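4.4 Question 4: Concurrency issues

- What problems does HashMap have in concurrent code (JDK 1.8 and later): it has no locking, so concurrent modifications are not safe

- How to solve them: use a thread-safe collection such as ConcurrentHashMap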
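As a sketch of the thread-safe alternative, two threads below increment one counter through ConcurrentHashMap.merge, which performs the read-modify-write atomically. The class and method names are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class ConcurrentCountDemo {
    static int countWithTwoThreads(final int perThread) {
        final Map<String, Integer> counts = new ConcurrentHashMap<>();
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                counts.merge("hits", 1, Integer::sum);   // atomic read-modify-write
            }
        };
        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counts.get("hits");
    }

    public static void main(String[] args) {
        System.out.println(countWithTwoThreads(10_000)); // 20000
    }
}
```

With a plain HashMap and a separate get followed by put, the same loop can lose updates, because two threads may read the same old value before either writes.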

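4.5 Question 5: What do you typically use as a HashMap key?

- Can a key be null: yes. For a null key the hash is taken as 0, so the entry goes into the first slot of the array

- What is typically used as a HashMap key: Integer, String

  - String is the most common. Because strings are immutable, the hashCode is cached once it has been computed and never needs to be recomputed

  - These classes override hashCode() and equals() properly

- What goes wrong with a mutable class as a HashMap key: its hashCode may change, after which the entry can no longer be found

- How should a custom class be written to serve as a HashMap key

  - How to design an immutable class: final, private fields, no setters

  - Override hashCode and equals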
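Putting those two points together, a key class could look like the sketch below. PointKey and PointKeyDemo are hypothetical names used only for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Immutable key: final class, private final fields, no setters.
final class PointKey {
    private final int x;
    private final int y;

    PointKey(int x, int y) {
        this.x = x;
        this.y = y;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) {
            return true;
        }
        if (!(o instanceof PointKey)) {
            return false;
        }
        PointKey other = (PointKey) o;
        return x == other.x && y == other.y;
    }

    // Must agree with equals: equal keys always produce equal hash codes.
    @Override
    public int hashCode() {
        return Objects.hash(x, y);
    }
}

class PointKeyDemo {
    static String lookup() {
        Map<PointKey, String> map = new HashMap<>();
        map.put(new PointKey(1, 2), "a");
        return map.get(new PointKey(1, 2));   // a different but equal instance
    }

    public static void main(String[] args) {
        System.out.println(lookup()); // a
    }
}
```

Because the fields can never change after construction, the hash code stays stable for as long as the key is in the map.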

Further reading:

Java源码分析:关于 HashMap 1.8 的重大更新 (Java source analysis: the major HashMap updates in 1.8)