Paper notes: "A Closed-form Solution to Photorealistic Image Stylization" (reading notes)

1. Basic information about the paper

Authors: Yijun Li, Ming-Yu Liu, Xueting Li, Ming-Hsuan Yang, and Jan Kautz (University of California, Merced; NVIDIA)

Comments: 11 pages, 14 figures

Subjects: Computer Vision and Pattern Recognition (cs.CV)

Cite as: arXiv:1802.06474 [cs.CV]

2. Understanding the paper

Abstract

First, the paper states the aim of photorealistic image style transfer algorithms.

Photorealistic image style transfer algorithms aim at stylizing a content photo using the style of a reference photo with the constraint that the stylized photo should remain photorealistic.

Second, it points out the limitations of the existing methods for this task.

While several methods exist for this task, they tend to generate spatially inconsistent stylizations with noticeable artifacts. In addition, these methods are computationally expensive, requiring several minutes to stylize a VGA photo.

VGA: here it refers to the image resolution of 640×480 pixels (the resolution of the VGA display standard), not to the number of colors.

Then, it introduces the proposed algorithm.

In this paper, we present a novel algorithm to address the limitations. The proposed algorithm consists of a stylization step and a smoothing step.

Next, it points out the highlight of the algorithm.

While the stylization step transfers the style of the reference photo to the content photo, the smoothing step encourages spatially consistent stylizations.

Next, it points out the difference from existing algorithms.

Unlike existing algorithms that require iterative optimization, both steps in our algorithm have closed-form solutions.

Finally, it reports the experimental results in comparison with the existing approach.

Experimental results show that the stylized photos generated by our algorithm are twice more preferred by human subjects on average. Moreover, our method runs 60 times faster than the state-of-the-art approach.

1. Introduction

This part covers the objectives of the task, the shortcomings of existing methods, and a brief introduction to the solution proposed in this paper.

First, the paper describes the goal in detail.

The goal of photorealistic image style transfer is to change the style of a photo to resemble that of another one. For a faithful stylization, the content in the output photo should remain the same, while the style of the output photo should resemble the one of the reference photo. Furthermore, the output photo should look like a real photo captured by a camera.

The paper then shows two photorealistic image stylization examples in figures and describes them.

Second, it reviews earlier approaches.

Due to lack of expressive feature representations, classical stylization approaches are based on matching color statistics (e.g., color transfer [25, 24, 31] or tone transfer [1]) or are limited to specific scenarios (e.g., seasons [12] and headshot portraits [27]).

So the shortcomings of classical approaches are a lack of expressive feature representations, reliance on matching color statistics, and restriction to specific scenarios. The paper then introduces more recent approaches.

Recently, Gatys et al. [5, 6] show that the correlations between deep features encode the visual style of an image and propose an optimization-based method, called the neural style transfer algorithm, for image style transfer.

The key idea of Gatys et al. is that correlations between deep features encode the visual style of an image.

Based on this, they propose an optimization-based method, called the neural style transfer algorithm.

While the method shows impressive performance for artistic style transfer (converting images to paintings), it often introduces structural artifacts and distortions (e.g., extremely bright colors) when applied to the photorealistic image style transfer task as shown in Figure 1(c).

The approach of Gatys et al. works well for artistic style transfer (converting images to paintings), but it is not suitable for photorealistic image style transfer, because it often introduces structural artifacts and distortions.

In a follow-up work, Luan et al. [21] propose adding a regularization term to the optimization objective function of the neural style transfer algorithm and show this reduces distortions in the output images.

The idea of Luan et al. is to add a regularization term to the optimization objective function, and they show that this reduces distortions in the output images.

However, the resulting algorithm tends to stylize semantically uniform regions in images inconsistently as shown in Figure 1(d).

Finally, the paper introduces its own idea.

In this paper, we propose a novel fast photorealistic image style transfer algorithm. It consists of two steps: the stylization step and the smoothing step. Both of the steps have closed-form solutions.

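Closed-form: the solution can be written as an explicit formula and computed directly (e.g., by a feed-forward pass or by solving a linear system), instead of being found by iterative optimization as in the neural style transfer algorithm.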
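A small illustration of the difference, using a generic least-squares problem rather than the paper's algorithm (both function names are hypothetical): the same quadratic objective can be minimized in one step from a formula, or approached step by step with gradient descent, which is roughly what optimization-based stylization does.

```python
import numpy as np

def closed_form_least_squares(A, b):
    """Minimizer of ||A r - b||^2 in one step, from the normal equations (A^T A) r = A^T b."""
    return np.linalg.solve(A.T @ A, A.T @ b)

def gradient_descent_least_squares(A, b, steps=5000):
    """The same problem solved iteratively, as optimization-based methods do."""
    r = np.zeros(A.shape[1])
    # Step size 1/L, where L = (largest singular value of A)^2 is the Lipschitz
    # constant of the gradient of 0.5 * ||A r - b||^2.
    lr = 1.0 / np.linalg.norm(A, 2) ** 2
    for _ in range(steps):
        r -= lr * A.T @ (A @ r - b)    # gradient of 0.5 * ||A r - b||^2
    return r
```

Both reach the same answer, but the closed form costs one linear solve while gradient descent needs thousands of iterations; the paper's speed advantage comes from the same kind of trade-off.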

The two steps are introduced in turn.

The stylization step:

The stylization step is based on the whitening and coloring transform (WCT) algorithm [17] and is referred to as the PhotoWCT step.

Introducing the WCT algorithm and its shortcoming.

The WCT algorithm matches deep feature statistics via feature projections and is developed for artistic style transfer.

Similar to the neural style transfer algorithm, when the WCT algorithm is applied to the photorealistic image style transfer task, the output stylized photos often have structural artifacts.

Introducing the approach of addressing the issue.

The proposed PhotoWCT step addresses the issue by incorporating unpooling layers in the WCT transform. We show this largely improves the photorealistic style transfer performance.

The PhotoWCT step alone cannot guarantee generating spatially consistent stylization.

The smoothing step:

This is resolved by the proposed smoothing step, which is formulated as a manifold ranking problem.

Introducing how the experiments are conducted and the advantages of the algorithm.

We conduct extensive experiments with comparison to the state-of-the-art to validate the proposed algorithm. User studies on stylization effects and photorealism show the competitive advantages of the proposed algorithm. In addition, our algorithm runs 60 times faster across various image resolutions thanks to the closed-form formulation.

I had some questions about the user studies here; they are described in detail in Section 4.

2. Related Works

Firstly, existing stylization methods.

Existing stylization methods are mostly example-based and can be classified into two categories: global and local.

Introducing global and local methods.

Global methods usually construct a spatially-invariant transfer function through matching the means and variances of pixel colors [25], histograms of pixel colors [24], or both [4]. These approaches often only adjust global colors or tones [1] in effects.
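A minimal sketch of a global, spatially-invariant transfer function of the "match means and variances" kind. It is a simplification working directly on RGB channels (not necessarily the color space used in the cited work), and the function name is hypothetical.

```python
import numpy as np

def match_mean_std(content, style, eps=1e-8):
    """Global color transfer: per channel, shift and scale the content pixels so
    that their mean and standard deviation match those of the style photo.

    content: (H, W, 3) array, style: (H', W', 3) array; returns (H, W, 3).
    The same affine map is applied to every pixel (spatially invariant).
    """
    c = content.reshape(-1, content.shape[-1]).astype(np.float64)
    s = style.reshape(-1, style.shape[-1]).astype(np.float64)
    out = (c - c.mean(axis=0)) / (c.std(axis=0) + eps) * s.std(axis=0) + s.mean(axis=0)
    return out.reshape(content.shape)
```

Because one affine map is shared by all pixels, such a method can only shift global colors or tones; it cannot, for example, darken the sky while leaving a building unchanged.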

Local methods [28, 27, 36, 12, 33] perform spatial color mapping through finding dense correspondences between the content and style photos based on either low-level or high-level features. These approaches are slow in practice. Moreover, they are often limited to specific scenarios.

Secondly, Gatys et al. propose the neural style transfer algorithm.

In a seminal work, Gatys et al. [5, 6] propose the neural style transfer algorithm for the artistic style transfer task. The core of the algorithm is to solve an optimization problem of matching the Gram matrices of deep features extracted from the content and style photos.

An optimization problem of matching the Gram matrices of deep features.

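About the Gram matrices: for a layer with $C$ channels, reshape the feature map into a $C \times N$ matrix $F$ ($N$ spatial positions); the Gram matrix is $G = F F^{T}$, whose entry $G_{ij}$ is the inner product of channels $i$ and $j$. It records which features occur together while discarding where they occur, which is why it describes style rather than content.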
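A short NumPy sketch of the Gram matrix of one feature map (the function name is hypothetical; implementations differ in how they normalize $G$, so this returns the raw inner products).

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a (C, H, W) feature map: G[i, j] = <F_i, F_j>,
    where F_i is channel i flattened over all spatial positions."""
    C = features.shape[0]
    F = features.reshape(C, -1)    # vectorize each channel: (C, H*W)
    return F @ F.T                 # (C, C), symmetric
```

Shuffling the spatial positions leaves $G$ unchanged, which illustrates that the Gram matrix ignores spatial layout.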

A number of methods have been developed [14, 34, 11, 16, 3, 8, 9, 17] to further improve its stylization performance and speed.

However, these works do not aim for preserving photorealism (see Figure 1(c)).

Post-processing techniques [15, 22] are proposed to refine these results by matching the gradients between the content and output photo.

Third, another important line of related work.

Another important line of related works is image-to-image translation [10, 35, 20, 32, 29, 19, 43] where the goal is to translate an image from one domain to another.

How photorealistic image style transfer differs:

Unlike image-to-image translation, photorealistic image style transfer does not require a training dataset to learn the translation. It needs only a single reference image.

Furthermore, photorealistic image style transfer makes the translation more specific: not only can it transfer a photo to a different domain (e.g., from day to night-time), but it can also transfer the specific style (e.g., the extent of darkness) of a reference style image.

Finally, the work closest to this paper: Luan et al.

Closest to our work is the method of Luan et al. [21], which significantly improves photorealism of the stylization results of the neural style transfer algorithm by enforcing local constraints as an additional loss function.

The idea of Luan et al. is to enforce local constraints as an additional loss function.

However, it often generates noticeable artifacts, which cause inconsistent stylization (Figure 1(d)). Moreover, it is computationally expensive.

After pointing out the shortcomings of Luan et al., the paper states its corresponding aims.

The proposed algorithm aims at efficient and effective photorealistic image style transfer. We demonstrate that it performs favorably against state-of-the-art methods in terms of both quality and speed.

3. Photorealistic Image Stylization

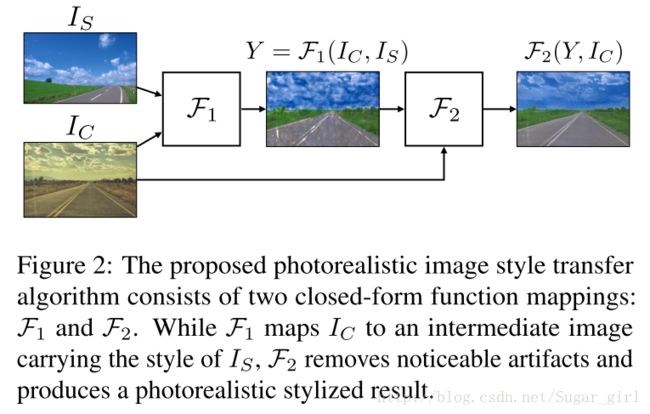

First, the process of the approach is described with a figure of the two steps.

Our photorealistic image style transfer algorithm consists of two steps as illustrated in Figure 2.

The first step is a stylization transform $F_1$ called PhotoWCT. Given a style photo $I_S$, $F_1$ transfers the style of $I_S$ to the content photo $I_C$ while minimizing structural artifacts in the output image.

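The second step is a photorealistic smoothing function $F_2$, which takes the PhotoWCT-stylized result and the content photo as input and removes inconsistent stylizations in semantically similar regions. The full algorithm is therefore

$$F_2(F_1(I_C, I_S), I_C).$$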

In the following, we discuss the two steps in detail.

3.1 Stylization

First, PhotoWCT improves WCT by using a novel network design.

Our PhotoWCT is based on the WCT [17]. It improves the WCT for the photorealistic image style transfer task by using a novel network design. For completeness, we briefly review the WCT in this section.

Secondly, introducing WCT.

WCT. The WCT formulates stylization as an image reconstruction problem with feature projections. To utilize WCT, an auto-encoder for general image reconstruction is first trained.

WCT relies on an auto-encoder trained for general image reconstruction.

Li et al. [17] employ the VGG-19 model [30] as the encoder $\mathcal{E}$, fix the encoder weights, and train a decoder $\mathcal{D}$ for reconstructing the input image. The decoder is designed to be symmetrical to the encoder, with upsampling layers (pink blocks in Figure 3(a)) used to enlarge the spatial resolutions of the feature maps.

Using the upsampling layers to enlarge the spatial resolutions of the feature maps.

I had some questions about the decoder.

Once the auto-encoder is trained, a pair of projection functions are inserted at the network bottleneck to perform stylization through the whitening ($P_C$) and coloring ($P_S$) transforms.

The key idea behind the WCT is to directly match feature correlations of the content image to those of the style image via the two projections. Specifically, given a pair of content image $I_C$ and style image $I_S$, the WCT first extracts their vectorised VGG features $H_C = \mathcal{E}(I_C)$ and $H_S = \mathcal{E}(I_S)$, and then transforms the content feature $H_C$ via

$$H_{CS} = P_S P_C H_C,$$

where $P_C = E_C \Lambda_C^{-\frac{1}{2}} E_C^{T}$ and $P_S = E_S \Lambda_S^{\frac{1}{2}} E_S^{T}$. Here $\Lambda_C$ and $\Lambda_S$ are the diagonal matrices with the eigenvalues of the covariance matrices $H_C H_C^{T}$ and $H_S H_S^{T}$, respectively. The matrices $E_C$ and $E_S$ are the corresponding orthonormal matrices of the eigenvectors, respectively.

After the transformation, the correlations of transformed features match those of the style features, i.e., $H_{CS} H_{CS}^{T} = H_S H_S^{T}$.

Finally, the stylized image is obtained by directly feeding the transformed feature map into the decoder: $Y = \mathcal{D}(H_{CS})$.

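These formulas are not obvious at first. In short, the whitening projection $P_C$ decorrelates the content features (after it, $(P_C H_C)(P_C H_C)^{T} = I$), and the coloring projection $P_S$ then imposes the correlations of the style features, which is why $H_{CS} H_{CS}^{T} = H_S H_S^{T}$.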
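A minimal NumPy sketch of the two projections, written term by term from the formulas above and assuming the features are already vectorised into $(C, N)$ matrices ($C$ channels, $N$ spatial positions). The function name and the small `eps` are my own. Typical WCT implementations also subtract the feature means before the eigen-decomposition and add the style mean back afterwards; that step is left out here so the code matches the uncentred formulas exactly.

```python
import numpy as np

def wct(H_c, H_s, eps=1e-8):
    """Whitening-coloring transform H_cs = P_s P_c H_c (uncentred form).

    H_c: (C, N_c) content features; H_s: (C, N_s) style features.
    Each column is the C-dimensional feature vector at one spatial position.
    """
    # Eigen-decompositions of H_c H_c^T and H_s H_s^T (symmetric, so eigh applies).
    lam_c, E_c = np.linalg.eigh(H_c @ H_c.T)
    lam_s, E_s = np.linalg.eigh(H_s @ H_s.T)
    # Whitening P_c = E_c Lambda_c^{-1/2} E_c^T; eps guards against zero eigenvalues.
    P_c = E_c @ np.diag(1.0 / np.sqrt(lam_c + eps)) @ E_c.T
    # Coloring P_s = E_s Lambda_s^{1/2} E_s^T; clip tiny negative round-off.
    P_s = E_s @ np.diag(np.sqrt(np.clip(lam_s, 0.0, None))) @ E_s.T
    return P_s @ P_c @ H_c
```

The output keeps the content's spatial arrangement (one column per content position) while its channel correlations equal those of the style features.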

For better stylization performance, Li et al. [17] use a multilevel stylization strategy, which performs the WCT on the VGG features at different layers.

Then, the paper points out the problem of WCT, which PhotoWCT is designed to solve.

The WCT performs well for artistic style transfer. However, it generates structural artifacts (e.g., distortions on object boundaries) for photorealistic image stylization (Figure 4(c)). Our PhotoWCT is proposed for suppressing these structural artifacts.

Next, introducing the PhotoWCT.

PhotoWCT is motivated by the observation that the max-pooling operation reduces spatial information.

PhotoWCT. Our PhotoWCT design is motivated by the observation that the max-pooling operation in the WCT reduces the spatial information in feature maps.

Simply upsampling feature maps in the decoder fails to recover detailed structures of the input image.

That is, we need to pass the lost spatial information to the decoder to facilitate reconstructing these fine details.

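Simple upsampling cannot recover the lost spatial information, while unpooling layers preserve it (they place values back at the positions recorded by max-pooling), so the upsampling layers are replaced with unpooling layers.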

Inspired by the success of the unpooling layer [39, 41, 23] in preserving spatial information, we propose to replace the upsampling layers in the WCT with unpooling layers for photorealistic stylization.
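A toy single-channel illustration in NumPy (my own sketch, not the PhotoWCT network code; function names are hypothetical) of why unpooling keeps spatial information that upsampling loses. Max-pooling records where each maximum came from; max-unpooling puts the value back at exactly that position, whereas nearest-neighbour upsampling spreads it over the whole 2×2 window. The sketch assumes even height and width.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max pooling of an (H, W) map; returns pooled values and the argmax
    position (0..3) of each maximum inside its window."""
    H, W = x.shape
    blocks = x.reshape(H // 2, 2, W // 2, 2).transpose(0, 2, 1, 3).reshape(H // 2, W // 2, 4)
    return blocks.max(axis=-1), blocks.argmax(axis=-1)

def unpool_2x2(pooled, idx):
    """Max-unpooling: write each value back where pooling found it, zeros elsewhere."""
    h, w = pooled.shape
    blocks = np.zeros((h, w, 4), dtype=pooled.dtype)
    np.put_along_axis(blocks, idx[..., None], pooled[..., None], axis=-1)
    return blocks.reshape(h, w, 2, 2).transpose(0, 2, 1, 3).reshape(2 * h, 2 * w)

def upsample_2x2(pooled):
    """Nearest-neighbour upsampling: copy each value into its whole 2x2 window."""
    return np.repeat(np.repeat(pooled, 2, axis=0), 2, axis=1)
```

In a real decoder the unpooled map is then refined by convolutions, but the positions of strong responses (e.g., edges) are already in the right place, which is what keeps straight building boundaries straight.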

As a result, the PhotoWCT function is formulated as

$$Y = F_1(I_C, I_S) = \bar{\mathcal{D}}(P_S P_C H_C),$$

where $\bar{\mathcal{D}}$ is the decoder trained with unpooling layers for image reconstruction. Figure 3 illustrates the difference in network architecture between the WCT and PhotoWCT.

The building boundary is used to show the effect of the algorithm.

Figure 4(c) and (d) show the results using the WCT and PhotoWCT. As highlighted in close-ups, the straight lines along the building boundary in the content image become zigzagged when applying the WCT but remain straight when applying the PhotoWCT. The PhotoWCT-stylized image has far fewer structural artifacts.

3.2 Photorealistic Smoothing

First, the paper points out the shortcoming of the PhotoWCT-stylized result and gives an example.

The PhotoWCT-stylized result (Figure 4(d)) still looks less like a photo since semantically similar regions are stylized inconsistently.

For example, as we stylize the day-time photo using the night-time photo in Figure 4, the stylized sky region would be more photorealistic if it were uniformly dark blue instead of partially dark and partially light blue.

This motivates us to employ the pixel affinities in the content photo to smooth the PhotoWCT-stylized result.

The idea is to use the pixel affinities of the content photo.

Two goals in the smoothing step.

We aim to achieve two goals in the smoothing step.

First, pixels with similar content in a local neighborhood should be stylized similarly.

Second, the smoothed result should not deviate significantly from the PhotoWCT result in order to maintain the global stylization effects.

As such, we first represent all pixels as nodes in a graph and define an affinity matrix $W = \{\omega_{ij}\} \in \mathbb{R}^{N \times N}$ ($N$ is the number of pixels) to describe pixel similarities.

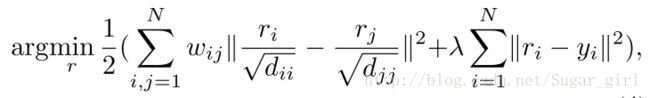

Defining a smoothness term and a fitting term.

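We define a smoothness term and a fitting term that model these two goals in the following optimization problem:

$$\arg\min_{r} \frac{1}{2}\left(\sum_{i,j=1}^{N} \omega_{ij} \left\| \frac{r_i}{\sqrt{d_{ii}}} - \frac{r_j}{\sqrt{d_{jj}}} \right\|^2 + \lambda \sum_{i=1}^{N} \left\| r_i - y_i \right\|^2\right) \tag{4}$$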

where $d_{ii} = \sum_j \omega_{ij}$ is the diagonal element in the degree matrix $D$ of $W$, i.e., $D = \mathrm{diag}\{d_{11}, d_{22}, \ldots, d_{NN}\}$. Here, $y_i$ is the pixel color in the PhotoWCT-stylized result $Y$ and $r_i$ is the pixel color in the desired smoothed output $R$. In (4), $\lambda$ controls the balance of these two terms.

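About the degree matrix: $d_{ii}$ sums the affinities between pixel $i$ and all other pixels (only its neighbors contribute nonzero terms), i.e., it is the weighted degree of node $i$ in the graph, and $D$ places these sums on its diagonal. The same $D$ is used to normalize $W$ in the closed-form solution below.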

Next, the motivation of the formulation: graph-based ranking algorithms.

Our formulation is motivated by the graph-based ranking algorithms [42, 38]. In their algorithms, $Y$ is a binary input where each element indicates if a specific item is a query ($y_i = 1$ if $y_i$ is a query and $y_i = 0$ otherwise).

The optimal solution R is the ranking values of all the items based on their pairwise affinities.

In our algorithm, we set Y as the PhotoWCT-stylized result. The optimal solution R is the smoothed version of Y based on the pairwise pixel affinities, which encourages consistent stylization within semantically similar regions.

Next, pointing out the closed-form solution of the smoothing step.

The above optimization problem is a simple quadratic problem with a closed-form solution, which is given by

$$R^{*} = (1-\alpha)(I - \alpha S)^{-1} Y, \tag{5}$$

where $I$ is the identity matrix, $\alpha = \frac{1}{1+\lambda}$ and $S$ is the normalized Laplacian matrix computed from $I_C$, i.e., $S = D^{-\frac{1}{2}} W D^{-\frac{1}{2}} \in \mathbb{R}^{N \times N}$ (strictly speaking, this $S$ is the normalized affinity matrix; the normalized graph Laplacian is $I - S$). As the constructed graph is often sparsely connected (i.e., most elements in $W$ are zero), the inverse operation in (5) is computationally efficient. With the closed-form solution, the smoothing step can be written as a function mapping given by:

$$R^{*} = F_2(Y, I_C) = (1-\alpha)(I - \alpha S)^{-1} Y.$$
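A sketch of the closed-form smoothing with SciPy sparse matrices, assuming an affinity matrix $W$ is already available (the paper uses the matting affinity; this sketch accepts any symmetric, non-negative sparse $W$ in which every pixel has at least one neighbor). Rather than forming $(I - \alpha S)^{-1}$ explicitly, it solves the sparse linear system $(I - \alpha S) X = Y$ with an LU factorization and scales by $(1 - \alpha)$, which is the usual way to exploit sparsity. The function name is hypothetical.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import splu

def photorealistic_smoothing(W, Y, lam=1e-4):
    """Closed-form smoothing R* = (1 - alpha) (I - alpha S)^{-1} Y, alpha = 1 / (1 + lam).

    W: (N, N) sparse, symmetric, non-negative affinity matrix.
    Y: (N, 3) PhotoWCT-stylized colors, one row per pixel.
    """
    W = sp.csr_matrix(W, dtype=np.float64)
    d = np.asarray(W.sum(axis=1)).ravel()        # degrees d_ii = sum_j w_ij
    D_inv_sqrt = sp.diags(1.0 / np.sqrt(d))
    S = D_inv_sqrt @ W @ D_inv_sqrt               # S = D^{-1/2} W D^{-1/2}
    alpha = 1.0 / (1.0 + lam)
    A = (sp.identity(W.shape[0]) - alpha * S).tocsc()
    # Sparse LU solve of (I - alpha S) X = Y for all three color channels at once.
    X = splu(A).solve(np.asarray(Y, dtype=np.float64))
    return (1.0 - alpha) * X
```

A large $\lambda$ makes $\alpha$ close to 0 and returns essentially $Y$ unchanged, while a small $\lambda$ spreads colors along strongly connected pixels.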

Next, introducing how to compute the affinity matrix W.

Affinity. The affinity matrix $W$ is computed using the content photo based on an 8-connected image graph assumption. While several choices of affinity metrics exist, a popular one is to define the affinity (denoted as the Gaussian affinity) as $\omega_{ij} = e^{-\|I_i - I_j\|^2 / \sigma^2}$, where $I_i$, $I_j$ are the RGB values of adjacent pixels $i$, $j$ and $\sigma$ is a global scaling hyper-parameter [26].
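A sketch of the Gaussian affinity on an 8-connected pixel grid, assuming an RGB image stored as floats; the default $\sigma = 0.1$ is a placeholder, not a value from the paper, and the function name is hypothetical. The paper ends up using the matting affinity instead, which this sketch does not implement.

```python
import numpy as np
import scipy.sparse as sp

def gaussian_affinity_8conn(img, sigma=0.1):
    """Sparse Gaussian affinity w_ij = exp(-||I_i - I_j||^2 / sigma^2) on an 8-connected grid.

    img: (h, w, 3) RGB image as floats; returns an (h*w, h*w) symmetric CSR matrix.
    """
    h, w, _ = img.shape
    idx = np.arange(h * w).reshape(h, w)          # pixel (y, x) -> node index
    rows, cols, vals = [], [], []
    # These four offsets visit every 8-neighbour pair exactly once;
    # adding the transpose afterwards supplies the reverse direction.
    for dy, dx in [(0, 1), (1, -1), (1, 0), (1, 1)]:
        y0, y1 = 0, h - dy
        x0, x1 = max(0, -dx), w - max(0, dx)
        a = img[y0:y1, x0:x1]
        b = img[y0 + dy:y1 + dy, x0 + dx:x1 + dx]
        rows.append(idx[y0:y1, x0:x1].ravel())
        cols.append(idx[y0 + dy:y1 + dy, x0 + dx:x1 + dx].ravel())
        vals.append(np.exp(-np.sum((a - b) ** 2, axis=-1) / sigma ** 2).ravel())
    r, c, v = (np.concatenate(x) for x in (rows, cols, vals))
    upper = sp.coo_matrix((v, (r, c)), shape=(h * w, h * w))
    return (upper + upper.T).tocsr()
```

The single global $\sigma$ is exactly the weakness discussed next: one value has to serve both low-contrast and high-contrast regions of the photo.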

Solving the problem of selecting one global scaling hyper-parameter $\sigma$.

However, it is difficult to determine the $\sigma$ value in practice. It often results in either over-smoothing the entire photo (Figure 5(e)) or stylizing the photo inconsistently (Figure 5(f)).

To avoid selecting one global scaling hyper-parameter, we resort to the matting affinity [13, 40] where the affinity between two pixels is based on means and variances of pixels in a local window. Figure 5(d) shows that the matting affinity is able to simultaneously smooth different regions well.

Using matting affinity where the affinity between two pixels is based on means and variances of pixels in a local window.

Finally, the paper tries WCT plus smoothing to show the advantage of PhotoWCT plus smoothing. The problem with the WCT result is that it is severely misaligned with the content photo due to spatial distortions, which is why PhotoWCT is used to remove the distortions first.

WCT plus Smoothing? We note that the smoothing step can also remove structural artifacts in the WCT as shown in Figure 4(e). However, it leads to unsatisfactory stylization.

The main reason is that the content photo and the WCT result are severely misaligned due to spatial distortions.

For example, a stylized pixel of the building in the WCT result may correspond to a pixel of the sky in the content photo. Consequently this causes wrong queries in Y for the smoothing step.

That is why we need to use the PhotoWCT to remove distortions first. Figure 4(f) shows that the combination of PhotoWCT and smoothing leads to better photorealism while still maintaining faithful stylization.

4. Experimental Results

Described from three aspects.

We first discuss the implementation details. We then present qualitative results comparing the proposed algorithm to several competing algorithms. Finally, we analyze the run-time and several algorithm design choices. Code and additional results are available at https://github.com/NVIDIA/FastPhotoStyle.

First, discussing the implementation details.

Implementation details. We use the layers from conv1_1 to conv4_1 of the VGG-19 [30] network as the encoder $\mathcal{E}$. We initialize the encoder using the pretrained weights, which are kept fixed during training. The architecture of the decoder $\bar{\mathcal{D}}$ is symmetrical to the encoder.

We train the auto-encoder for minimizing the sum of the $L_2$ reconstruction loss and perceptual loss [11] using the Microsoft COCO dataset [18].

As suggested in [17] for better stylization effects, we apply the PhotoWCT on VGG features at multiple layers to better capture the characteristics of the style photo.

The details of the network design are given in the appendix.

Second paragraph of Implementation details.

To obtain better photorealistic image stylization performance, we use semantic label maps for more localized content and style matching, which are also used by the competing algorithms [7, 21].

Specifically, in the PhotoWCT step, we compute a pair of projection matrices $P_C$ and $P_S$ for each semantic label.

We gather the encoder feature vectors corresponding to the same semantic label in the content image to compute a $P_C$, and those in the style image to compute a $P_S$.

The feature vectors with a specific semantic label in the content image are then transformed using the corresponding $P_C$ and $P_S$. We also use the post-processing filtering step in Luan et al. [21] for a fair comparison.
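A sketch of the label-wise projection described above, assuming each feature column already carries a semantic label (in practice the label maps would be resized to the feature resolution). The helper names are hypothetical, the WCT is the uncentred form from Section 3.1 of these notes, the post-processing filter is not included, and leaving content labels that have no match in the style image untouched is my own assumption.

```python
import numpy as np

def wct(H_c, H_s, eps=1e-8):
    """Uncentred whitening-coloring transform P_s P_c H_c on (C, N) features."""
    lam_c, E_c = np.linalg.eigh(H_c @ H_c.T)
    lam_s, E_s = np.linalg.eigh(H_s @ H_s.T)
    P_c = E_c @ np.diag(1.0 / np.sqrt(lam_c + eps)) @ E_c.T
    P_s = E_s @ np.diag(np.sqrt(np.clip(lam_s, 0.0, None))) @ E_s.T
    return P_s @ P_c @ H_c

def labelwise_wct(H_c, H_s, labels_c, labels_s):
    """Compute a separate (P_C, P_S) pair per semantic label and apply it to
    the content columns with that label.

    labels_c: (N_c,) label of each content column; labels_s: (N_s,) for style.
    """
    out = H_c.copy()
    for label in np.unique(labels_c):
        mask_c = labels_c == label
        mask_s = labels_s == label
        if not mask_s.any():
            continue    # assumption: no matching style region, keep content features
        out[:, mask_c] = wct(H_c[:, mask_c], H_s[:, mask_s])
    return out
```

Matching per label keeps, for example, sky statistics from leaking into buildings, which a single global projection cannot guarantee.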

Second, visual comparisons with several competing algorithms.

Comparison of different photorealistic stylization methods.

Visual comparisons. In Figure 6, we provide a visual comparison between the proposed algorithm and two photorealistic stylization methods [24, 21].

The method of Pitié et al. [24] performs a global image transfer through matching the color statistics between the content and style photos, while the method of Luan et al. [21] improves upon the neural style transfer algorithm [6] with a regularization term.

From Figure 6, we find that the proposed algorithm outperforms the competing algorithms in generating photorealistic image stylization results.

The method of Pitié et al. [24] tends to simply change the color of the content photo and fails to transfer the style. The method of Luan et al. [21] achieves good stylization results, but it renders output images with inconsistent stylizations and noticeable artifacts (e.g., the purple color on the house in the second row).

Comparison with artistic stylization methods.

In Figure 7, we compare the proposed algorithm to two artistic style transfer methods [6, 9]: the neural style transfer algorithm [6] and its fast variant [9]. Both methods successfully stylize the content images but render noticeable structural artifacts and inconsistent stylizations across the images. In contrast, our method produces more photorealistic results.

Finally, we analyze the run-time and several algorithm design choices.

User studies are conducted on Amazon Mechanical Turk (AMT).

User studies. As photorealistic image stylization is a highly subjective task, we resort to user studies using the Amazon Mechanical Turk (AMT) platform to better evaluate the performance of the proposed algorithm.

We compare the proposed algorithm to three competing algorithms, namely the neural style transfer algorithm of Gatys et al. [6], the method of Huang and Belongie [9], and the state-of-the-art photorealistic style transfer algorithm of Luan et al. [21].

We use a set of 32 content–style pairs provided by Luan et al. [21] as a benchmark dataset. In each question, we show the AMT workers the content–style pair and the stylized results of each algorithm in a random order and ask the worker to select an image based on the instructions. A worker must have a lifetime HIT (Human Intelligence Task) approval rate greater than 98% to be qualified to answer the question.

For a statistically significant test, each image pair is sent to 10 different workers. This amounts to 320 questions for each study. We then calculate the average number of times the images from an algorithm are selected, which is the preference score. We conduct two user studies.
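A worked illustration of the preference score: 32 content–style pairs × 10 workers = 320 answers per study, and each algorithm's score is the fraction of those answers that selected it. The algorithm labels "A" to "D" and the vote counts are made up for illustration; they are not the paper's data, and the function name is hypothetical.

```python
from collections import Counter

def preference_scores(choices):
    """Preference score of each algorithm: the fraction of answers that selected it.

    `choices` holds one entry per answered question, naming the selected algorithm.
    """
    counts = Counter(choices)
    total = len(choices)
    return {name: count / total for name, count in counts.items()}

# Hypothetical tally for one study: 32 pairs x 10 workers = 320 answers.
votes = ["A"] * 120 + ["B"] * 100 + ["C"] * 60 + ["D"] * 40
scores = preference_scores(votes)
```

With these made-up counts, algorithm "A" would score 120 / 320 = 0.375.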

In one study, we ask the AMT workers to select which stylized photo better carries the style of the style photo.

In the other study, we ask the workers to select which stylized photo looks more like a real photo.

In both studies, detailed job instructions with examples are provided to define the task properly for the worker.

Through the two studies, we want to answer which algorithm better stylizes a content image and which algorithm renders better photorealistic output images, respectively.

The user study results are shown as pie charts in Figure 8. We find the proposed algorithm is preferred for its stylization effect 50% of the times, which is twice as often as the second-best method of Luan et al. [21].

For the user study on photorealism, our method is preferred 62% of the time, which significantly outperforms the competing algorithms.

Speed.

Speed. We compare our method to the state-of-the-art method [21] in terms of run-time. The method of Luan et al. [21] stylizes a photo by solving two optimization problems in sequence.

The first one is to obtain an initial stylized result by solving the optimization problem in the neural style transfer algorithm [6].

The second one is to refine the initial result by adding another regularization term to the optimization objective function of the neural style transfer algorithm.

We report the run-time of solving both optimization problems and the run-time of solving the second optimization problem alone.

We resize the content images in the benchmark dataset to different sizes and report the average run-time for each image size. The results are shown in Figure 9.

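Why is it so much faster? The speed-up comes from the closed-form formulation: PhotoWCT needs only a feed-forward pass through the auto-encoder plus the feature projections, and the smoothing step is a single sparse linear solve, whereas Luan et al. solve two iterative optimization problems in sequence.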

WCT versus PhotoWCT. We compare the proposed algorithm with a variant where the PhotoWCT step is replaced by the WCT [17].

Again, we conduct two user studies for the comparison where one compares stylization effects while the other compares photorealism as described earlier. The result shows that the proposed algorithm is favored over its variant for better stylization 83% of the times and favored for better photorealism 88% of the times.

Sensitivity analysis on λ.

Sensitivity analysis on λ. In the photorealistic smoothing step, the λ balances between the smoothness term and fitting term in (4).

A smaller λ renders smoother results, while a larger λ renders results that are more faithful to the queries (the PhotoWCT result).

Figure 10 shows results of using different λ values. In general, decreasing λ helps remove artifacts and hence improves photorealism.

However, if λ is too small, the output image tends to be over-smoothed. In order to find the optimal λ, we perform a grid search.

We use the similarity between the boundary maps extracted from stylized and original content photos as the criteria since object boundaries should remain the same despite the stylization [2].

We employ the HED method [37] for boundary detection and use two standard boundary detection metrics: ODS and OIS. A higher ODS or OIS score means a stylized photo better preserves the content in the original photo.

The average scores over the benchmark dataset are shown in Figure 11. Based on the results, we use $\lambda = 10^{-4}$ in all the experiments.
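A quick worked check (my own arithmetic, not from the paper) of what $\lambda = 10^{-4}$ means for the closed-form smoothing $R^{*} = (1-\alpha)(I - \alpha S)^{-1} Y$ with $\alpha = \frac{1}{1+\lambda}$. Since the eigenvalues of $S = D^{-1/2} W D^{-1/2}$ lie in $[-1, 1]$ and $\alpha < 1$, the inverse can be expanded as a Neumann series:

$$\alpha = \frac{1}{1 + 10^{-4}} \approx 0.9999, \qquad 1 - \alpha = \frac{10^{-4}}{1 + 10^{-4}} \approx 10^{-4},$$

$$R^{*} = (1-\alpha)\sum_{k=0}^{\infty} \alpha^{k} S^{k} Y, \qquad \sum_{k=0}^{\infty} (1-\alpha)\alpha^{k} = 1.$$

So $R^{*}$ is a weighted average of colors propagated over $k$ graph steps, with geometric weights whose mean number of steps is $\frac{\alpha}{1-\alpha} = \frac{1}{\lambda} = 10^{4}$. This is why a small $\lambda$ gives strong smoothing within connected regions while the weights still sum to one.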

Alternative smoothing techniques.

Alternative smoothing techniques. In Figure 12, we compare our photorealistic smoothing step with two alternative approaches.

In the first approach, we use the PhotoWCT-stylized photo as the initial solution for solving the second optimization problem in the method of Luan et al. [21]. The result is shown in Figure 12(e).

We find that this approach leads to noticeable artifacts as the color on the road is distorted.

In the second approach, we use the method of Mechrez et al. [22], which refines stylized results by matching the gradients in the stylized photo to those in the content photo. As shown in Figure 12(f), we find this approach performs well for removing structural distortions on boundaries but does not remove visual artifacts.

In contrast, as shown in Figure 12(d), our method generates more photorealistic results with an efficient closed-form solution.

5. Conclusion

We propose a novel fast photorealistic image style transfer algorithm.

It consists of a stylization step and a photorealistic smoothing step.

Both steps have a closed-form solution.

Experimental results show that our algorithm generates results that are twice more favored and runs 60 times faster than the state-of-the-art method.